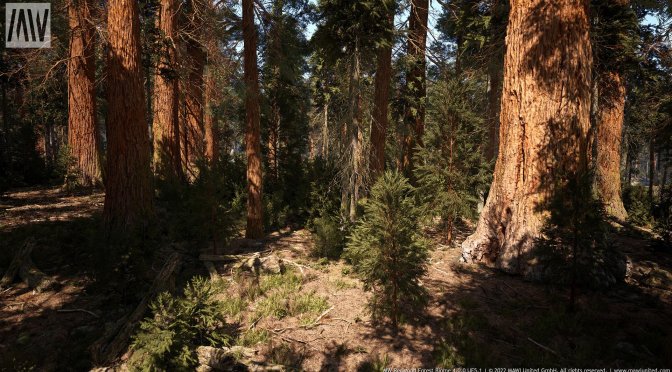

MAWI United GmbH has shared a new tech demo for Unreal Engine 5.1 that uses full Nanite for its foliage and trees, along with Lumen and Virtual Shadow Maps.

This tech demo showcases many of the new features that Unreal Engine 5.1 brings to the table. It also gives a glimpse of what the forest areas in some upcoming games may look like.

According to MAWI United GmbH, the minimum required GPU for running this tech demo is the NVIDIA GeForce RTX 2080, while the team recommends an NVIDIA GeForce RTX 3080.

You can download the Full Nanite Redwood Forest Demo from here, here or here.

Lastly, and speaking of Unreal Engine 5, we suggest taking a look at these other fan projects. Right now, you can download a Superman UE5 Demo, a Halo 3: ODST Remake, and a Spider-Man UE5 Demo. These videos also show Resident Evil, Star Wars: KOTOR and Counter-Strike: Global Offensive in UE5. Additionally, you can find a Portal Remake and an NFS3 Remake. And finally, here are a Half-Life 2 Fan Remake, a World of Warcraft Remake, a GTA San Andreas Remake, a Doom 3 Remake, The Elder Scrolls V: Skyrim, a God of War Remake and a GTA IV Remake.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over, mainly thanks to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on "The Evolution of PC graphics cards."
