In 2018, NVIDIA released the high-end model of its Turing GPU family, the NVIDIA GeForce RTX2080Ti. Back then, NVIDIA advertised it as a 4K/60fps graphics card. So we’ve decided to collect in a single article all of the games we’ve benchmarked over the past two years, and see whether this GPU actually achieved that goal.

For these benchmarks, we used an Intel i9 9900K with 16GB of DDR4 at 3600MHz, running the latest version of Windows 10.

Do note that in this article we’ll be focusing on pure rasterization performance. We’ve also excluded all DLSS results, as the earlier versions of this tech weren’t that good. With DLSS 2.0, NVIDIA has managed to achieve something extraordinary. However, only five games currently support DLSS 2.0: CONTROL, Mechwarrior 5, Wolfenstein Youngblood, Death Stranding and Deliver Us The Moon.

We also won’t focus on this GPU’s Ray Tracing support and performance. As with DLSS, only a few games support real-time ray tracing, and only two of them offer a full ray/path tracing renderer: Minecraft RTX and Quake 2 RTX. Both look absolutely stunning and will undoubtedly give you a glimpse of the future of PC gaming graphics. However, the overall adoption of real-time ray tracing has been low over the past two years.

It’s also worth explaining what 4K/60fps means to us and what it means to hardware companies. For us, only a GPU that maintains a minimum framerate of 60fps in 4K can truly be described as a 4K/60fps graphics card. Most companies, however, advertise their GPUs as “4K/60fps” when the average framerate hits 60fps or above, regardless of whether the framerate drops below 60fps in some scenes. Keep that distinction in mind when viewing the following results.
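To make the difference between the two definitions concrete, here is a minimal Python sketch, assuming each benchmark run is summarized by just an average and a minimum framerate. The `BenchmarkResult` type, the function names and the example numbers are hypothetical and only illustrate the definitions; they are not our benchmarking tool and not measured data.

```python
from dataclasses import dataclass

TARGET_FPS = 60.0  # the "60" in 4K/60fps


@dataclass
class BenchmarkResult:
    game: str
    avg_fps: float  # average framerate over the benchmark run
    min_fps: float  # lowest framerate observed during the run


def marketing_4k60(result: BenchmarkResult, target: float = TARGET_FPS) -> bool:
    # Average-based definition: dips below 60fps in some scenes are ignored.
    return result.avg_fps >= target


def strict_4k60(result: BenchmarkResult, target: float = TARGET_FPS) -> bool:
    # Our minimum-based definition: the framerate never drops below 60fps.
    return result.min_fps >= target


def classify(result: BenchmarkResult, target: float = TARGET_FPS) -> str:
    """Sort a result into one of the three buckets used in this article."""
    if not marketing_4k60(result, target):
        return "below 60fps average"
    if not strict_4k60(result, target):
        return "60fps average, drops below 60fps"
    return "constant 60fps"


# Hypothetical example: a game that averages 72fps but dips to 55fps
# counts as 4K/60fps for marketing purposes, but not by our standard.
example = BenchmarkResult("Hypothetical Game A", avg_fps=72.0, min_fps=55.0)
print(marketing_4k60(example), strict_4k60(example), classify(example))
```

Using the minimum rather than the average is what makes our definition stricter: a single demanding scene is enough to move a game out of the “constant 60fps” bucket.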

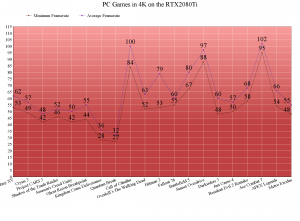

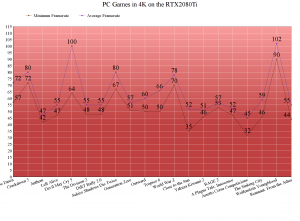

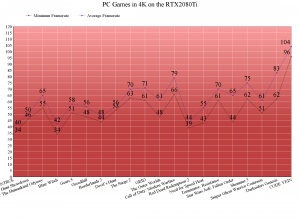

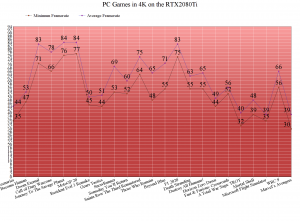

With that out of the way, let’s see how the NVIDIA GeForce RTX2080Ti performed in 83 PC games at 4K/Ultra settings. Our benchmarks include plenty of triple-A games, from Battlefield 5 and Doom Eternal to Red Dead Redemption 2 and Horizon Zero Dawn.

As we can see, the NVIDIA GeForce RTX2080Ti was unable to average 60fps in 40 games. In another 21 games, the average framerate was above 60fps but the minimum framerate dropped below 60fps. Thus, the NVIDIA GeForce RTX2080Ti offered a constant 60fps experience at 4K/Ultra in only 22 games.

These results also explain why we’ve been describing the RTX2080Ti as a “1440p/Ultra settings” GPU. Despite what some PC gamers thought when this GPU came out, the RTX2080Ti was only ideal for 1440p. While it averages 60fps or more in 43 games at 4K, about half of those (21 games) still drop below 60fps. In total, 73% of our benchmarked games (61 out of 83) dropped below 60fps on the RTX2080Ti at 4K. On the other hand, the RTX2080Ti offers a smooth and constant 60fps experience in all of these games at 1440p/Ultra settings.
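The game counts above fit together, and the short Python snippet below simply redoes that arithmetic from the article’s own numbers (the variable names are ours) to show where the 83, 43 and 73% figures come from.

```python
# Game counts at 4K/Ultra on the RTX2080Ti, as reported in this article.
below_60_avg = 40      # average framerate under 60fps
avg_ok_min_below = 21  # average at or above 60fps, but minimum under 60fps
constant_60 = 22       # minimum framerate at or above 60fps

total = below_60_avg + avg_ok_min_below + constant_60         # 83 games
hits_60_avg = avg_ok_min_below + constant_60                  # 43 games
dropping_below_60 = below_60_avg + avg_ok_min_below           # 61 games

print(f"Games benchmarked: {total}")
print(f"Games averaging 60fps or more: {hits_60_avg}")
print(f"...of which dip below 60fps: {avg_ok_min_below / hits_60_avg:.0%}")      # 49%
print(f"Games dropping below 60fps: {dropping_below_60 / total:.0%} of total")  # 73%
```

In other words, 21 of the 43 games that hit a 60fps average (roughly half) still dip below 60fps, and 61 of 83 games (about 73%) fail our stricter, minimum-based test.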

With next-gen games on the way, the RTX2080Ti may struggle to run some of them even at 1440p/Ultra, so it will be interesting to see how it handles them. It will also be interesting to see whether more games adopt DLSS 2.0.

Before closing, we’d like to let you know that we’ll get an NVIDIA GeForce RTX3080 when it comes out. As always, we’ll purchase this GPU ourselves, just like any customer. Since availability may be limited, we may not get one on launch day. Still, we plan to get both the RTX3080 and Big Navi. Naturally, we’ll benchmark the RTX3080 with our most demanding games, and it will be interesting to see whether games like Quantum Break can finally run at 60fps in 4K/Ultra. It will also be interesting to see how long this GPU will be able to run games at 60fps on 4K/Ultra settings!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved (and still loves) the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles, mainly thanks to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
