2K Games has just released Tiny Tina’s Wonderlands on PC. The game is powered by Unreal Engine 4, so it’s time to benchmark it and see how it performs on the PC platform.

For this PC Performance Analysis, we used an Intel i9 9900K with 16GB of DDR4 at 3800MHz, AMD’s Radeon RX580, RX Vega 64, RX 6900XT, NVIDIA’s GTX980Ti, RTX 2080Ti and RTX 3080. We also used Windows 10 64-bit, the GeForce 512.15 and the Radeon Software Adrenalin 2020 Edition 22.3.2 drivers.

Gearbox has added a respectable amount of graphics settings to tweak. PC gamers can adjust the quality of Anti-aliasing, Texture Streaming, Materials, Shadows, Draw Distance, Clutter, Terrain Detail, Foliage, Volumetric Fog, Screen Space Reflections, Characters and Ambient Occlusion. The game also has a Field of View slider, a Resolution Scaler, and a Frame Rate Limiter.

Tiny Tina’s Wonderlands features a built-in benchmark tool, which we used for both our GPU and CPU benchmarks.

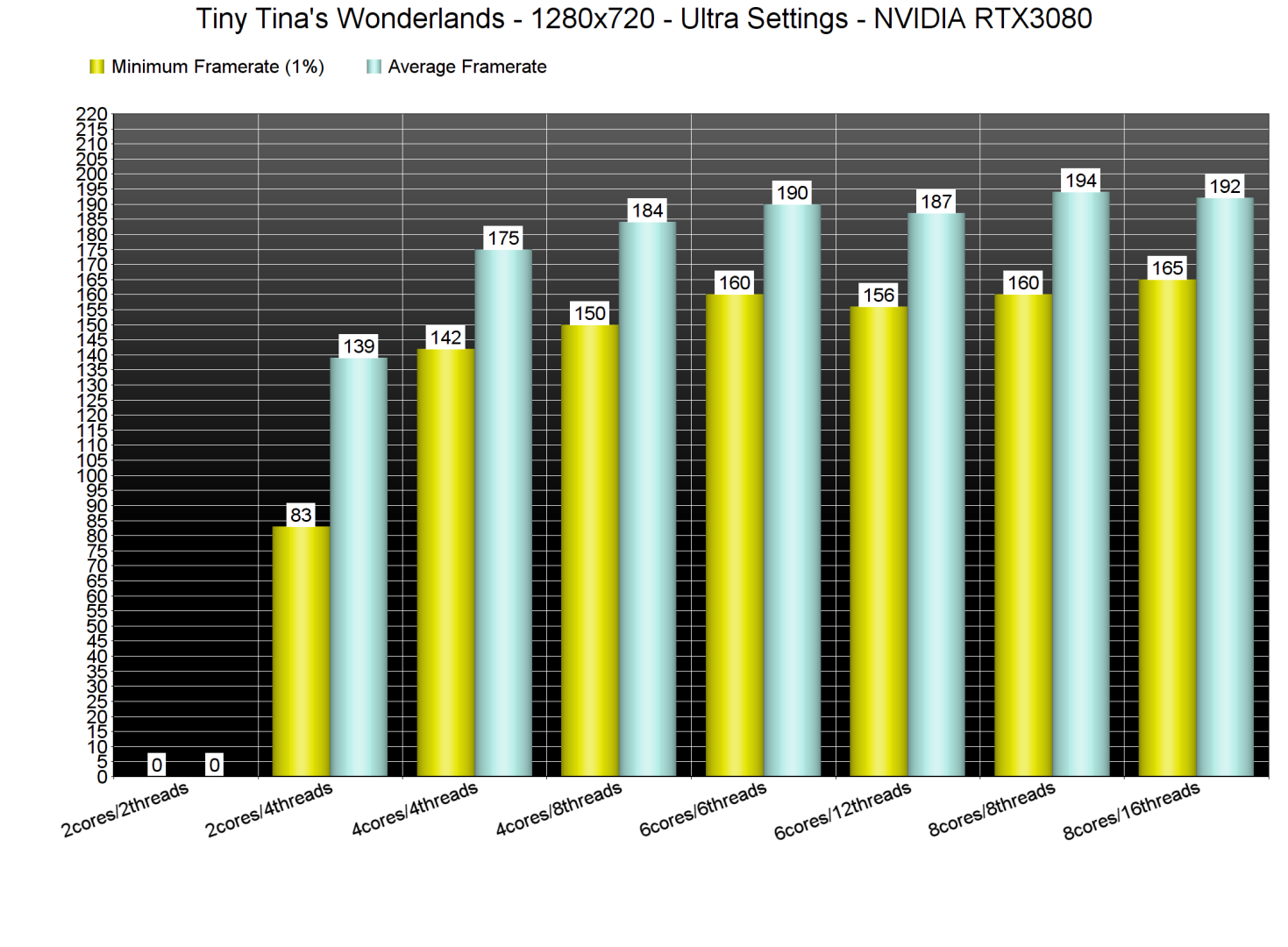

In order to find out how the game scales on multiple CPU threads, we simulated a dual-core, a quad-core and a hexa-core CPU. Without Hyper-Threading, our simulated dual-core system was unable to run the game. With Hyper-Threading, that particular system was able to push more than 60fps at all times at 720p/Ultra settings. There were some rare stutters here and there, though they were nowhere close to what we’ve experienced in other recent games. Furthermore, the game compiles its shaders at launch, which eliminates the shader cache stutters that have plagued a lot of recent triple-A PC games.

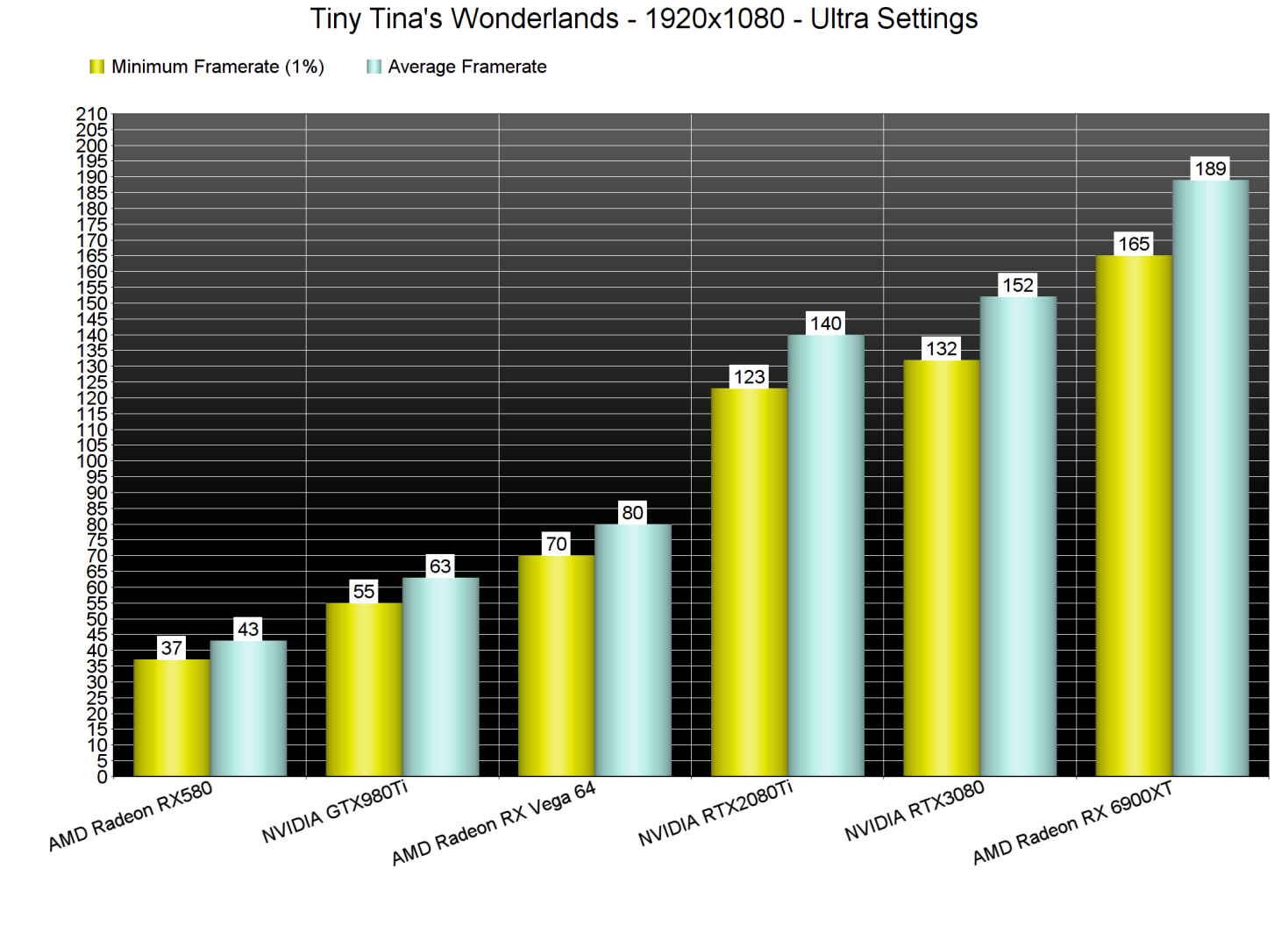

Tiny Tina’s Wonderlands does not require a high-end GPU for gaming at 1080p/Ultra. As we can see, even the AMD Radeon RX Vega 64 was able to provide a constant 60fps experience. At this point, we should note that the game performs significantly better on AMD’s hardware.

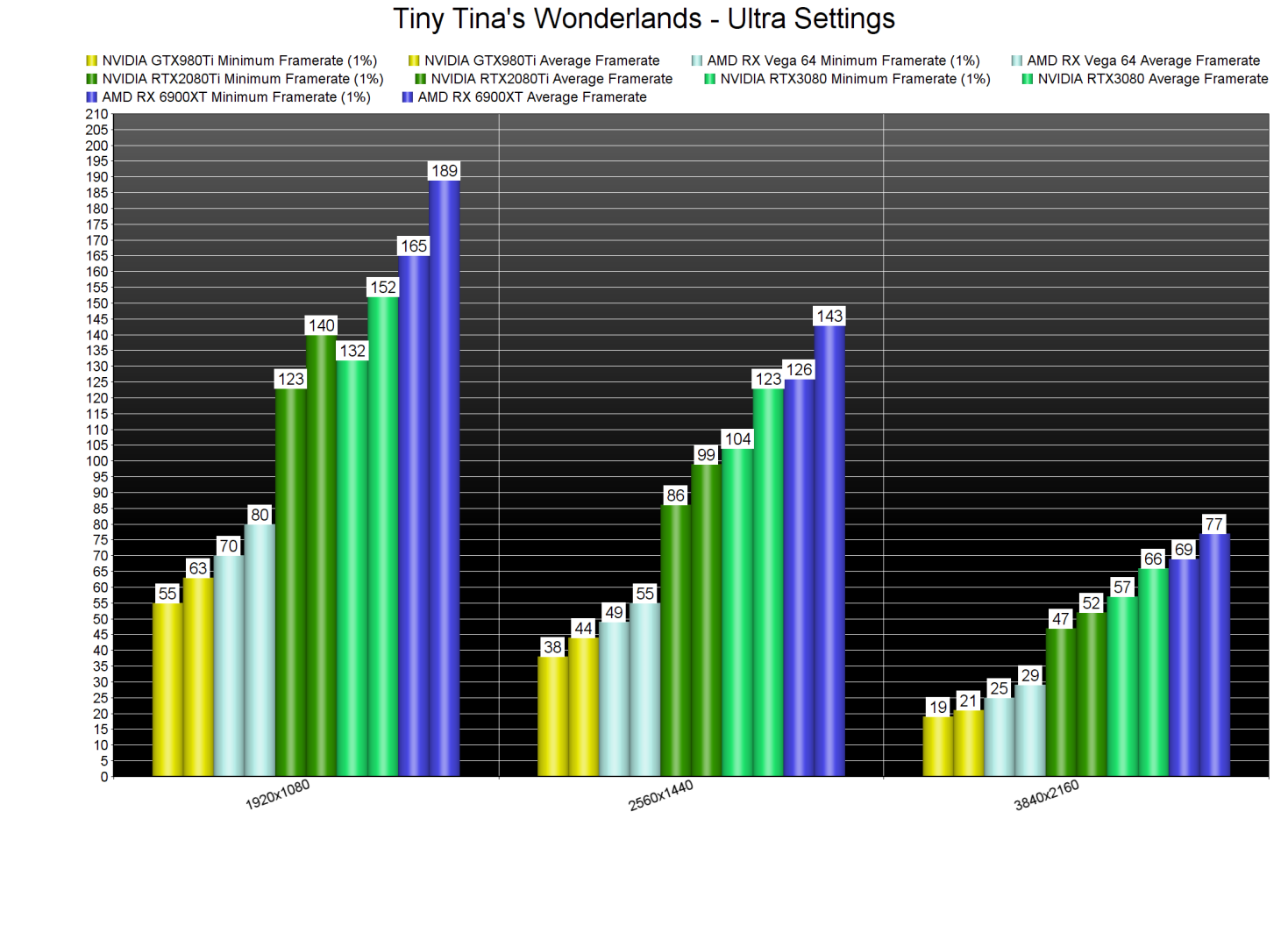

At 1440p/Ultra, our top three GPUs were able to run the game smoothly. And as for 4K/Ultra, the only GPU that was able to provide a constant 60fps experience was the RX 6900XT.

Graphics-wise, Tiny Tina’s Wonderlands looks similar to Borderlands 3. So, if you enjoyed that title, you’ll love this game’s art style. Tiny Tina’s Wonderlands also packs some destructible objects. However, don’t expect any kind of next-gen-ish destructibility or interactivity. Tiny Tina’s Wonderlands looks and feels like a cross-generation title.

All in all, Tiny Tina’s Wonderlands can run smoothly on a wide range of PC configurations. The game does not require a high-end CPU, though it does require high-end GPUs for resolutions higher than 1080p. The game also does not suffer from any shader cache stutters, though we did notice some rare traversal stutters. And, surprisingly enough, it runs noticeably better than the launch version of Borderlands 3.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
