Square Enix has just released the highly anticipated third part of the Tomb Raider reboot, Shadow of the Tomb Raider. Once again, Nixxes has handled the PC version so it’s time now to benchmark it and see how it performs on the PC platform.

For this PC Performance Analysis, we used an Intel i7 4930K (overclocked to 4.2GHz) with 8GB RAM, AMD’s Radeon RX580 and RX Vega 64, NVIDIA’s GTX980Ti and GTX690, Windows 10 64-bit and the latest versions of the GeForce and Catalyst drivers. Shadow of the Tomb Raider supports multi-GPU setups from the get-go, which is why we’ve decided to also test our GTX690 (otherwise we wouldn’t have).

Nixxes and EIDOS Montreal have implemented a nice amount of graphics settings to tweak. PC gamers can adjust the quality of Textures, Texture Filtering, Shadows, Ambient Occlusion, Depth of Field, Level of Detail, Screen Space Contact Shadows and Pure Hair. There are also options to enable or disable Tessellation, Bloom, Motion Blur, Screen Space Reflections, Lens Flares and Screen Effects. The game also supports both DirectX 11 and DirectX 12, supports high refresh rates, features an unlocked framerate and comes with four anti-aliasing solutions.

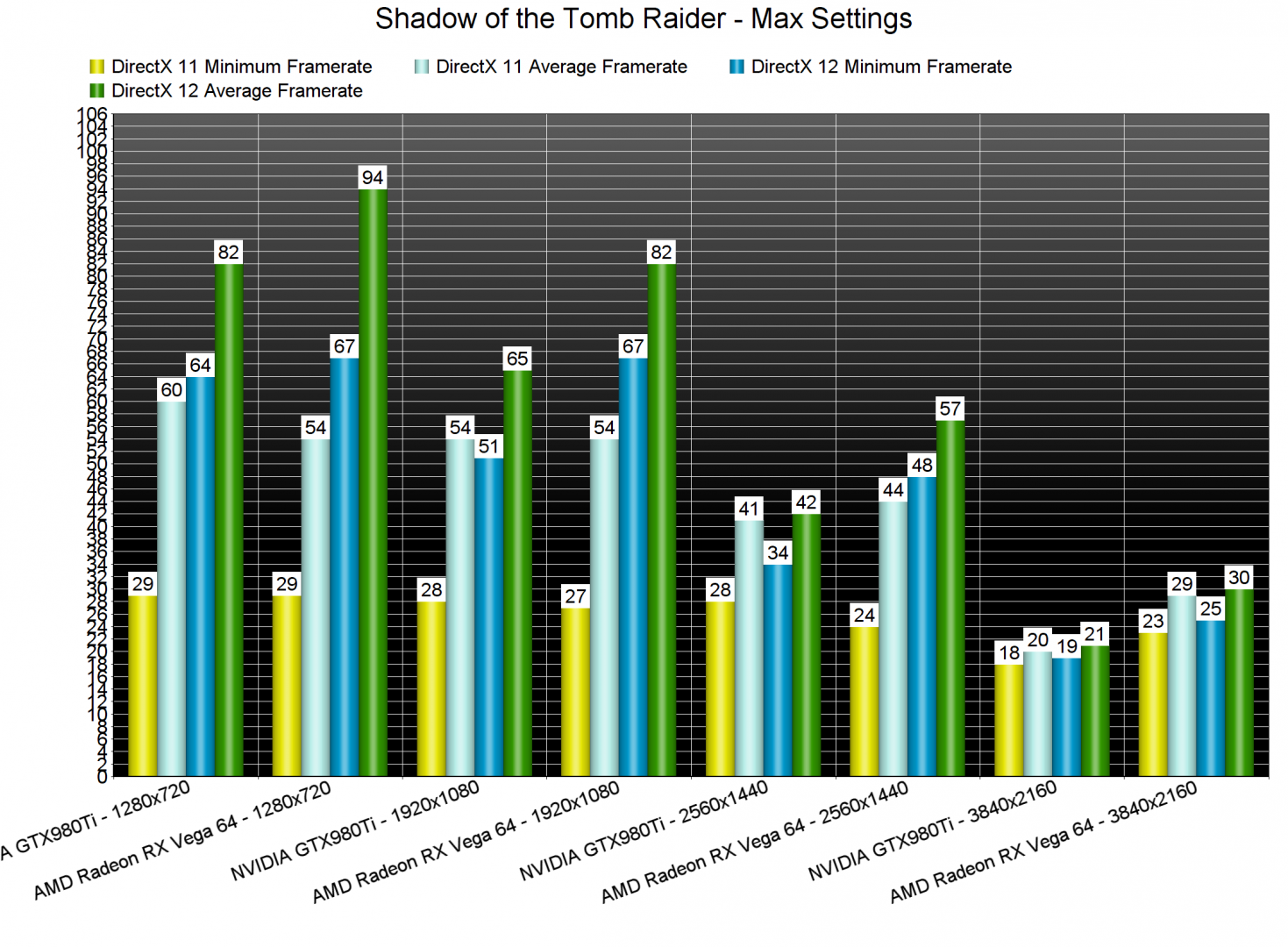

Since the game supports both DX11 and DX12, we’ve decided to benchmark these APIs first. And it appears that DX12 is the API that all PC gamers should be using. DirectX 12 performs incredibly well and benefits from a lot of threads, and we saw minimum framerates that were almost 100% higher on both our AMD Radeon RX Vega 64 and NVIDIA GeForce GTX980Ti. Do note, however, that DX11 seems to be completely unoptimized in this title. We are saying this because other open-world games with way more NPCs on screen, like Watch_Dogs 2, Assassin’s Creed Unity, Assassin’s Creed Origins and Just Cause 3, perform significantly better than Shadow of the Tomb Raider does in DX11 and scale way better on quad-cores and six-cores.

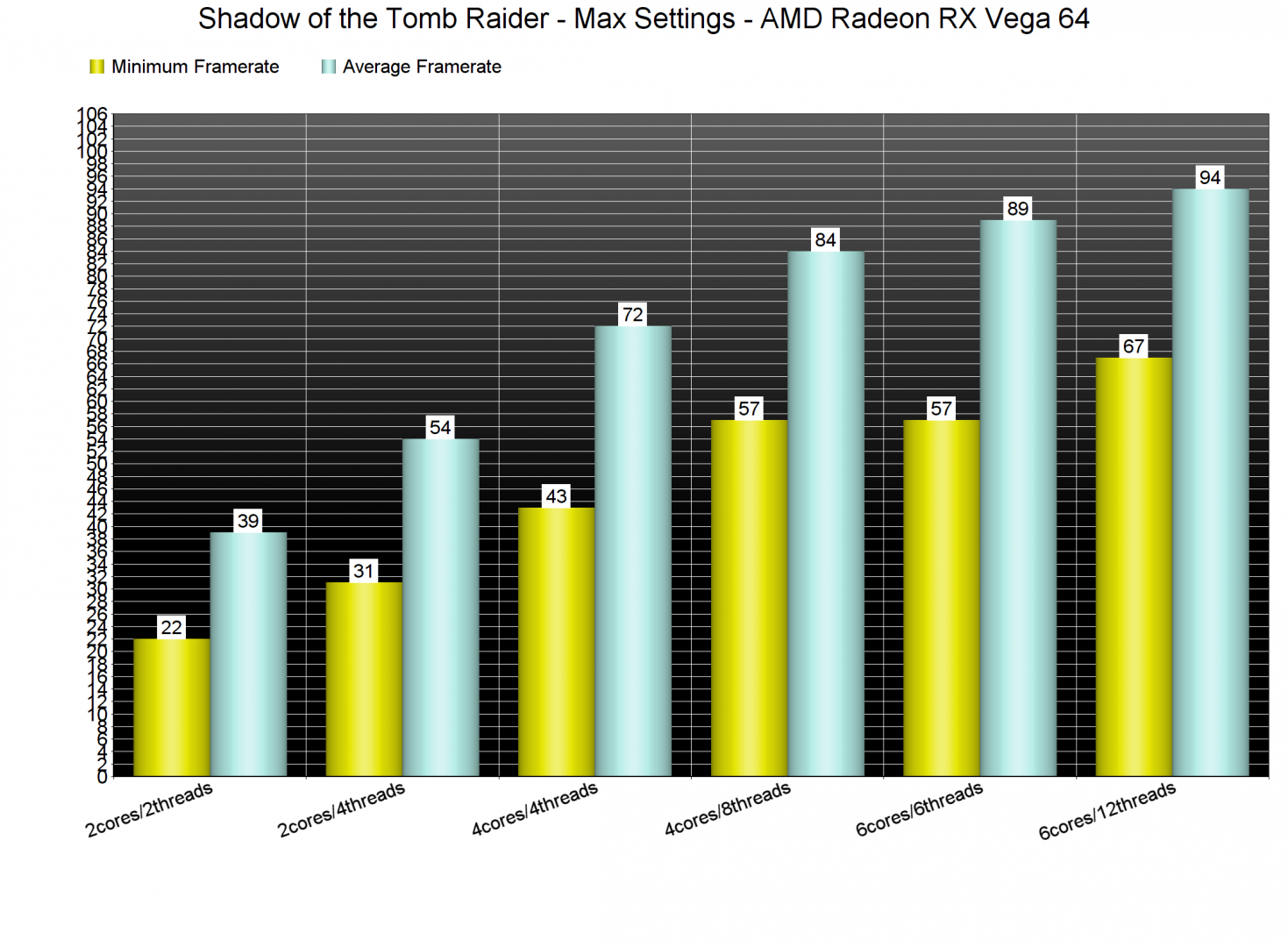

Since DX12 is way faster than DX11, we’ve decided to use it in all of our other benchmarks. In order to find out how the game performs on a variety of CPUs, we simulated a dual-core and a quad-core CPU. Without Hyper-Threading, our simulated dual-core system was able to run the built-in benchmark with a minimum of 22fps and an average of 39fps on Max settings at 720p. With Hyper-Threading enabled, it was able to push a minimum of 31fps and an average of 54fps. Not too bad actually for such a CPU. What really impressed us, however, was the fact that Hyper-Threading makes a big difference in minimum framerates on both our six-core and simulated quad-core systems. Shadow of the Tomb Raider is one of the few games that sees actual performance improvements on CPUs that can handle more than six threads, so we strongly suggest enabling Hyper-Threading if your CPU supports it.

As you can clearly see, although Shadow of the Tomb Raider requires a high-end CPU in order to be enjoyed at a constant 60fps, it can run smoothly even on somewhat older quad-cores (that support Hyper-Threading). Unfortunately, there aren’t any graphics settings that can improve CPU performance, meaning that there is nothing CPU-limited gamers can do in order to improve overall performance.
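To put the simulated dual-core numbers into perspective, here is a minimal Python sketch that works out the relative gain from Hyper-Threading. The framerates are the ones quoted above; the `percent_gain` helper and the `results` layout are our own illustrative names and not part of any benchmarking tool.

```python
def percent_gain(before: float, after: float) -> float:
    """Relative improvement of `after` over `before`, in percent."""
    return (after - before) / before * 100.0


# Simulated dual-core results quoted above (built-in benchmark, Max settings, 720p, DX12).
results = {
    "minimum": {"ht_off": 22, "ht_on": 31},
    "average": {"ht_off": 39, "ht_on": 54},
}

# Compute the gain for each metric (hypothetical dictionary layout, for illustration only).
gains = {
    metric: percent_gain(fps["ht_off"], fps["ht_on"])
    for metric, fps in results.items()
}

for metric, gain in gains.items():
    print(f"{metric} fps: +{gain:.1f}% with Hyper-Threading")
```

In other words, both the minimum and the average framerate on the simulated dual-core improve by roughly 40% once Hyper-Threading is enabled.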

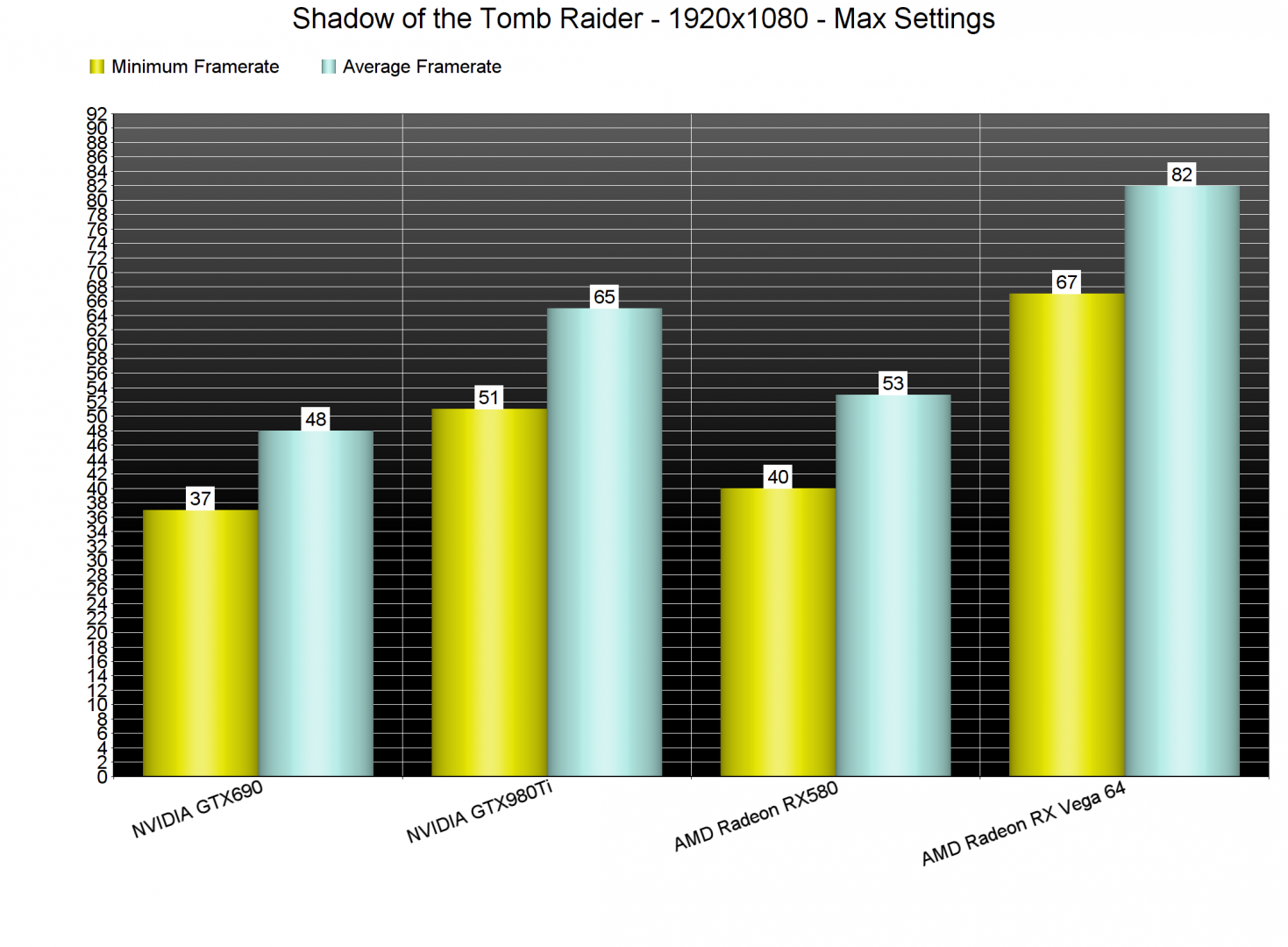

Shadow of the Tomb Raider also requires a high-end GPU in order to be enjoyed. For our GPU tests we used SMAA for our anti-aliasing option and set the game to Max settings. Note that the Highest preset does not max out the game, as there are still some settings that can be pushed further: Shadows, Anisotropic Filtering, Level of Detail and Screen Space Contact Shadows.

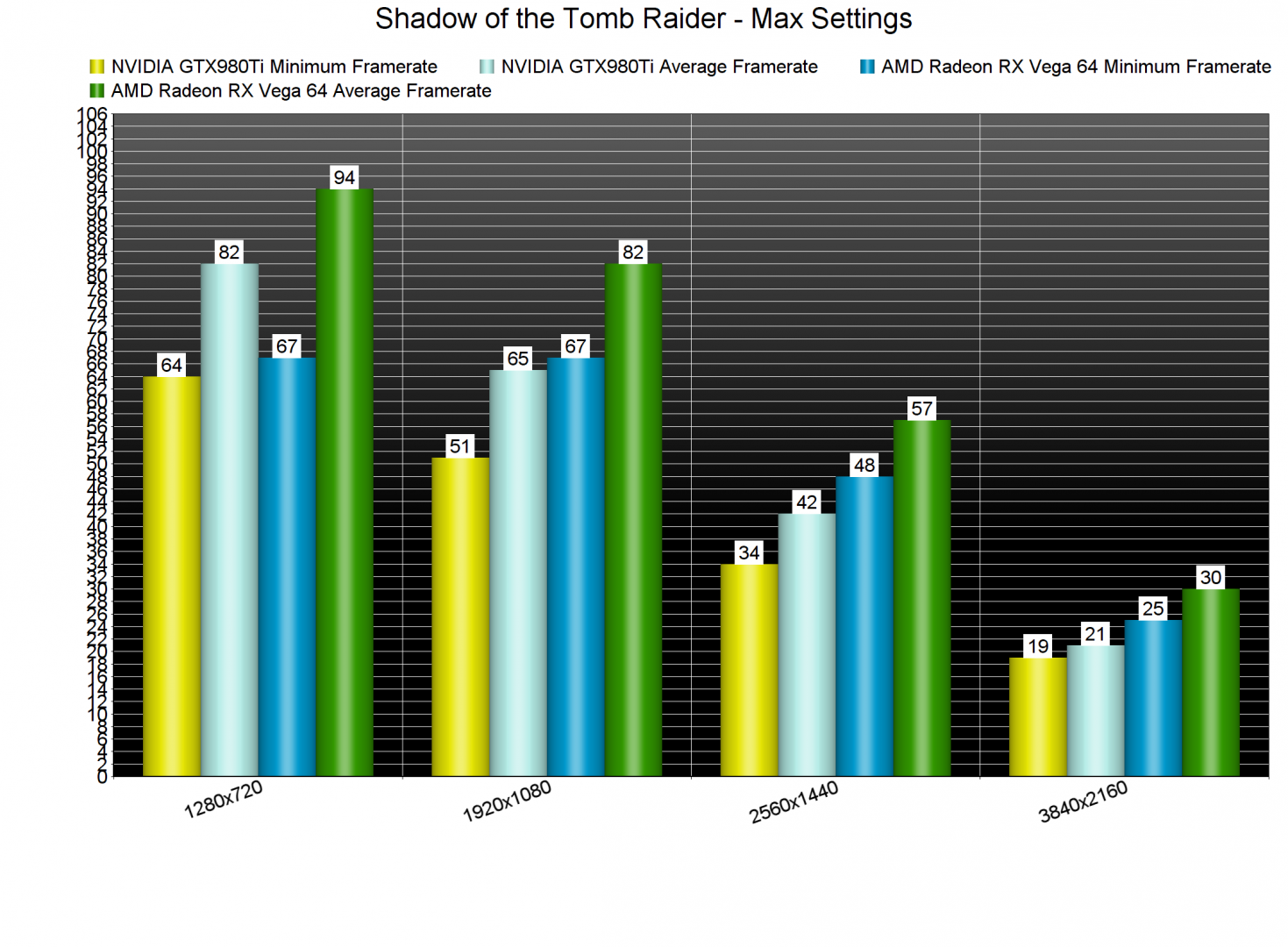

Shadow of the Tomb Raider is one of the very few games in which our NVIDIA GeForce GTX980Ti was unable to offer a constant 60fps experience at 1080p as we got a minimum of 51fps and an average of 65fps. On the other hand, AMD’s Radeon RX Vega 64 had no trouble at all running the game with more than 60fps at all times. As said, SLI is working fine in Shadow of the Tomb Raider and as such, our GTX690 was able to run the game with a minimum of 37fps and an average of 48fps (though we had to lower our Textures to Low in order to avoid any VRAM limitation).
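For those who prefer to think in frame times, the following Python sketch converts the GTX980Ti figures above into milliseconds per frame and compares them against a 60fps budget. The framerates come from our 1080p test; the helper name and the idea of a simple "budget" check are our own assumptions for illustration.

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on a single frame, in milliseconds, at a given framerate."""
    return 1000.0 / fps


TARGET_FPS = 60

# GTX980Ti at 1080p on Max settings, as quoted above.
gtx980ti = {"minimum": 51, "average": 65}

budget_ms = frame_time_ms(TARGET_FPS)
frame_times = {metric: frame_time_ms(fps) for metric, fps in gtx980ti.items()}

# A "constant 60fps" experience requires even the slowest frames to fit the budget.
holds_60fps = gtx980ti["minimum"] >= TARGET_FPS

print(f"60fps budget: {budget_ms:.2f} ms per frame")
for metric, ms in frame_times.items():
    print(f"{metric}: {ms:.2f} ms per frame")
print("constant 60fps:", holds_60fps)
```

The average frame fits inside the roughly 16.7 ms budget, but the slowest frames take around 19.6 ms, which is why this card cannot hold a constant 60fps here.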

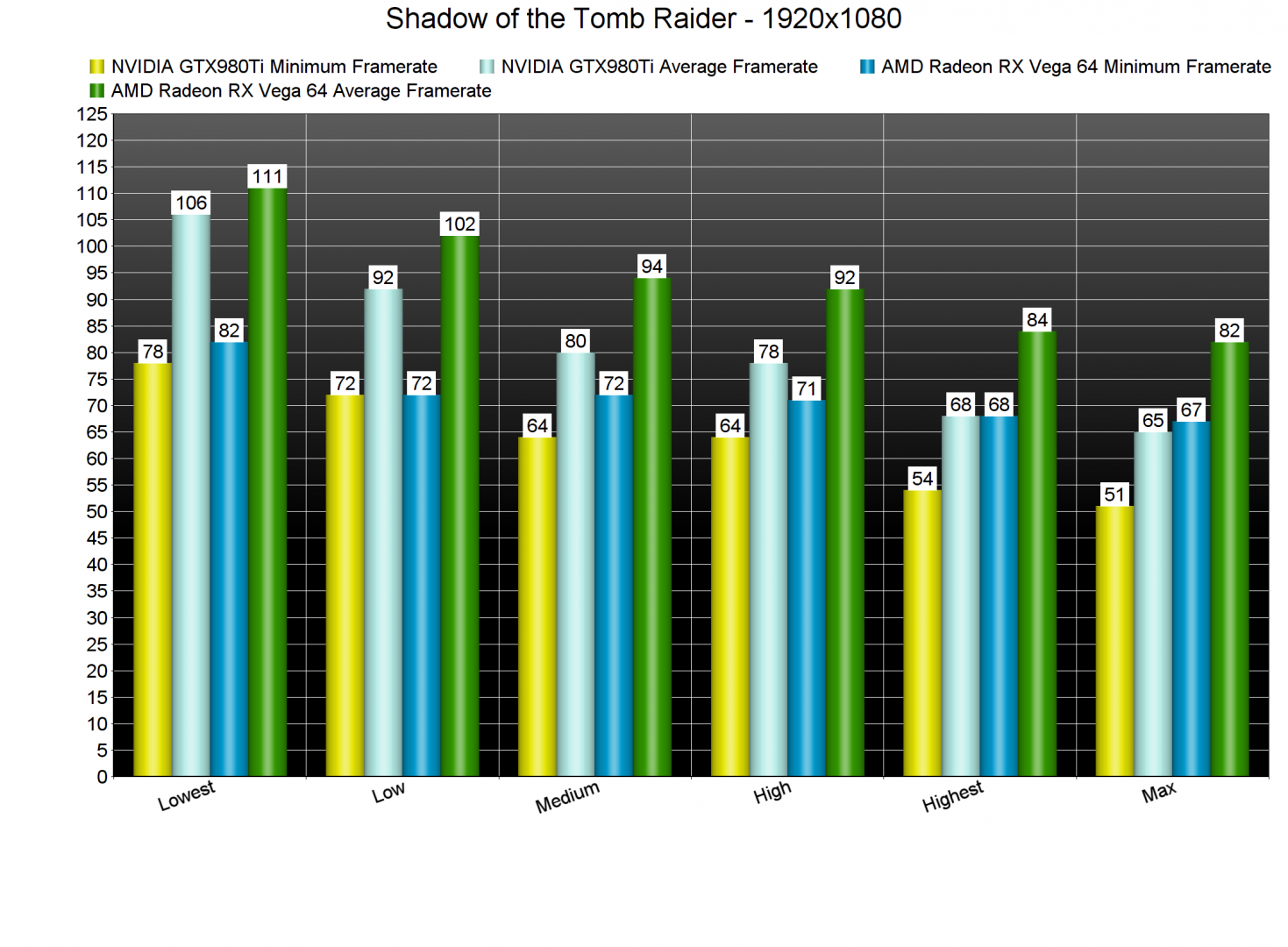

The game comes with five graphics presets: Lowest, Low, Medium, High and Highest. However, and as we’ve already stated, most of these settings have little to no effect on the overall CPU burden. On High settings our GTX980Ti was able to offer a constant 60fps experience, and on the Lowest settings our minimum framerate was 78fps on the GTX980Ti and 82fps on the Vega 64.

Surprisingly enough, our GTX980Ti was maxed out even at 1280×720. Yep, at that really low resolution our GTX980Ti was used, most of the time, at 97%. As we’ve already mentioned, there are options to lower the GPU burden; however, we are a bit surprised by how GPU-bound this game can actually be. Both the GTX980Ti and the Vega 64 were unable to offer a smooth gaming experience at 1440p on Max settings. And as for 4K… well… the AMD Radeon RX Vega 64 is borderline close to a 30fps experience.
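As a rough guide to why the higher resolutions are so demanding, the Python sketch below compares how many pixels the GPU has to shade per frame at each resolution. We assume the standard 16:9 dimensions for 1080p, 1440p and 4K (only 1280×720 is spelled out above), and pixel count is only an approximation of GPU load, not a measured result.

```python
# Standard 16:9 resolutions (assumed; only 1280x720 is stated explicitly in the text).
resolutions = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

# Total pixels rendered per frame at each resolution.
pixels = {name: width * height for name, (width, height) in resolutions.items()}

# Pixel count relative to 720p, as a crude proxy for the extra GPU work.
relative = {name: count / pixels["720p"] for name, count in pixels.items()}

for name in resolutions:
    print(f"{name}: {pixels[name]:,} pixels ({relative[name]:.2f}x 720p)")
```

Given that the GTX980Ti is already almost fully used at 720p, a resolution with four times (1440p) or nine times (4K) as many pixels leaves very little headroom.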

Graphics-wise, Shadow of the Tomb Raider looks amazing. Lara’s character model – and those of most of the main characters – are highly detailed and look awesome. NPCs, on the other hand, are not particularly impressive, though they are better than those featured in Assassin’s Creed Origins. The environments are full of details and the volumetric effects are gorgeous. Lara can also interact with numerous objects and bend grass or bushes.

Now while Shadow of the Tomb Raider is undoubtedly one of the most beautiful PC games to date, it does suffer from minor graphical issues/glitches. The shadow cascade, for example, is really low and you will easily notice the transition between Low and High shadows. There are also a lot of object pop-in issues even on Max settings, and some dynamic shadows are extremely pixelated when viewed from specific angles. Furthermore, almost every anti-aliasing solution blurs the image, and since ReShade does not work with DX12, there is nothing you can really do other than completely disabling AA (yes, even SMAA softens the image).

Overall, Shadow of the Tomb Raider performs great on the PC, though there is still room for improvement. Yes, you will need a high-end system; however, the game justifies those requirements. Also, it runs way, way better – at launch – than its predecessor. Though we don’t expect to see any new settings that will lessen the CPU burden, it would be really cool if Nixxes could further optimize the game (especially when it implements its real-time ray tracing effects). Shadow of the Tomb Raider also currently suffers from some graphical issues and the DX11 performance is questionable to say the least. The game also comes with lots of settings to tweak and there is an option to completely disable mouse smoothing (it’s enabled by default so make sure to disable it). It’s a well-polished product, though it’s not perfect yet.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
