According to a recent report from Phoronix, ZLUDA is a new independent project, built on Intel's oneAPI Level Zero application programming interface (API), that aims to be a drop-in replacement for CUDA on Intel GPUs.

It can run programs written for NVIDIA's CUDA on Intel's UHD Graphics hardware, with support for Xe planned. As you may already know, CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model created by NVIDIA and supported by its GPUs.

Nvidia's platform lets software developers use a CUDA-enabled GPU for general-purpose processing, an approach known as GPGPU (general-purpose computing on graphics processing units).
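To make the GPGPU idea concrete, here is a minimal, CPU-only Rust sketch of the CUDA programming model (Rust being the language ZLUDA itself is written in). A "kernel" is written from the point of view of a single thread, which derives the element it should process from its block and thread indices. The names `ThreadIdx`, `saxpy_kernel` and `launch_saxpy` are hypothetical, and the nested loops only simulate what a GPU would execute in parallel; this is an illustration, not real CUDA code.

```rust
/// Stand-ins for CUDA's built-in index variables (one-dimensional case only).
#[derive(Clone, Copy)]
struct ThreadIdx {
    block_idx: usize,  // plays the role of blockIdx.x
    block_dim: usize,  // plays the role of blockDim.x
    thread_idx: usize, // plays the role of threadIdx.x
}

/// The "kernel": each simulated thread computes one element of y = a * x + y.
fn saxpy_kernel(t: ThreadIdx, n: usize, a: f32, x: &[f32], y: &mut [f32]) {
    // Global index of this thread across the whole grid.
    let i = t.block_idx * t.block_dim + t.thread_idx;
    // Guard: the last block may contain threads that fall past the end of the data.
    if i < n {
        y[i] = a * x[i] + y[i];
    }
}

/// Simulated launch: visit every thread of every block sequentially on the CPU.
/// A real GPU would run these threads concurrently.
fn launch_saxpy(block_dim: usize, a: f32, x: &[f32], y: &mut [f32]) {
    assert!(block_dim > 0, "block size must be positive");
    assert_eq!(x.len(), y.len(), "x and y must have the same length");
    let n = y.len();
    // Enough blocks to cover all n elements (ceiling division).
    let grid_dim = (n + block_dim - 1) / block_dim;
    for block_idx in 0..grid_dim {
        for thread_idx in 0..block_dim {
            saxpy_kernel(ThreadIdx { block_idx, block_dim, thread_idx }, n, a, x, y);
        }
    }
}
```

With five elements and a block size of 2, the sketch launches three blocks (six threads), and the bounds check makes the sixth thread do nothing.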

ZLUDA is separate from Nvidia's proprietary CUDA ecosystem. It is an independent project created by Twitter user Longhorn (@never_released), and it allows unmodified CUDA applications to run on Intel GPUs with "near-native" performance.

It works with current integrated Intel UHD GPUs, and the author plans to support Intel's upcoming Xe GPU architecture as well.

This is an entirely independent project and is not affiliated with Intel or Nvidia in any way. ZLUDA is still in an early stage of development and does not yet fully support CUDA.

Zluda: what I've been waiting for as an implementation, at https://t.co/97fu0OMChJ.

ZLUDA is a drop-in replacement for CUDA on Intel GPU. ZLUDA allows to run unmodified CUDA applications using Intel GPUs with near-native performance.

— Longhorn (@never_released) November 24, 2020

Compatibility at the binary level.

— Longhorn (@never_released) November 24, 2020

Many CUDA applications do not yet work properly with ZLUDA, but the author has managed to run Geekbench 5, and it delivers "near-native" performance.

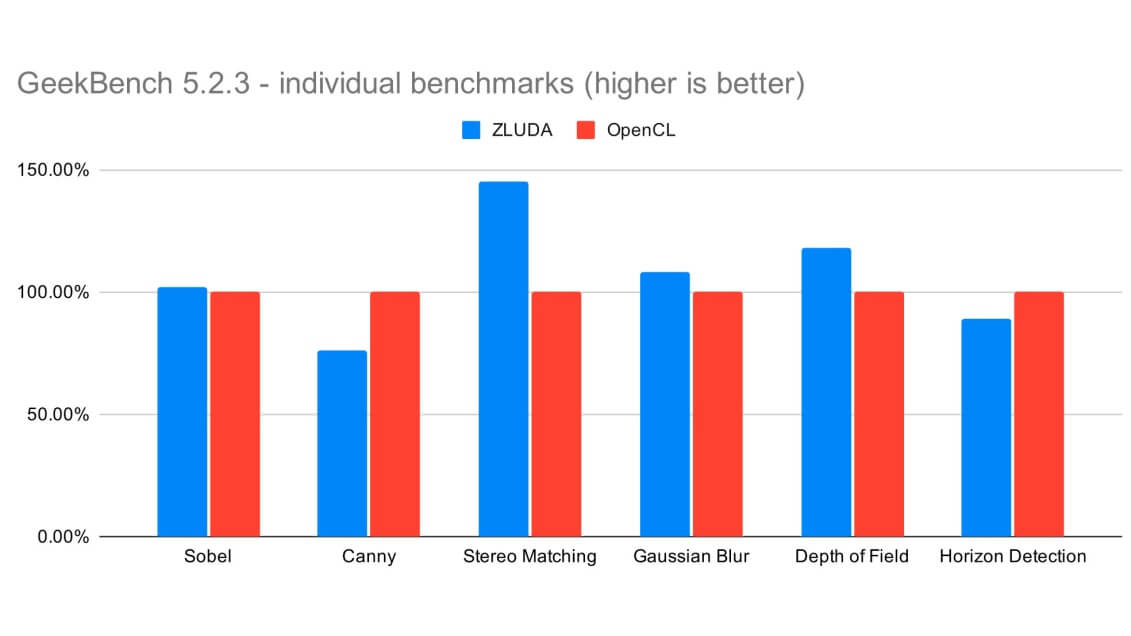

Any performance overhead appears to be minimal. The author has provided Geekbench 5.2.3 results for an Intel Core i7-8700K CPU, using its integrated UHD Graphics 630 GPU. The project is still a proof of concept: about the only application that currently works is Geekbench, and even that does not work completely.

One run used OpenCL, and the other used CUDA, with ZLUDA making the Intel GPU masquerade as a (relatively slow) Nvidia GPU. Both runs used the same iGPU.

In other words, for the ZLUDA run, the author tricked Geekbench into treating the Intel iGPU as a slow Nvidia GPU.

According to the results, ZLUDA was up to 10% faster than OpenCL, with an overall uplift of about 4%. The chart above is normalized to OpenCL performance, so a value of 110% means that CUDA running through ZLUDA is 10% faster than OpenCL on the Intel UHD Graphics 630.
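The normalization works out as simple arithmetic: each ZLUDA score is divided by the matching OpenCL score and expressed as a percentage, so 100% is parity. The Rust sketch below shows that calculation. The function names are hypothetical, and the use of a geometric mean to combine per-test ratios into one overall figure is an assumption (it is a common way to summarize benchmark ratios); the article does not say exactly how the overall 4% was derived, and no real scores are reproduced here.

```rust
/// ZLUDA score relative to the OpenCL baseline, in percent.
/// 100.0 means parity; 110.0 means ZLUDA is 10% faster.
fn normalized_percent(zluda_score: f64, opencl_score: f64) -> f64 {
    assert!(opencl_score > 0.0, "baseline score must be positive");
    zluda_score / opencl_score * 100.0
}

/// Speed-up over the baseline in percent (negative for a slowdown).
fn uplift_percent(normalized: f64) -> f64 {
    normalized - 100.0
}

/// Geometric mean of per-test ratios (e.g. 1.10 for 110%).
/// Assumed aggregation method; averaging logarithms keeps one outlier
/// test from dominating the overall figure.
fn geometric_mean(ratios: &[f64]) -> f64 {
    assert!(!ratios.is_empty(), "need at least one ratio");
    let log_sum: f64 = ratios.iter().map(|r| r.ln()).sum();
    (log_sum / ratios.len() as f64).exp()
}
```

This also explains how "up to 10%" and "about 4% overall" can both be true: a few sub-tests near 110% averaged with others closer to parity yield a smaller overall uplift.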

The author also made it clear that ZLUDA is fundamentally different from AMD's HIP and Intel's DPC++: both of those are porting toolkits that require programmers to port their applications to the API in question.

With ZLUDA, by contrast, existing applications "just work" on an Intel GPU without any extra effort, as long as the subset of CUDA they use is supported.
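What "drop-in replacement" and "binary compatibility" mean can be sketched roughly as follows, assuming the common shim approach: the replacement library exports functions with the same names, C signatures and `CUresult` error codes as NVIDIA's CUDA Driver API (`cuInit`, `cuDeviceGetCount`), so an existing application can call them without being recompiled, while the function bodies forward the work to a different backend. This is a hypothetical Rust sketch, not ZLUDA's actual code; `backend_device_count` is an invented placeholder for the Level Zero side, and only the handful of error codes shown are taken from the CUDA headers.

```rust
use std::os::raw::{c_int, c_uint};
use std::sync::atomic::{AtomicBool, Ordering};

// A few real CUresult values from the CUDA Driver API (CUresult is a C enum).
pub type CUresult = c_int;
pub const CUDA_SUCCESS: CUresult = 0;
pub const CUDA_ERROR_INVALID_VALUE: CUresult = 1;
pub const CUDA_ERROR_NOT_INITIALIZED: CUresult = 3;

// Tracks whether the application has called cuInit yet.
static INITIALIZED: AtomicBool = AtomicBool::new(false);

/// Hypothetical placeholder for a query to Intel's Level Zero driver;
/// a real shim would use Level Zero bindings here instead of a constant.
fn backend_device_count() -> c_int {
    1
}

/// Same name and C signature as CUDA's `cuInit`. A real shim, built as a
/// shared library, would also export it unmangled (`#[no_mangle]`) so the
/// unmodified application binary resolves it instead of NVIDIA's driver.
#[allow(non_snake_case)]
pub extern "C" fn cuInit(flags: c_uint) -> CUresult {
    // The CUDA documentation requires Flags to be 0.
    if flags != 0 {
        return CUDA_ERROR_INVALID_VALUE;
    }
    INITIALIZED.store(true, Ordering::SeqCst);
    CUDA_SUCCESS
}

/// Same name and C signature as CUDA's `cuDeviceGetCount`; the answer
/// comes from the (placeholder) backend rather than an NVIDIA GPU.
///
/// # Safety
/// `count` must be null or point to writable memory for one `c_int`.
#[allow(non_snake_case)]
pub unsafe extern "C" fn cuDeviceGetCount(count: *mut c_int) -> CUresult {
    if !INITIALIZED.load(Ordering::SeqCst) {
        return CUDA_ERROR_NOT_INITIALIZED;
    }
    if count.is_null() {
        return CUDA_ERROR_INVALID_VALUE;
    }
    // SAFETY: non-null checked above; validity is the caller's contract.
    unsafe { *count = backend_device_count() };
    CUDA_SUCCESS
}
```

The application never sees the translation layer: it calls the same functions and receives the same result codes it would get from NVIDIA's library, which is why no porting effort is needed for the supported subset.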

Right now, ZLUDA is only compatible with Intel Gen9 iGPUs (from Skylake through newer generations such as Comet Lake), which are supported by Intel's Level Zero, but the author plans to support Intel's upcoming Xe GPUs as well.

ZLUDA does not currently support AMD GPUs, though that may be technically possible in the future. Some readers will also find it interesting that the project is written in the Rust programming language.

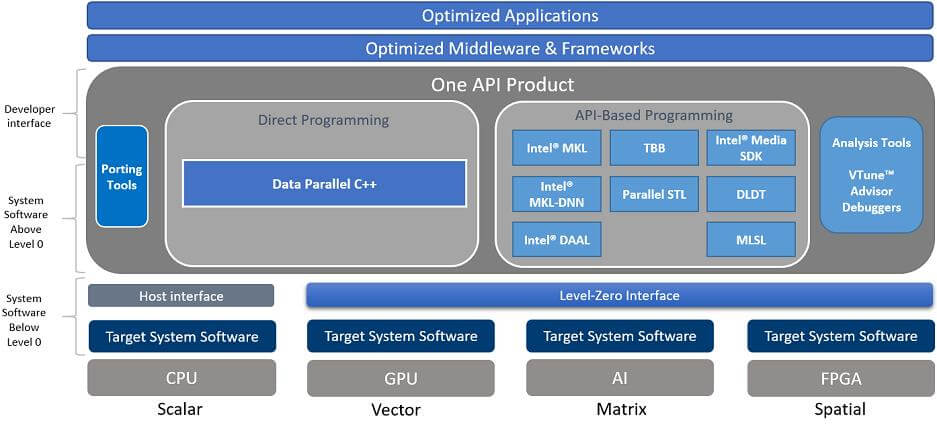

In case you didn't know, Intel's oneAPI is an initiative to create a unified programming model for performance-driven, cross-architecture applications. It enables code reuse and aims to eliminate the complexity of maintaining separate code bases, multiple tools, and multiple workflows.

The objective of the oneAPI Level Zero API is to provide direct-to-metal interfaces for offloading work to accelerator devices.

Its programming interface can be tailored to any device's needs and adapted to support a broader set of language features, such as function pointers, virtual functions, unified memory, and I/O capabilities.

However, most applications should not need the additional control that the Level Zero API provides; it is intended to supply the explicit controls required by higher-level runtime APIs and libraries.

oneAPI is based on industry standards and open specifications, and it comprises both an industry initiative and Intel's own implementation of oneAPI.

The industry initiative, which is open to all hardware vendors, specifies both a direct programming language, Data Parallel C++ (based on C++ and the SYCL cross-platform abstraction layer), and API-based programming for accelerating domain-focused functions. Many parts of it are open source.

In short, the Level Zero API serves a dual purpose: it gives fine-grained access to many low-level functions that most applications will never need to control directly, while providing that same explicit control to higher-level runtime APIs and libraries.

More details on this ZLUDA project can be found on GitHub.

Stay tuned for more!

Hello, my name is Nick Richardson. I've been an avid PC and tech fan since the good old days of the RIVA TNT2 and 3dfx Interactive "Voodoo" gaming cards. I mostly love playing first-person shooters, and I've been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

Music has always been my passion and my roots, but I started gaming casually at a young age on Nvidia's GeForce3 series of cards. I'm by no means a hardcore gamer, but I love anything related to PCs, games, and technology in general. I've been involved with many indie metal bands worldwide and have helped them promote their albums with record labels. I'm a very broad-minded, down-to-earth guy. Music is my inner expression and my soul.
