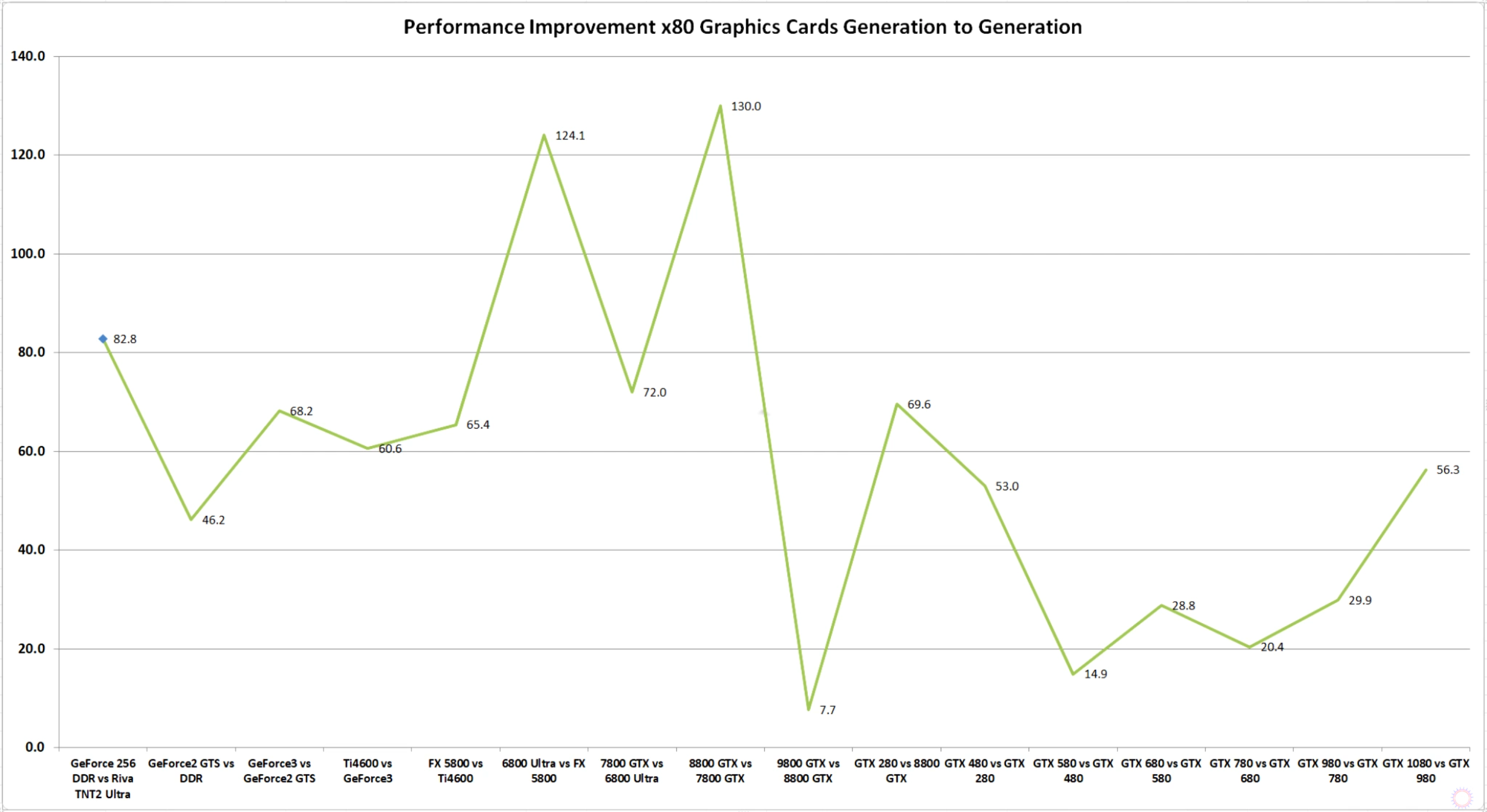

YouTube’s ‘AdoredTV’ has shared two interesting videos, highlighting the history of NVIDIA’s GeForce graphics cards. In these videos, AdoredTV has shared a graph detailing the performance improvement for NVIDIA’s GPUs per generation. And as we can see, the performance improvement has been significantly reduced.

Prior to 2010, and with the exception of the NVIDIA GeForce 9800GTX, PC gamers could expect a new high-end graphics card to be around 70% faster than its predecessor on average. Not only that, but in some cases, PC gamers got graphics cards that were more than 100% faster than their previous-generation counterparts. Two such cards were the NVIDIA GeForce 6800Ultra and the NVIDIA GeForce 8800GTX.

However, as we can see in the following graph, the generational performance gap has shrunk considerably. New high-end GPUs no longer offer a performance increase of over 50% (thankfully, the GTX1080 was an exception to that), and the increase over the past few years has averaged around 30%.

The reason behind this decrease is quite obvious. For starters, AMD cannot keep up with NVIDIA. Due to the lack of competition, NVIDIA does not feel the pressure to release a graphics card that will be way, way better than its previous-generation offerings. Obviously, that wasn’t the case a decade ago, when ATI was able to compete with and surpass NVIDIA’s graphics cards.

Not only that, but NVIDIA has been releasing multiple versions of its high-end GPU. These days we are getting a cut-down version of the high-end GPU (GTX980 and GTX1080 are examples of that), a Titan version that is overpriced, and a proper high-end version (GTX980Ti and GTX1080Ti). As such, NVIDIA gradually increases performance per version, thus giving the impression that every new NVIDIA GPU that comes out is noticeably faster than its previous one.

We strongly suggest watching AdoredTV’s videos if you are interested in the history of NVIDIA’s GeForce GPUs!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”


AdoredTV is probably one of the most blatant AMD fanboys on the internet. I wouldn’t really trust what he says or what he tries to show.

If we do an actual analysis, we’ll realize that even if the performance jump between generations decreases, what each tier of card can handle in games goes up. Today, with a 1060 you can run pretty much anything at 1080p, even maxed out in most cases. If we look at what the 960, 760, 660, 560, 460, and 260 were capable of, we’ll realise that performance in games actually increased.

For example few years back, in Maxwell times, if you wanted a card that ran pretty much anything you needed a 970, with a 960 you wouldn’t have gone far, this pretty much applies to Kepler 700 series also, and if we go even more back, to Kepler 600 series and to Fermi 500 series, if you wanted no problems you were aiming probably at 580/680/670 at least, it’s also true tho, that prices increased sensibly, i remember buying my old GTX 580 like, 2 months after it came out for ~480€ a year ago a 1080 was like how much? 600/700€? Basically nothing has changed, the same money gets you roughly the same overall performance. it’s actually not easy to explain what i mean, but well i tried

gamers standards/engines change etc etc.

True, but most people are still playing at 1080p, so yeah, that will probably change, just not yet tho. I mean, 230 to 300€ gets you a card that lets you play at 1080p with basically no compromises, unless the game is badly optimized, which happens, but still…

Indeed. I’d be curious to see the stats of resolution being used by gamers. I’m pretty sure like you that 1080p is still the king. Not everybody has money to buy a monitor every 2-3 years and hop on the 2k/4k bandwagon.

Steam isn’t a particularly accurate source, but it does serve to give a decent overall picture; only a fraction of people use 4K monitors so far.

Well what would be the best source of pc gamers specs ? Isn’t steam the most used platform ? If there’s a better source besides knocking on everyone’s door i’d like to know :).

And yeah, 4K monitors aren’t there just yet. Once you get that butter-smooth 2k/144hz (gsync/freesync), you just won’t go to 4k without it :). Plus the cost. Panels. etc.

Steam is the most used platform by far, but the Hardware Survey is on an opt-in basis, so its results fluctuate considerably every month.

It’s good for an overall picture, but it can’t give you precisely accurate results that you can brandish about. Either way, there’s really no better source, especially not if you want something that solely targets PC gamers, rather than “x amount of people use 4K monitors”, since that includes a sizeable number of professionals who use 4K for their workplace computers for various reasons.

Did you even watch the videos, or are you just blowing smoke from your a$$?

I watched many videos of AdoredTV otherwise i wouldn’t go to any conclusion.

Watch this one TWICE and then come back, and one more thing I am 34 now and I have owned many many many GPU-s from geforce4 ti to my fury-s and all I have to say that ADORED is 1000 percent correct in his analysis.. I remember the glory days of 70+ perf increases gen to gen.. hell ati had 100 percent with the 5000 series vs the 4000 series.. these days ppl have allowed to be milked by nvidia and amd for much much less but with a bigger and bigger price tag…

Yeah adored is 10000 percent correct, sure.

Watch it and come back.. Put aside your blind assumptions and watch it.. he is IN FACT 1000 percent correct

Those aren’t blind assumptions. I know the guy, what he says and how he thinks, so no, I won’t watch another of his hate vomiting again. Thanks.

Be blind then and live in ignorance if it pleases you because IT IS FACT that for some ppl convenient lie is far better than the inconvenient truth

It is fact to people like him that only want to vomit hatred the most ways he can.

watch it.. it is 1000 percent true and documented and backed up by previous reviews from tens of review sites… just watch it

Even the HD 5870 Lightning or Matrix weren’t 100% faster than their HD 4000 counterparts. Get your Adorked BS out of here.

You upvoted your own post??

ffs who cares about upvotes? sure its sad that the man upvotes his own comments but who cares?

That was when we could still get node shrinks more frequently than we do right now. As we near the physical limit on how small a transistor can be made, it is very difficult to offer a much bigger performance increase from one generation to the next. Just look at the 20nm node, which was not feasible for big GPUs. Both AMD and nvidia had to sacrifice FP64 to increase gaming performance on the same 28nm node. And don’t forget that going to a smaller node no longer always equals a cheaper price, since the smaller nodes are expensive to get right.

He called out AMD for their BS with VEGA, and said they won’t be catching up to Nvidia anytime soon.

Yeah, he’s pretty balanced. As someone who has only owned Nvidia GPUs, I see Adored as a level-headed dude. There’s a lot of butthurt Nvidia fanboys that don’t like the truth.

Or is it the opposite? AMD butthurt fanboys that are using any means to discredit nvidia? None of these big corporations is white or black; it’s just stupid to support either one or the other, in any situation.

I’m the last thing from an AMD fanboy as I’ve never owned an AMD GPU and only a few AMD CPUs. Both companies have their indiscretions but Nvidia has been top dog in the GPU market for years and they didn’t get there by playing nice. Not that that matters. They’re tops for a reason and I commend them, but no one’s closet is skeleton-free.

As consumers, we have every right–and should exercise the right–to criticize the companies that we give money to. It ensures that we keep them on their toes and ultimately get better products in the future.

The last thing we should want to be is complacent consumers that will buy anything, because then nothing, especially not technology, will improve.

Agreed, but the guy isn’t one of those neutral consumers who’s doing constructive criticism. I mean, look at the video’s title; even from there you realise the amount of hatred.

It’s not hatred. It’s just stating facts. He regularly bashes AMD, too. However, the top dog deserves the most scrutiny. Nvidia gets enough love from consumers that aren’t conscientious enough.

In the end, I want better performance for better prices. We’re not going to get that from these companies if we’re too busy gargling their nut juice.

His is just hatred, these are no facts other than the fact that moore’s law doesn’t apply anymore, and that there was no competition from ATi/AMD, these are the main facts.

Neither side is black & white, but they aren’t exactly the same either. People here like to laud NV and gameworks, yet the asking price is high and the performance gains are very small. I find it hard to defend NV when the above happens.

nvidia did the same bad stuff AMD did, same exactly, so they’re on the same level, really, it’s just a matter of giving credit and bashing the right one at the right time.

No, when I meant “not on the same level”, I meant in terms of what each of them has done from start to the current time. They haven’t done exactly the same moves, charged exactly the same prices, made the exact same marketing stunts, etc. Each has done things their own way. otherwise we may as well say that everyone and everything is exactly the same in this world and we all should know by now that that simply isn’t the case.

Giving credit?, you mean like giving NV credit for taking consumers for granted, or how we’ll no doubt bring up AMD doing that at some point and calling it a day?.

They have done different things, which pretty much explains why nvidia is where it’s at, and AMD is where it’s at, and before it ATi, and please don’t start with the usual conspiracies where nvidia and intel pay everyone to talk good about them and sht about AMD and ATi, because if AMD or ATi are/were where they are/were, isn’t nvidia’s or intel’s fault.

Giving credit to nvidia which has developed better hardware than its competitor for years, and still does, and give AMD credit for the great value hardware they make, then if you want to start talking about bashing, i’ve got for both of them, in the same quantity.

“They have done different things, which pretty much explains why nvidia is where it’s at, and AMD is where it’s at,”

which heavily lends back to you calling about credit where it’s due talk. I’m on about actual differences not differences that gave a company boons and boy scout merit badges that must be called up when the time is right.

I don’t need to talk about conspiracy theories. I just look at the big picture, without the need to talk about one company “paying off” another to make them look good.

I’ll always give more credit to the company that delivers good tech and a very, very good price, which isn’t nvidia. AMD isn’t rocking the kasbah atm, but they aren’t charging insane prices for slight perf gains like NV is doing with the 980ti, 1080 (upon release), 1080ti, Titan, Titan Xp and so on.

I find taking the approach to the balance of power and price to be the better merit to strive for, than to advance little by little, while charging more and more as time goes on. Charging 1m for some fancy car?, cool beans, but it’s clearly not doing much for the billions that cannot afford it.

For example, we have high end PC’s, yet devs still choose to go for consoles first, PC’s second, the high end power being squandered for years, with only a minute few games ever using the power entirely, both visually and performance wise (Christ, we still have 60fps locks in 2017 and 30fps cutscenes, no amount of power is going to change that). The day we start seeing all games utilizing higher end hw and coding for it first, then scaling back for the lower end hw and consoles, is the day we start seeing actual full on advancements, not the piddling excuse for a crap shoot we have had the past decade.

So Next-Gen VEGA HBM2 consoles, and finally we will have some DX12 progress 😀

The best tech has been delivered from nvidia in the latest years, low power consumption with same or better performance and temperatures, so they actually have an excuse to charge more than its competitor (not as much as they do, but still), if you analyze 3/4 gens of GPUs from both nvidia and amd/ati, look who has done the best overall product, so why shouldn’t nvidia charge more, after all they can do that, and it’s what every company in the same position would do, charge more since they can boast the best hardware, with the best tech. HBM isn’t even a thing.

At this point, it’s become a pointless endeavor when it comes to talking to people who think NV>anything else on the known planet.

nvidia isn’t better than anything, just better than AMD/ATi, at the moment at least. Now you want to deny that too?

Like I said, there is nothing more to be said to those that want a merit badge regardless of what is said and done.

You want to toss more insults while you’re at it?, because the other post implies that you do.

I’m not insulting anybody here, what are you talking about?

because you called me a “mad AMD fanboy”?, when I’m clearly not even an AMD user nor have I been one for a very long time.

Neither mad nor fanboy is an insult; I don’t know what to tell you if you rate them as such.

Fanboy is in fact an insult. It’s been used time and time again to make someone look so obsessive for something in a negative light, compared to being just a simple “fan” of something or someone. There is a difference between saying “I’m a fan of X” to “you’re a fanboy of Y”.

I’m sorry that you cannot accept the splitting of the two, but that’s how the general public views it. People take it negatively when you call them a fanboy, rather than a fan of something. I’ve been there, I’ve seen the results, it’s never pretty.

It’s not an insult, insulting someone is another thing, fanboy is more like an adjective, anyway it’s how you’re sounding, obsessively defending AMD and bashing nvidia, you had to buy nvidia hardware and you sucked it up, and ran nvidia’s way, but if you had a choice you probably wouldn’t have done that, so you could actually be even frustrated for that, and instead on bashing AMD for not putting a up a competition, your frustration is making you spout out all the poison you have towards nvidia.

I know you don’t think it’s an insult, but the public view says otherwise and that outnumbers you by default. The term “fanboy” has been viewed and seen as a negative insult for decades, compared to calling someone a fan of something they like. Fanboy is someone who is “obsessive” over something, being “obsessive” about something in its own light is not regarded as a healthy outlook to have on something, which again, the public eye sees as well.

I’m not even defending AMD here, I’m putting the spotlight squarely on Nvidia here. You want to paint the picture that I’m “defending” AMD because I called your defence of NV, to make it so the argument is cancelled out, another tactic I’ve seen people play before when faced with an opposing argument/opinion. That doesn’t cancel out my original argument on Nvidia.

See, you even say “bashing NV”, because that’s how you want it to be viewed as and in turn, you defend against said “bashing”, so that proves in general that you are in fact defending NV. You even made that counter argument that both are the same, because you simply didn’t want NV slighted.

I didn’t have to suck up anything, again you imply as such, but I’ve done no such thing. I choose what I want to buy and when I want to, not even you can decide on such choices made.

“poison” lol, you really are resorting to what you go against in terms of tactics, it amuses me that you have to resort to this style of play.

Yes, I’m defending nvidia, because it’s being over-bashed just to defend AMD, and that’s what many are doing; it’s basically the opposite of what you said.

Why do you even need to defend a company, that clearly isn’t your friend, clearly doesn’t care about you as a person and only wants your money?.

Why on earth does anyone need to defend a company at all?. They aren’t some little lost kid that’s off moping in the corner because their fee fees were hurt, or their brand image isn’t doing so hot.

Like I said before, I use Steam, doesn’t mean I have to defend it. I use NV cards, doesn’t mean I have to defend them from “poison”, because there is no need, nor will there ever be a need. The only people that feel they need to, are those who feel like they will lose some sense of investment, or feel like they made the “wrong choice” in something. In fact, that’s what people often tend to display when facing another person who talks about something they are invested in: they defend right off the bat, without question.

I could ask you the same, why are you bashing nvidia when someone is bashing amd in order to defend amd and make it look less bad?

Ask the same?. I don’t know how you can ask the same when I’m not the one “defending” a company here. I’m well aware of AMD’s position as well as nvidia’s, but I see no need to defend either of them. I do however see a need to point out that the one at the top is exploiting their gains to a point where it’s affecting the consumer. I want what’s best for the consumer, not the company.

Why are you defending a company that doesn’t give a toss about what you think, let alone your life?.

Why do you feel the need to make two totally different sides look exactly the same, what benefit does that net you or your life?, what does that net me for that matter?.

Why can’t you come up with more than just a few words to what I’m saying?. Why do you resort to “bashing” and “poison” tactics so easily?. Is it harder for you to write a little more to what’s being said, or do you not feel “invested” enough to care?.

You’re in fact defending AMD by changing goal post answering to someone who is bashing AMD, and your response is bashing nvidia, this is pretty much defending honestly.

I do not need more words than bashing and poison because the situation is plain simple and, besides, it’s 2:30 am here and english isn’t my native language, so i really can’t come up with many words, as i don’t even have such big dictionary.

it’s not even about changing goalposts here. You’re not changing the conversation itself from shifting from NV to AMD “defending”. I already told you multiple times that I don’t even own an AMD card and in fact own multiple NV cards, yet you willingly ignore this fact and decide to now focus on AMD “defending”.

No mate, you’re the one doing all the defending here. You cite anything about NV as bashing and call people “butthurt AMD fanboys”, simple as, clear as day.

You have wasted hours of my time acting like what you claim I am. Yet you willingly ignore that as well.

Thinking outside the box isn’t your strong suit either. I don’t care if you cannot speak proper English, you know how to use insults and you know how to dance around in circles and cite others as fanboys while not even bothering to look in a mirror.

You have the time, you learn proper English, either get at it or get out. If I’m to talk on a non English speaking website or server, I have to adhere to the language spoken, same with any other non English speaking country.

Indeed it’s not me shifting the goalpost, it’s you, and the fact you don’t own AMD products doesn’t mean anything, you just joined the bandwagon of AMD hype like many else, it’s like a trend, if you don’t join it you’re either a nv fanboy, or you’re trying to make AMD look bad in any case, like i’m supposedly doing, right?

So let’s analyse the whole discussion, both this and the other one.

I start saying that AdoredTV is a blatant AMD fanboy because he’s constantly bashing nvidia, over anything, and you and few other say “He’s not a fanboy, he’s totally neutral, he’s been bashing AMD also in the past months” go count all the videos where he talks sht about nvidia praising AMD, in every possible way, again, look at the video from this article, even that is trying to make fun of nvidia in a certain way, how can you not see how toxic he’s towards nvidia? It’s like nvidia did something wrong to him in person, i don’t know. Then another guys says “There’s a lot of butthurt Nvidia fanboys that don’t like the truth.” i answer saying, that what looks to me is that there are more AMD butthurt fanboys that when the topic is “nvidia’s good performance” (it’s an example), they go down the comments, and start writing, “nvidia is greedy” “they stole our money” “they’ve been doing bad practices to sell stuff” “they’re gimping AMD” “they’re paying reviewers to make them look better than the competitor”, all these things, is just hatred, coming from the badwagon, why do you guys feel this need on shtting on someone when the goal post is another? Another example is this exact article’s comment, the articles talks about how nvidia’s improvement per generation increased, as per AdoredTV video, someone start saying that this is probably because moore’s law doesn’t apply anymore (and that’s for everyone, not just nvidia), and because there’s actually no need of such improvement, if even the “minimum” you do is enough to beat the competitor, others say that once videocards cost less, and all sort of things, now these are proper neutral comments, now AMD boyos comments: “nvidia has been milking everyone” “gameworks rofl” “nvidia keeps raising prices, greedy bastards” “Dont bother.. nvidia dolts will just close their eyes and take it regardless” and many other things i won’t be quoting, or it’d take me a week.

At some point i post on a completely different topic which is a Prey mod, and what i post is this “Tried the demo, it has several issues with audio, and graphics has also other problems. Also the demo was pretty boring.” You come there, saying “Well that card didn’t save you now did it?. At that point i recognize you and another interesting amd vs nvidia debate starts, which then moves just to this. Now from a neutral person standpoint, how can someone consider you not an AMD fanboy, if even on a Prey mod you come there and put the amd vs nvidia into the mix, answering to me my comment that has NOTHING to do with amd vs nvidia thing? How can you not sound obsessive?

But let’s move forward, at this point you play the victim card,

saying that’s it’s pointless talking to someone like me that think that nvidia is the best, and there’s nothing better (never said such stuff), i answer saying that i only said that performance wise (despite the moore’s law decaying) nvidia has been doing better than AMD in the latest years, and there’s no denying that, and you keep pushing the victim card saying i insulted you, and i usually insult everyone, “You want to toss more insults while you’re at it?, because the other post implies that you do.” because i used “butthurt fanboy” (again you might see how you want but it’s not an insult, it’s not like i’ve told you stupid or rtard, completely different) so you keep saying i insult everyone that is trying to bash nvidia, when it’s in fact the opposite (see above), you and others bashing nvidia on other things, when the goal post was talking about nvidia’s decreasing improvements over the last years.

At this point you say i’m defending nvidia, and it’s what i was doing, since the goalpost was another, and it was already given the explanation by many other real neutral comments, but yet you kept bashing nvidia over prices, bad practices, and saying that AMD didn’t do such bad things or not even in the same amount.

Now you say something like “Why do you need to defend some company, it’s not like it’s ur friend, some lost kid or anything” and i say ok, why are you doing the same then, because clearly, the one who started bashing (again on another different thing of the goal post) nvidia, to DEFEND amd, it was you, not me (it’s a common thing people do, bashing someone to defend its/his/hers counterpart in the discussion) .

Now at this point, in relation to the “defending” thing, you play the “importance” or “meaning” card saying that nvidia doesn’t care about me or my life, so i shouldn’t care about either, so i shouldn’t defend them, which is in fact what i do, i do not care for neither of them, if a year nvidia makes better graphics card i buy that, if amd makes better cpus, i buy that, if the year after, amd makes better graphics cards, i buy that, if intel makes better processors, i buy that, etc, etc, i don’t care, i’ve been buying the best my money could buy for years, not caring at all about brands and stuff, and again, the why i defend some company is simple, no matter what company is, if the goalpost is another, and someone is being not neutral, i just feel i need to rebalance the equilibrium.

The last point is where you say, “You’re not changing the conversation itself from shifting from NV to AMD “defending” (?????)and continue saying, you don’t own AMD cards or haven’t in years, which means anything because it’s not like a requirement, owning something to talk good of it (I haven’t owned AMD stuff in years, last was 5850 on my bro’s pc, and before buying the 1060 i’m using now, i tried a rx480 which i had to send back due to coil whine and other minor problems, so that doesn’t count as owned), when i go around talking good about ryzen, i’m still here with my 2600K which is from intel, so what? That’s why i’m ignoring completely the fact you don’t own anything from AMD.

Now this part is bit more salty, in which you say i cannot think outside the box (still trying to find the meaning of this, in this context), and that you don’t care if i don’t speak proper english, because i know how to use insults (????) and dance in circles (???????????) while not bothering to look in the mirror when i use the word fanboy (ok so now you’re basically saying that i’m a fanboy, ok), needless to remind you that the one who started bashing in the discussion wasn’t, it was actually you, i started defending some company, from the pointless and out of place attacks of a youtuber. And this comes to an end, with you saying i should learn proper english if i’m to write on a international or english speaking website, while i think my english is decent enough to talk about the topics i’m most interested in, like hardware or videogames, i just struggle a little when it comes to these kind of posts, but whatever.

Also, do you happen to be either American or British? Because, I mean, your level of saltiness would be excused. Oh, and I’m also done talking with you, because there’s really nothing more to say, especially after this humongous post (probably the first time I’ve written something this long). Have a good day.

“Indeed it’s not me shifting the goalpost, it’s you, and the fact you don’t own AMD products doesn’t mean anything, you just joined the bandwagon of AMD hype like many else, it’s like a trend, if you don’t join it you’re either a nv fanboy, or you’re trying to make AMD look bad in any case, like i’m supposedly doing, right?”

Nah mate, that’s all on you. Trying to paint me as an AMD fanboy despite the fact that I’ve told you multiple times that I don’t own any current AMD cards, yet you deal in absolutes like some teenage Anakin Skywalker, who cannot accept what was told to him.

“I start saying that AdoredTV is a blatant AMD fanboy because he’s constantly bashing nvidia, over anything, and you and few other say “He’s not a fanboy, he’s totally neutral,”

Because he does in fact look at the bigger picture, the industry itself. All you see is “bashing” and “poison” like someone who’s clearly heavily invested into a brand, like their fee fees were hurt and that you needed to defend the other company by only bringing up its merits and bringing up the cons of the other side to make them appear “balanced”, when that’s actually a lot further from the actual reality we live in. You are trying your damned hardest to overwrite that with your own view on this current reality with your own opinions.

“There’s a lot of butthurt Nvidia fanboys that don’t like the truth.” i answer saying, that what looks to me is that there are more AMD butthurt fanboys that when the topic is “nvidia’s good performance”

So you want to meet the point where you are calling the kettle black then?, because you’re headed that way easily if you want to go by that logic.

You call someone a “butthurt AMD fanboy”, expect consequences to follow.

“Why do you guys feel this need on shtting on someone when the goal post is another?

You have gall mate, you have the sheer gall to call that when you’ve called me a “butthurt AMD fanboy”. Seriously, you are falling right into pot calling the kettle black territory, like it bloody well or not.

“But let’s move forward, at this point you play the victim card”.

Yeah, no, that’s not going to win any debates when you bring up the victim card. Sorry but that basically screws over your argument when you try to pick at straws so fickle as that.

I Bring up the “butthurt AMD fanboy”, because you insulted me and you refuse to acknowledge the way you acted, which again proves my point, that you’re a stuck up brick wall that loves to think he’s right all the time (because this long winded novel you’ve been writing shows that, when you could have been writing books worth of arguments from the start, you only do this now when I backed you into a corner).

You bash AMD as well as other people in general, that doesn’t make you some messiah of truth or anything, it just makes you the same as everyone else in the end, in calling the kettle black.

“At this point you say i’m defending nvidia, and it’s what i was doing, since the goalpost was another, and it was already given the explanation by many other real neutral comments, but yet you kept bashing nvidia over prices, bad practices, and saying that AMD didn’t do such bad things or not even in the same amount.”

because I’m not talking about AMD, if you hadn’t already noticed and read with the two eyes you were given, I was talking strictly about Nvidia. I don’t have to bring up merits from AMD or their cons when I’m talking about Nvidia, but according to your weird borked law of logic, I have to, in order for you to then bring up NV’s merits and go with the whole “nope, can’t deny it, accept that I’m right” type counter points.

“At some point i post on a completely different topic which is a Prey mod, and what i post is this “Tried the demo, it has several issues with audio, and graphics has also other problems. Also the demo was pretty boring.” You come there, saying “Well that card didn’t save you now did it?. At that point i recognize you and another interesting amd vs nvidia debate starts, which then moves just to this.”

Because you bring up NV’s merits while ignoring their cons, you call me a “butthurt AMD fanboy” and expect nothing more in return?, Dunno what country you came from mate, but what goes around comes around. You want to praise NV to the high heavens, be ready for someone like me to be there when you trip up on those merits of yours.

Yeah, after reading your long winded retort, I’ve come to the conclusion that you’re full of hot air. You pull out “card” arguments that hold no weight and you’ve honestly never once felt like you’ve done anything wrong in calling others names. You are a waste of time and not even remotely a pleasant person to talk to. I’ll take the mods’ advice this time and do what should have been done in the first place.

“you level of saltiness would be excused”

Yeah, just what I thought, an utter trite waste of my time.

No, you don’t mean for me to have a good day, so drop the petty act.

I really mean for you to have a good day. What we’re talking about here is stuff that is highly affected by each person’s opinion. I don’t care about your opinion, you don’t care about mine, that’s basically not going to change, and apparently neither of us is going to make the other change their mind. So basically, it ends here: you think or believe what you want, and I’ll do the same ofc. I see no other way of ending this.

PC gamers are simply hard to please. When developers make their games easy to run, people complain that developers are not taking advantage of the raw power available on PC, especially on high-end hardware. But when developers make their games more demanding, to the point that they can choke even high-end hardware, people say it is poor optimization. And when developers use extra graphical stuff like GameWorks, people complain that developers are using stuff nobody asked for.

Same. I’ve owned more NV cards over the years and even I see him as level headed and decently thought out when it comes to him presenting information.

So what? If he wouldn’t he would be even more blatant than he already is, Vega is a failure, you really have nothing to say otherwise, not even the fact that RTG has been starving on funds.

What has he said that got you so butthurt?

Butthurt? I don’t care about him at all, he’s just an AMD fan and uses any means necessary to shttalk nvidia.

Give examples then.

No examples i don’t remember any example, i just remember he’s an AMD fanboy and the way he acts and talks is pretty eloquent.

Failure how? That it isn’t fastest card? Or it uses 60W more power? Where is failure?

It is not the fastest card and it uses much more power than its counterpart. Also, recent tests showed that even the best AIB versions, like Asus’ Strix, are not good enough to dissipate all those watts, so it will probably need either a big a$$ heatsink or just a liquid cooler. That was seen in Guru3D’s review, which was taken down because Asus couldn’t get the right frequencies in the BIOS, so I imagine temps will go even higher once that update drops.

So far, he’s one of the few out there that look at the big picture. All hardcore AMD/NV fanboys have been doing is blowing smoke and slinging trash at one another.

The guy calls out both sides, though would anyone truly want to care for the company with the biggest share and very, very little concern for its consumer base (let’s be absolutely real here, NV don’t truly care).

That’s exactly what he does, so yeah

The world doesn’t end at 1080p. I’m still waiting for a proper 4K graphics card, NOT a 1300 bucks one. So go figure. Is this AMD’s fault? Well, yes… somehow they are part of the problem. Is this Nvidia’s fault? You can bet on it. It is. Freaking greed.

Ps i have a 1080 ti. Not an amd blind fanboy

I know, and I agree, but atm that’s still the most used. It’ll change for sure, but not within 2017 and probably not even within 2018, hardly in 2019, but who knows. Anyway, nvidia is as greedy as amd and as intel, they’re all greedy. They’re not non-profit organizations, they’re corporations, and their goal is to make money by delivering what they think is enough for the money they ask.

He likes AMD, yes, but calling someone a fanboy indicates that they are completely biased, which AdoredTV is not.

He’s very biased, the fact that he also bashes AMD doesn’t make him less of a fanboy.

He knows a lot more than you sad sacks that call this place home.

AMD has been playing the chasing game (and still is) for the past decade and especially for the past 4 years. Much like Intel on a CPU front NVidia has no real competition and thus slows down.

Makes sense but still AdoredTV is always ready to show stuff that puts nvidia in bad light, and sometimes even making it worse than it looks.

Are you sure about that? He’s been bashing AMD’s GPU division for the past 6 months.

Doesn’t make him less of a fanboy to be honest, he’s just doing it to not look that blatant.

Oh so now you’re embracing conspiracy theories? Who’s the fanboy here? Good lord lol

No conspiracy theories, that’s what anyone would do to look more neutral.

Do you even believe your own bullshit? This is ridiculous.

Just deal with the fact he’s a fanboy.

Just deal with the fact you’re wrong

Digital Foundry released a Vega 64 benchmark, then tested how far the technology had improved by downclocking it to Fury X level. Well, in some games it lost to the Fury X and in others it was identical.

Looks like Vega is a scam, an overclocked Fury

Amd surpassed intel in cpu-s tbh , especially in the hedt dept

Whilst GPU prices have shot up. I remember when £400 to 500 got you the top GPU nowadays it’s nearer £800 plus.

And it’s getting higher each gen. It’s ridiculous at this point.

we are all paying for mid-range card with a high end pricing… we are the stupid ones not nvidia or rtg

read my comment above, its true that each generation the flagship xx80 card by nvidia is more cutdown in terms of cuda cores from the full unlocked chip but its because of competition…but to be honest…i have the 1070, at only 50% of the maximum cuda cores (1920 from 3840 in the GP100) and that performance at 150W…can you really complain though? when AMDs offering is vega 56….

1070s with 1x8pin working on HDPlex PSUs…check

1070s in slim laptops/desktops like MSI trident with relatively good performance/acoustics…check

1070s in itx format…gigabyte and msi check

AMDs offering on these 3 fronts…error :/

NOT AN NVIDIA FANBOY I SWEAR TO GOD! JUST STATING FACTS HERE 🙂 THATS WHY TDP MATTERS FOR AMD CARDS…LAPTOPS AND ITX

I agree, don’t get me wrong here. I will never even try to justify Vega, I can only recommend Vega 56 @ 399. All I am saying is that, given the obvious lack of competition, we the consumers (this is on us) actually go out and pay a premium price for sub-par gains compared to the times when competition was rampant and companies fought for our hard-earned money. Let me give you another example… The only nvidia card that I had was the GeForce4 Ti 4600 (a masterpiece of a card no doubt, only the ATI 9700 Pro outshines it). I paid 745 euro for it (today’s money); the 1080ti (which is not a full chip) is 1125 dollars here in my country… No one, and I mean no one, can argue with me that NVIDIA, given the lack of competition from RTG, is not trying to rob me blind..

Remember when the “70” of each nvidia generation used to cost closer to 300 than 400$

I remember like 8 years ago when i got my A+ cert the book said 200$ was generally the best price for a gpu. Now that seems to be 300$.

I guess we can blame inflation

A book that is talking about MSRP’s and telling you which the right one is for GPU’s…. Something that is not ever static in any market… and it was a book you were learning from for some A+ cert….

ooookaay…

Vega would be a much better option if all the features Vega has were used. The problem is that Vega is an unfinished product (software-wise). In some cases that is not a problem, like FP16, as no games currently support it (similar to SMT, which also wasn’t supported on Pascal when it was released), but many other features are crucially important. Vega can also be incredibly efficient, but again AMD did not optimize it even on that front. It is understandable, money-wise, and at the end of the day customers are to blame for this. But as most customers actually do not want any competition, they deserve to get a monopoly. Which is exactly what happened in the high end.

Nice AMD defending. 🙂 Do you realize that Vega needs significantly more power to match its competition? It is obvious that AMD was aiming for GTX 1080 performance level and the GTX 1080 Ti surprised them. Now they are pushing Vega to its limits. AMD has been presenting Vega since December 2016. How is it possible that they were not able to get all these miracle features ready before release? Sorry, but that’s unacceptable.

“Problem is Vega is unfinished product (software-wise) in some cases ”

This is the real problem. How can you excuse the release of an unfinished product? Why would customers care about it if they can have GPUs with no such problems from the competition? It is not their mistake. It’s just AMD’s problem and AMD can be blamed. Not customers. Please stop blaming customers for the mistakes of your favorite company.

i do get that, but if that is true… why praise an unfinished product for amd???? why not bash them for it? are we that desperate for competition?

my main question, how do u know its unfinished? the rapid packed math for example is “new” and “un-utilized” not unfinished…

in my country the 1080ti is around 1050$ as well…but you can somehow find deals for 900 unless the prices are not updated :/ or offers for a full build

Actually Vega 56 is a good option over the GTX 1070, ~20% faster but with a higher TDP.

And I say this as an owner of a 1070, mind you.

Well, in the UK the Vega 56 is £469, while the GTX 1070 is £389; £399 for Vega 56 was an offer price.

That I didn’t know mate, but thanks for the info.

its 2% faster according to hardware unboxed 🙂 not 20

My bad, I read an article here where it was mentioned around 20%, that’s not the difference in performance.

I’ve checked the Guru3D review of the Vega 56 now, Vega 56 is faster than the GTX 1070. Not by 20%, but definitely much more than 2%.

if its founders edition…then 56 is about 5-7% faster, but max OC both cards…theyre almost neck and neck, if you exclude games that are incredibly better on a certain card (IE dirt 4 for AMD and PUBG, dishonored 2 for NVIDIA)

Founders Edition is stock, when you bench 2 cards they should be stock VS stock. Not overclocked VS overclocked card; the overclocking lottery should not be a factor in any serious review.

It’s definitely more than 5~7%. The lead in Battlefield 1 is massive.

yes battlefield is good for amd but notice that ppl test it on dx12, while not all ppl do play it on dx12, some play it on win 7 so that is dx11…all im saying is that massive outliers should be excluded first…get the %…then add them, with massive outliers out of the way you can see the actual performance difference because every game is coded/optimized differently, some games like frequency, some like memory bandwidth like resident evil 7 at Vhigh, else ryzen would have eaten intel alive in gaming 😀
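The "exclude the massive outliers first, get the %, then add them back" idea from the comment above can be sketched roughly like this. The FPS numbers are invented purely for illustration and do not come from any review; `card_a`, `card_b`, the game names and the 15% cut-off are all hypothetical choices.

```python
from statistics import geometric_mean

# Hypothetical average FPS per game as (card_a, card_b).
# These values are made up for illustration only.
results = {
    "Game A": (100, 104),
    "Game B": (80, 84),
    "Game C": (60, 63),
    "Game D": (90, 126),   # outlier that strongly favours card_b
    "Game E": (120, 96),   # outlier that strongly favours card_a
}

def relative_lead(results, outlier_threshold=None):
    """Geometric-mean lead of card_b over card_a across all games.

    If outlier_threshold is given, games where one card leads by more
    than that fraction are left out before averaging.
    """
    ratios = [b / a for a, b in results.values()]
    if outlier_threshold is not None:
        ratios = [r for r in ratios if abs(r - 1) <= outlier_threshold]
    return geometric_mean(ratios) - 1

lead_without_outliers = relative_lead(results, outlier_threshold=0.15)
lead_with_outliers = relative_lead(results)

print(f"Lead without outliers: {lead_without_outliers:.1%}")
print(f"Lead with outliers:    {lead_with_outliers:.1%}")
```

Reporting both figures side by side makes it visible how much of an average lead comes from one or two games, which is the point the comment is making.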

There you’re right mate, I’ve seen only benches in DX12. Perhaps in DX11 it’s another story.

Check the Guru3D review of the Vega 56, it surpasses the GTX 1070 in almost every game.

Though I’d admit it was my bad, I read an article here where the ‘20% faster’ was mentioned, and I took the rumor for granted. That’s not the performance difference.

i saw it, but i mainly see hardware unboxed for the 30 game average, which is far ahead from any review site! what do you mean by surpasses then? does the 1070 get 100 fps and the 56 get 105? or even 110? that does make it faster, but for example at 1440p high/max settings and 60 fps id be better off saving a 100+ bucks getting the 1070 than getting a 1080…the xx80 series are almost always terrible value for money. in the case of vega 56, its bad for the need of a beefy cooler, i may want a laptop 1070 or itx 1070. you see my point?

Must be a typo that 20%…

Have you actually bought a Radeon in the years when it was much more power efficient? Because the whole problem is that ATI, even with much better GPUs, never made profits close to nvidia’s, therefore they cannot invest enough into R&D. Regardless of that, they are still viable competition; in CPUs they are actually better than intel with a fraction of the investment. If this is not something worth supporting, then I have no clue what is.

may I ask you if it was more power efficient in the early years…why did it not sell more than nvidia? it doesnt make sense then, and yes i had 2 very old amd gpus…hd 5450 and hd 7750

putting all this aside, lets assume im new in this whole gpu war, who is more power efficient now? if they simply cannot invest more…find a source then

inflation and higher manufacturing costs maybe? we dont know the actual die manufacturing costs? globalfoundry might be getting greedier…but its certain that nvidia is not only to blame for that, the 980ti launched at 650 as well as the fury x

AMD is the greedy one +$500 for FE AiO watercooling. +$200 for RX watercooling and then the MSRP scam. Not to mention selling stock coolers separately for $35

Pretty much…

Yeah, i remember getting the HIGH END ati radeon 9800xt for 499… these days that is the price for mid range whilst the HIGH END as in full DIE is 1200…

pascal titan x was 1200 and not the full die 😛 only 3584/3840 CUDA cores before they relaunched it

Titan Xp not the 1000 bucks TXP

TBH it’s inflation + top GPUs being… well really top (except for those weird Titans and Furies that are beaten by a cheaper card in 6 months). If you head 10+ years into the past and check top GPUs of the time they weren’t offering such a leap in performance over what was considered to be performance grade. Radeon 9800 vs. 9600 wasn’t the same leap as, say, GTX1080Ti vs. GTX1060. Same goes for mid-range 8800GT vs top notch 8800 Ultra. Mid-range pricing (e.g. comfortable play at the current most common resolution) stayed very much the same (300-400 usd)

Inflation, come on don’t be that naive.

Not only inflation. Transitions to newer and better manufacturing processes are more expensive (because they are more and more complicated). And of course there are things like the situation on the market at a given time. There are many factors influencing the target price of GPUs. It is not only about greediness.

Do not bother, it’s just nvidia being greedy

/s

Get your sht together everyone, people need to learn to give credit to AMD, nvidia or intel when they deserve it, and to criticize and bash them when they deserve it, not bash or give credit only to a certain brand every time.

The facts are these:

1) There is definitely inflation

2) Manufacture processes are more and more expensive

3) NVIDIA has no real competition in high end

4) People are buying GPUs even at these prices (look at NVIDIA’s quarterly results after the 1080/1070 were released)

There are many factors influencing prices. And there are of course vendors like ASUS, Gigabyte, MSI and others, who are the real manufacturers of the GPUs we are buying. NVIDIA and AMD are responsible for only one part of the finalized product. Look at the recommended prices from both of them and how different they are compared to final prices. This is really not about defending one brand. It’s just not that easy to say that someone is greedy.

Mind the “/s”, I was agreeing with you. It’s just that people are fking fixated on the idea that nvidia is greedy and AMD isn’t, when one could very well call AMD very greedy, but that’s how fanboys work.

I did not realize, that “/s” is for sarcasm. 🙂

🙂

A card that cost $600 in 2004 would cost $800 now if a manufacturer wants the same return. It’s just a reality. Prices in USD are up by about 1/3 since then. And top tier videocard pricing indeed went up by that percentage, give or take.
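A quick sketch of the arithmetic behind the $600 to $800 figure quoted above, taking the commenter's two prices at face value. Note that a one-third rise in price corresponds to a dollar buying a quarter less, not a third less. Treating "now" as 2017 is an assumption based on the dates mentioned elsewhere in this thread.

```python
def price_increase(old_price, new_price):
    """Fractional rise in price from old_price to new_price."""
    return new_price / old_price - 1

def purchasing_power_loss(old_price, new_price):
    """Fraction of purchasing power one dollar has lost over the same period."""
    return 1 - old_price / new_price

def implied_annual_rate(old_price, new_price, years):
    """Constant yearly rate that compounds old_price into new_price."""
    return (new_price / old_price) ** (1 / years) - 1

old_price, new_price = 600, 800   # figures quoted in the comment
years = 2017 - 2004               # assumption: "now" means 2017

print(f"Price increase:        {price_increase(old_price, new_price):.1%}")
print(f"Purchasing power loss: {purchasing_power_loss(old_price, new_price):.1%}")
print(f"Implied yearly rate:   {implied_annual_rate(old_price, new_price, years):.2%}")
```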

ok lets leave inflation out of it…what about r&d?! developing a whole new architecture? increase in company size and assets? heck..what if they just want more ROI (return on investment)? for the past 4 years nvidia has delivered pure performance, and AMD…while competing nicely I might add, is just playing catch up, especially this generation. to the point that any game that is gimped on amd hardware is 90% due to “gameworks”. no buddy, look on paper, the vega 64 is 12 tflops and the 1080ti is 12 tflops…but they are miles apart

People who don’t understand economics should never use the “it’s inflation, stupid” excuse.

This isn’t inflation. Boosting your product’s price by $50 every year isn’t inflation, that’s price hiking.

Indeed. It’s mostly been a combination of ‘It’s a lack of meaningful competition, stupid’ and ‘It’s greed, stupid’.

i dont need a freakin masters in economics to say its inflation, i live it! i see the prices going up every year in everything…food, clothes, etc… inflation is part of it yes! you cant deny that, you just cant…adding 50$ to the price of a xx80 nvidia flagship every generation may very well boil down to +25 inflation +25 because we are greedy and amd cannot compete to force us to lower our prices…other generations it can be +30/+20…or 10/40..it depends on manufacturing costs

in october prices will increase by 10% due to gddr5 memory price hike, if nvidia released the 11 series in december, and accounted for that increase dont you think ppl will say they are greedy?

Like I said, almost doubling a product’s MSRP within half a decade is more than just standard inflation – like you said yourself, actually;

“+25 because we are greedy and amd cannot compete to force us to lower our prices…”

“in october prices will increase by 10% due to gddr5 memory price hike, if nvidia released the 11 series in december, and accounted for that increase dont you think ppl will say they are greedy?”

Obviously. Considering how expensive their products already are & how massive their profit margins on them should be by this point, there’s absolutely no reason what-so-ever for them to push the GDDR5 cost increases onto the consumer; they can choke on it themselves – and they should.

your opinion is respected and all, but when they are already in a good position, increasing the profit margin as much as possible is also a choice. all in all we are not the ones who decide this. they have a financing team that studies “should we choke on the increase in price, or add it on the price of the cards, lets calculate the profits using each model”. keep in mind though that increased profit = increased r&d budget to stay on top = better products (slightly more expensive :/). to give you a little perspective of where I live (Egypt). the gtx 1080 Ti is at lowest!!! = 950 USD. the gtx 1050 = 180-200 USD the gtx 1060= four freakin hundred USD so NO I am not happy about price increase but greediness is not the only one to blame

“increased profit = increased r&d budget”

Yeah, no. In a better world, sure. In a world where Nvidia has to actually give us their best in order to remain competitive, absolutely. However, in this world, wherein AMD is struggling just to keep up, not a f*cking chance in hell. Watch the videos linked in the above article, the bloke describes this exact problem; Nvidia isn’t even trying anymore, because they know they’re going to win either way & they’ve been this way every-single-time their competition turns to sh*t, ever since the very beginning.

Likewise, there’s a reason Volta was delayed; they knew they’d get away with it & they did, simple as. As for your local prices (Jesus……) if it’s country-wide (or even region-wide) then you’re probably getting f*cked by the typical “distribution costs” excuse. It’s an old one that the tech industry is still peddling like it’s 1999 because nobody’s calling them out on it enough – yet.

yeah the local prices are outrageous (I can show you used prices as well from a 25k+ used pc parts group on fb…so a large group, but you will tear up). my point is that you cannot say they are not trying anymore, the 10 series cards are very good, gpu boost 3.0 alone is enough to tell you that they are indeed trying. my 1070 g1 gaming is doing 2000-2012 alone just by putting it in oc mode, the only thing the xtreme gaming model will do better is lower temps but increased power draw. nvidia basically put all air cooled cards on plain field if the cooler is designed correctly.

nvidia is not solely responsible for that…if 500 got you the highest end, why doesnt the fury x launch at 500? inflation exists…

I mean at this point in time, technology had to slow down. Moore’s law doesn’t apply anymore. Intel, amd are suffering the same fate.

Nvidia is among them, they’re not better.

This is also true

Uh, what? Moore’s Law doesn’t what?

You might want to revisit that.

He’s actually right, Moore’s law doesn’t apply anymore, we don’t get double the transistors every year and a half or so, not anymore at least, so yeah…

The increase is still occurring, just not as often because the industry is terrified we’re going to reach that cap sooner, rather than later. That doesn’t mean the Law is dead, the Law still applies so long as the increase still occurs, it just means it’s a modified version of the law that’s in effect, now.

Here;

“In my 34 years in the semiconductor industry, I have witnessed the advertised death of Moore’s Law no less than four times. As we progress from 14 nanometer technology to 10 nanometer and plan for 7 nanometer and 5 nanometer and even beyond, our plans are proof that Moore’s Law is alive and well.”

April 2016, Intel CEO Brian Krzanich

The increase isn’t occurring now, we’re not getting that transistor increase every 18/24 months. The law can remain but it doesn’t apply at this time. Also, it doesn’t really matter what Krzanich says; he could just as well be pushing what the founder of Intel said for the sake of it, because he’s Intel’s CEO.

The increase is occurring every 2.5 years, they slowed it down, but it is still happening.

The law has remained & does still apply, just at a slower rate.

Even if they’re down to raising transistor counts every 3-5 years, so long as the count still goes up on a schedule, no matter what that schedule may be, the Law applies.

Yes, we are reaching the end of Moore’s Law, but we’re still at least half a decade out from it, at the minimum, assuming the manufacturers don’t actually try to slow the growth process down even further in order to stretch it out some more.

Man, the law says one thing exactly, you can’t just slow it down: “Moore’s law is the observation that the number of transistors in a dense integrated circuit doubles approximately every two years”

or again

“The period is often quoted as 18 months because of Intel executive David House, who predicted that chip performance would double every 18 months (being a combination of the effect of more transistors and the transistors being faster)”

example: 980Ti, maxwell, september 2014 (architecture release), 8 billion transistors

1080ti, pascal, may 2016 (architecture release), 12 billion transistors

from sept 2014 to may 2016 there are 20 months, and the transistor count hasn’t doubled, it’s 50% more.

source is wikipedia.
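To put a number on the example above, here is a small sketch that takes the quoted figures (8 and 12 billion transistors, 20 months apart) at face value and works out how long a doubling would take at that pace. It assumes steady exponential growth between the two data points, which is a simplification.

```python
import math

def implied_doubling_months(count_start, count_end, months_elapsed):
    """Months needed to double at the growth rate observed between two counts."""
    growth_ratio = count_end / count_start
    return months_elapsed * math.log(2) / math.log(growth_ratio)

# Figures quoted in the comment above, in billions of transistors.
maxwell_count = 8.0
pascal_count = 12.0
months_between = 20

doubling = implied_doubling_months(maxwell_count, pascal_count, months_between)
print(f"Growth over {months_between} months: {pascal_count / maxwell_count - 1:.0%}")
print(f"Implied doubling period: {doubling:.1f} months")
```

With these inputs the implied doubling period comes out at roughly 34 months, slower than the 18 to 24 months usually quoted for Moore's law.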

Here’s some more Wikipedia;

Moore’s law is an observation or projection and not a physical or natural law. Although the rate held steady from 1975 until around 2012, the rate was faster during the first decade. In general, it is not logically sound to extrapolate from the historical growth rate into the indefinite future. For example, the 2010 update to the International Technology Roadmap for Semiconductors, predicted that growth would slow around 2013, and in 2015 Gordon Moore foresaw that the rate of progress would reach saturation: “I see Moore’s law dying here in the next decade or so.”

“Gordon Moore foresaw that the rate of progress would reach saturation”

More like it reached it already and since it’s not applicable anymore, nvidia (in this case) is just releasing more architectures, that’s why we had so many refreshes, in the latest architectures, starting from fermi 400, the same year saw fermi 500, then we saw kepler 600, which was a minor improvement over fermi 600, and then we saw kepler 700 which was the actual improvement over fermi 500, and it came out 3 years later but it was 2 gens earlier, not 1 earlier, from 500 to 700.

Architecture is a separate thing from transistor count. Recycling architectures is Nvidia being lazy with R&D because their competition has been sh*t, which is why they’ve recycled Maxwell so much, instead of moving on to Volta by now.

Architecture comes down to R&D, as does PSU consumption, etc. Transistors are standard.

Still, it’s not what we had on the market, the moore’s law isn’t applicable here, and most likely won’t be in the future, but you never know.

No it doesn’t like it did back in the 2000’s.

yep, we’re reaching the limit of how small we can make each transistor

Dont bother.. nvidia dolts will just close their eyes and take it regardless

There’s a major mistake in this graph: it skips some rebadges, but not others. The 9800 rebadge of the 8800 is included, then the 300 series rebadge of the 200s is skipped, then the 500 rebadge of the 400s is included, and the 700 series rebadge of the 600s is included but the 800 re-rebadge is skipped…

Consequently the graph isn’t always showing generation-to-generation performance gains, especially in the last few years.

300? 800? What?

The 300 series is just a marketing label to sell more 200 series GPUs. Same for the 700s and 800s which were both just relabeled 600 series GPUs.

There was no 300 nor 800 series, those were exclusively mobile.

AdorkedTV lol.

Here’s another fool with AMD hype. Post his BS too. youtube . om /watch?v=Vl7r-zLq7wQ

Get a life.

Salty much, trash?

Just block him.

How’s Vega 40% faster than 1080Ti doing?

He upvotes his own comments, he really is in need of attention…

I’m sorry guess you have to be AMD fanboy for permission to upvote your comments.

Which AMD fanboy here upvotes his own comments??

yeah Get a life and stop upvoting your own comments

Change your name to Denial.

Watched these yesterday, they’re insanely interesting videos and well worth a watch. We’re at least still getting good products put out there, it’s just the outrageous prices and staggered high end releases (980 –> 980 Ti –> 1080 –> 1080 Ti) that chaps my a*s.

AdoredTV’s videos are always incredibly insightful.

His channel is AWESOME.

NVIDIA low performance…yeap,another fault from the AMD!!!

How much of this is because of Moore’s Law ?

I’m sure competition is a factor here, but I think the bigger factor is diminishing returns – it’s getting harder to make the same leaps as before because of technological limitations.

I mean, AMD has also been facing the same issues, it’s not like they’ve been getting constant performance increases every generation.

Just AdoredTV things…

What Adored meant to say is that back in the day for 600usd you got the top GPU, but now for 600usd you can only get a 1080, not a 1080Ti, not a Titan.

Well, since graphics have probably reached near-realistic visuals, this doesn’t come as a surprise

games before 2010 had much simpler textures and visuals

though honestly much better gameplay

i know everyone loves to bite Nvidia in the ar$e but AMD has done F**k all in a decade or so in the GPU department

all of their cards are overpriced and underpowered

with the addition of vega (which isn’t even for sale due to stock shortages)

i can’t see the point of fanboying a company who is pretty much identical to Nvidia (but $hittier)

sorry fanboys, but i don’t buy into this bull$hit, Nvidia has made great progress into making a 4K60FPS ready single card which many people wanted for years

and for 700$+ it’s not that expensive honestly (considering Inflation that is)

i actually think that from generation to generation the difference is still around 50-70% because of the cut down CUDA cores of xx80 which launches as the flagship model! when AMD cannot/ can barely compete with a full VEGA 10 card at 300+ watts and 4096 Stream processors against a SEVERELY CUT DOWN GTX 1080 at 2560 CUDA cores from a maximum of 3840 CUDA cores of the GP100 at only 300W max power draw at that…then the performance increase per generation will decrease…the gtx 1080 only has 66% of the max cuda cores amount of the gp100 full chip. heck, the gtx 1070 has 50%! (1920) and i have that little beast.. 2ghz and all games at high/ultra 60+ on 1440p so did my gtx 970 before it…never felt the 3.5gb issue…so you can excuse nvidia for cutting down costs by producing a cut down chip…although their very low end is trash value (gtx 1050 should not have existed imo…1050ti is great at 110$). the next volta generation may as well be the same pascal only

1. higher cuda cores per card…say 3072 cores for the 1180 and 2304 for the 1170

2. 12nm process optimization (higher speeds and IPC)

3. ?????

4. profit
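The "cut down" percentages mentioned in this thread are easy to check. This sketch uses only the core counts quoted by commenters here (3840 for the full chip, 2560 for the GTX 1080, 1920 for the GTX 1070, 3584 for the Pascal Titan X) and simply divides them; whether 3840 is the right baseline for each card is the commenters' assumption, not something verified here.

```python
# Core counts as quoted in the comments; the 3840 baseline is the
# commenters' choice of "full chip" and is taken at face value.
FULL_CHIP_CORES = 3840

cards = {
    "GTX 1070": 1920,
    "GTX 1080": 2560,
    "Titan X (Pascal)": 3584,
}

def enabled_fraction(cores, full=FULL_CHIP_CORES):
    """Share of the full chip's CUDA cores that a given card has enabled."""
    return cores / full

for name, cores in cards.items():
    print(f"{name}: {cores}/{FULL_CHIP_CORES} = {enabled_fraction(cores):.1%}")
```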

There are fundamental reasons for this including the unified shader architecture and dx9 introductions pre 2010, the tech simply isn’t moving as fast since those days

At this rate Nvidia could be losing performance and it would hardly matter with the amount of fight AMD is putting up at the high end. You know things are bad when AMD puts out a range of graphics cards and Nvidia’s response is effectively a price INCREASE :S

This is what happens when your competition had decent hardware but failed in both a marketing / software support aka drivers standpoint to take on Nvidia.

Hopefully AMD will get back on track soon so Nvidia can Try again. If you want that 70% performance increase. AMD has to bring it hard while taking away a ton of the market from Nvidia.

Well, who buys a new GPU every year? Just wait 3-4 years and you will have that 100% boost. Ps. I have an AMD card and I’m not a fanboy.

What is also being ignored here is Moore’s law.

You can’t ignore that this is hindering the progress of gpu generations.

This is reason both companies are exploring ways to have multiple gpu working in tandem as a single gpu

Moore’s Law only applies to die shrinks, it doesn’t justify why they’ve been repackaging the same architecture for the last….. 3? generations while inflating the prices every year.

And die shrinks are directly related to performance.

Look at AMD and their million watt mini nuclear power plant gpus.

They can only produce so much power due to the size of their GPU and its power draw. If they could reduce that further and thus reduce power draw, then they would also be able to increase the output further.

There is also the reliability of manufacturing any GPU when increasing its size. Hence AMD and Nvidia hoping they can find a way to rubber band two or more GPUs together

Die shrinks haven’t stopped, though.

I mean, yeah, they’ve been repeatedly delayed in the last few years due to manufacturing issues, but neither AMD, Nvidia nor Intel has ever stopped shrinking dies (thus far).

Though I think I understand what you mean about making multiple GPUs function in tandem, in order to overcome the current transistor limitations.

No, they haven’t stopped yet, but it is getting increasingly difficult, and ideally AMD and Nvidia saw Pascal and Vega coming much earlier, and die shrinking was a big part of that due to stability issues.

I agree Nvidia generally drag out their generation slightly, but I don’t think this is purely a calculated decision based on AMD not offering competition.

I think there’s limitations to development that both are working with and they stagger cards accordingly to maximise revenue until next generation is ready.

Part of this though, is that manufacturing reliability increases over time allowing them to push hardware further due to better yields.

In some ways I think this is better situation as previously pc hardware was so far ahead of consoles that hardware just didn’t get taken advantage of.

My 690 when first purchased was playing games with msaax8 and still had headroom at times. But realistically there was little other advantages that justified such power

Partly agree. R&D issues associated with die shrinking are definitely one reason for the delay, but I ardently believe if AMD’s competition had been better, we’d have seen Volta by now, rather than a recycled Maxwell.

Manufacturing reliability is definitely one factor, though, as delays have constantly affected Nvidia’s & AMD’s release dates, both.

It is better in the sense that Sony & Microsoft know they can’t spend 10 years sitting on the PoorStation 4 & the XBLOWER this time, yeah, but otherwise it’s much the same, as even the Scorpio will be outdated in a couple of years, whereas the PC will always be the PC 😛

Yea, back in the days when AMD was actually competitive…

back in the days it wasn’t even AMD. It was ATi.

the 5000 series onward it was amd

Haha, you’re right. I should have simply stated Radeon. 🙂

I remember the 8800 Ultra times, when I could run games better than on 7800GTX in SLI. Now people say that each new NV GPU generation offers a much smaller performance increase, but IMO we still see similarly huge jumps from generation to generation. The only difference comes from marketing and confusing names. Before, the best GPU chip in each generation was sold in the x800 GTX series, but since Kepler the best GPUs are sold in the Titan and Ti series, while the GTX series uses the second or even third chip from the top. If you compare the 980 Ti/Maxwell Titan with the 1080 Ti/Pascal Titan, there is a huge performance difference; many times the best Pascal doubles the performance of the best Maxwell.

Nice post, AdoredTV. The lack of competition is an issue for the customer. This is what happens when monopoly is in da house. The customer always loses. Even though we have a ton of users insulting AMD and wishing it would go bankrupt.

Humans…

Less than 70% of the market is not a monopoly.

AMD needs to make a gpu arch designed for gaming instead of putting compute workhorses into the mainstream segment. Competition and performance leaps would be more fierce. And…PRICE WARS

The 290X and 390X were competitive, but how did that help AMD? And remember the HD 5xxx times, when AMD was leading in performance but Nvidia’s sh*t GTX 260 still outsold it by a lot.

Don’t talk nonsense. You don’t simply gain massive market share overnight. When AMD had the lead with the HD 5k series, they were finally able to topple Nvidia in terms of market share. And when AMD had the lead once more with the 7970, AMD was also slowly gaining market share despite selling the card at a much higher price than Nvidia’s previous-gen flagship. Being competitive in a certain segment is not enough. AMD needs to take the top spot for themselves.

moore’s law is running out.

Prime opportunity for AMD to step up its game and take some market share from Ngreeedia. I am not loyal to any brand. I will switch to any product with the best performance and lowest price – and anything else that helps get me away from anti-consumer companies.

“I will switch to any product with the best performance and lowest price”

lol funny thing is this is why we got this duopoly situation between AMD and nvidia.

“For starters, AMD cannot keep up with NVIDIA. Due to the lack of competition, NVIDIA does not feel the pressure to release a graphics card that will be way, way better than its previous generation offerings.”

Bu-but green team and gameworks!.

But but “monopoly is good”

Chizow the imbecile

“ATI was able to compete and surpass NVIDIA’s graphics cards.”

I miss those days; at least it happened some freaking times. The Radeon 4850 finally took down the 9800GTX, haha. The Radeon 5000 series was better than what Nvidia had.

Then the 7000 series was at least competitive. After that, everything went downhill.

Moore’s law is no longer working. We have hit the limits of making dies smaller and adding more transistors.

Well, when you have NO competition, you can triple your prices and decrease your performance 10 fold. I cannot stand the GPU market. A BYOPC high end is mega expensive now because a decent GPU costs around $800+ whereas 6 years ago it was only $400.

More like everyone bats an eye. But the difference is that AMD tries while Nvidia laughs at us. You choose what’s worse.

This is our fault. Stop being loyal to any one brand.

Tell that to the green team fanboys, only they’ll ignore and defend it easily.

Some depressing home truths from Wee Jock Mcplop especially at the end of the last video.

Jim’s never sugar coated Nvidia, now he’s eventually realized AMD are now just as scuzzy with the price gouging enabled by the acquiescence of fanboys.

AMD has launched competitive tech every generation with the exception of the RX 400 series, where they did not release a halo product because of lagging development.

Jeez, they miss one cycle, and then launch Vega, and suddenly they cannot compete. What BS.

Stupid inaccurate article with even more stupid people believing the article…

Here is the more accurate chronology

GTX 285 -> GTX 480 -> GTX 680 -> GTX 780 Ti -> GTX 980 Ti -> GTX 1080 Ti, and heck, arguably I should cut the GTX 680 from that list, so it jumped from Fermi to big Kepler.

Now do your math and homework; it’s still in the region of a +70% performance increase per true generation of chips.

So yeah, this is AMD’s fault for not being able to compete, so NV is toying with the customers by only releasing smaller parts of the real max performance of the architecture.

You forgot about the GTX 580…. ^^

GTX 285 -> GTX 480 -> GTX 580 -> GTX 680 … etc…. ^^

Nope… It’s precisely why I said what I said.

G80 (8800gtx) -> whatever the codename that was gtx 285 and it’s cousins -> fermi (gtx480) -> real kepler (gtx780ti) -> real maxwell (gtx980ti) -> real pascal (gtx1080ti)

Everything else wasn’t a real new generation/architecture and wasn’t a real flagship.

Example, gtx1080 to gtx1080ti is basically what geforce 4MX was to geforce 4ti4600.

Yeah, if you bought a gtx1080, you are essentially paying a high end price for what is actually a mid end product at best.

GTX 1080. The only reason I left AMD/Radeon. Is it worth it? I will tell you that Rift 2.0 VR, supersampled, is AWESOME! 4K in the majority of games to date is AWESOME. When will I upgrade? When 4K VR is out and the GTX 1080 can’t keep up. I won’t care if it’s Nvidia or AMD, but as per usual, it will depend on the metric most reviewers never tell you. And that is… HOW long will it be before another card comes out and beats it by 50% again (comparing an OC score to a stock score)? That metric has never failed me. For a YEAR, nothing but Titans even compared for price/performance. Best value in a video card since the GeForce 256 smoked Voodoo Banshees in SLI.

Nturdia ftw!

If the next-gen upgrade is only 30% faster than what you already have, wait for the follow-up gen. I know plenty of gamers who are happy with their GTX 960 and GTX 970 cards, since the 1060 and 1070 aren’t that much of an upgrade. I speak to these guys quite often and not one of them complains about a game not running right.
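
For what it’s worth, the “wait for the follow-up gen” logic is just compounding. A minimal sketch, assuming a flat 30% gain per generation (that figure is only the hypothetical from the comment above, and `cumulative_gain` is a made-up helper name, not anything from the videos):

```python
def cumulative_gain(per_gen_gain: float, generations: int) -> float:
    """Total fractional speedup after compounding a per-generation gain."""
    return (1.0 + per_gen_gain) ** generations - 1.0


# Assumed flat 30% uplift per generation (hypothetical, not measured data).
one_gen = cumulative_gain(0.30, 1)
two_gens = cumulative_gain(0.30, 2)

print(f"upgrade every gen: +{one_gen:.0%}")
print(f"skip one gen:      +{two_gens:.0%}")
```

Under that assumption, skipping a generation gets you roughly a 69% jump instead of 30%, which is close to what a single upgrade used to deliver.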

Sure…if you ignore the increase from Maxwell to Pascal…who wrote this garbage?

Who believes that 50% increase in performance is sustainable year over year? Nobody including the attention ho at Adored. What a dumb article.

Then how does he explain the 30/40% boost the 1080ti alone already had.

This site is pretty much green team by now, so it’s pointless trying to explain anything wrong with NV and what they do, it’s just a waste of time.

The GTX 980 is as fast as the GTX 1060.

GTX 980 launch price: $549

GTX 1060 launch price: $249

baaaaad nvidia, baaaaaad lol
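
Putting numbers on that: a rough sketch using only the two launch prices quoted above, and taking the “as fast as” claim at face value (`relative_perf = 1.0` is that assumption, and the function name is made up for illustration):

```python
GTX980_LAUNCH_USD = 549.0   # launch price quoted in the comment above
GTX1060_LAUNCH_USD = 249.0  # launch price quoted in the comment above


def perf_per_dollar_ratio(old_price: float, new_price: float,
                          relative_perf: float = 1.0) -> float:
    """How many times more performance per dollar the new card offers.

    relative_perf is new-card performance divided by old-card performance;
    1.0 assumes the two cards are equally fast, as the comment claims.
    """
    return relative_perf * old_price / new_price


ratio = perf_per_dollar_ratio(GTX980_LAUNCH_USD, GTX1060_LAUNCH_USD)
price_cut = 1.0 - GTX1060_LAUNCH_USD / GTX980_LAUNCH_USD

print(f"perf per dollar: {ratio:.2f}x")
print(f"price cut for the same performance: {price_cut:.0%}")
```

So if the two really are equal in performance, that is about 2.2x the performance per dollar one generation later, or roughly a 55% price cut for the same speed.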

It’s going to be that way when the devs keep putting focus on those lower-end consoles, while NV puts focus on denting their highest-end GPUs in terms of performance/visual fidelity.

Don’t let the fans hear that though.

Let’s also remember how they re-released the 980ti and called it a 1070 but at a cheaper price.

> The reason behind this decrease is quite obvious.

I don’t think it’s obvious at all, actually. Chip manufacturers are starting to approach the minimum possible transistor sizes. It’s not quite there yet, but it is getting increasingly difficult to make them any smaller, and we’re already to the point where they have to factor in quantum effects during manufacturing.

Also, gamers are no longer the sole driving force behind GPU development. Research involving deep learning and neural networks is huge, and NVIDIA is taking those researchers seriously as customers, which means putting more effort into improving GPUs for them; gamers may not see a huge difference between the 9xx and 10xx lines of GPUs, but there were some much more significant changes for developers using CUDA on massively parallel build farms.

And there are probably more reasons I’m unaware of that contribute to it, but I don’t think “NVIDIA is lazy” is one of them.

I miss 3Dfx.

BF1 does have a nice lead with Vega and the Polaris cards, but it’s only one game. If the review watcher is like me (I own it, but my brother is the one playing it)… I mostly play For Honor online, for example, so when I’m deciding, I’ll say GTX 1070. I get that the 56 is faster, but not “compelling argument unless I am fond of AMD products” faster. Not to mention that the AAA titles sponsored by Nvidia more than make up for it.

Sorry, I’m not talking about Nvidia specifically here. Nvidia GameWorks is just one example of what developers use to add an extra bit to the game and make it “different” from the console version. My point is more about PC gamers themselves, who are hard to please when it comes to game performance and visual effects.

Well, those who pay more expect more performance out of the game; I don’t think it’s unfair to expect that outcome. Devs are supposed to optimise the game in general, rather than cobbling it together and demanding everyone buy a GTX 980/1080 just to run it well, while they get it running on the consoles at the same time with lesser hardware.

Visual effects are also there to take advantage of the extra power, just like how the pro/X1X will take advantage by upping their visual end.