Videocardz has leaked the specifications of three cards based on the next-gen Ampere GPU architecture: the GeForce RTX 3090, GeForce RTX 3080, and GeForce RTX 3070.

According to Videocardz’s sources, Nvidia will initially launch three SKUs in September: RTX 3090 24 GB, RTX 3080 10 GB and RTX 3070 8 GB. All three cards will be announced on the 1st of September during the GeForce live digital event.

The AIB partners are also preparing a second variant of the RTX 3080 with twice the memory (20 GB), but this SKU will launch at a later date. The product has yet to be announced, but a 20 GB model is almost certainly in the works. The GeForce RTX 3090 and GeForce RTX 3080 will be the first cards to hit retail shelves, with a hard launch planned for mid-September.

According to this leak from Videocardz, the GeForce RTX 30 series cards will feature 2nd-generation ray tracing (RT) cores and 3rd-generation Tensor cores. The GeForce RTX 30 lineup will also support HDMI 2.1 and DisplayPort 1.4a for display output, and will pack a brand new NVLink connector. These new cards will also support the PCI Express 4.0 interface.

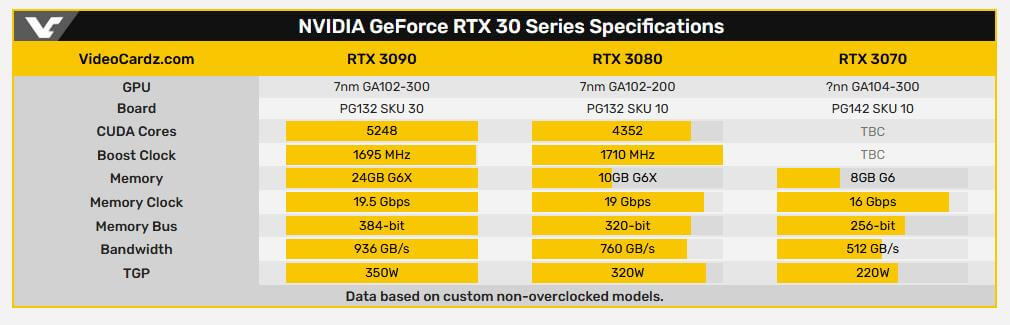

Coming to the specs, the GeForce RTX 3090 will feature the GA102-300 GPU with 5248 cores and 24 GB of GDDR6X memory across a 384-bit wide bus, giving a maximum theoretical bandwidth of 936 GB/s, or just under 1 TB/s (a figure that corresponds to a 19.5 Gbps memory data rate).

Most importantly, according to Videocardz’s sources, the custom AIB cards will use dual 8-pin power connectors because this flagship card has a TGP (total graphics power) of 350W. The RTX 3090 represents a significant increase in core count, roughly 20%, over the RTX 2080 Ti, which featured 4352 CUDA cores. The GA102-300 GPU will have a boost clock frequency of 1695 MHz.
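To see why dual 8-pin connectors are enough for a 350W card, here is a rough sketch of the power budget. It assumes the standard PCI Express limits of 75W from the x16 slot and 150W per 8-pin connector; the constant and function names are ours, purely for illustration, and actual board designs may leave different margins.

```python
# Assumed in-spec power limits (PCI Express): x16 slot and 8-pin auxiliary connector.
PCIE_SLOT_W = 75
PCIE_8PIN_W = 150


def board_power_budget(num_8pin: int) -> int:
    """Maximum in-spec power (watts) for a card with `num_8pin` 8-pin connectors."""
    return PCIE_SLOT_W + num_8pin * PCIE_8PIN_W


def headroom(tgp_w: int, num_8pin: int) -> int:
    """Watts left over after the card's TGP; negative means the budget is too small."""
    return board_power_budget(num_8pin) - tgp_w


if __name__ == "__main__":
    tgp = 350  # leaked RTX 3090 TGP
    for connectors in (1, 2):
        print(f"{connectors} x 8-pin: budget {board_power_budget(connectors)} W, "
              f"headroom {headroom(tgp, connectors)} W")
```

Under these assumptions a single 8-pin connector falls well short of 350W, while two connectors cover it with a small margin.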

We don’t yet have any details on the TMU (texture mapping unit) or ROP (raster operations pipeline) counts. The RTX 3080, on the other hand, will feature the GA102-200-KD-A1 GPU, a cut-down SKU with a total of 68 SMs and 4352 CUDA cores, the same count as the RTX 2080 Ti, paired with 10 GB of GDDR6X memory.

The memory speeds for both the RTX 3090 and RTX 3080 are expected to be around 19 Gbps. Assuming the RTX 3080’s memory runs at 19 Gbps across a 320-bit wide bus interface, we can expect a bandwidth of up to 760 GB/s. This model has a TGP of 320W, and the custom AIB models will again require dual 8-pin PCIe connectors. The RTX 3080 will feature a maximum clock speed of 1710 MHz.
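The bandwidth figures above follow from a simple formula: bus width in bits times the per-pin data rate in Gbps, divided by 8 to convert bits to bytes. Here is a minimal sketch of that arithmetic; the function name is our own, and the inputs are the leaked (unconfirmed) numbers.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical memory bandwidth in GB/s.

    Each of the `bus_width_bits` pins transfers `data_rate_gbps` gigabits
    per second; dividing by 8 converts gigabits to gigabytes.
    """
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # RTX 3080: 320-bit bus at 19 Gbps
    print(memory_bandwidth_gbs(320, 19))    # 760.0
    # RTX 3090: 384-bit bus at 19 Gbps vs 19.5 Gbps
    print(memory_bandwidth_gbs(384, 19))    # 912.0
    print(memory_bandwidth_gbs(384, 19.5))  # 936.0
```

Note that the 936 GB/s quoted for the RTX 3090 only works out at 19.5 Gbps; at exactly 19 Gbps the same 384-bit bus would give 912 GB/s.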

The RTX 3070 will also launch at the end of next month. This card is rumored to get 8 GB of GDDR6 memory (the non-X variant) running at 16 Gbps, for a bandwidth of around 512 GB/s, and it will have a TGP of 220W. The GeForce RTX 3070 will feature the GA104-300 GPU core. The CUDA core count is expected to fall between 2944 and 3072, in line with the existing Turing RTX 2080 and RTX 2080 SUPER.
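The leak does not state the RTX 3070's memory bus width, but it can be inferred from the quoted bandwidth and memory speed. The sketch below simply inverts the usual bandwidth formula; the function name is ours, and the result is an inference from the rumored figures, not a confirmed spec.

```python
def implied_bus_width_bits(bandwidth_gbs: float, data_rate_gbps: float) -> float:
    """Bus width (bits) implied by a peak bandwidth (GB/s) and per-pin data rate (Gbps)."""
    return bandwidth_gbs * 8 / data_rate_gbps


if __name__ == "__main__":
    # Rumored RTX 3070 figures: about 512 GB/s at 16 Gbps
    print(implied_bus_width_bits(512, 16))  # 256.0
```

In other words, the rumored numbers are consistent with a 256-bit memory interface.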

Nvidia is using the high-end GA102 GPU for the RTX 3080 as well, which appears to be an upgrade over the TU104 core featured on the RTX 2080. This could also mean that the new Ampere card has higher power and cooling requirements, and that it will fall under the high-end enthusiast segment.

There is also a possibility that the Ampere GeForce RTX 30 series will be utilizing the 7nm process node, though this is yet to be confirmed.

Nvidia is hosting a GeForce Special Event on September 1st, and we expect the company to announce the next-gen Ampere gaming GPUs.

Stay tuned for more!

Hello, my name is Nick Richardson. I’ve been an avid PC and tech fan since the good old days of RIVA TNT2 and 3DFX Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

Music has always been my passion and my roots, but I started gaming “casually” when I was young on Nvidia’s GeForce3 series of cards. I’m by no means a hardcore gamer, but I just love stuff related to the PC, games, and technology in general. I’ve been involved with many indie metal bands worldwide, and have helped them promote their albums to record labels. I’m a very broad-minded, down-to-earth guy. Music is my inner expression and soul.
