At GTC 2017, NVIDIA revealed the first Volta GPU, which is aimed at the very high end of the compute market. And while a gamer/consumer GPU based on Volta most probably won't be coming out until 2018, the green team showcased Square Enix's Kingsglaive: Final Fantasy XV running on it.

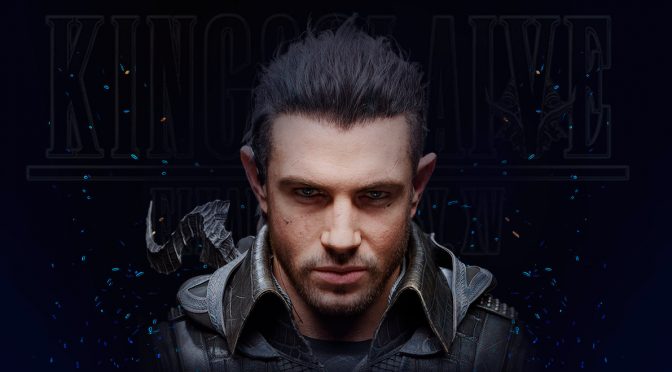

In case you didn’t know, Kingsglaive: Final Fantasy XV is a CG movie based on Final Fantasy XV, and this tech demo features the main protagonist Nyx Ulric.

The fact that this scene runs in real time is simply mind-blowing, even though it features only one detailed character in a somewhat basic environment.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved (and still loves) the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on "The Evolution of PC graphics cards."
