EIDOS Montreal and NIXXES have added DX12 support to the main build of Deus Ex: Mankind Divided. As such, we decided to test the game and see whether this latest DX12 build is able to match the performance of the DX11 build on NVIDIA’s hardware.

As we’ve already said, the benchmark tool of Deus Ex: Mankind Divided is not representative of the in-game performance. Therefore, we decided to test a number of scenes from the game’s city hubs.

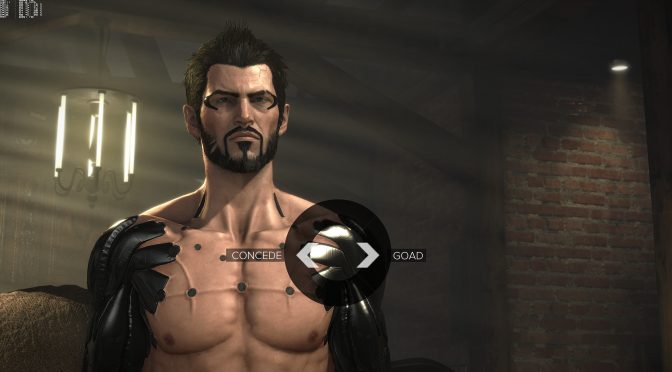

Unfortunately, DX12 still performs poorly on NVIDIA’s hardware. As we can see below, Deus Ex: Mankind Divided was unable to take full advantage of our GTX980Ti in DX12. In DX11, however, the game was running smoothly.

As a result of the underwhelming performance in DX12, the game was unable to offer a constant 60fps experience. Therefore, and once again, we strongly suggest avoiding the game’s DX12 mode if you are gaming on NVIDIA’s hardware.

For our tests, we used an Intel i7 4930K (turbo boosted at 4.2GHz) with 8GB RAM, NVIDIA’s GTX980Ti, Windows 10 64-bit and the latest WHQL version of the GeForce drivers.

DX12 images are on the left, whereas DX11 images are on the right.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
