Tom Clancy’s Ghost Recon Breakpoint is the next part in the Ghost Recon series. Breakpoint is an online tactical shooter that uses the AnvilNext 2.0 Engine. Ubisoft has provided us with a review code, so it’s time to benchmark it and see how it performs on the PC platform.

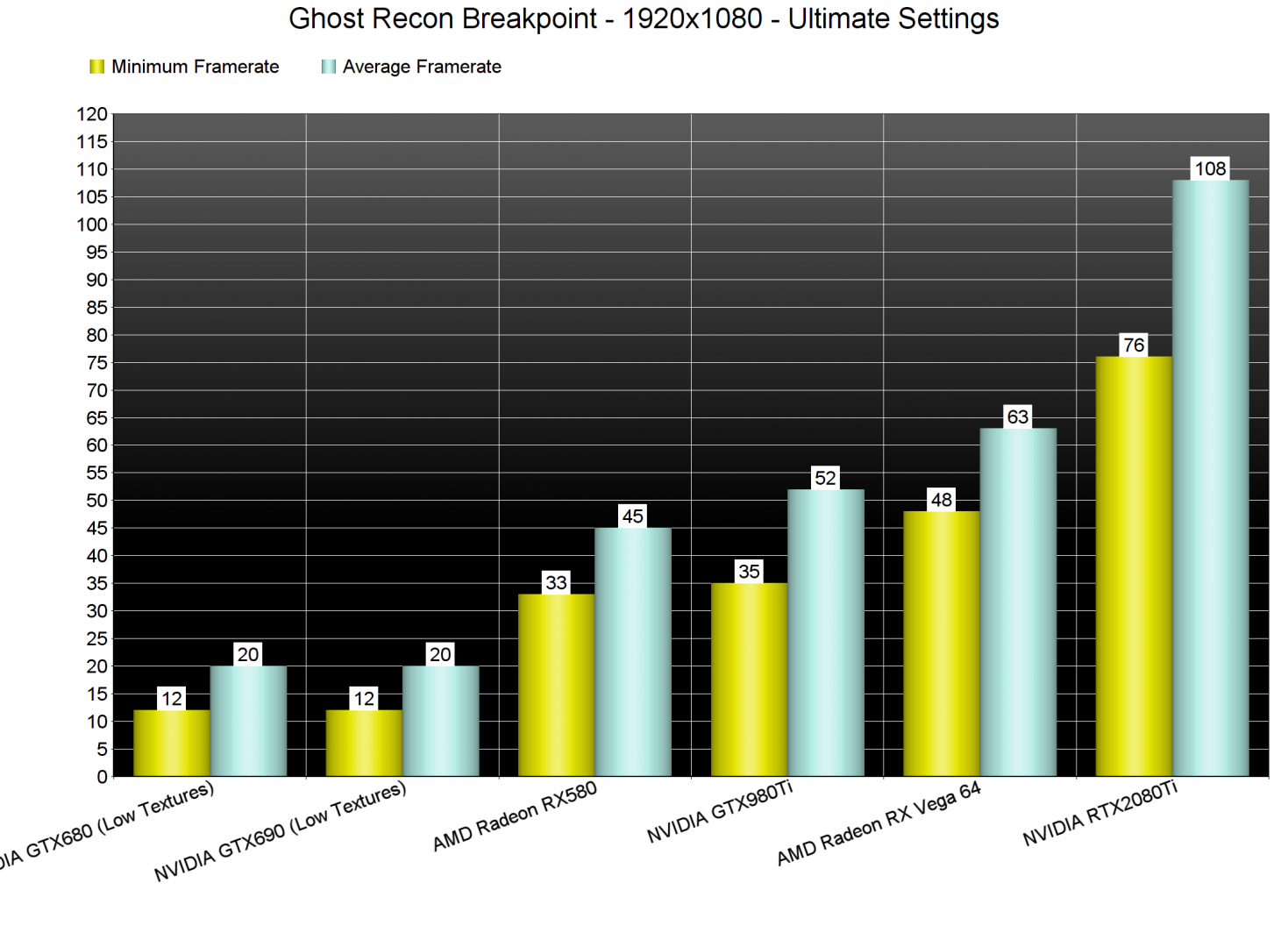

For this PC Performance Analysis, we used an Intel i9 9900K with 16GB of DDR4 at 3600MHz, AMD’s Radeon RX580 and RX Vega 64, NVIDIA’s RTX 2080Ti, GTX980Ti and GTX690. We also used Windows 10 64-bit, the GeForce driver 436.48 and the Radeon Software Adrenalin 2019 Edition 19.9.3 drivers. NVIDIA has not included any SLI profile for this title, meaning that our GTX690 performed similarly to a single GTX680.

Ubisoft has implemented a respectable number of graphics settings. PC gamers can adjust the quality of Ambient Occlusion, Level of Detail, Textures, Anisotropic Filtering, Screen Space Shadows, Terrain and Grass. There are also options for Screen Space Reflections, Sun Shadows, Subsurface Scattering, Long Range Shadows and Volumetric Fog. Ubisoft has also added a FOV slider, a resolution scaler, Image Sharpening, support for FidelityFX and more.

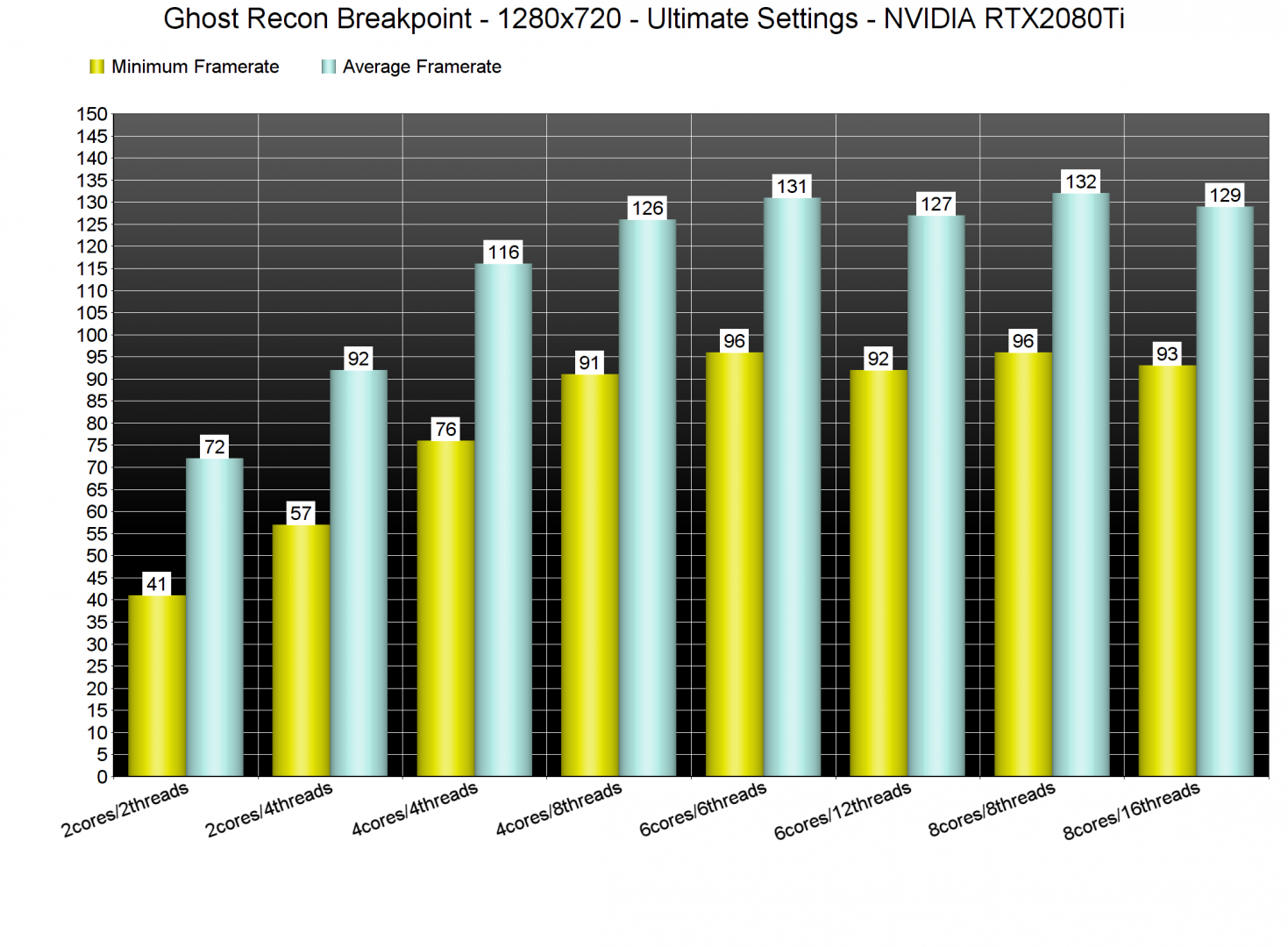

In order to find out how the game scales on multiple CPU threads, we simulated a dual-core, a quad-core and a hexa-core CPU. For both our CPU and GPU tests, we used the built-in benchmark tool as it’s representative of the in-game performance. For our CPU tests, we also reduced our resolution to 1280×720 (so we could avoid any possible GPU bottlenecks).

Surprisingly enough, Ghost Recon Breakpoint can run smoothly even on a modern-day dual-core system. Our simulated dual-core was able to push a minimum of 41fps and an average of 72fps. When we enabled Hyper-Threading, our minimum framerate went up to 57fps and our average climbed to 92fps. Furthermore, Ghost Recon Breakpoint appears to be mainly using 6 CPU cores/threads.
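To put the Hyper-Threading numbers into perspective, here is a minimal sketch that turns the figures above into percentage gains. The function and variable names are our own for illustration; only the four framerates come from our benchmark run.

```python
def percent_gain(before_fps: float, after_fps: float) -> float:
    """Relative framerate improvement, in percent."""
    return (after_fps - before_fps) / before_fps * 100.0


# Framerates from our simulated dual-core run (built-in benchmark, 1280x720).
dual_core = {"min": 41, "avg": 72}
dual_core_ht = {"min": 57, "avg": 92}  # same setup with Hyper-Threading enabled

gains = {
    metric: round(percent_gain(dual_core[metric], dual_core_ht[metric]), 1)
    for metric in dual_core
}
print(gains)  # {'min': 39.0, 'avg': 27.8}
```

In other words, Hyper-Threading helped the minimum framerate (roughly 39%) more than the average (roughly 28%) on our simulated dual-core system.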

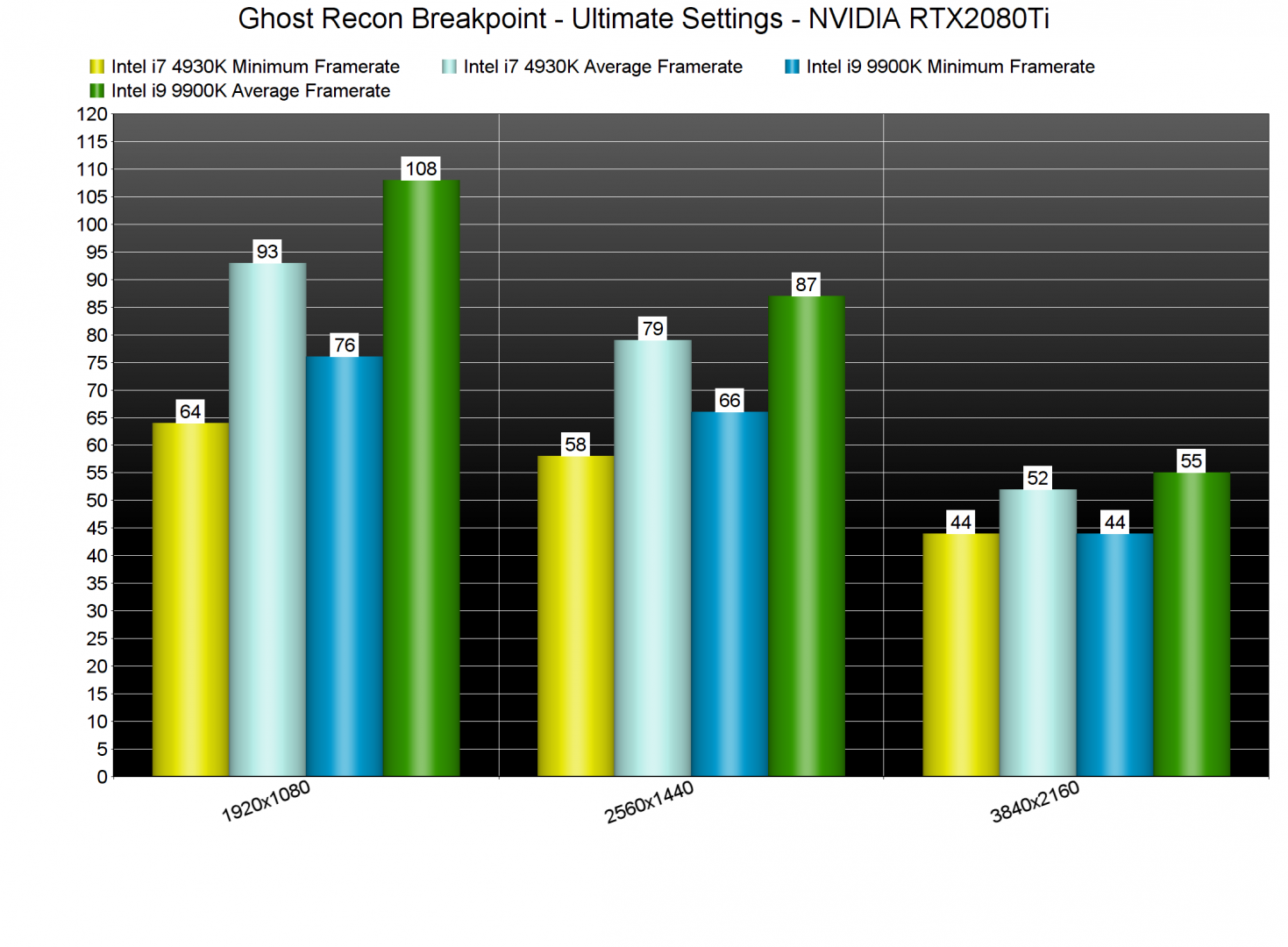

What’s also interesting here is that the game runs at a constant 60fps even on older CPUs. Our Intel i7 4930K was able to push a minimum of 64fps and an average of 93fps. At both 1080p and 1440p, our NVIDIA RTX2080Ti was not used to its fullest. Still, the game was running smoothly on Ultimate settings.

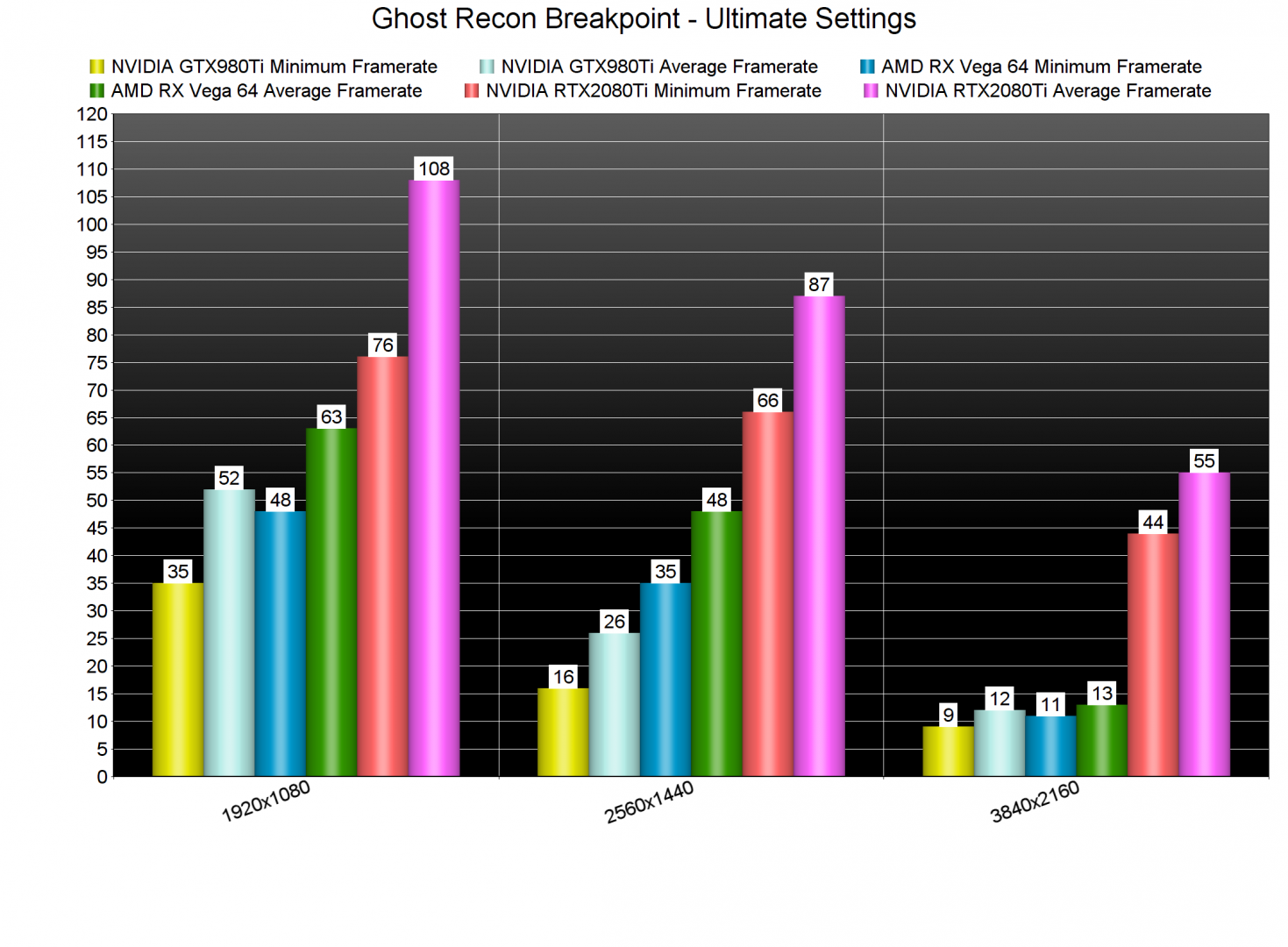

Ghost Recon Breakpoint comes with six presets: Low, Medium, High, Very High, Ultra and Ultimate. At 1080p and on Ultimate settings, the only GPU that was able to offer a constant 60fps experience was the RTX2080Ti. The AMD Radeon RX Vega 64 dropped below 50fps during some scenes, and averaged 63fps.

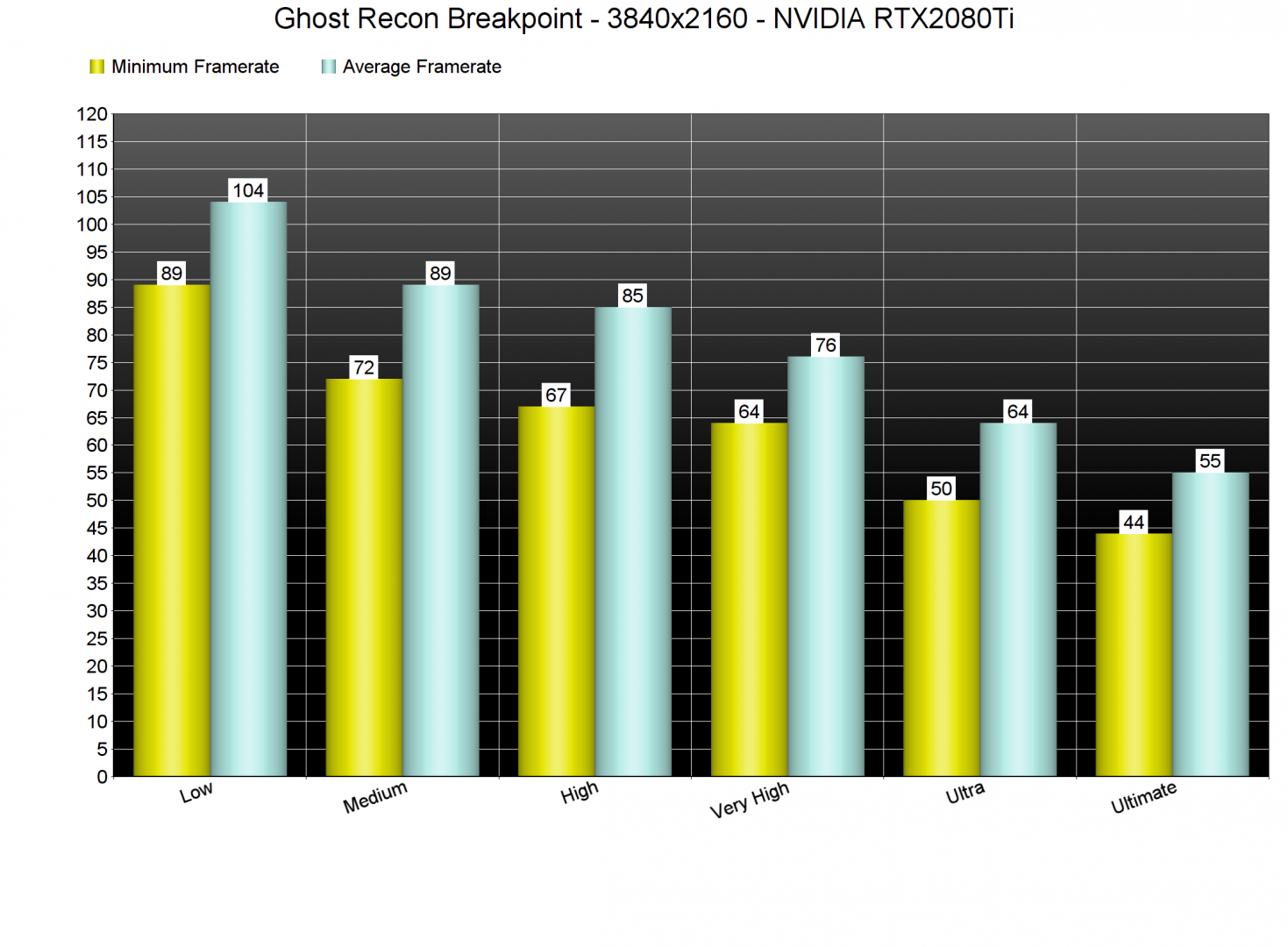

At 1440p, our GTX980Ti was limited by its VRAM and under-performed. Once again, the only GPU that was able to run the game smoothly was the RTX2080Ti. As for 4K, both the GTX980Ti and AMD Radeon RX Vega 64 were VRAM-limited. On the other hand, the RTX2080Ti was able to push a minimum of 44fps and an average of 55fps.

Now the good news here is that the game can scale on less powerful GPUs. By lowering our resolution scaler to 80%, we were able to run the game smoothly in 4K and on Ultimate settings on the RTX2080Ti. In native 4K, we also came close to a smooth gaming experience on Ultra settings on the RTX2080Ti. And as we’ve already reported, this final version runs significantly better than the closed beta build.
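For those wondering what an 80% resolution scale means in practice, here is a rough sketch of the arithmetic. It assumes the scaler applies to each axis (width and height) rather than to the total pixel count; we have not confirmed how the game applies it internally, and the function name is purely illustrative.

```python
def scaled_resolution(width: int, height: int, scale_percent: int) -> tuple[int, int]:
    """Internal render resolution, ASSUMING the scaler is applied per axis."""
    return width * scale_percent // 100, height * scale_percent // 100


native_w, native_h = 3840, 2160  # 4K output resolution
render_w, render_h = scaled_resolution(native_w, native_h, 80)

# Share of the native pixel count that the GPU actually has to render.
pixel_share = (render_w * render_h) / (native_w * native_h)

print(render_w, render_h)     # 3072 1728
print(round(pixel_share, 2))  # 0.64
```

Under that assumption, the GPU renders about 64% of the pixels of native 4K, which would explain why such a modest drop on the slider has a noticeable effect on the framerate.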

Graphics-wise, Ghost Recon Breakpoint looks absolutely stunning. Its environments are among the best we’ve seen in a triple-A game. Moreover, the game features some cool weather/wind effects and the grass bends as you walk through it. Ubisoft has used photogrammetry and all of the textures look incredible. The only downside here is the character models, which are not as mind-blowing as what COD or Battlefield currently offer. Still, Ghost Recon Breakpoint is one of the best-looking games of 2019.

All in all, Ghost Recon Breakpoint performs great on the PC platform. The game looks and runs better than Assassin’s Creed: Odyssey, Ghost Recon: Wildlands and Watch_Dogs 2. Still, we did notice some rare stuttering issues, so here is hoping that Ubisoft will be able to resolve them via a post-launch update. Other than that, Ghost Recon Breakpoint’s PC version is actually pretty solid. It has a lot of graphics settings, it can scale on older hardware, and it does not suffer from mouse acceleration/smoothing issues. Kudos to Ubisoft for delivering such a polished PC product, though let’s hope that it will reduce the game’s micro-transactions after the backlash.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
