Earlier this week, Sony released Ratchet & Clank: Rift Apart on PC. The game is powered by Insomniac’s in-house engine and was ported by Nixxes, so it’s time to benchmark it and examine its performance on PC.

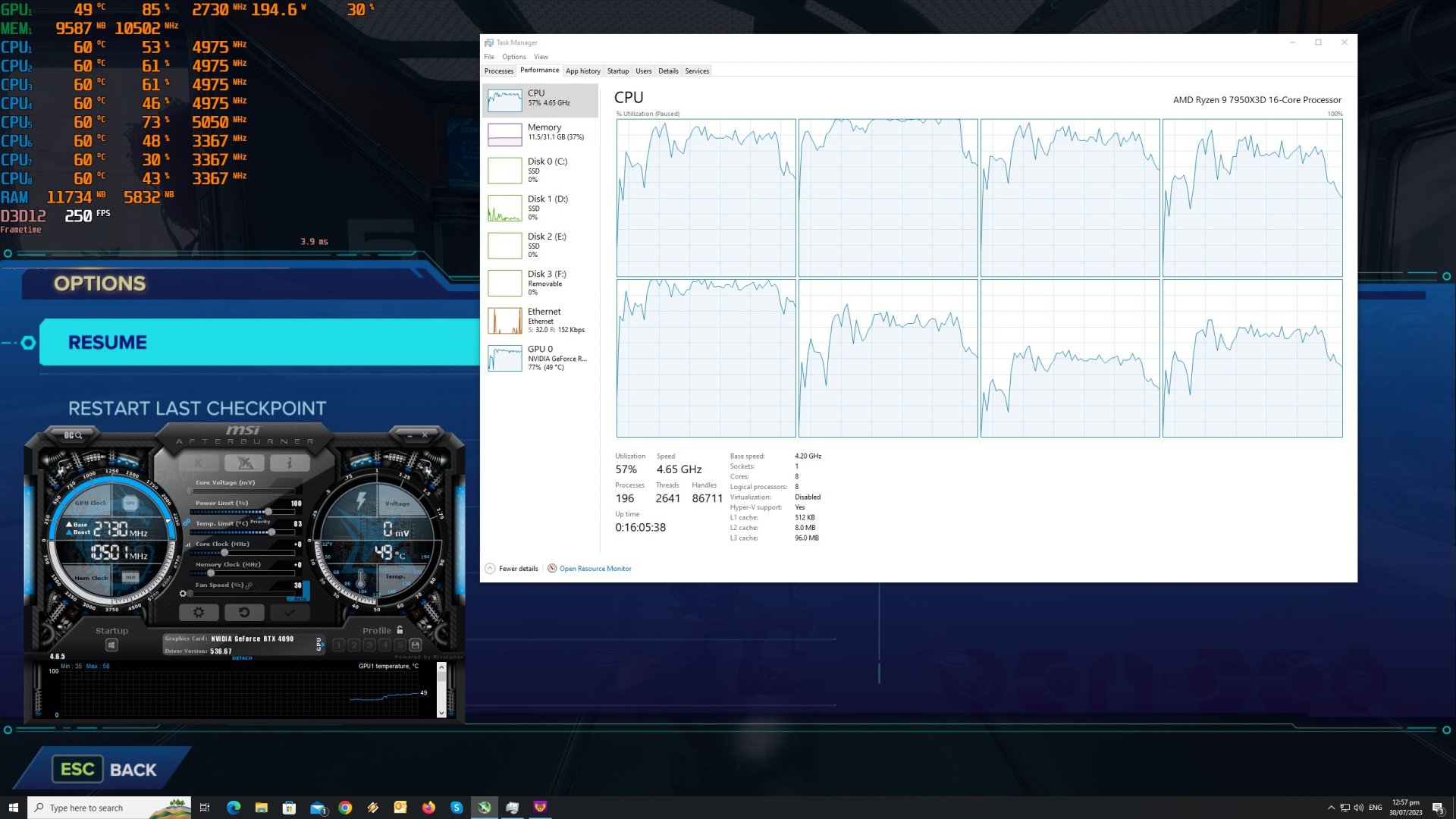

For our Ratchet & Clank: Rift Apart PC Performance Analysis, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, AMD’s Radeon RX 580, RX Vega 64, RX 6900 XT and RX 7900 XTX, and NVIDIA’s GTX 980 Ti, RTX 2080 Ti, RTX 3080 and RTX 4090. We also used Windows 10 64-bit, the GeForce 536.67 and the Radeon Software Adrenalin 2020 Edition 23.7.1 drivers. Finally, we disabled the second CCD on our 7950X3D.

Nixxes has included plenty of graphics settings to tweak. PC gamers can adjust the quality of Textures, Shadows, Reflections, Hair, Level of Detail and more. As we’ve already reported, Rift Apart also supports Ray Tracing effects on PC, which Nixxes uses to enhance the game’s reflections, shadows and ambient occlusion. Additionally, Rift Apart supports all major PC upscaling techniques (DLSS 2/3, FSR 2 and XeSS).

Ratchet & Clank: Rift Apart does not feature a built-in benchmark tool. As such, for both our CPU and GPU benchmarks, we used the following scene. This scene features a lot of NPCs and enemies on-screen, and it lets you use some portals, so it should give us a pretty good idea of how the rest of the game runs. We’ve also disabled the game’s RT effects (you can find our DLSS 3 and Ray Tracing benchmarks here). Additionally, we enabled DLSS 2 Quality for our CPU benchmarks to avoid any potential GPU bottleneck.

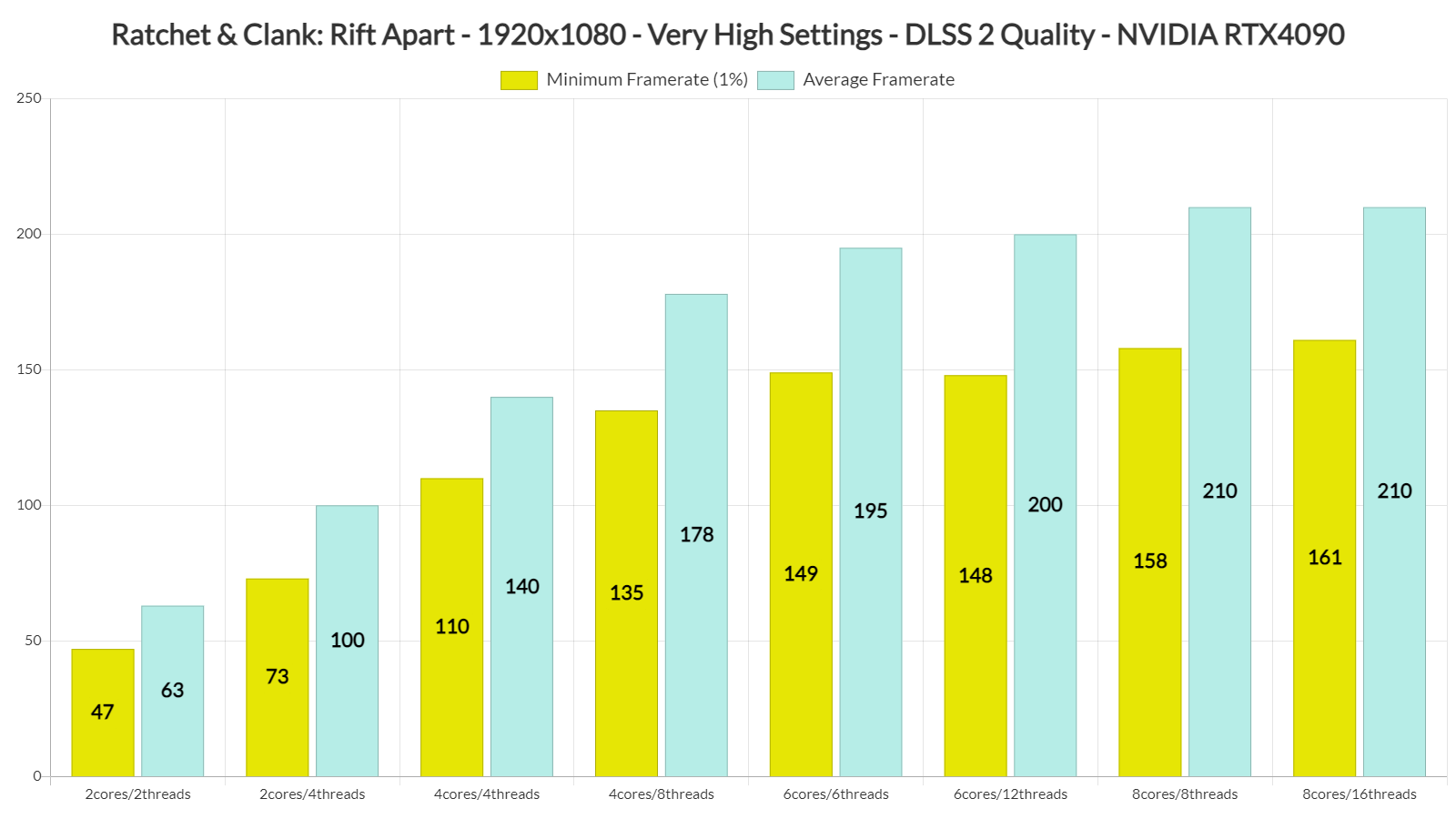

To find out how the game scales across multiple CPU threads, we simulated a dual-core, a quad-core and a hexa-core CPU. Surprisingly, even our simulated dual-core system was able to deliver an enjoyable experience at 1080p/Very High Settings/No Ray Tracing. That was with SMT/Hyper-Threading enabled. The game also scales up to eight CPU cores.

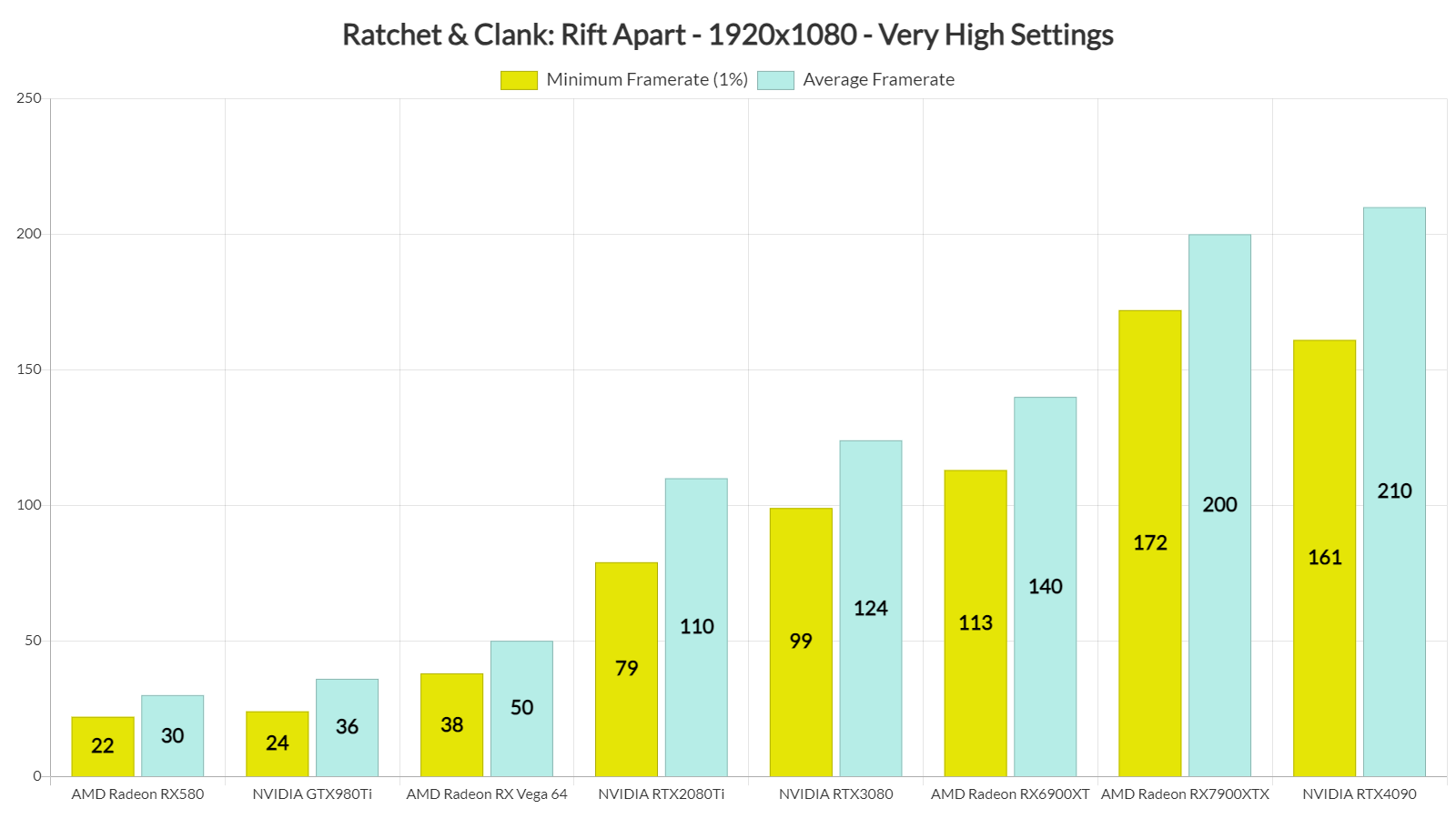

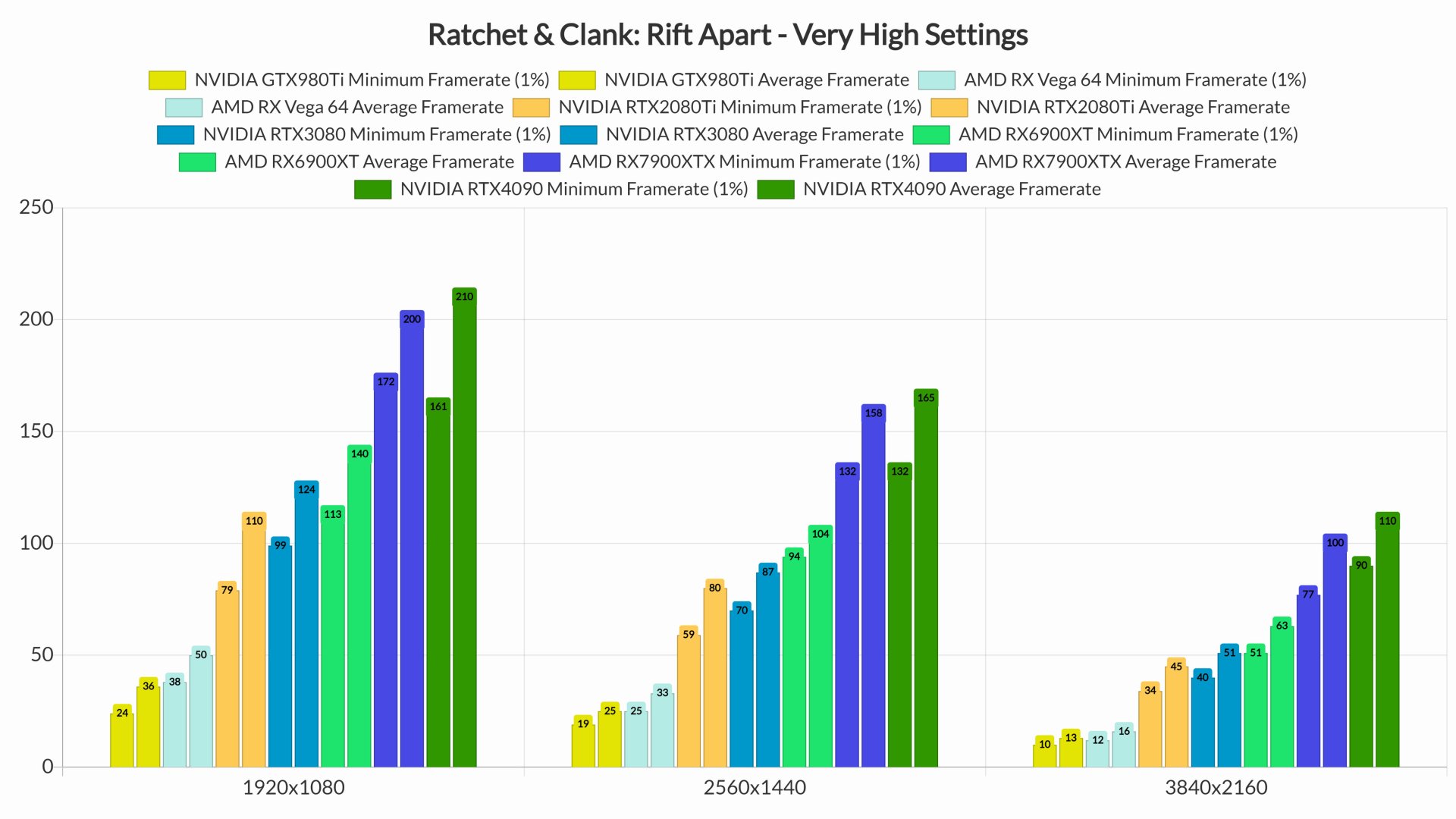

Our top five GPUs had no trouble pushing framerates higher than 60fps at 1080p/Very High Settings. As we can also see, Rift Apart performs incredibly well on AMD’s GPUs. And while our average framerate on the RTX 4090 was higher, the AMD RX 7900 XTX was able to beat NVIDIA’s high-end GPU in minimum framerates.
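Average and minimum framerates can rank two GPUs differently because they summarize different things: one rewards overall throughput, the other punishes the single worst moment. The Python sketch below is purely illustrative. It assumes a per-frame log of frame times in milliseconds, it treats "minimum" as the single slowest frame (many capture tools report 1% lows instead), and the helper names `average_fps` and `minimum_fps` are hypothetical, not the tool we used for these benchmarks. The two runs are made-up numbers, not our results.

```python
from typing import Sequence


def average_fps(frame_times_ms: Sequence[float]) -> float:
    """Average framerate over a run: frames rendered divided by elapsed seconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    elapsed_s = sum(frame_times_ms) / 1000.0  # total run length in seconds
    return len(frame_times_ms) / elapsed_s


def minimum_fps(frame_times_ms: Sequence[float]) -> float:
    """Minimum framerate, assumed here to be the single slowest (longest) frame."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    return 1000.0 / max(frame_times_ms)


# Two synthetic (made-up) runs of 100 frames each, for illustration only:
# run_a is mostly fast but has one 40 ms hitch; run_b is slower but perfectly even.
run_a = [5.0] * 99 + [40.0]
run_b = [8.0] * 100

print(f"A: avg {average_fps(run_a):.1f} fps, min {minimum_fps(run_a):.1f} fps")
print(f"B: avg {average_fps(run_b):.1f} fps, min {minimum_fps(run_b):.1f} fps")
```

Run A wins on average framerate while run B wins on minimum framerate, which is the same kind of split that a single hitch can produce between two otherwise fast GPUs.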

At 1440p/Very High Settings, our top five GPUs were still capable of offering a smooth gaming experience. Again, it’s pretty incredible how close the RX 7900 XTX actually is to the RTX 4090. As for native 4K/Very High, the only GPUs that could run the game at over 60fps were the NVIDIA RTX 4090 and the AMD RX 7900 XTX.

Graphics-wise, even without its RT effects, Ratchet & Clank: Rift Apart looks great on PC. This is an incredibly good-looking game, pushing some highly detailed characters on screen. Players can also break a lot of objects, with debris and particles flying all over the screen. It’s also worth noting that Nixxes has already released a hotfix that resolves some graphical issues present in the launch version.

Overall, we are quite happy with the performance of Ratchet & Clank: Rift Apart on PC. Is it a perfect port? Not quite, as there is still room for improvement. For instance, the game does not appear to be taking full advantage of DirectStorage 1.2. Then there are the frame-pacing issues and stutters we’ve previously mentioned when enabling RT and DLSS 3.

Regardless of these nitpicks, though, the game can already run smoothly on a wide range of PC configurations. It also looks great on PC, especially with the RT improvements that Nixxes has brought to the table. The fact that you can enable its upscaling options to further increase performance is another big bonus. And yes, RTX 40 series owners can use DLSS 3 to enjoy Rift Apart’s Ray Tracing effects!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher-degree thesis on “The Evolution of PC Graphics Cards.”
