During GTC 2018, NVIDIA revealed that Reflections, Epic Games’ amazing real-time raytracing tech demo in Unreal Engine 4, was running on four Tesla V100 GPUs. And while it took four of them to power it, it’s pretty incredible that this demo was running in real-time at a smooth framerate.

Furthermore, NVIDIA revealed that its RTX technology will be accessible through both Microsoft’s DirectX Raytracing (DXR) API and the Vulkan API.

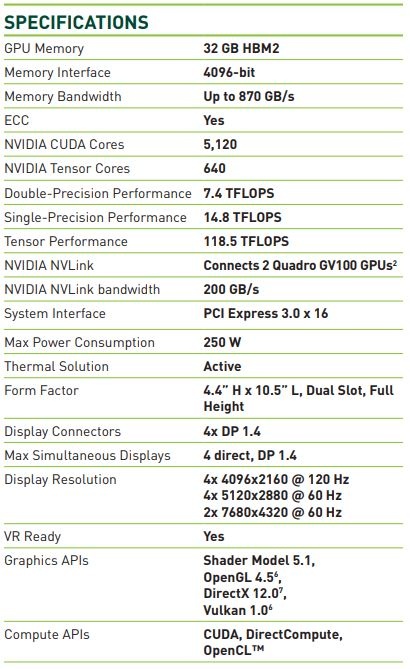

Last but not least, the green team revealed its new graphics card targeted at workstations, the NVIDIA Quadro GV100. It features 32GB of HBM2 memory, 5120 CUDA cores, and Tensor Cores rated at 118 Teraflops.

Oh, and in case you’re wondering… no word yet about the rumoured GTX 1180 or GTX 2080 GPUs.

Edit: Here are the full specifications for the NVIDIA Quadro GV100 (thanks Metal Messiah).

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
