As you may already know by now, NVIDIA’s DLSS (Deep Learning Super Sampling) is an AI rendering technology that uses the dedicated Tensor Core AI processors on GeForce RTX GPUs to take visual fidelity to a whole new level. DLSS taps into the power of a deep learning neural network to boost frame rates and generate beautiful, sharp images for your games.

You even get some performance headroom to max out ray tracing settings and increase output resolution. The Tensor Cores upscale lower-resolution render buffers to the desired ‘native’ scale, and while upscaling, DLSS also performs anti-aliasing. The performance gain comes from rendering the scene and running post-processing at a reduced input resolution.

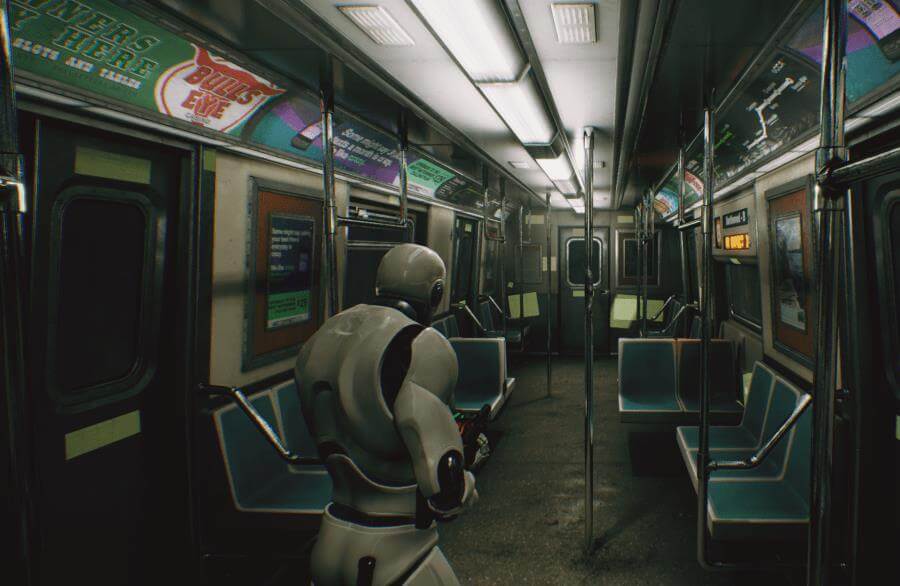

Recently, Tom Looman, a game developer working with Unreal Engine, shared his thoughts and findings on how easily DLSS 2.0 can be integrated into Unreal Engine 4.26, and on the performance and quality benefits it brings to the table. To test the feature, Tom Looman used the open-source SurvivalGame (available on GitHub) and Dekogon Studios’ City Subway Train assets.

The developer shared several examples in a recent blog post. Nvidia provided Tom with an RTX GPU and access to the Unreal Engine RTX/DLSS branch on GitHub.

So how do you get DLSS running in an Unreal Engine project? The integration with Unreal Engine 4.26 is pretty simple. For now, you need an AppID from Nvidia (you must apply on NVIDIA’s website to receive one), along with the custom engine modifications on GitHub, to enable the feature.

Once you compile your project with the special UE4 RTX branch, you apply your AppID. You can then choose between five DLSS modes: Ultra Performance, Performance, Balanced, Quality, and Ultra Quality. There is also an option to sharpen the output.

The baseline for the tests was TXAA, the anti-aliasing technique used in the game demo. The internal resolution is scaled down automatically according to the selected DLSS mode.

According to Tom, when you select Ultra Performance mode on a 4K display, the internal resolution can drop to 33% of the output resolution on each axis, a huge saving for screen-space operations such as pixel shaders, post-processing, and ray tracing/RTX in particular.
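As a rough illustration of how much work each mode saves, the Python sketch below computes the internal render resolution and the share of output pixels actually shaded. The per-axis scale factors are our assumption (the commonly cited DLSS 2.x defaults of about 66.7%, 58%, 50% and 33.3%), not values read from the UE4 RTX branch; Ultra Quality is left out because no factor is given for it here.

```python
# Assumed per-axis render scale factors for DLSS 2.x modes (commonly cited
# defaults, NOT values taken from the UE4 RTX branch itself).
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Return the internal render resolution for a given per-axis scale."""
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(scale: float) -> float:
    """Fraction of output pixels actually shaded; the scale applies to both axes."""
    return scale * scale


if __name__ == "__main__":
    # Print the internal resolution of each mode for a 4K (3840x2160) output.
    for mode, scale in DLSS_SCALE.items():
        w, h = internal_resolution(3840, 2160, scale)
        print(f"{mode:>17}: {w}x{h} ({pixel_fraction(scale):.0%} of 4K pixels)")
```

Under these assumptions, Ultra Performance at 4K renders 1280×720, roughly 11% of the output pixels, which is why per-pixel work such as ray tracing shrinks so dramatically.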

In Unreal Engine, the default anti-aliasing solution is TXAA; FXAA is also supported, albeit with subpar results. Overall, DLSS 2.0 offered a 60-180% increase in frame rate, depending on the scene and mode. Below you can find some screenshots showing the visual difference between DLSS and TXAA.

As you can see in the first comparison scene, the trees look crisper with DLSS than with native TXAA. Cables also hold up incredibly well, although they look slightly harsh in places.

In the second comparison scene, according to Tom’s testing, both offer almost identical quality. As the developer noted, there is a slight error in the ceiling lights, where a white line in the original texture gets blown out by the DLSS algorithm, causing a noticeable stripe. However, this should be fixed in the source texture instead.

The first image is DLSS, compared against the 1440p TXAA setting.

In the next scene, the reflections look slightly different. This scene uses ray-traced reflections on highly reflective materials.

Below you can find a video comparing the quality and performance of DLSS 2.0 and TXAA. The developer used three AA modes (TXAA, DLSS Quality, and DLSS Performance) to show the difference.

Speaking of FPS numbers, in the first image from the Forest Scene, which was likely bottlenecked by the ray-traced reflections, DLSS Quality mode delivered 56 FPS, a decent gain over the TXAA baseline of only 35 FPS. This is with RTX on, at a resolution of 2560×1440.

These are the scores as posted by Tom:

- TXAA Baseline ~35 FPS

- DLSS Quality ~56 FPS (+60%)

- DLSS Balanced ~65 FPS (+85%)

- DLSS Performance ~75 FPS (+114%)

- DLSS Ultra Performance ~98 FPS (+180%?! – Noticeably blurry on 1440p, intended for 4K)
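Tom’s percentages can be reproduced from the FPS figures with a simple formula, gain = (DLSS FPS / baseline FPS - 1) × 100. A small Python sketch using the approximate Forest Scene numbers listed above:

```python
def percent_gain(baseline_fps: float, fps: float) -> float:
    """Frame-rate increase over the baseline, in percent."""
    return (fps / baseline_fps - 1.0) * 100.0


# Approximate Forest Scene results quoted above (RTX on, 2560x1440).
FOREST = {
    "TXAA": 35,
    "DLSS Quality": 56,
    "DLSS Balanced": 65,
    "DLSS Performance": 75,
    "DLSS Ultra Performance": 98,
}

if __name__ == "__main__":
    base = FOREST["TXAA"]
    for mode, fps in FOREST.items():
        if mode != "TXAA":  # skip the baseline itself
            print(f"{mode}: +{percent_gain(base, fps):.0f}%")
```

This gives +60%, +86%, +114% and +180%; the Balanced figure of +85% listed above is the same result rounded down. Since the inputs are approximate (~) readings, the percentages are approximate too.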

The next comparison is for the Subway Train scene with RTX on, at 2560×1440. These are the scores:

- TXAA Baseline ~38 FPS

- DLSS Quality ~69 FPS (+82%)

- DLSS Performance ~100 FPS (+163%)

Lastly, we have the Subway Train scene with RTX off, at 2560×1440. Here the performance difference between internal resolutions shrinks considerably: DLSS Quality and DLSS Performance end up within a few FPS of each other.

- TXAA Baseline ~99 FPS

- DLSS Quality ~158 FPS (+60%)

- DLSS Performance ~164 FPS (+65%)
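Frame rates hide how much time is actually saved, so it can help to convert them to milliseconds per frame (1000 / FPS). The sketch below, using the approximate Subway Train figures above, compares the step from DLSS Quality to DLSS Performance with RTX on and off:

```python
def frame_time_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


# Approximate Subway Train results quoted above (2560x1440).
SUBWAY = {
    "RTX on": {"TXAA": 38, "DLSS Quality": 69, "DLSS Performance": 100},
    "RTX off": {"TXAA": 99, "DLSS Quality": 158, "DLSS Performance": 164},
}

if __name__ == "__main__":
    for config, results in SUBWAY.items():
        q = frame_time_ms(results["DLSS Quality"])
        p = frame_time_ms(results["DLSS Performance"])
        # Time saved per frame by dropping from Quality to Performance resolution.
        print(f"{config}: Quality {q:.2f} ms, Performance {p:.2f} ms, "
              f"saved {q - p:.2f} ms")
```

With RTX on, moving from Quality to Performance saves about 4.5 ms per frame; with RTX off it saves only about 0.23 ms. That is consistent with the observation above: once ray tracing is disabled, most of the remaining frame time apparently no longer scales with internal resolution.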

As for the DLSS “render pass”, which runs during post-processing much like traditional AA, upscaling to 1440p cost about 0.8-1.2 ms on an Nvidia RTX 2080 Ti, regardless of the DLSS mode. TXAA, on the other hand, costs 0.22 ms at full 1440p, though these timings will vary with the test system.
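Because the DLSS pass cost is roughly fixed, its relative weight grows as frames get shorter. The sketch below treats the quoted 0.8-1.2 ms DLSS cost and 0.22 ms TXAA cost as constants across scenes (an assumption; the pass cost was only quoted for upscaling to 1440p on an RTX 2080 Ti) and expresses the extra cost as a share of a frame at two of the frame rates measured above:

```python
TXAA_MS = 0.22  # quoted TXAA cost at full 1440p


def extra_pass_cost_ms(dlss_ms: float, txaa_ms: float = TXAA_MS) -> float:
    """Additional post-processing cost of the DLSS pass compared with TXAA."""
    return dlss_ms - txaa_ms


def share_of_frame(cost_ms: float, fps: float) -> float:
    """Fraction of one frame, at the given frame rate, spent on cost_ms."""
    return cost_ms / (1000.0 / fps)


if __name__ == "__main__":
    # Assumption: the quoted 0.8-1.2 ms DLSS pass cost holds in every scene.
    for dlss_ms in (0.8, 1.2):
        extra = extra_pass_cost_ms(dlss_ms)
        for fps in (56, 164):  # Forest DLSS Quality, Subway RTX-off DLSS Performance
            print(f"DLSS pass {dlss_ms} ms: +{extra:.2f} ms over TXAA, "
                  f"{share_of_frame(extra, fps):.1%} of a {fps} FPS frame")
```

At about 56 FPS the extra 0.58-0.98 ms is roughly 3-5.5% of a frame, while at about 164 FPS it is roughly 9.5-16%, which may be one reason the gains between DLSS modes flatten out in the RTX-off test.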

Overall, the image quality was good and sometimes even crisper than TXAA. Here is Tom Looman’s conclusion on performance:

“The performance potential of DLSS is huge depending on your game’s rendering bottleneck. Reducing internal resolution by 50% has an especially large benefit on RTX-enabled games with ray-tracing cost highly dependent on resolution.

Ray-tracing features appear to be working better with DLSS enabled from a visual standpoint too. RTAO (Ray-traced Ambient Occlusion) specifically causes wavy patterns almost like a scrolling water texture when combined with TXAA. However, enabling DLSS above Performance-mode completely eliminates these issues and provides a stable ambient occlusion.”
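Tom’s point that the benefit depends on the rendering bottleneck can be captured with a deliberately simple model (our assumption, not Tom’s): split the baseline frame time into a fixed part and a part that scales with the number of shaded pixels. Two measurements are then enough to estimate the resolution-bound share and predict other modes. The sketch ignores the DLSS-versus-TXAA pass cost difference and assumes Performance mode shades 25% of the pixels (50% per axis) and Ultra Performance about 11% (33% per axis):

```python
def resolution_bound_share(baseline_fps: float, dlss_fps: float,
                           pixel_fraction: float) -> float:
    """Estimate the share of the baseline frame time that scales with pixel count.

    Simple model (an assumption): frame_time = fixed + variable, where only the
    variable part shrinks in proportion to the number of shaded pixels.
    """
    time_ratio = baseline_fps / dlss_fps  # new frame time / old frame time
    return (1.0 - time_ratio) / (1.0 - pixel_fraction)


def predicted_fps(baseline_fps: float, share: float, pixel_fraction: float) -> float:
    """Frame rate predicted by the same model for a different pixel fraction."""
    return baseline_fps / ((1.0 - share) + share * pixel_fraction)


if __name__ == "__main__":
    # Forest Scene: TXAA ~35 FPS vs DLSS Performance ~75 FPS (25% of pixels).
    share = resolution_bound_share(35, 75, 0.25)
    print(f"~{share:.0%} of the TXAA frame scales with resolution")
    # Predict Ultra Performance (1/3 per axis, i.e. 1/9 of the pixels).
    print(f"predicted Ultra Performance: ~{predicted_fps(35, share, 1 / 9):.0f} FPS")
```

For the Forest Scene this estimates that roughly 71% of the TXAA frame scales with resolution and predicts about 95 FPS for Ultra Performance, close to the ~98 FPS Tom measured. The model is only a first-order picture, but it illustrates why ray-tracing-heavy scenes, where a large share of the frame is per-pixel work, benefit the most.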

The DLSS branch of Unreal Engine isn’t publicly accessible right now, though, so if you are interested, you’ll need to contact Nvidia to get access.

Stay tuned for more tech news!

Hello, my name is NICK Richardson. I’ve been an avid PC and tech fan since the good old days of the RIVA TNT2 and 3dfx Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

MUSIC has always been my passion and my roots, but I started gaming “casually” when I was young, on Nvidia’s GeForce3 series of cards. I’m by no means an avid or hardcore gamer, but I just love anything related to PCs, games, and technology in general. I’ve been involved with many indie Metal bands worldwide and have helped them promote their albums with record labels. I’m a very broad-minded, down-to-earth guy. MUSIC is my inner expression and my soul.
