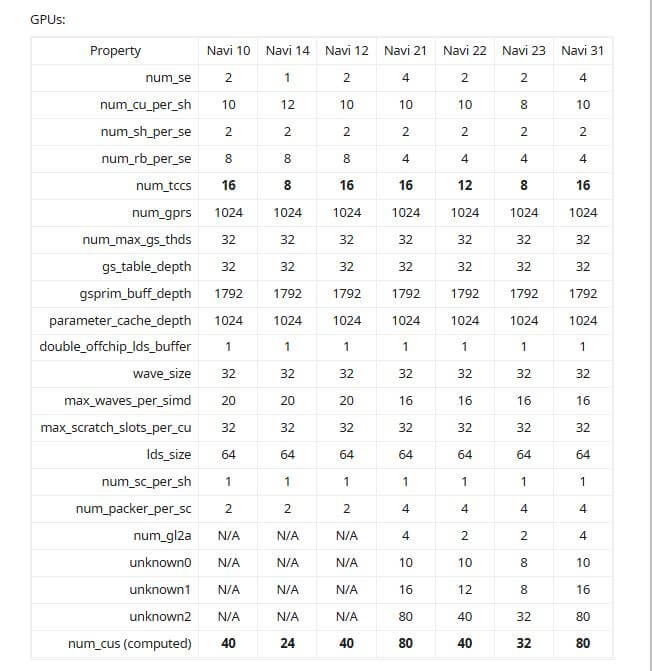

We have some new technical data on Navi 2X graphics processors based on the new RDNA 2 architecture. Reddit user stblr has discovered potential specifications for the Radeon RX 6000 series in Apple’s macOS Big Sur 11 beta code, and they look promising.

The user reverse-engineered this information from drivers present in macOS, so it is likely accurate and legitimate, though you should still exercise caution with any leaked data. AMD had already confirmed some of the specs in the ROCm 3.8 software update. We now have data on the Compute Unit (CU) counts, boost clocks, and power target values, all extracted from the macOS 11 beta, which contains firmware for the AMD Radeon RX 6000 series of cards.

As per rumors, AMD might initially be preparing three Navi 2X variants: Navi 21, Navi 22, and Navi 23. Navi 21 will likely power the flagship RX 6900 XT, which AMD has teased before, whereas the Navi 22 chip should end up in the Radeon RX 6700 XT or RX 6800 XT series of cards, the successor to the RX 5700 XT. Navi 21 is codenamed ‘Sienna Cichlid’, whereas the ‘Navy Flounder’ codename could refer to Navi 22.

The higher the Navi suffix, the lower the GPU is positioned in the hierarchy, which means Navi 22 and Navi 23 will most likely end up in mainstream and entry-level GPUs, respectively.

First we have the Navi 21 GPU (most likely the RX 6900 XT), which will sport 80 CUs. Assuming each CU still carries 64 SPs on RDNA 2, we are looking at a total of 5,120 stream processors. According to the leaker, there are two variants of the die: the Navi 21A silicon has a boost clock of up to 2,050 MHz, while the Navi 21B silicon has a 2,200 MHz boost clock. The power limit varies from 220W to 238W. With a boost clock of 2,200 MHz, Navi 21B would offer 22.5 TFLOPs of single-precision (FP32) shader performance, i.e. 5,120 SPs × 2 floating-point operations per clock × 2.2 GHz.
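The arithmetic behind these peak figures can be sketched in a few lines of Python. This is a minimal sketch, assuming 64 SPs per CU (the same assumption the leak analysis makes) and counting one fused multiply-add as two floating-point operations per SP per clock; the function name `fp32_tflops` is ours, purely for illustration.

```python
def fp32_tflops(compute_units, boost_mhz, sp_per_cu=64):
    """Theoretical peak FP32 throughput in TFLOPs.

    Assumes each stream processor retires one fused multiply-add
    (counted as 2 floating-point operations) per clock cycle.
    """
    stream_processors = compute_units * sp_per_cu
    flops_per_second = stream_processors * 2 * boost_mhz * 1e6
    return flops_per_second / 1e12


# Leaked Navi 21 values: 80 CUs, 2,050 MHz (21A) and 2,200 MHz (21B).
navi21a = fp32_tflops(80, 2050)
navi21b = fp32_tflops(80, 2200)

print(f"Navi 21A: {navi21a:.1f} TFLOPs")
print(f"Navi 21B: {navi21b:.1f} TFLOPs")
```

These are theoretical peaks at the leaked boost clocks, not measured performance.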

For comparison, the Ampere-based GeForce RTX 3080 offers a peak single-precision (FP32) performance of 29.8 TFLOPs, which is 32.4% higher than Navi 21B. Navi 21 does, however, pull ahead of the GeForce RTX 3070, which offers 20.4 TFLOPs. If this card comes with 16 GB of VRAM and AMD prices it correctly, it could be a very strong contender.
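To make the comparison reproducible, here is a small sketch that derives the relative gaps from the TFLOPs figures quoted above. The helper `percent_lead` is a hypothetical name, and the inputs are the rounded numbers from this article rather than official specifications.

```python
def percent_lead(a_tflops, b_tflops):
    """Return by how many percent a exceeds b."""
    return (a_tflops / b_tflops - 1.0) * 100.0


# Rounded peak FP32 figures as quoted in the article.
RTX_3080 = 29.8
RTX_3070 = 20.4
NAVI_21B = 22.5

rtx3080_over_navi21b = percent_lead(RTX_3080, NAVI_21B)
navi21b_over_rtx3070 = percent_lead(NAVI_21B, RTX_3070)

print(f"RTX 3080 over Navi 21B: {rtx3080_over_navi21b:.1f}%")
print(f"Navi 21B over RTX 3070: {navi21b_over_rtx3070:.1f}%")
```

With these inputs, the RTX 3080 leads Navi 21B by about 32.4%, while Navi 21B leads the RTX 3070 by about 10.3%.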

The mid-tier Navi 22 GPU (codenamed Navy Flounder), on the other hand, might arrive with 40 CUs, amounting to a total of 2,560 stream processors, the same CU count as the Navi 10 GPU. That would make Navi 22 a direct successor to Navi 10.

Navi 22 has an impressive boost clock of 2,500 MHz within a 170W power limit. The Radeon RX 5700 XT, which is based on Navi 10, comes with 2,560 SPs and a boost clock of 1,905 MHz, for a maximum single-precision performance of 9.8 TFLOPs. Navi 22 would deliver 12.8 TFLOPs of FP32 shader compute performance, so that’s roughly a 31% improvement.
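Because Navi 22 and Navi 10 would have the same number of stream processors, the theoretical uplift comes down to the ratio of the boost clocks. The sketch below checks this, assuming 64 SPs per CU and two floating-point operations per SP per clock; all names are illustrative.

```python
SP_PER_CU = 64  # assumption: unchanged from RDNA 1


def fp32_tflops(compute_units, boost_mhz):
    """Peak FP32 TFLOPs: SPs x 2 ops per clock x clock in MHz, scaled to tera."""
    return compute_units * SP_PER_CU * 2 * boost_mhz / 1e6


navi10 = fp32_tflops(40, 1905)  # Radeon RX 5700 XT
navi22 = fp32_tflops(40, 2500)  # leaked Navi 22 values

uplift_pct = (navi22 / navi10 - 1.0) * 100.0
clock_ratio_pct = (2500 / 1905 - 1.0) * 100.0

print(f"Navi 10: {navi10:.2f} TFLOPs, Navi 22: {navi22:.2f} TFLOPs")
print(f"Uplift: {uplift_pct:.1f}% (clock ratio alone: {clock_ratio_pct:.1f}%)")
```

Working from the unrounded figures gives an uplift of about 31.2%, which matches the clock-speed ratio exactly.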

Lastly, Navi 23 (codenamed Dimgrey Cavefish) would be the entry-level RDNA 2 chip. The Navi 23 die may end up with 32 CUs, amounting to 2,048 stream processors. Unfortunately, the clock speeds and power limits for Navi 23 weren’t mentioned in Apple’s latest firmware.

Surprisingly, the first RDNA 3-based next-gen Navi 31 GPU is also listed in the macOS Big Sur 11 beta firmware. The GPU appears to feature the same CU count as Navi 21, which suggests it could be a refresh under a new GPU microarchitecture. If so, Navi 31 would also feature 5,120 stream processors. We expect to see the Navi 31 silicon used in the Radeon RX 7000 series, and also in future Radeon Pro SKUs for Apple Macs.

Right now we don’t know whether Navi 31 will be a proper replacement or just a refresh of Navi 21 though. Here is the method to get this firmware data, courtesy of Reddit user stblr:

- Install macOS 11 beta (you can also use a VM).

- Get this file: /System/Library/Extensions/AMDRadeonX6000HWServices.kext/Contents/PlugIns/AMDRadeonX6000HWLibs.kext/Contents/MacOS/AMDRadeonX6000HWLibs.

- Use a reverse-engineering tool like radare2 to find the offset to the firmware (look for _discovery_v2_navi21).

- Use a tool like dd to extract it. Example: dd skip=47252400 count=1344 if=AMDRadeonX6000HWLibs of=navi21_discovery.bin bs=1.

- Get the relevant values using the definitions from here. There is also a tool that does that automatically.
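For readers without dd at hand, the extraction step above can be approximated in Python. This is a minimal sketch: `extract_blob` is a hypothetical helper, and the offset and length in the usage comment are simply the values from stblr's dd example, which presumably only apply to that particular beta build of the file.

```python
from pathlib import Path


def extract_blob(src, dst, offset, length):
    """Copy `length` bytes starting at byte `offset` of `src` into `dst`.

    Mirrors `dd if=src of=dst bs=1 skip=offset count=length`.
    """
    with open(src, "rb") as f:
        f.seek(offset)
        blob = f.read(length)
    if len(blob) != length:
        raise ValueError(f"expected {length} bytes, got {len(blob)}")
    Path(dst).write_bytes(blob)
    return blob


# Usage with the values from the dd example (offset is likely build-specific):
# extract_blob("AMDRadeonX6000HWLibs", "navi21_discovery.bin", 47252400, 1344)
```

The length check guards against silently writing a truncated file if the offset points past the end of the binary.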

We have also seen pictures of AMD’s Radeon RX 6000 RDNA 2 GPUs before, showing triple-fan and dual-fan designs. The triple-fan variant would most probably launch as the RX 6900 series, whereas the dual-fan model might house the RX 6800 or RX 6700 series. The dual-fan version also features two 8-pin PCIe power connectors. It will be interesting to see whether the new RDNA 2 cards will be able to compete with NVIDIA’s high-end RTX 3090/3080, or their mid-tier sibling, the RTX 3070.

AMD will officially reveal these new GPUs on October 28th. We expect the RX 6000 series to officially debut in November. Stay tuned for more!

Hello, my name is NICK Richardson. I’ve been an avid PC and tech fan since the good old days of the RIVA TNT2 and 3dfx Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.
