By now, we all pretty much know that NVIDIA will shortly release the GeForce GTX1080Ti. And while the green team has not officially announced anything yet, a lot of people believe that it will reveal (and perhaps release) this GPU during a GeForce event at this year’s GDC. And… well… we now know for a fact that the GeForce GTX1080Ti is real, as the official packshot of Halo Wars 2’s retail version has leaked this brand-new, unannounced graphics card.

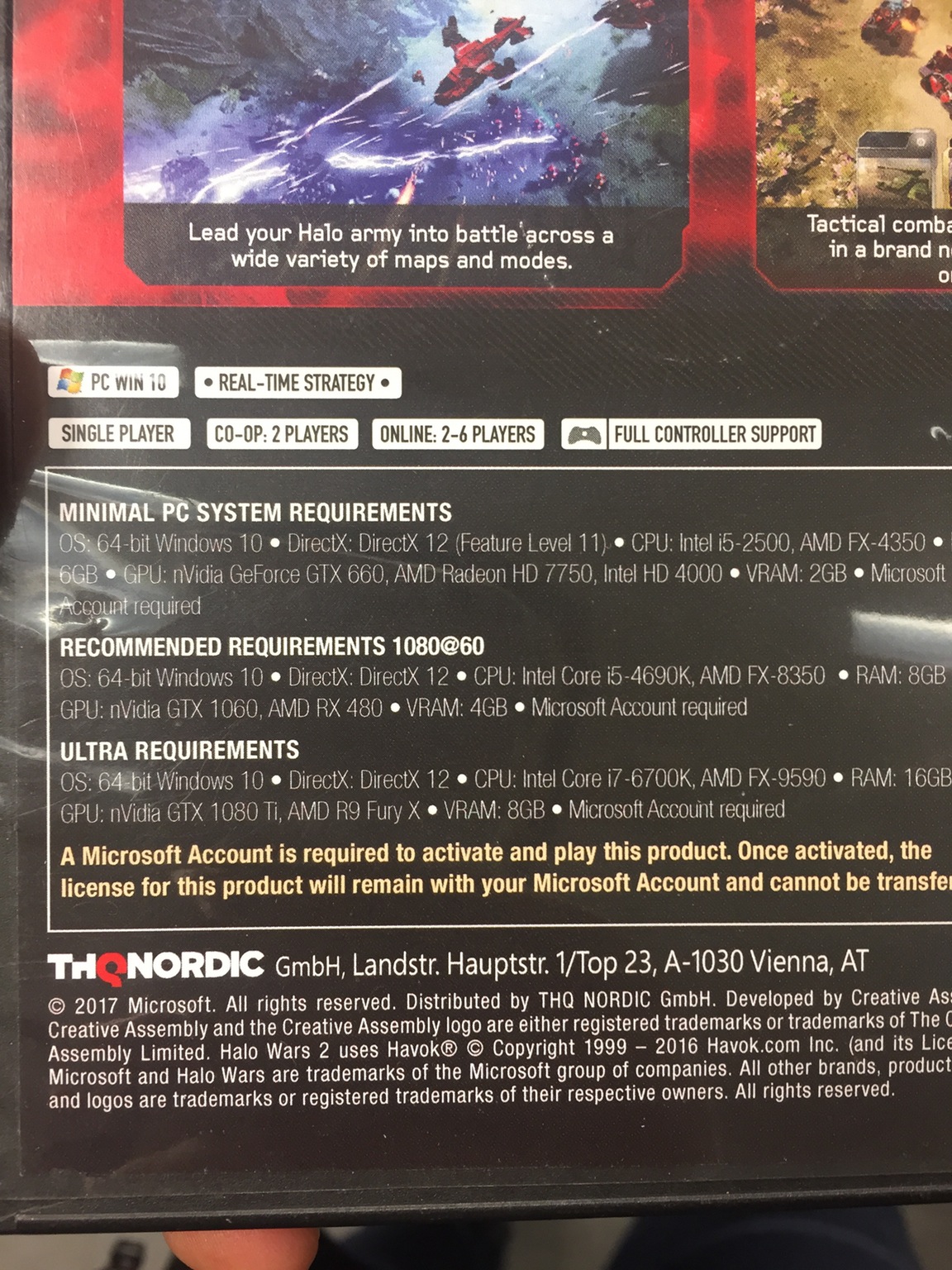

As we can see in the packshot, Microsoft and THQ Nordic list NVIDIA’s GTX1080Ti GPU under the game’s Ultra Requirements.

Do note that the retail version of Halo Wars 2 will be released only in Europe, as its North American release has been cancelled.

So there you have it everyone, the NVIDIA GeForce GTX1080Ti is indeed coming (duh).

According to rumours, the GeForce GTX1080Ti will pack 12GB of GDDR5, will feature 3328 CUDA cores, will be clocked at 1503MHz and will sport 10.8TFLOPs.
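For those who want to sanity-check the rumoured numbers, here is a quick back-of-the-envelope sketch. It assumes the usual convention for peak FP32 throughput on NVIDIA GPUs, namely two floating-point operations (one fused multiply-add) per CUDA core per clock. The function names are ours and purely illustrative, and the inputs are the rumoured figures, not confirmed specs.

```python
def peak_fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 TFLOPs, assuming 2 FLOPs (one FMA) per core per clock."""
    return 2 * cuda_cores * clock_mhz * 1e6 / 1e12


def clock_mhz_for_tflops(cuda_cores: int, tflops: float) -> float:
    """Clock (in MHz) needed to hit a given peak TFLOPs figure under the same assumption."""
    return tflops * 1e12 / (2 * cuda_cores) / 1e6


# Rumoured figures from the paragraph above (unconfirmed).
RUMOURED_CORES = 3328
RUMOURED_CLOCK_MHZ = 1503
RUMOURED_TFLOPS = 10.8

print(round(peak_fp32_tflops(RUMOURED_CORES, RUMOURED_CLOCK_MHZ), 2))  # about 10.0
print(round(clock_mhz_for_tflops(RUMOURED_CORES, RUMOURED_TFLOPS)))    # about 1623
```

Under that assumption, 3328 cores at 1503MHz work out to roughly 10.0TFLOPs, while 10.8TFLOPs would need a clock of around 1623MHz. One possible reading is that the rumoured TFLOPs figure is quoted at a boost clock rather than at 1503MHz, but that is our inference and not part of the rumour itself.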

Stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
