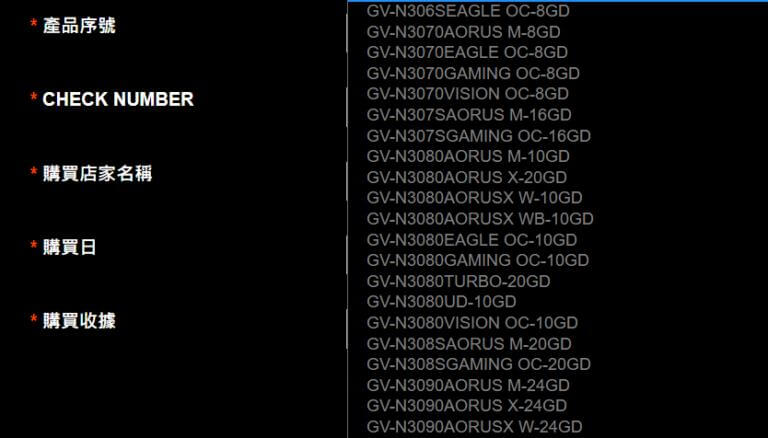

Gigabyte appears to have leaked the product codes for several unreleased Nvidia GeForce RTX 30 series cards, as spotted by Videocardz. Nvidia has not announced any of these GPU variants yet.

Gigabyte has spilled the beans on three upcoming RTX 30 series graphics cards: the GeForce RTX 3080 20 GB, GeForce RTX 3070 16 GB, and GeForce RTX 3060 8 GB. The new SKUs are listed on Gigabyte’s Watch Dogs Legion code redemption website.

The list includes several variants from Gigabyte. Some carry an S suffix in the product code, which could point to SUPER branding or possibly a Ti SKU, but we cannot say for sure. Other models are listed with twice the memory of the current cards but without the S suffix.

- Gigabyte GeForce RTX 3080 (SUPER/Ti) AORUS Master 20 GB (GV-308SAORUS M-20GD)

- Gigabyte GeForce RTX 3080 Gaming OC 20 GB (GV-308GAMING OC-20GD)

- Gigabyte GeForce RTX 3070 (SUPER/Ti) AORUS Master 16 GB (GV-307SAORUS M-16GD)

- Gigabyte GeForce RTX 3070 (SUPER/Ti) Gaming OC 16 GB (GV-3070SGAMING OC-16GD)

- Gigabyte GeForce RTX 3060 (SUPER/Ti) Eagle OC 8 GB (GV-3060SEAGLE OC-8GD)
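The naming pattern in the product codes above can be checked mechanically. What follows is a minimal Python sketch, not anything Gigabyte publishes: the regular expression and the `parse_sku` helper are hypothetical, and reading the `S` as a SUPER/Ti marker and the number before `GD` as the memory size in GB is only our interpretation of the leak.

```python
import re

# Product codes exactly as they appear in the leaked list.
SKUS = [
    "GV-308SAORUS M-20GD",
    "GV-308GAMING OC-20GD",
    "GV-307SAORUS M-16GD",
    "GV-3070SGAMING OC-16GD",
    "GV-3060SEAGLE OC-8GD",
]

# Assumed layout: GV-<model digits><optional S><cooler name>-<memory>GD
SKU_PATTERN = re.compile(r"^GV-(\d+)(S?)(.+)-(\d+)GD$")


def parse_sku(code):
    """Split a product code into its assumed parts (hypothetical helper)."""
    match = SKU_PATTERN.match(code)
    if match is None:
        raise ValueError(f"unrecognized product code: {code}")
    digits, suffix, cooler, memory = match.groups()
    return {
        "model_digits": digits,
        "has_s_suffix": suffix == "S",
        "cooler": cooler,
        "memory_gb": int(memory),
    }


for code in SKUS:
    print(code, parse_sku(code))
```

Run against the five codes, this flags four of them as carrying the S suffix and leaves the RTX 3080 Gaming OC 20 GB as the only one without it. The pattern would misread any cooler name that itself starts with an S, so treat it as a reading aid for this list only.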

The GeForce RTX 3080 20 GB model is expected to feature the same CUDA core count as the 10 GB SKU, 8704 cores, so we are not sure whether the product code is final or whether the card will simply carry Ti/SUPER branding. The listed product codes show two variants of the RTX 3080 20 GB. It would make little sense for Nvidia to release a GPU with more VRAM but otherwise unchanged specs, so there is a chance that Nvidia will also release a higher-spec x80 series variant to counter AMD’s Big Navi GPU.

The GeForce RTX 3070, on the other hand, would get a 16 GB VRAM buffer, since Nvidia claims that the card is going to be faster than the RTX 2080 Ti, which features 11 GB of memory. This leak lines up with the memory specifications that were rumored earlier. More importantly, the RTX 3070 16 GB GDDR6 variant is expected to feature the full GA104 GPU die with 6144 CUDA cores. The plain non-S/Ti RTX 3070 has 5888 CUDA cores, which is 256 fewer.

Finally, we have the GeForce RTX 3060 SUPER GPU featuring 8 GB of GDDR6 VRAM. This card is reported to use a cut-down GA104 GPU die instead of the GA106 GPU core, which is designed for mid-tier and mainstream SKUs.

According to @Kopite7kimi, a well-known leaker, the RTX 3060 Ti/SUPER GPU would allegedly feature the GA104-200 GPU with 4864 CUDA cores across 38 SMs, which is 1024 CUDA cores fewer than the RTX 3070’s 5888. The card is also said to feature only 8 GB of GDDR6 memory, since it is more of a successor to the GeForce RTX 2060 SUPER SKU.
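The core counts quoted so far are consistent with one another if each SM contributes 128 CUDA cores, which is what 4864 cores across 38 SMs implies. Here is a small Python sketch of that arithmetic. The SM counts it prints for the two RTX 3070 configurations are derived from the quoted core counts and are not part of the leak.

```python
# Cores per SM implied by the rumored RTX 3060 Ti/SUPER figures (4864 cores, 38 SMs).
CORES_PER_SM = 4864 // 38

# CUDA core counts quoted in this article.
cuda_cores = {
    "RTX 3070 16 GB (full GA104)": 6144,
    "RTX 3070": 5888,
    "RTX 3060 Ti/SUPER (GA104-200)": 4864,
}

# Derived SM count per card; an assumption based on CORES_PER_SM, not leaked data.
sm_counts = {name: cores // CORES_PER_SM for name, cores in cuda_cores.items()}

for name, cores in cuda_cores.items():
    print(f"{name}: {cores} cores over {sm_counts[name]} SMs")

# Gaps between adjacent tiers, in cores and in SMs.
gap_full_vs_3070 = cuda_cores["RTX 3070 16 GB (full GA104)"] - cuda_cores["RTX 3070"]
gap_3070_vs_3060 = cuda_cores["RTX 3070"] - cuda_cores["RTX 3060 Ti/SUPER (GA104-200)"]
print(f"Full GA104 vs RTX 3070: {gap_full_vs_3070} cores ({gap_full_vs_3070 // CORES_PER_SM} SMs)")
print(f"RTX 3070 vs RTX 3060 Ti/SUPER: {gap_3070_vs_3060} cores ({gap_3070_vs_3060 // CORES_PER_SM} SMs)")
```

All three core counts divide evenly by 128, so the 256-core and 1024-core gaps correspond to 2 and 8 SMs respectively.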

Do note that the Ti and SUPER names might refer to the same GPU, since the RTX 3060 variant is based on the same board, the GA104-200 SKU / PG142 PCB. Also, don’t expect these Ti or SUPER variants to be priced like the existing cards: the GeForce RTX 3070 with 16 GB of VRAM could end up close to the price of the GeForce RTX 3080, and the RTX 3080 20 GB would cost roughly $799-$899 USD.

These Ti/SUPER variants aren’t meant to replace the existing RTX 30 series lineup. Instead, they offer a step up in memory and core specs at higher prices. It all depends on how AMD responds with its Navi 2X lineup based on the RDNA2 architecture.

Stay tuned for more!

Hello, my name is Nick Richardson. I’ve been an avid PC and tech fan since the good old days of RIVA TNT2 and 3dfx Interactive “Voodoo” gaming cards. I mostly play first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

MUSIC has always been my passion/roots, but I started gaming “casually” when I was young on Nvidia’s GeForce3 series of cards. I’m by no means a hardcore gamer, but I just love stuff related to the PC, games, and technology in general. I’ve been involved with many indie metal bands worldwide, and have helped them promote their albums to record labels. I’m a very broad-minded, down-to-earth guy. MUSIC is my inner expression, and soul.
