Yesterday, we informed you about the exclusive PC features that will be implemented in Far Cry 4 and Assassin’s Creed: Unity. Well, today we’ve got some additional titles that will benefit from NVIDIA’s GameWorks. According to slides from NVIDIA’s event, Batman: Arkham Knight, Project CARS, The Witcher 3: Wild Hunt, and Borderlands: The Pre-Sequel will all feature GameWorks effects. We’ve also got more information about the GameWorks effects that will be featured in Assassin’s Creed: Unity.

According to NVIDIA, Batman: Arkham Knight will support Turbulence, Environmental PhysX, Volumetric Lights, FaceWorks and Rain Effects. Project CARS, on the other hand, will come with DX11, Turbulence, PhysX Particles, and Enhanced 4K Support.

We’ve known for a while that The Witcher 3: Wild Hunt will support specific NVIDIA features, but today we got the complete list of those features. The Witcher 3 will support HairWorks, HBAO+, PhysX, Destruction and Clothing (do note that Destruction and Clothing will also be supported by the console versions).

Here is the complete list for a number of titles that will support NVIDIA’s GameWorks:

Assassin’s Creed: Unity – HBAO+, TXAA, PCSS, Tessellation

Batman: Arkham Knight – Turbulence, Environmental PhysX, Volumetric Lights, FaceWorks, Rain Effects

Borderlands: The Pre-Sequel – PhysX Particles

Far Cry 4 – HBAO+, PCSS, TXAA, God Rays, Fur, Enhanced 4K Support

Project CARS – DX11, Turbulence, PhysX Particles, Enhanced 4K Support

Strife – PhysX Particles, HairWorks

The Crew – HBAO+, TXAA

The Witcher 3: Wild Hunt – HairWorks, HBAO+, PhysX, Destruction, Clothing

Warface – PhysX Particles, Turbulence, Enhanced 4K Support

War Thunder – WaveWorks, Destruction

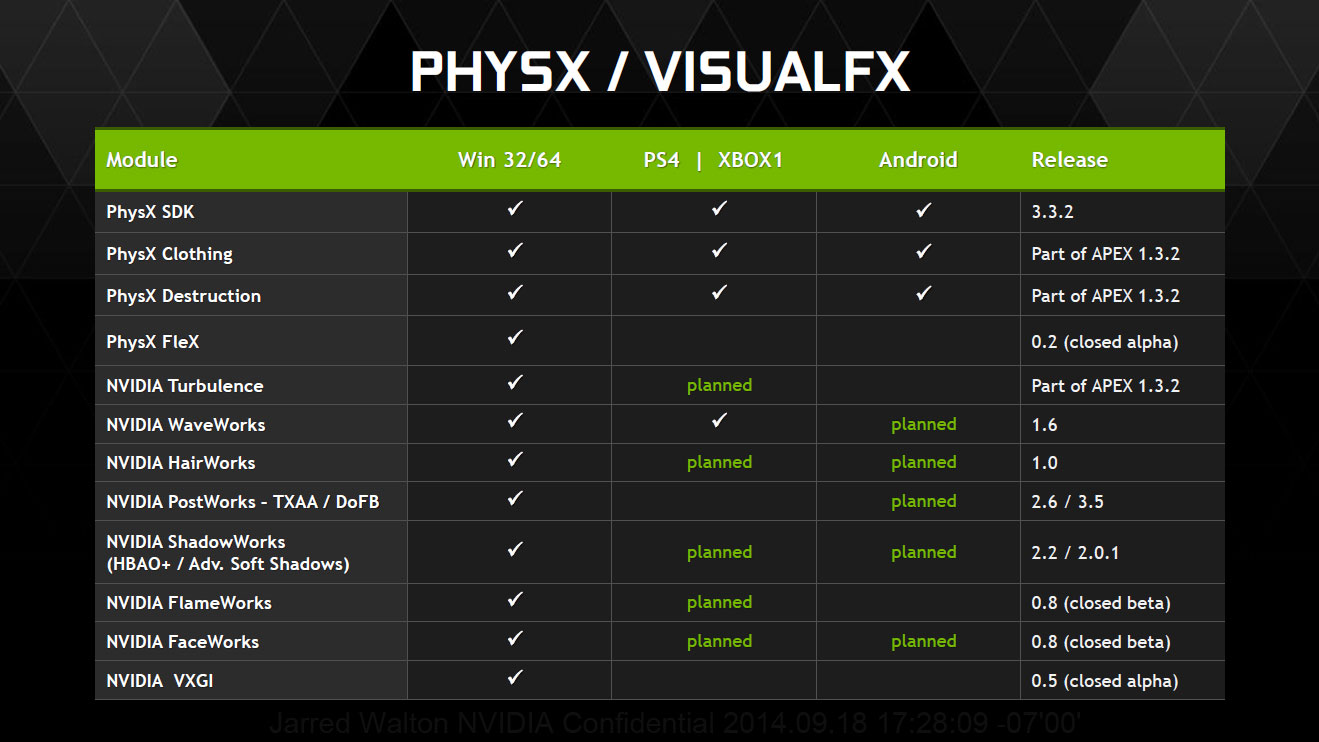

And here are the slides themselves. Current-gen consoles support PhysX Destruction, Clothing and WaveWorks. While additional effects will be supported at a later date, there is no ETA as of yet. Furthermore, some of these graphical features will also be supported by AMD’s GPUs on the PC.

Enjoy!

[UPDATE]

Here are some comparison shots for Far Cry 4 and Assassin’s Creed: Unity. Enjoy!

Assassin’s Creed: Unity NVIDIA GameWorks

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
