AMD has shared the 4K and 1440p PC system requirements for Bethesda’s highly anticipated new game, Starfield. These more detailed PC requirements will give you an idea of the PC system you’ll need in order to enjoy the game at either 1440p or 4K.

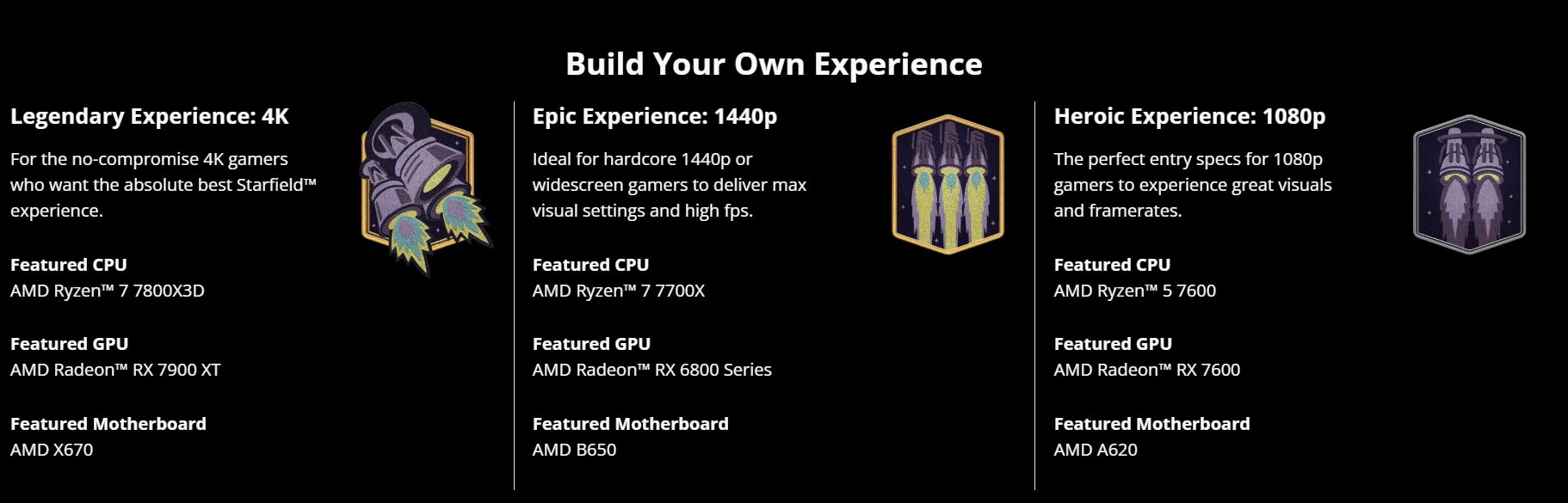

AMD recommends using an AMD Ryzen 7 7700X CPU with an AMD Radeon RX 6800 Series graphics card for 1440p. According to the red team, this combo will be “ideal for hardcore 1440p or widescreen gamers to deliver max visual settings and high fps.”

For 4K gaming, AMD suggests using an AMD Ryzen 7 7800X3D with an AMD Radeon RX 7900 XT graphics card. AMD states that this PC system will provide the “absolute best Starfield experience in 4K.”

Since these requirements are part of AMD’s promotion, they do not feature any NVIDIA or Intel hardware. Hopefully, Bethesda will reveal more detailed PC specs for this title before it comes out. After all, it will be interesting to compare the NVIDIA and AMD 1440p and 4K requirements for Starfield.

For what it’s worth, Bethesda has not yet clarified whether the game will support DLSS 2 or XeSS. For the time being, the only upscaler we know it will support is AMD’s FSR 2.0.

Modder PureDark has already stated that he will add support for NVIDIA DLSS 3 via a mod. Unfortunately, though, this DLSS 3 mod will most likely be behind a Patreon paywall. And, in case you’re wondering, the DLSS 3 mods for The Last of Us Part I, Jedi Survivor, Elden Ring and Skyrim are still behind a Patreon paywall.

Bethesda will release Starfield on September 6th. You can also find the game’s “limited” PC requirements here.

Stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
