Daedalic Entertainment released The Lord of the Rings: Gollum on PC last week. Since the game is powered by Unreal Engine 4 and supports DLSS 2/3 and Ray Tracing, we decided to benchmark these features first and share our results.

For these benchmarks, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, AMD’s RX 7900XTX, and NVIDIA’s RTX 2080Ti, RTX 3080 and RTX 4090. We also used Windows 10 64-bit, the GeForce 532.03 and the Radeon Software Adrenalin 2020 Edition 23.5.1 drivers. Moreover, we’ve disabled SMT and the second CCD on our 7950X3D.

The Lord of the Rings: Gollum uses Ray Tracing to enhance the game’s reflections and shadows. And, to be honest, these effects are underwhelming in this title. The game would greatly benefit from ray-traced global illumination (RTGI), which would significantly improve its visuals. Instead, Daedalic has used RT for a relatively minimal visual improvement. Furthermore, the game constantly switches between SSR and RT Reflections, as the draw distance for RT Reflections is very limited.

Daedalic Entertainment has also provided a single universal On/Off setting for Ray Tracing. Thus, you can’t enable RT Reflections and RT Shadows separately, and you cannot adjust their quality. You can only enable or disable both of them together.

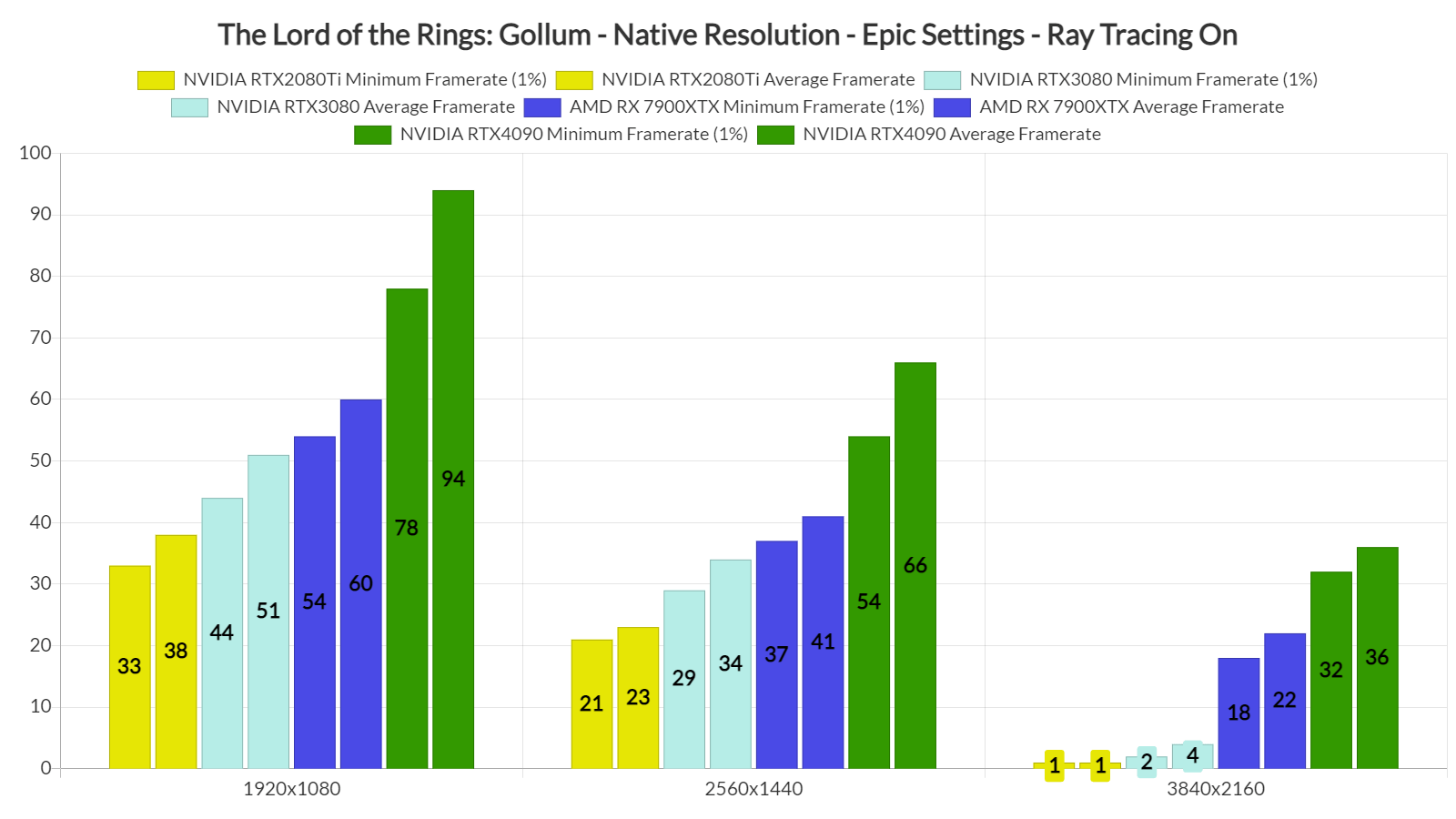

At Native 1080p/Epic Settings/Ray Tracing, the only GPU that can run the game at over 60fps is the NVIDIA GeForce RTX 4090. At Native 1440p/Epic/Ray Tracing, even the RTX 4090 can drop below 60fps (so you’ll need a G-Sync monitor to properly enjoy it). Lastly, at 4K, the RTX 2080Ti and RTX 3080 ran out of VRAM, and that’s why they performed so horribly at that resolution.

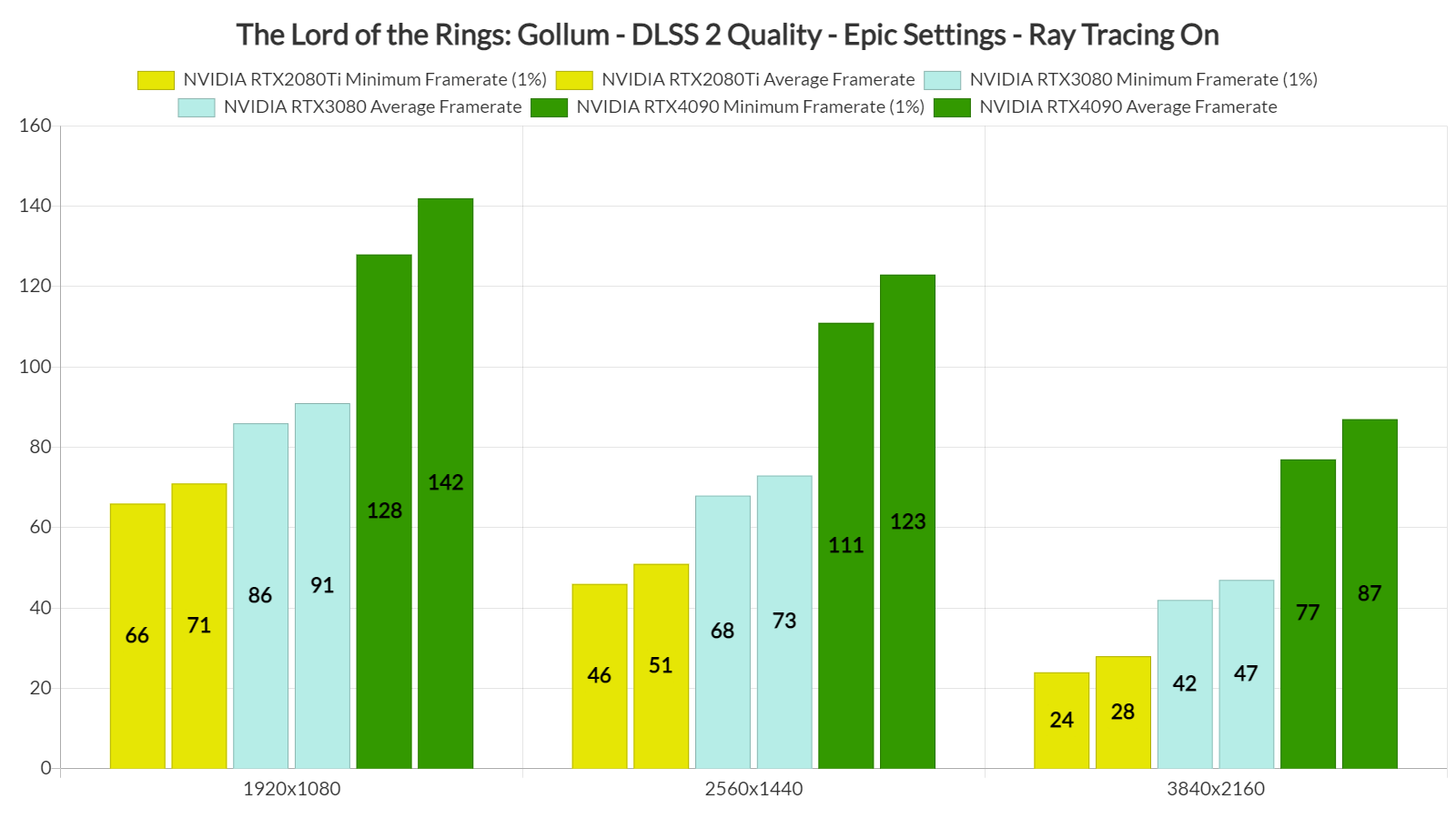

Now the good news is that The Lord of the Rings: Gollum takes advantage of DLSS 2 and DLSS 3. With DLSS 2 Quality, the RTX 4090 can push over 60fps even at 4K/Epic/RT. Similarly, the RTX 3080 can provide a smooth experience at both 1080p and 1440p. Unfortunately for AMD users, though, the game does not support AMD FSR 2.0 at launch. That said, Daedalic plans to add support for it via a post-launch update.

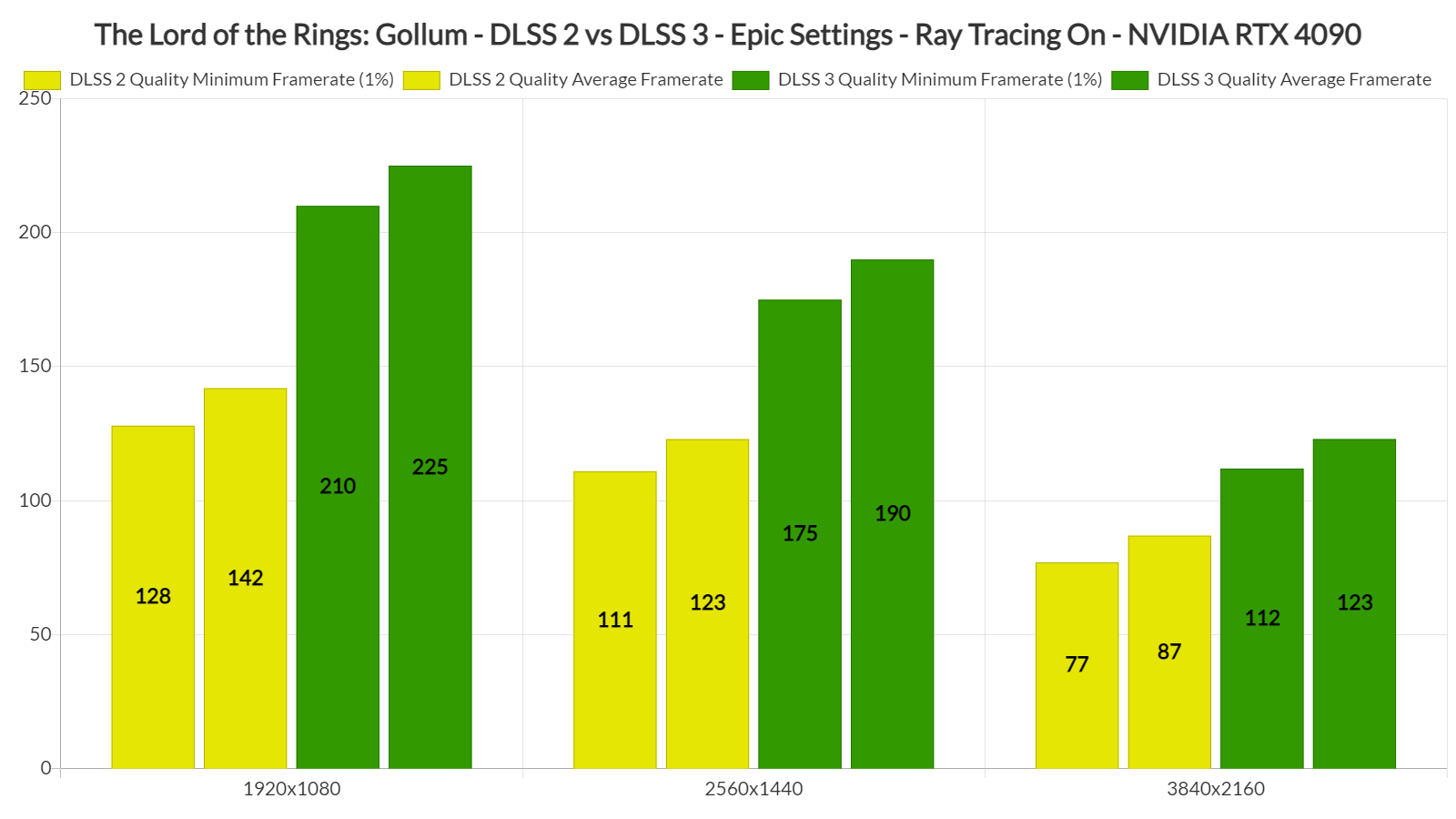

DLSS 3 also brings a significant performance boost to this game. As we can see, DLSS 3 Frame Generation can improve minimum framerates by 45% and average framerates by 41%. As such, owners of the RTX 4090 can run this game fully maxed out at over 110fps at 4K with DLSS 3 Quality Mode.
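For readers who want to reproduce this kind of comparison from their own frame-rate captures, here is a minimal Python sketch of the arithmetic behind a percentage uplift. The function names are hypothetical helpers written for this illustration, not part of any benchmarking tool. The last lines assume that the 41% average uplift applies to the same 4K/DLSS 3 Quality run that reached over 110fps, which the text above does not state explicitly, so the implied baseline is only a rough estimate and not a measured result.

```python
def uplift_percent(baseline_fps: float, boosted_fps: float) -> float:
    """Percentage gain of boosted_fps over baseline_fps."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (boosted_fps / baseline_fps - 1.0) * 100.0


def implied_baseline(boosted_fps: float, uplift_pct: float) -> float:
    """Frame rate before the boost, given the boosted rate and the % gain."""
    if uplift_pct <= -100.0:
        raise ValueError("uplift_pct must be greater than -100")
    return boosted_fps / (1.0 + uplift_pct / 100.0)


# Assumption: the 41% average uplift and the 110fps figure come from the
# same configuration. 110fps is itself a floor ("over 110fps"), so this
# is an estimate of the pre-Frame-Generation average, not measured data.
estimated_avg_without_fg = implied_baseline(110.0, 41.0)
print(f"Implied average without Frame Generation: ~{estimated_avg_without_fg:.0f}fps")
```

Under that assumption, the average without Frame Generation would sit in the high 70s, which is consistent with DLSS 2 Quality pushing the RTX 4090 past 60fps at 4K.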

To be honest, though, I don’t see anything here that justifies these performance figures. The Lord of the Rings: Gollum is an ugly game, and all characters look mediocre. This is a big letdown, and it’s nowhere close to what cross-gen games should look like. Yes, Daedalic is a small team. However, this game should be running WAY, WAY BETTER for the graphics it displays on screen. Yes, the game can run really smoothly with DLSS 2 and DLSS 3, but developers should not be solely relying on these techniques.

Stay tuned for our “rasterized” PC Performance Analysis, in which we’ll be testing more AMD GPUs!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
