Sony has just released Marvel’s Spider-Man: Miles Morales on PC. Powered by Insomniac’s in-house engine, the game supports NVIDIA’s latest DLSS tech, DLSS 3, from the get-go. As such, we’ve decided to benchmark it and share our initial impressions.

For our benchmarks, we used an Intel i9 9900K with 16GB of DDR4 at 3800MHz and NVIDIA’s RTX 4090. We also used Windows 10 64-bit and the GeForce 526.86 driver. And, as always, we used Quality Mode for both DLSS 2 and DLSS 3.

Marvel’s Spider-Man: Miles Morales does not feature a built-in benchmark tool. Therefore, we benchmarked a populated area after the game’s first/prologue mission. Our benchmarking scene appeared to stress both the CPU and the GPU, which is ideal for our tests. We also enabled the game’s Ray Tracing effects and maxed out all of its other graphics settings.

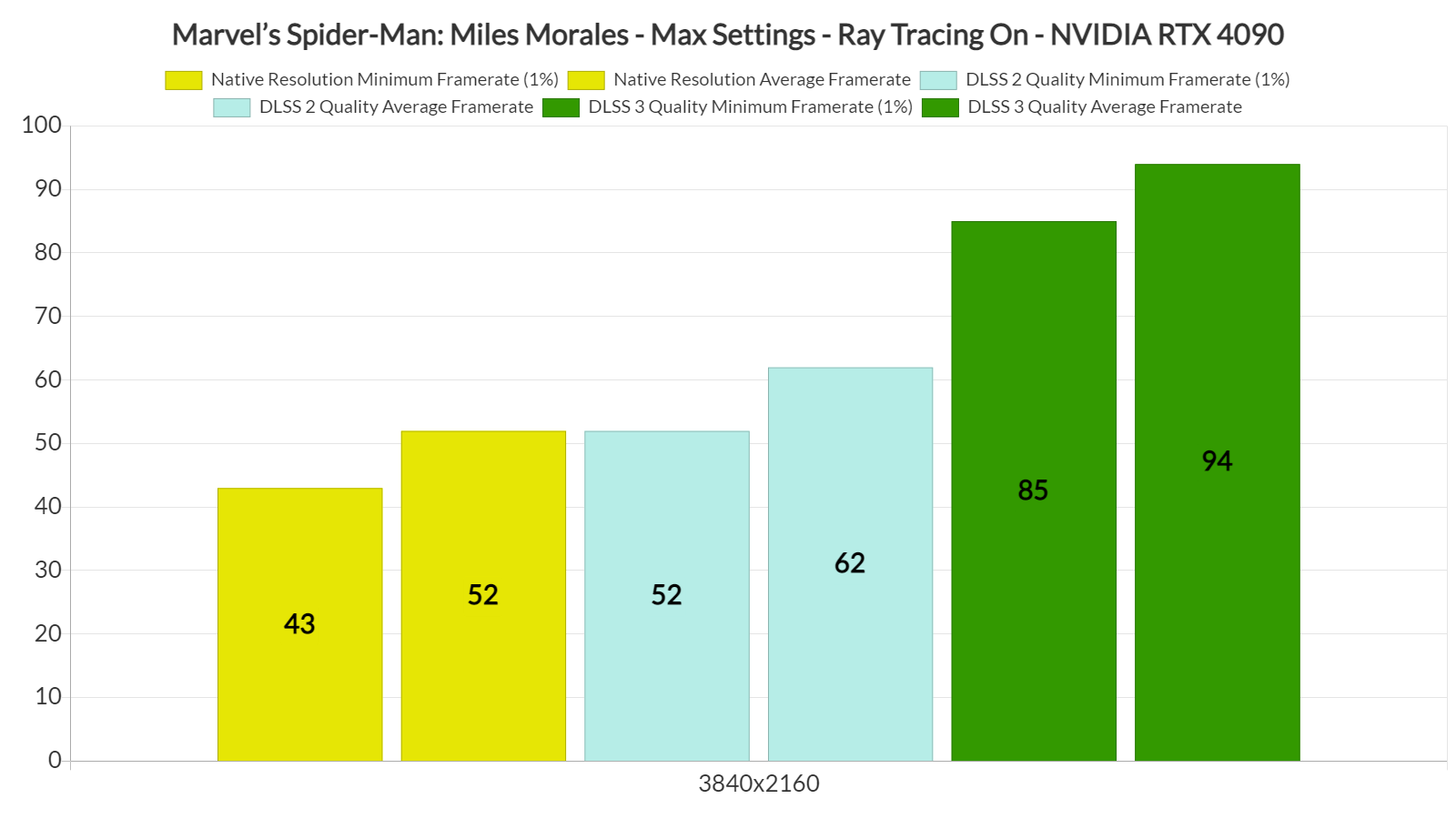

At 4K/Max Ray Tracing Settings, we saw some dips to the mid-40s, and had an average framerate of 52fps. By enabling DLSS 2 Quality, we were able to increase our average framerate to 62fps (though there were still some drops to 52fps).
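To put those two averages in perspective, here is a minimal Python sketch that converts them into a relative uplift and into frame times. The helper names (`uplift_percent`, `frame_time_ms`) are made up for this illustration and are not part of any benchmarking tool. The only inputs are the 52fps and 62fps averages reported above.

```python
def uplift_percent(baseline_fps: float, new_fps: float) -> float:
    """Relative framerate gain of new_fps over baseline_fps, in percent."""
    return (new_fps - baseline_fps) / baseline_fps * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


# Average framerates reported in this article (4K, max settings, RT on).
native_avg = 52.0  # native 4K
dlss2_avg = 62.0   # DLSS 2 Quality

print(f"DLSS 2 Quality uplift: {uplift_percent(native_avg, dlss2_avg):.1f}%")
print(f"Native 4K frame time:  {frame_time_ms(native_avg):.1f} ms")
print(f"DLSS 2 frame time:     {frame_time_ms(dlss2_avg):.1f} ms")
```

In other words, DLSS 2 Quality brings a gain of roughly 19% here, which puts the average frame time just under the 16.7ms needed for 60fps. The drops to 52fps still fall short of that target.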

As you can see, at both native 4K and with DLSS 2 Quality, we’re CPU-limited. Normally, in CPU-limited scenes, DLSS 2 wouldn’t improve overall performance. However, the reason we see better framerates with DLSS 2 Quality in this game is that the rendering resolution actually affects the game’s RT reflections and RT shadows. By lowering the in-game resolution, you can minimize their CPU hit, which – obviously – brings a noticeable performance boost.

In order to get a constant 60fps experience in Marvel’s Spider-Man: Miles Morales on our Intel i9 9900K, we had to enable DLSS 3. By doing so, we were able to get framerates higher than 85fps. Not only that, but we could not spot any visual artifacts, and the mouse movement felt great. Unlike in WRC Generations and F1 22, the additional input latency of DLSS 3 in Marvel’s Spider-Man: Miles Morales isn’t really noticeable. Thus, if you own an RTX 40 series GPU, we highly recommend using DLSS 3.

Our PC Performance Analysis for this new Spider-Man game will go live later this weekend, so stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
