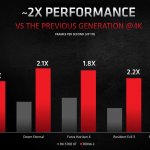

AMD has officially announced its RDNA 2 high-end GPUs: the AMD Radeon RX 6800, RX 6800 XT and RX 6900 XT. According to the red team, the RX 6800 XT is as fast as NVIDIA’s latest high-end GPU, the NVIDIA GeForce RTX 3080.

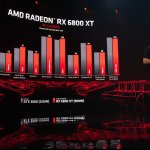

AMD has shared some first-party gaming benchmarks pitting the RX 6800 XT against the NVIDIA GeForce RTX 3080 at 4K and 1440p.

The AMD Radeon RX 6800 XT features 72 compute units, a 2015MHz game clock, a 2250MHz boost clock, and 128MB of Infinity Cache. It also comes with 16GB of GDDR6 VRAM and has a total board power of 300W. This GPU will be priced at $649 and will be available on November 18th.

The AMD Radeon RX 6800 features 60 compute units, a 1815MHz game clock, a 2105MHz boost clock, and 128MB of Infinity Cache. It also comes with 16GB of GDDR6 VRAM and has a total board power of 250W. This GPU will be priced at $579, and AMD claims it is faster than the RTX 2080 Ti.

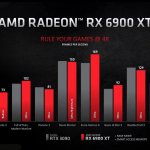

AMD also revealed its highest-end RDNA 2 model, the AMD Radeon RX 6900 XT. The RX 6900 XT will be available on December 8th and will cost $999. This GPU will challenge the RTX 3090, a GPU that costs considerably more. The RX 6900 XT features 80 compute units, a 2015MHz game clock, a 2250MHz boost clock, and 128MB of Infinity Cache. It also comes with 16GB of GDDR6 VRAM and has a total board power of 300W.

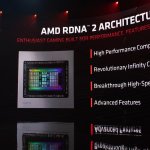

Lastly, AMD’s RDNA 2 GPUs will support DirectX 12 Ultimate as well as DirectStorage. AMD RDNA 2 also supports two new features: Rage Mode and Smart Access Memory (stay tuned for an upcoming article by Nick on these two features).

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”


The 6800 XT looks like an excellent contender against the 3080. My only concern is the lack of a DLSS-like feature, although they claim they have a pretty decent ray tracing denoiser. The lack of ray tracing benchmarks is also curious. I assume Nvidia still holds a performance advantage in ray tracing.

Can someone please explain to me WHAT THE FUQ is so special about DLSS? Nvidia puts out any marketing jargon and you people just babble it on and on like it’s the holy grail. There is nothing special about this BS. It’s a damn AI; I want raw power and pure image quality, no damn trickery. So instead of giving us power they use some phony tricks, and you just buy it as if it’s the be-all and end-all. Oh lordy.

It gives you a massive performance and visual quality advantage. Phony tricks? What are you talking about? You get massively better performance and better visuals than native rendering. You can actually turn RTX on at 4K.

You sure about that “natively”?

DLSS 2 is quite great. I saw it in action, and it seems to give an even better and sharper image than native resolution, so it’s not some console-quality checkerboard crap.

And running 4K with the performance cost of 1080p is quite a good deal IMO.

OK, I can understand that, but why are people acting as if it’s the holy grail and AMD has nothing else to offer? It’s as if, when they don’t offer the same thing, they have nothing. I don’t understand this notion among consumers; don’t people understand how product offerings and innovation work? I just get annoyed when I hear the same jargon being thrown around. I play on a huge projector, so I don’t see DLSS, but what I can see is Radeon Image Sharpening. That is something to write home about.

Nvidia already has image sharpening just like AMD’s. It’s built into the control panel and you can turn it on. DLSS, however, is a huge game changer: it gives you a large performance boost and a better-looking image.

Because at the moment Nvidia does have that holy grail. DLSS is something new and no one can beat it; it offers better performance with greater image quality than your native resolution. True, not many games have it, but it’s something really cool developed specifically for Nvidia cards. If someone comes along and offers the same thing, that’s good, but for now no one can compete on that feature. It’s like wondering why iOS runs great only within Apple’s ecosystem. It really does wonders…

LE: Nvidia has also had sharpening for a long time, but this is not about that…

It’s an AI-driven solution that helps deal with the massive performance impact of enabling ray tracing (at least on first-gen Turing RTX cards). Even without ray tracing enabled, it can greatly improve image quality without sacrificing performance. Here is a short snippet:

AMD does not really have a solution to DLSS at this time, hence me hoping that they either come up with something resembling it or introduce a new standard that is not hardware/platform specific.

They said they are working on a DLSS-like solution with partners for all systems, including consoles…..

Also, there is DirectML (Machine Learning) for DirectX 12

First of all, man, I clearly know what DLSS is. We can all read a wiki page or listen to some YouTuber ramble on. This is my problem:

“AMD does not really have a solution to DLSS at this time, hence me hoping”

Why is it that every time Nvidia comes up with some gimmick, AMD has to have it too? Do y’all understand what innovation or a product offering is? How come I don’t hear y’all raving about FidelityFX and Radeon Image Sharpening? Have you seen the beauty that thing adds? Why is it that as soon as one company starts something, you want the other to do it too? If you want DLSS, go with Nvidia, and those who want Radeon sharpening can go with AMD; that’s how business works. Most of y’all have NEVER owned both cards, so you don’t even know what the other one offers. All you know is that AMD/Nvidia offers this and you want the other one to do it too. That is just a terrible road to innovation. That is my point, really.

Have you taken a look at AMD’s Adrenalin suite? Nvidia has nothing close to the amount of tech in that suite. How come I don’t hear anyone saying, “I can’t wait till Nvidia has this thing from AMD’s suite”? You know why? Pure ignorance. Having used both cards, I moved over to AMD because I don’t want anything that Nvidia is offering. Does that make sense to you? If I wanted Nvidia rubbish, I’d be right over there lining up to pay twice the price for AI and not hardware. I want hardware, not god damn AI.

AMD will have a DLSS alternative called Super Resolution. Quote from the presentation: “and we are already working on a super resolution feature to give gamers more performance when using ray-tracing”

DLSS 2.0 gives you a huge performance uplift and a better, sharper 4K image at a low performance cost. Without an answer to it, you would be dumb to buy an AMD GPU.

WITH DLSS, EVEN 120 FPS at 4K is doable at the moment. Do some research before saying “it’s just AI”.

4K will always be an expensive resolution to run, first and foremost.

DLSS is an AI-based upscaler, but I don’t really care about that part of the tech. What it does great is fix what TAA destroys with blur, meaning that even though it actually works from a lower-resolution baseline, its output often wins against native resolution ruined by TAA blur (and that’s, I would guess, 99% of today’s AAA titles, which employ that AA scheme). The performance boost is a bonus 🙂

AMD will have its own DLSS-like tech, “Super Resolution”. No benchmarks or details as of yet, so it will be interesting to see whether it’s as good as DLSS 2.0.

Yeah, it seems like everybody missed that feature in the presentation.

I had to re-read Tom’s Hardware’s snippet on it; they barely discussed it, though.

No one watches anything or reads articles; they just go by snippets and then the mouth-spewing starts.

And there is DirectML (Machine Learning) for DirectX 12

But Super Resolution has been a thing for years now in the Adrenalin suite? Am I missing something here?

It will be different from the option currently available in the drivers (Virtual Super Resolution), which simply lets you run resolutions higher than your native one. Right now, AMD’s setting basically works like DSR.

AMD said that they are working on a DLSS-like solution for all systems, including consoles…..

Falling for the Nvidia gimmicks yet again. How many games are even going to implement it?

Bravo AMD

$580 for the 6800, which is comparable to the 2080 Ti. The 3070 is $499 and also comparable to the 2080 Ti. Hmm. Why not just get the Nvidia card (when you can)?

Because 8GB of VRAM will become a limitation in a year or so?

On ultra settings, and not in one year; more like 4, maybe.

Just google when the new consoles will release and how much VRAM they will have. 8GB will become obsolete in a couple of years, even for 1440p gaming.

You can’t compare consoles with PC. Consoles have unified memory, whereas a PC has VRAM and regular RAM.

Yes, and that same unified memory is WHAT FUQ’D us at the beginning of this generation. Remember when devs were just throwing everything into VRAM and PCs with only 4-6 GB of VRAM, which was MOST of them, got fuq’d? Have you forgotten that already? Of course you did.

In a couple of years the 3070 will be a $150 card anyway. I don’t game at 4K, so I don’t give a damn about 16GB of VRAM.

It’s been happening for years now, WTF are you talking about? That’s how I know most of y’all never play on Ultra.

i9, 2080ti.. lick my boots!!

There are already games that can easily eat up to 8-11 GB of VRAM….. and not even at the highest settings.

Like?

Avengers? There’s a review of it on this site…. let alone upcoming games (1-2 years from now).

The same argument every single generation.

And every single generation it turns out that low-VRAM cards like the 3070 have VRAM issues very soon.

How is this generation somehow different?

Not in a year or so, it’s already happening. In some titles the 3070 gets beaten by the 2080 Ti because of its smaller VRAM.

The 6800 wipes the floor with both the 3070 and the 2080 while having more VRAM AND being more power efficient.

It’s a win-win all around. Pricing is adequate.

No one in their right mind would use the term “wipes the floor”, lol.

The VRAM issue won’t be a bottleneck for many years, though it’s comforting to have it. Then there’s also no DLSS, which is a big deal in games that use it.

The 8GB VRAM buffer is a bottleneck today in Doom Eternal at 4K. Lowering the texture setting one notch improves performance significantly.

Next-gen requirements will only get higher.

I’m not really suggesting it’s a 4K card, though one very new game that chokes on it isn’t the be-all and end-all of its 4K capabilities. And we’re all PC gamers here, right? Who has a 4K monitor?

I respect your input all the same, and it’s a valid point nonetheless.

I do believe there are more people downsampling from 4K/3K/2K to 1080p than people who own a 4K monitor. I’m in the former crowd.

The 3070 is the same, just slightly better than the 2080 Ti, sometimes worse….. The 6800 is noticeably better than the RTX 2080 Ti, and add to that all the other “tech”.

It’s not really a 3070 competitor; it’s a 3070 Ti competitor.

Just like the 6900 XT is not a 3090 competitor but a 3080 Ti competitor.

It’s just that AMD can’t compare those cards with products that haven’t been released yet.

Expect a 6700 XT to be around the 3070 (probably 5-10% slower, because it has only 40 CUs, but at super-high clock speeds).

That’s a good point.

Nice show by AMD. I didn’t like the Nvidia enthusiast monopoly one bit.

Finally……. some competition. That’s all I’ve ever wanted. I don’t care about “Team Red”, or “Team Green” bulls**t. I just want competition between the two, because it’s always a win for the end users.

Hopefully the start of a long era of it, now that AMD “appears” to have gotten its game together in the GPU market.

Y’all still ain’t gonna buy AMD. Most people wanted competition because they wanted AMD to help them get Nvidia 50 bucks cheaper. Let’s be real here. I, on the other hand, was gonna buy AMD regardless. Nvidia has been robbing people blind for years with their washed-out a$$ color palette, and yet people line up for 6 hours at Micro Center to get their rubbish.

I’ll buy AMD when AMD gives me a reason to buy them. I don’t give a crap about brand loyalty. I went from ATI, to Matrox, to 3DFX, back to ATI, and then to Nvidia, because they were simply the best cards for the money in their eras.

Radeon has been 3rd rate since AMD bought ATI, and that’s just the truth of it. If AMD has a competing card for a competing price next gen, I’ll get AMD. If they don’t, I’ll get Nvidia. It’s really as simple as that.

TBH, AMD pretty much had cheaper and better cards in the mid range every generation. But people don’t care; they still buy more expensive mid-range Nvidia cards with less performance, because Nvidia had no competition for the xx80 Ti. Idiots’ logic, I guess.

That’s the point, really. They end up getting robbed and don’t even know it. Best example: the mid range. I couldn’t have said it better. Because AMD wasn’t competing with Nvidia’s top end, people automatically assumed its mid range was crap. The 5600 XT is A BEAST, enough said.

Even when you’re selling a graphics card, the NUMBER ONE question is: is this card Nvidia or AMD? And when you tell them it’s AMD, they react like it’s suddenly infected.

I said nothing about brand loyalty. I’m not loyal to any brand; if there were a third player in town, I’d go with them. I just ain’t spending my hard-earned cash with N’greedia. I’ve had enough of them. Don’t put words in my mouth, homie, c’mon now. You know what I’m talking about. Even if you don’t agree, you still understand the WHY. This has nothing to do with brand loyalty.

Nvidia disgusts me with their practices, and I don’t care to give Jensen and his leather jacket any more money. They got enough money out of me.

ATI had some hard issues… The 970 was bad for coil whine and for Nvidia not being upfront about the slower 0.5GB memory segment, but the card was well priced for the time… Both companies have had hardware problems in the past… I used to like ATI for the difference in the technologies they use to render, and still do… ATI’s drivers/GUI were a problem for me… so I went back to Nvidia, but… now I am having problems with Nvidia. I was thinking of going back to ATI. The problem I had with ATI, cards burning out, is one ATI still has, and the same goes for Nvidia. I had more cards burn out with ATI than with Nvidia, but I also had more ATI cards at the time… mind you, Nvidia is not perfect either… I had problems with Asus, like you mentioned in your other comment, and that is why I switched to EVGA; they are top notch on the Nvidia side… That was the other reason I wanted to go back to Nvidia: they have good official partners.

Voodoo and 3DFX, those were the days. I’ve still got one up in my loft somewhere; I just couldn’t part with it.

I had 2x Voodoo2s in SLI when I played Quake competitively back in the day. Miss those days 🙂 Kinda funny that only recently have LCDs started to reach the same or better refresh rates as the best CRTs had back then… and they called that progress lol

Well, now it seems that ATI is coming back like it used to be in the early days of the ’90s.

Don’t be so sure….. I was aiming for at least an RTX 3080 (maybeeee a 3090), but after this I’m really confused and don’t know what to choose. AMD is also working on a “DLSS”-like solution……

FidelityFX is their alternative, but I only use native, so I don’t really care.

No, they have the real alternative….. DirectML

True, I will never touch AMD.

Nvidia beat AMD ever since 8800GTX. R-tard calls their GPU rubbish…..

If you think Nvidia has beaten AMD since then, when there are SOOO MANY FACTORS... you are clearly an uneducated fanboy, and I have no time for fanboyism. NEXT!!! Come talk to me when you’ve owned 5 GPUs from each side over the years and have dug deep into them both.

AMD had huge problems in the GPU department, with drivers being particularly bad. The 5700 XT was released all broken; they even publicly admitted it.

I haven’t bought an AMD graphics card since the 270. I don’t plan to at the moment, not before I see every indication they did their homework.

I had a Vega 64, dead within 6 weeks of use. I had a Titan X, dead in 3 years. Do you know which one won? The Vega 64!!! I contacted Sapphire and they sent me a modified pro version of a Vega 64; it works flawlessly. I contacted Asus about my Titan X that was still under warranty; it’s been 2 years and no one has even dared to get back to me. So EVERYBODY’s cards give problems. It’s all about who delivers the most at the end of the day and what works for you. Because I don’t think anyone would be too happy when their Titan X keeps crashing to desktop over the years and then dies. None of my AMD cards crashed during games or to desktop. But that’s not the point, is it, because other people’s have. So this argument is really MOOT when it comes to quality assurance. My gripe is Ngreedia and their dirty business methods; that is all I care about.

Batman: Arkham Knight: false representation by speeding up the gameplay video to make it look as if it was running at a constant 60 fps when NO CARD could run that game consistently at release. The GTX 970: lying about it having 4GB of memory when it’s 3.5. Go look up Batman Arkham Knight GameWorks on YouTube and you will hear the NPCs sounding like chipmunks because Nvidia sped up the video and faked the gameplay. The video is still up on Nvidia’s YouTube channel; they were busted and never took it down. If you love Nvidia, that’s on you, but don’t try to convince me when I have receipts. Love what you love and leave others be.

You had a Titan X die on you while still under warranty, yet you waited over 2 years for a reply? That’s utter BS, mate.

Hey dude, I never waited. They just never contacted me, period. I’m not waiting; I don’t care, I moved the fuq on. So don’t start calling me a liar. Please read and comprehend what I said. I repeat, I’m not waiting for a damn thing. My Vega 64 performed better than the Titan X at less than half the price, so I don’t care. I see it as a blessing: 1) I’ll never buy anything Asus again, and 2) I wanted to leave Nvidia a long time ago and I finally got to. I don’t give a rat’s, man. I’ve got what I want and I’m good.

Look, even if it was a bit dated when it died, you would still have paid well over a thousand pounds for it initially. Plus, as you said, it was after 3 years and still under warranty, which you would have had to pay extra for, as the manufacturer’s is only 3.

So no, I just don’t see it. No one would contact them and then just leave it if they didn’t reply, especially as it was still under a warranty you’d paid extra for. It just doesn’t add up.

I’ve returned many things under warranty over the years, and companies don’t just ignore emails, especially ones as large as Asus. Even if they initially do, you would try again and keep trying, because to me and 99% of other people it’s the principle.

I agree with some of his stuff, but the way he talks to people makes me think he’s not from the old-school era.

The guy is a rage troll…

EVGA is top notch for Nvidia… not sure how Sapphire is nowadays; if they are better, that is good news… I miss VisionTek for ATI. I would like to go back to ATI.

The 970 does have 4GB, but only 3.5GB of it runs at full speed, while the last 512MB is slower.

You are aware that we all know that, and it’s still 3.5GB, which is EXTREMELY misleading. And you already know the outcome of that case: there was a CLASS ACTION LAWSUIT & NVIDIA LOST! See, this is the type of BULLSH*T I’m talking about. The blatant defense of Nvidia’s bullsh*t. No matter what they do and how CLEARLY wrong they are, there is always someone out there to defend a clear-cut loss on their part. Man, how do you people do that? How do you type and hold Nvidia’s d*ck in your hand at the same time, while so efficiently stroking its shaft & cradling the balls?…

SoOOOO, like I said and everyone says, THREE POINT FUQIN FIVE GIGABYTES, NOT 4. It was even hard-coded into the chip’s SKU, & Nvidia went through the hassle of trying to cover it up with a soft hardware update. Ahhh, you know what, FUQ IT MAN! You’re right. The 970 even had a cup holder with an additional 54 GB of VRAM. It also has its own CPU on board, with liquid nitrogen dispensed cooling. Fuq man, why do I even bother. FUQ THIS SH*T!

I own a 970 and am still using it… The Rainbow Six HD texture pack uses my full 4GB… I’ve been following the Nvidia court hearings since day one; this is the official situation with the 970… and I am not defending Nvidia or ATI… I’ve used both for many years, since the ’80s and ’90s… ATI had its fair share of hardware issues, like Nvidia, but… you do know that ATI had problems, right? Nvidia and ATI both pulled some shady moves over the years… I’ve read the comments you post; you get too offensive and rage over some comments… they might be wrong or right, but… you do not need to rage out over someone’s bad opinion… I do understand what you are saying about people being fanboys, but… we are both on the same page, and maybe we both grew up in the same era, but before you chew me out, remember that I am a very knowledgeable warrior of life… I gave your reply a thumbs down because of your attitude and how you talk to people… it is starting to make me wonder if you are from the new age.

Official court statement from Nvidia: The GeForce GTX 970 is equipped with 4GB of dedicated graphics memory. However, the 970 has a different configuration of SMs than the 980, and fewer crossbar resources to the memory system. To optimally manage memory traffic in this configuration, we segment graphics memory into a 3.5GB section and a 0.5GB section.

They have both lost the crown… ATI is doing so much better since the new CEO.

“Nvidia beat AMD ever since 8800GTX.”

How about the Radeon HD 5870? It kept AMD’s single-GPU performance crown over Nvidia for a full six months.

The Nvidia 8000 series came out in 2006, while the ATI 5000 series came out in 2009… The Nvidia 400 series came out 6 months later, in 2010, took the win from the 5000 series, and kept the crown from ATI for a while after… Then ATI took back the lead; it’s like that with every generation.

I remember AMD kicking Nvidia’s a*s in 2008 with the 4000 series: the 4870 used GDDR5 for the first time and had a 1GB model, while Nvidia’s 9000 and 200 series still used GDDR3.

I also remember the AMD 6000 series being great, and the 7970 trading blows with the GTX 680, even beating it, and aging better thanks to having more VRAM… The R9 series had a lot of VRAM, and AMD even used that to laugh at Nvidia over the 3.5GB GTX 970 scandal. The RX 480 and RX 580 are great mid-range GPUs… AMD was also the first to adopt HBM memory, with the R9 Fury and Fury X.

So maybe they are rarely on top in terms of high-end GPUs, but in the mid range and budget segments they are great, and in those last 13 years (since the 8800 GTX), and even before that, they got many wins over Nvidia.

I actually used a Sapphire Radeon 4870 back then, the 1GB model. I went with Radeon over the GTX 280.

It was a pretty bad a** card. Sapphire models were always the best-cooled Radeons, and it was certainly a good brand. Not sure how they are these days, but if I go with AMD again for my next card, I will certainly be looking into them again.

I used the 4870 until the GTX 580. I still have it in my drawer of old components; I’ll likely use it in a retro build someday.

The 48xx was the last series to have remnants of ATI design in it. Not surprising it was still a great card.

I had the HD 4870/4890… In 2008 Nvidia released the GT 200 series, which did put up a good fight against the HD 4000 series… I switched to the 5000 series but had really bad luck; that is when I crossed over to the Nvidia side with the GTX 480s. They ran fast but very hot… even much hotter than the ATI HD 4000 series.

Not true, the R9 200 series and HD 7000 series were good; later Nvidia was better (had the strongest GPU), well, until now…..

R9 290 vs GTX 770

Same MSRP.

Yes, same here

True. I always buy Nvidia due to my G-Sync monitor; I couldn’t be without it now, even though I’ve tried.

In my experience, FreeSync 1 and 2 are garbage compared to G-Sync.

FreeSync is not garbage

I use it a lot

It is great, actually

True, I was too harsh there. I’ve used both extensively and, as stated, in my opinion G-Sync is far superior.

I haven’t used G-Sync, but I’m content with FreeSync.

Some are brand loyal (I don’t really get why), while others pick what’s best for their price bracket. Nvidia had the best cards in my bracket (the enthusiast space) for a long time, but it seems AMD finally has a contender in that space again. That competition is very welcome, as Nvidia could choose whatever price they liked and get away with it due to the lack of competition.

It will be interesting to see how the new AMD cards look after more independent reviews. We’re still missing quite a few pieces of the puzzle: how is RT performance, how does the cache behave in more VRAM-heavy games (i.e. its hit rate), and will the DirectStorage support have hardware offload for, say, decompression, to name a few. Depending on how it does there, the new gaming rig could very well be team red this time around.

For me it’s warranty, drivers, and support… Nvidia wins this for me thanks to EVGA… but I want to go back to ATI and give them a try… I just prefer the rendering technology path Nvidia provides in the render vectors.

It makes sense for people who will buy a Zen 3 Ryzen to buy an RDNA 2 card.

I agree, but… it’s more 50/50 if it helps bring down the cost of the brand you like, but… for me ATI is the clear smarter choice, and now I might just go with it… I am mad at Nvidia for the greed and strategy.

There is no competition….. when Nvidia can’t sell you the card

I want to switch back to ATI; I miss them, but I left due to problems and needs, not because of being a fanboy… I am also not too happy with Nvidia’s greed and strategy.

Excellent news!

All of them with 16GB of VRAM; this is what I love about AMD, they don’t skimp on VRAM.

Agreed, but it would be cool if there were an 8GB 6800 at the same price as the 3070.

I just think it would be funny if they had a 15% faster gpu at the same price.

Won’t happen of course.

They could have lowered it to 12GB to try to match the 3070’s price or undercut it; I wonder why they didn’t do that.

Nice numbers, but I will still wait for third-party benchmarks as well. Still, it is promising.

DLSS (2.0 and 2.1, not 1.0) is pretty great, but people forget to mention that it doesn’t work on the fly in all games and needs the developers to send samples so Nvidia can generate a file specific to that game. Also, even with DLSS, when using ray tracing there are frame drops below 60 in Control, for example, caused by the stress of RT. RT, IMHO, is still not worth it. Even developers are only now beginning to use it, and just for reflections and marketing. I have an RTX card and have finished/tested games with RTX enabled, and even so I will gladly buy a 6800 when possible. Though I am confident the future 6700 will/can be an even better deal.

That’s the only thing that’s not great about DLSS.

Well, you don’t say which RTX card you are using, but I suppose it’s a 2080 Ti. Go to Dead Letters or Communications in the Executive Sector and wait for enemies to spawn. Fight using all the powers maxed out at the same time (actually, just the one where you grab three rocks at once will do). Do this using the 1080p mode (i.e. Performance mode) at High settings with all RT features enabled. I am pretty sure it will drop too. Those benchmarks that just sprint and shoot like a normal police officer don’t stress anything. Quality mode is 1440p internal, btw.

https://youtu.be/eWeIeZof9cM?t=52

I’ll wait for third-party reviews and thermals.

Very nice, but I just don’t see myself ever buying another Radeon.

These cards would be a game changer if Nv didn’t have DLSS :/

They are working on it, and there is a good chance it’s going to be a more widely adopted tech if every developer uses it for consoles.

It’s better like this than releasing early and sucking like DLSS 1.

I just hope it’s gonna be good enough when it comes.

Benchmarks done on an all-AMD system. I’ll wait for the benchmarks with Intel CPUs.

Waiting for 150W total power + 12GB GDDR6… so far Nvidia has the best “sane” GPU (3070).

For a homeowner who pays electricity bills, like me, efficiency is king. Now, if you are a kid living in your mom’s basement, by all means go crazy!

Or you live in a country where electricity is cheap.

Have to say, DAMN, AMD HAS COME A LONG WAY. Just missing independent reviews with more titles (and some with RT, please) and a complete feature set (for instance, they mentioned DirectStorage support, but at what capacity? Will it feature hardware-based decompression, or will the CPU still have to do that?), and what about a DLSS alternative (TAA blur is BAD!)?

I might end up with team red this round, and it’s been a long time coming (blue + green mostly due to the lack of competition in the enthusiast space… until now, it would seem!)


Jensen Huang: “How did AMD do that” ? “They weren’t supposed to be able to do that.”

Yep, I am also surprised they managed to catch up this fast.

Thanks to the CEO of ATI/AMD; she is also related to the CEO of Nvidia.

F**K YEAH!

This is sooo satisfying to see… real competition to Nvidia’s bullying in the GPU market!

2020 might actually be one of the best years for AMD, contrary to what it has been for most of the civilized world!

The 6900 XT and 3090 are both vanity products, horrible value. The pricing of the 6800 is really odd compared to the 6800 XT; it’s almost like they don’t want to sell any. At $500 it would sell like crack wherever Hunter Biden is partying.

Their hesitation to mention RT performance and DLSS has me sceptical. I’ll wait for open-world benchmarks to see how their RT performance goes and whether they’ll even bother with their first-gen DLSS (because it will be first gen compared to Nvidia’s approaching third).

That being said, I’m still likely to wait for a 3080/Ti model for my purchase.

Where are all the people who said AMD would only have a 3070 competitor, now that they have 3 cards all faster than the 3070?

AMD is offering a good deal; I just wish the 6800 were $500 and the XT $100 cheaper than the 3080 🙁

They could cut the VRAM to 8GB and cut the price to $500 if they wanted.

But as it is now, $500 just isn’t realistic. It’s a considerably faster card with double the VRAM.

I’d prefer to wait for Intel’s discrete Xe GPU to see tougher competition = lower prices 🙂