It appears that we have some preliminary gaming benchmarks of the upcoming RTX 3090, Nvidia's Titan-class flagship GPU. TecLab has once again broken the embargo, following the earlier RTX 3080 review leak.

The Chinese Bilibili channel @TecLab has just leaked some alleged benchmark results of the GeForce RTX 3090 GPU. The leak includes both synthetic and gaming benchmark scores, but we will focus only on the gaming part. Before we continue, do take these results and fps scores with a grain of salt.

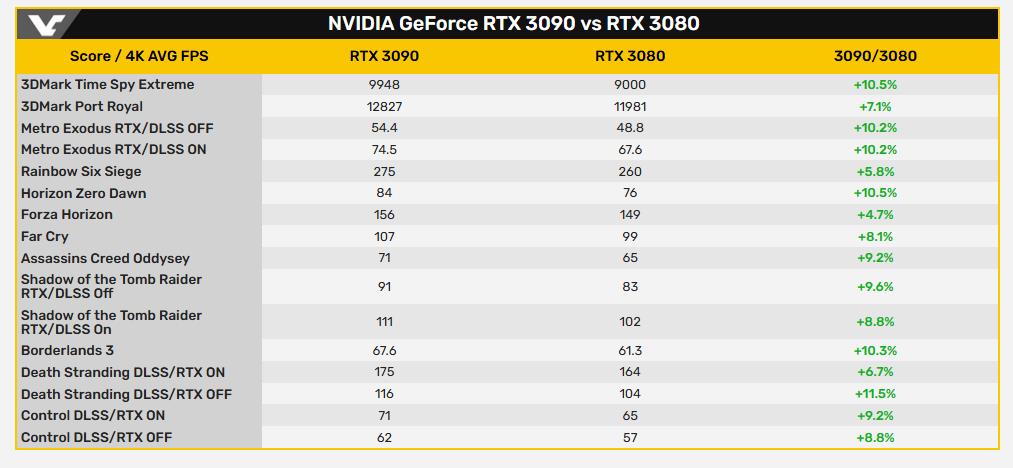

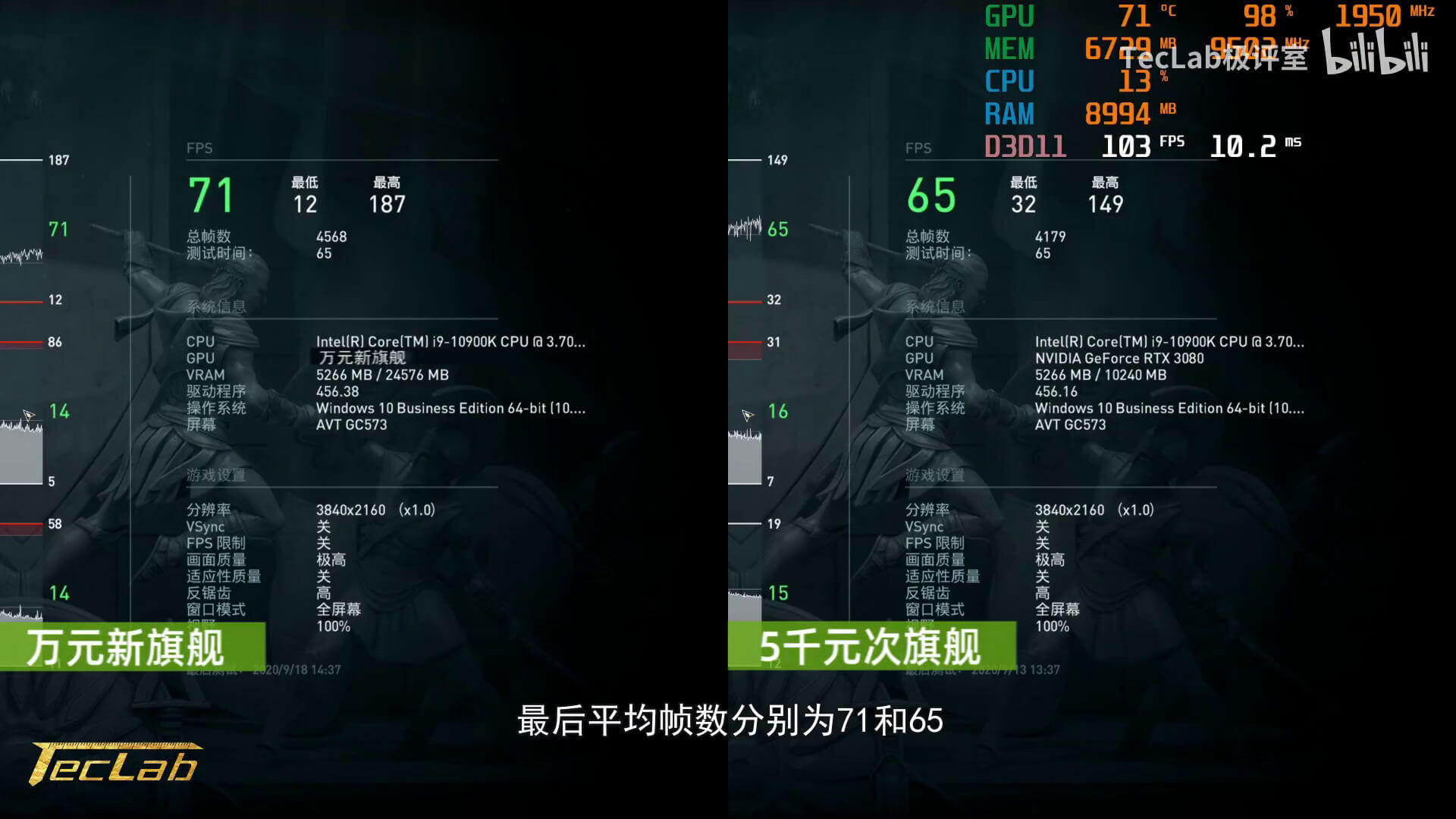

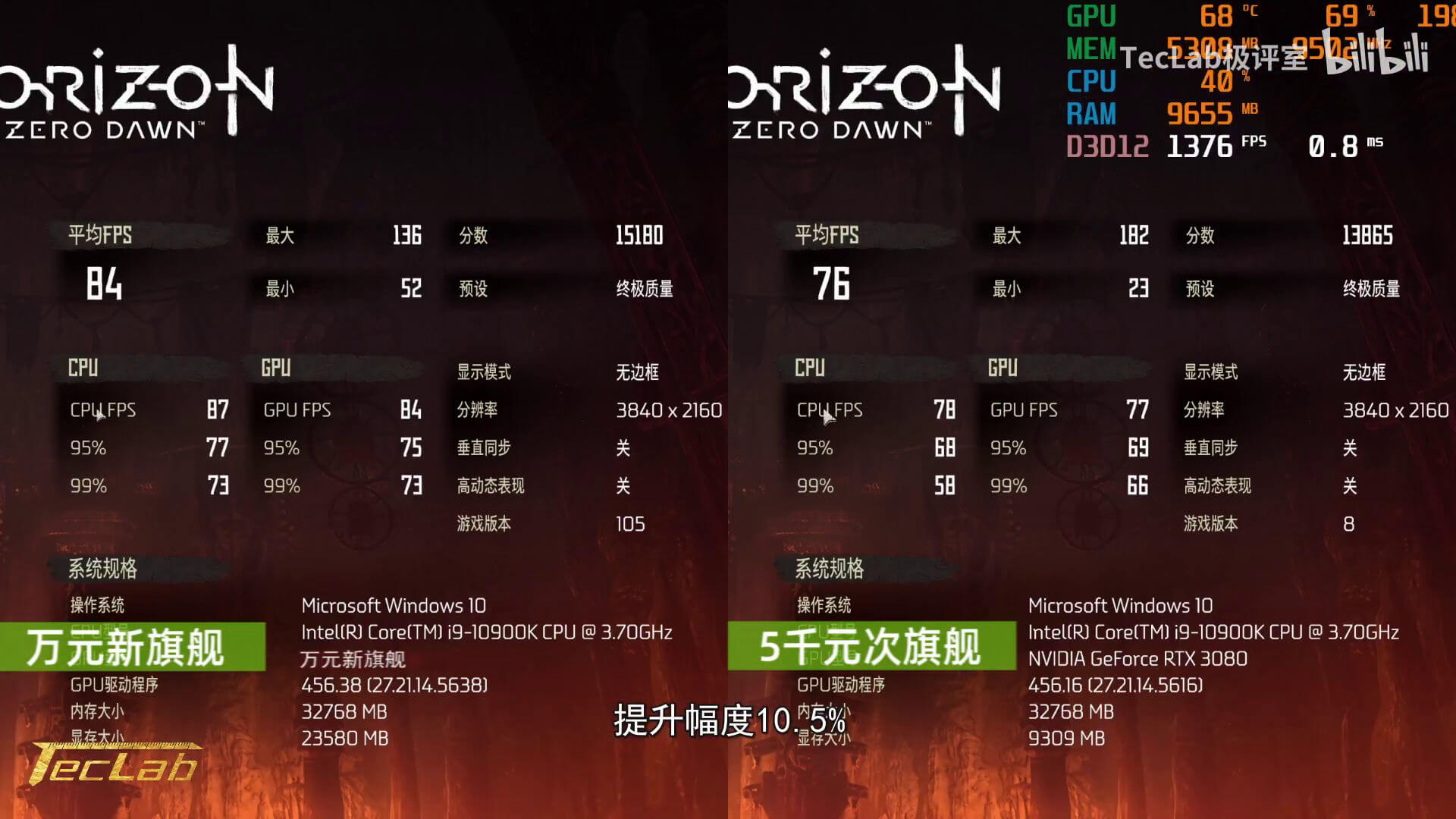

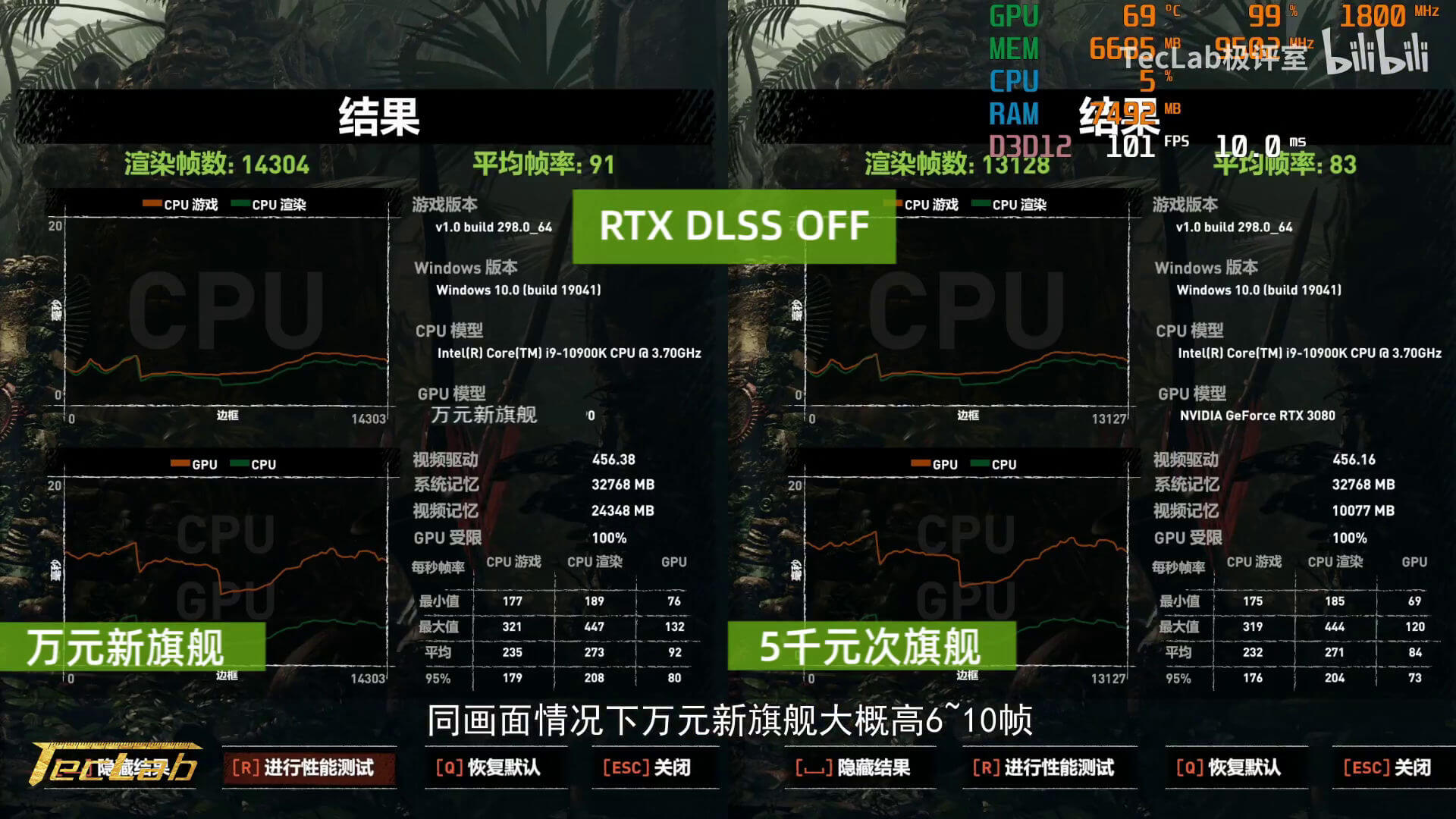

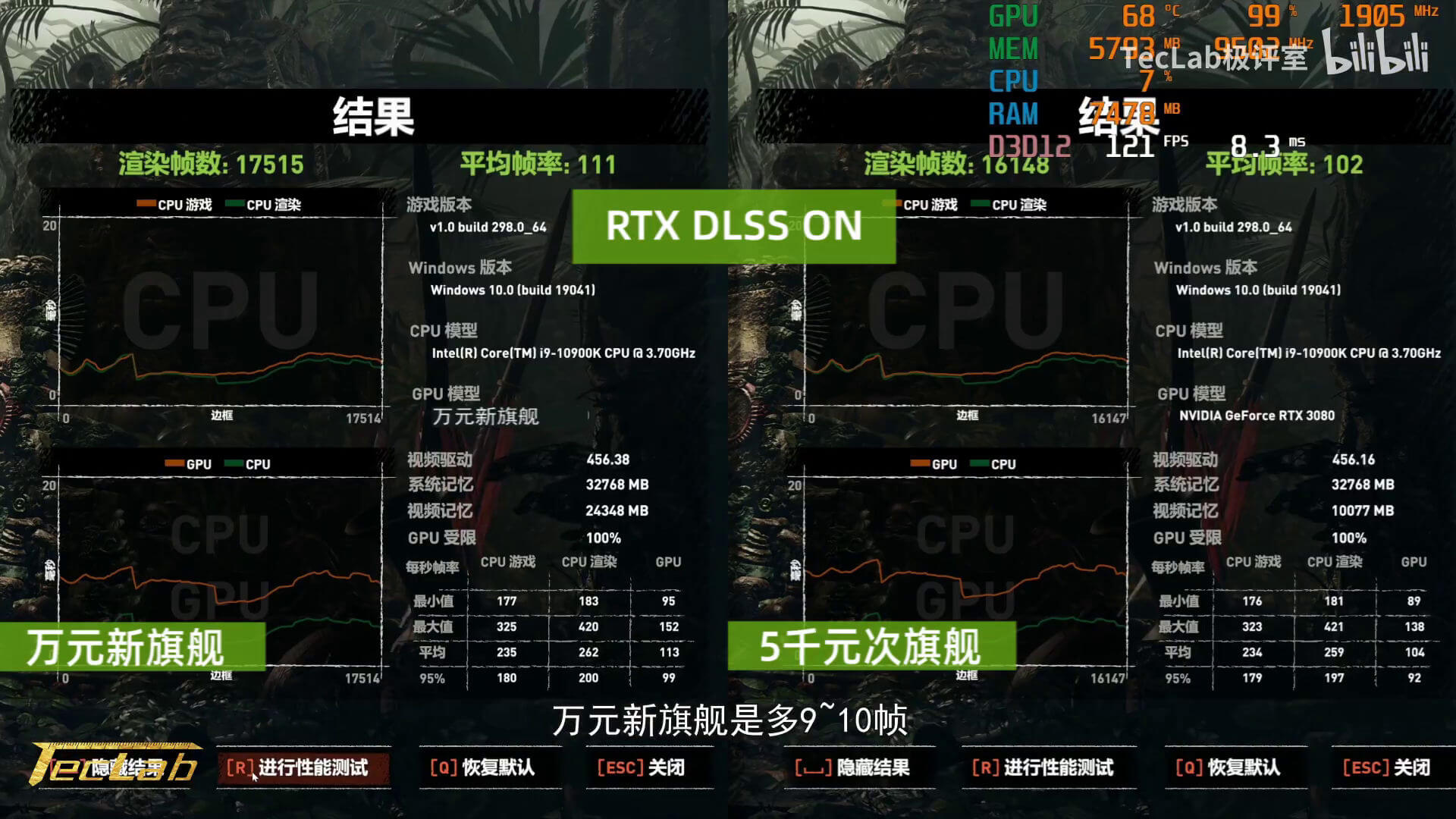

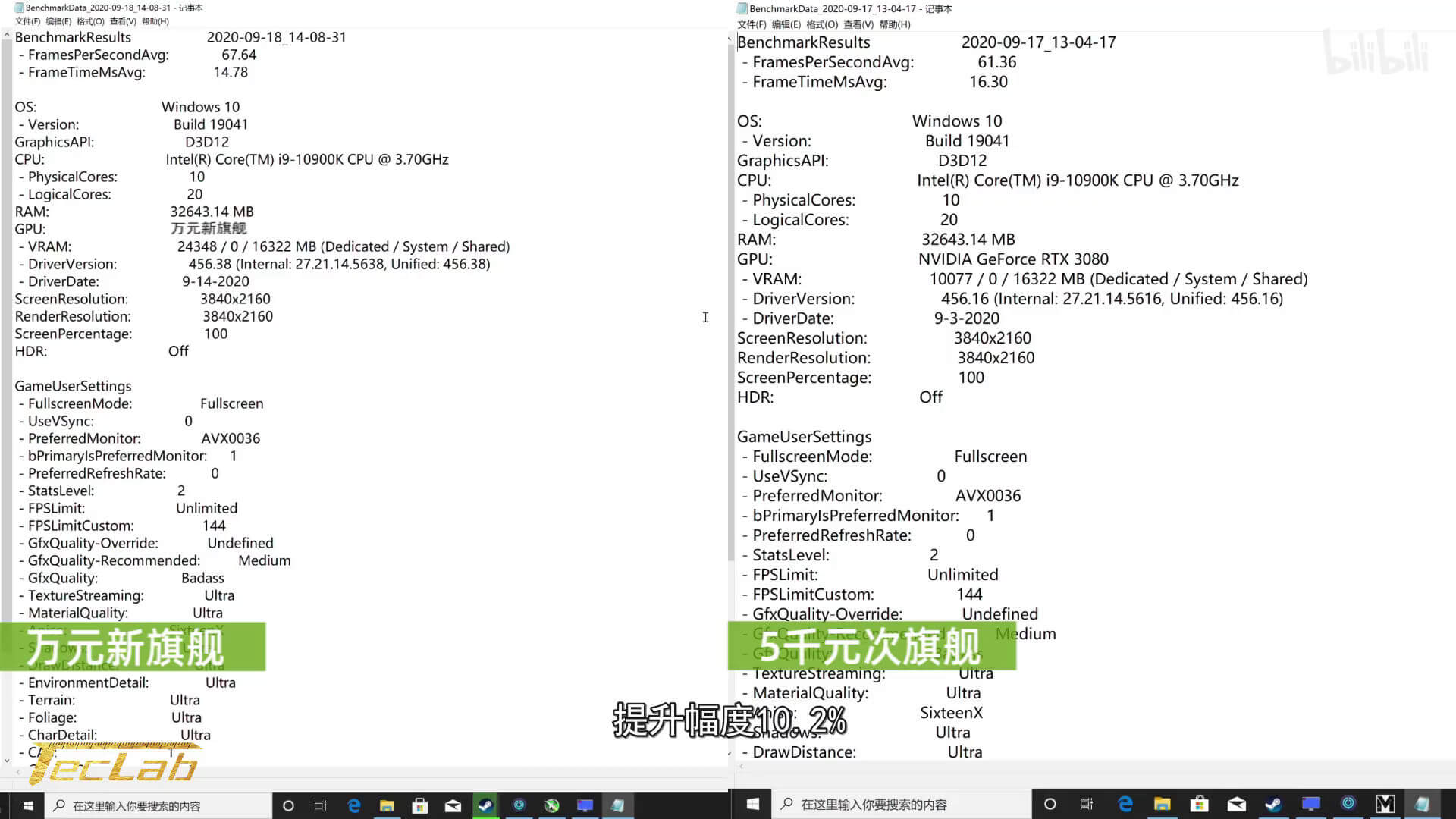

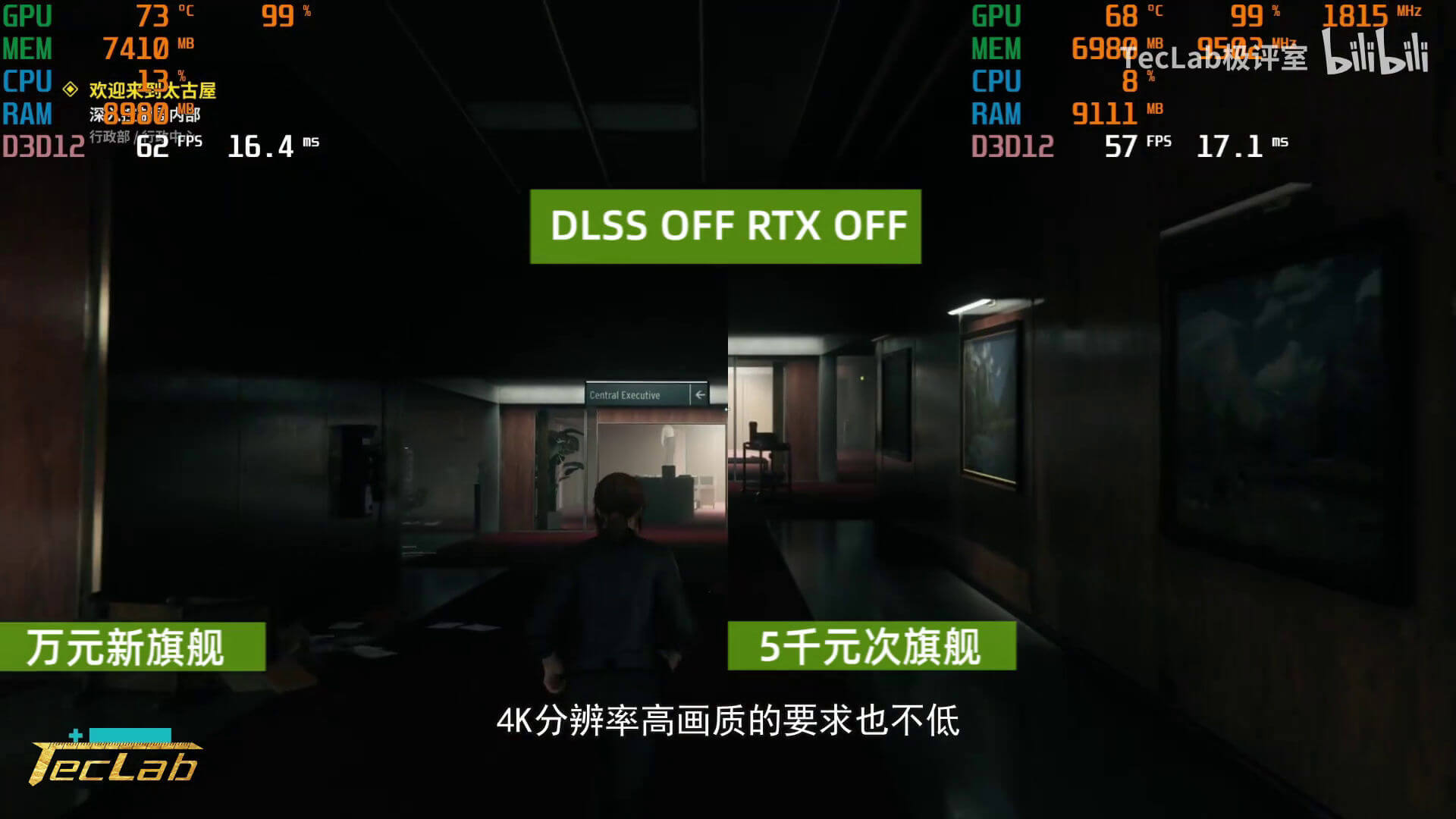

The test was done on the same system as before, using an Intel Core i9-10900K CPU with DDR4 memory clocked at 4133 MHz. The following games were tested: Far Cry 5, Borderlands 3, Horizon Zero Dawn, Assassin’s Creed Odyssey, Forza Horizon 4, Shadow of the Tomb Raider (RTX, DLSS On/Off), Control (DLSS On/Off), and lastly Death Stranding (DLSS On/Off). All of these are in-game benchmarks run at 4K.

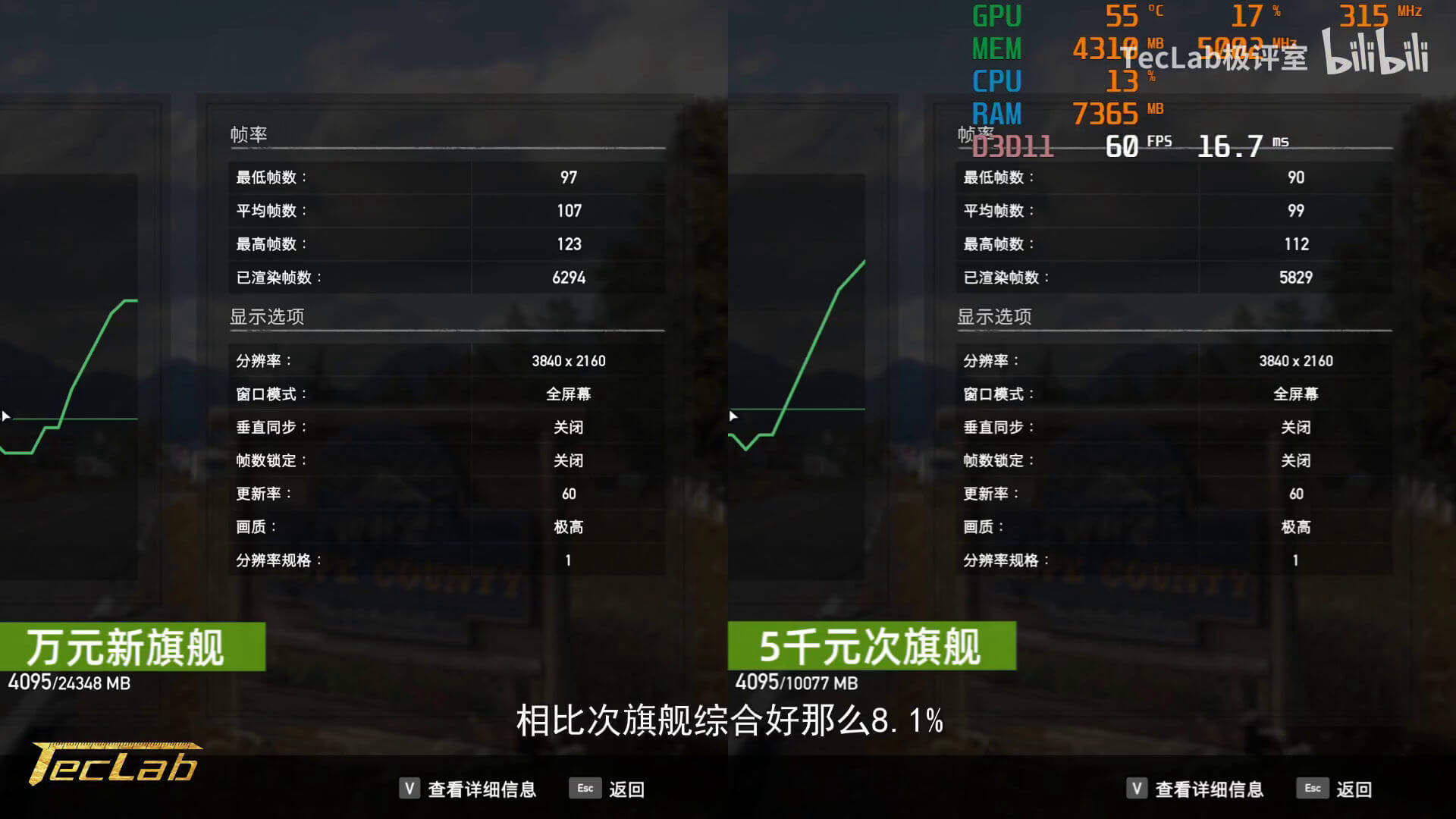

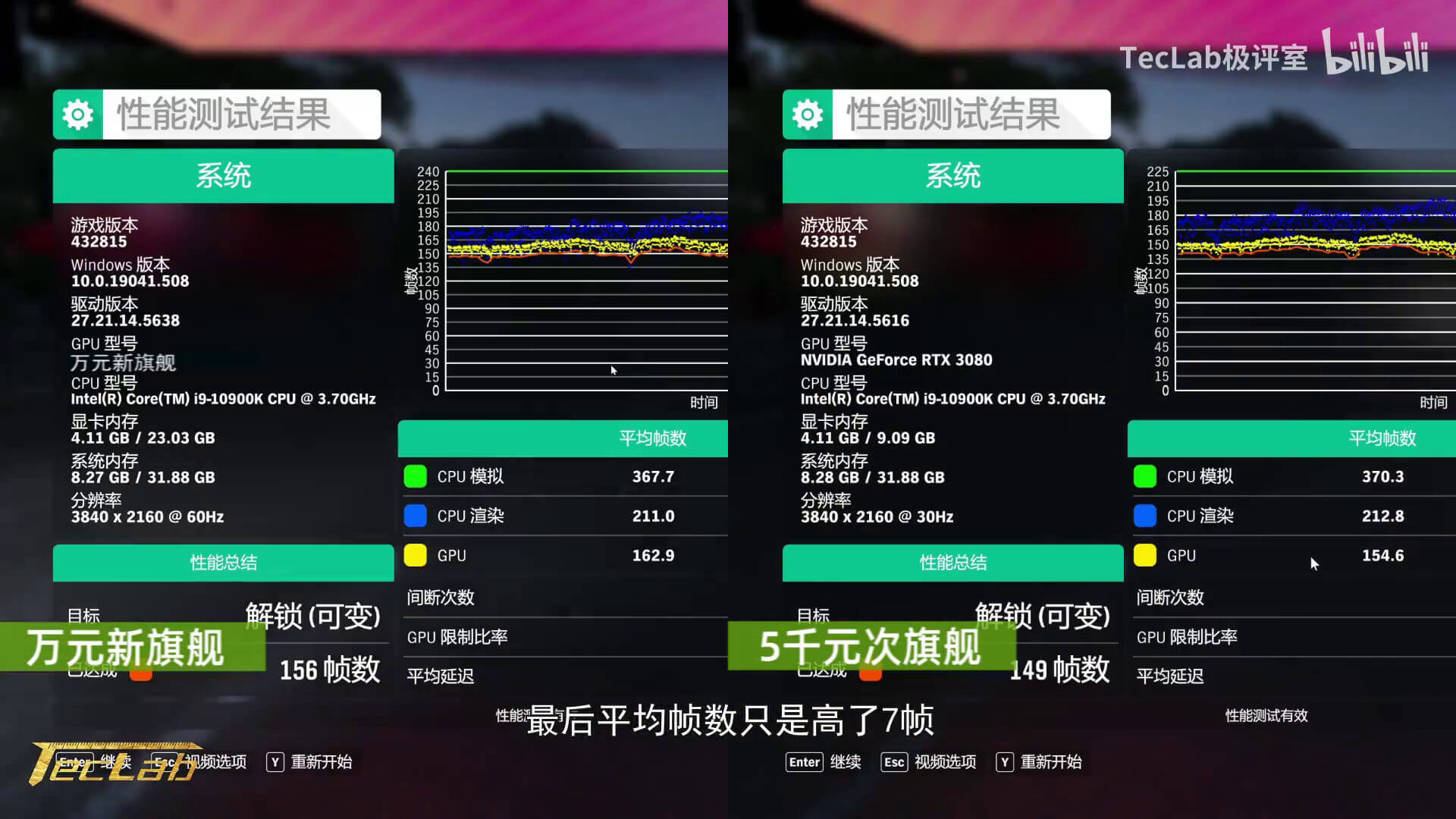

In the system specs above, the line just before the RTX 3080 translates to “10 thousand Yuan Flagship Display Card”, which refers to the RTX 3090. The reviewer never calls the RTX 3090 by its actual name; instead, the leaker compares a ‘10 thousand Yuan Flagship’ GPU against a ‘5 thousand Yuan Flagship’ GPU, which are the 1500 USD RTX 3090 and the 700 USD RTX 3080, respectively.

Seriously, why can’t this guy just show some solid proof, like a GPU-Z screenshot, to show that he really owns an RTX 3090? Anyway, overall it appears that the RTX 3090 is only about 10% faster than the RTX 3080, but these benchmarks might have been run on early GPU drivers, so the final results may vary. Just 10% faster seems a bit off, at least in my opinion.
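As a rough sanity check on why that 10% figure looks off, here is a minimal Python sketch that combines the roughly 10% uplift from this leak with the 1500 USD and 700 USD prices mentioned above. The function names are my own, purely for illustration, and the inputs are only the unverified figures quoted in this article, so treat the output the same way as the leak itself.

```python
def relative_uplift(fps_new: float, fps_old: float) -> float:
    """Return how much faster fps_new is than fps_old, as a fraction (0.10 means 10%)."""
    if fps_old <= 0:
        raise ValueError("fps_old must be positive")
    return fps_new / fps_old - 1.0


def perf_per_dollar_ratio(uplift: float, price_new: float, price_old: float) -> float:
    """Return the new card's performance per dollar relative to the old card's.

    A value below 1.0 means the new card delivers less performance per dollar.
    """
    if price_new <= 0 or price_old <= 0:
        raise ValueError("prices must be positive")
    return (1.0 + uplift) / (price_new / price_old)


# Figures quoted in this article (alleged, not confirmed):
# about 10% faster, 1500 USD (RTX 3090) vs 700 USD (RTX 3080).
ratio = perf_per_dollar_ratio(0.10, 1500, 700)
print(f"{ratio:.2f}")  # prints 0.51
```

If the leaked numbers hold, the RTX 3090 would offer roughly half the performance per dollar of the RTX 3080 in these games. `relative_uplift` can be used to get the uplift from any pair of fps scores read off the charts.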

Below you can find the charts compiled by Videocardz, to give you a better idea of the performance difference between these two flagship GPUs. Again, do take these results and fps scores with a grain of salt. The small performance difference could also be due to poor scaling.

Far Cry 5 / Forza Horizon 4:

Assassin’s Creed Odyssey / Horizon Zero Dawn:

Shadow of the Tomb Raider (RTX DLSS ON / OFF):

Borderlands 3:

Death Stranding (DLSS OFF / ON; the pictures here are tagged incorrectly):

Control (DLSS ON / OFF):

Hello, my name is NICK Richardson. I’ve been an avid PC and tech fan since the good old days of RIVA TNT2 and 3DFX Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

MUSIC has always been my passion and my roots, but I started gaming “casually” when I was young on Nvidia’s GeForce3 series of cards. I’m by no means a hardcore gamer, but I just love anything related to PCs, games, and technology in general. I’ve been involved with many indie Metal bands worldwide, and have helped them promote their albums to record labels. I’m a very broad-minded, down-to-earth guy. MUSIC is my inner expression, and soul.
