Igor’s Lab, a generally reliable source, previously predicted that Nvidia is planning to release at least three high-end variants in the RTX 3000 series of its Ampere lineup: the RTX 3080, RTX 3080 (Ti/Super) and RTX 3090 (Ti/Super). Twitter user kopite7kimi has also shared some rumored specs. All three variants are said to be based on the same PG132 board/PCB, and they are different bins of the same “GA102” chip.

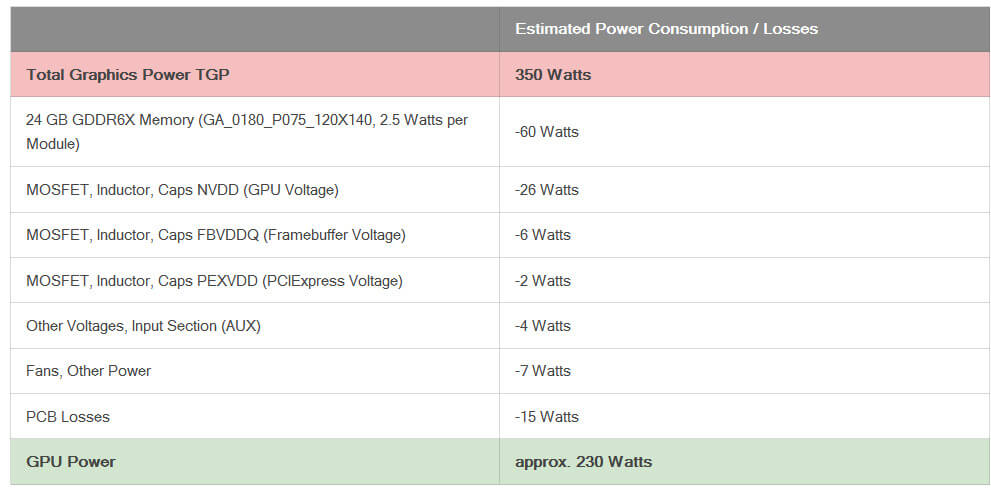

Igor’s Lab has once again highlighted that the flagship RTX 3090 GPU will feature a Total Graphics Power (TGP) of 350 Watts. Igor has also shared a complete breakdown of the estimated total power consumption and losses of this flagship Ampere card.

According to Igor, the RTX 3090 (or whatever name Nvidia goes for) will have a TGP of 350 Watts and a GPU core TDP of 230 Watts. This is a huge increase in power consumption, and it looks like Nvidia is trying to squeeze every ounce of performance from the GA102 chip and the PCB. Shifting to a smaller fabrication node (rumored to be 7nm) should help lower the overall power consumption of Ampere cards, but it looks like Nvidia might trade that efficiency for raw GPU horsepower.

That’s a “total graphics power” of 350 Watts. If we compare this value with the previous-gen flagship RTX 2080 Ti, which had a TGP of 260 Watts on some of the custom GPU variants, we are looking at an increase of 90 Watts, or roughly 35% higher overall power consumption.

Anyway, let us look at the full breakdown of the TGP. The RTX 3090’s GA102 GPU core alone will draw roughly 230 watts of power, with the 24GB of memory drawing around 60 watts. The MOSFETs, inductors and capacitors will consume a further 30-34 watts, and 7 watts goes to the fan. We can expect PCB losses to be in the range of 15 watts, and the input section/AUX consumption should hover around 4 watts. That adds up to a total graphics power of roughly 350 Watts, and this is for the reference Founders Edition SKU. Expect custom AIB cards to go even higher, depending on the cooling and PCB.
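To sanity-check the math, here is a minimal Python sketch that simply adds up the rumored figures quoted above. The names `POWER_BUDGET_W`, `total_range` and `percent_increase` are made up for illustration, and the numbers are Igor's estimates as reported here, not measurements.

```python
# Rumored RTX 3090 power budget in watts, as quoted in the article.
# Each entry is a (low, high) estimate; single figures repeat the value.
POWER_BUDGET_W = {
    "GPU core (GA102)": (230, 230),
    "24GB memory": (60, 60),
    "MOSFETs, inductors, caps": (30, 34),
    "Fan": (7, 7),
    "PCB losses": (15, 15),
    "Input section / AUX": (4, 4),
}


def total_range(budget):
    """Return the (low, high) sum of all components in watts."""
    low = sum(lo for lo, _ in budget.values())
    high = sum(hi for _, hi in budget.values())
    return low, high


def percent_increase(new, old):
    """Return the relative increase from old to new, in percent."""
    return (new - old) / old * 100


low, high = total_range(POWER_BUDGET_W)
print(f"Estimated TGP: {low}-{high} W")
# 260 W is the RTX 2080 Ti figure used for comparison in the article.
print(f"Increase over 260 W: {percent_increase(350, 260):.1f}%")
```

The components sum to 346-350 W, so the 350 W headline figure sits at the top of the estimated range, with the spread coming entirely from the 30-34 W estimate for the power delivery components.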

Igor’s Lab claims all three GeForce Ampere Founders Edition cards will be based on the same PG132 circuit board, with the main differences being the VRAM and board power. With a rumored die size of 700 mm² and a transistor count of 45 billion, the GA102 is indeed a very big chip, which might also require some serious cooling, at least for the high-end flagship models.

The flagship RTX 3090 SKU will reportedly end up with 24 GB of double-sided GDDR6X VRAM on a 384-bit bus, and will have a 350W TGP, as per the above speculation. We don’t know for sure whether the RTX 3090 will be a direct replacement for the TITAN GPU. Sure, this GPU supports NVLink and has double the VRAM, but this doesn’t confirm whether Nvidia plans to drop the TITAN nomenclature. That said, the current-gen TITAN already carries the RTX branding.

Hello, my name is NICK Richardson. I’ve been an avid PC and tech fan since the good old days of RIVA TNT2 and 3DFX Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

MUSIC has always been my passion/roots, but I started gaming “casually” when I was young on Nvidia’s GeForce3 series of cards. I’m by no means a hardcore gamer, but I just love stuff related to the PC, games, and technology in general. I’ve been involved with many indie Metal bands worldwide, and have helped them promote their albums to record labels. I’m a very broad-minded, down-to-earth guy. MUSIC is my inner expression, and soul.
