NVIDIA has announced that PhysX is now open source, meaning that developers can use its latest version to GPU-accelerate the PhysX effects on graphics cards from AMD (and in the future from Intel).

According to NVIDIA, the PhysX SDK is a scalable multi-platform game physics solution supporting a wide range of devices, from smartphones to high-end multicore CPUs and GPUs. PhysX has already been integrated into some of the most popular game engines, including Unreal Engine (versions 3 and 4) and Unity3D.

Some of you may not have realized that PhysX has been used in a lot of games on the CPU side. These PhysX effects have been running in various games and were calculated by the CPU instead of the GPU. However, now that PhysX is open source, developers can use GPUs to improve performance and offer better physics effects.

To be honest, I don’t expect older GPU-accelerated PhysX games to work on AMD’s GPUs, as only the latest PhysX version has been open sourced. In theory, games using this latest version will not be locked to NVIDIA’s GPUs.

Here is hoping that we see more GPU-accelerated physics now that PhysX will work on all graphics cards!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved (and still loves) the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles, mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”


Good.

It’s a good move, but I’ve always thought PhysX effects look like crap.

I’m pretty sure “regular” things like ragdolls and rigid body physics can be powered by PhysX as well (and are in many games).

I read this news earlier; it was posted on NVIDIA’s blog.

https://uploads.disquscdn.com/images/aeaf134f836537926475ac37385fcaed4844a0cb75570a847822f9322a7fc827.gif

This is what they had to say.

PhysX will now be the only free, open-source physics solution that takes advantage of GPU acceleration and can handle large virtual environments. It’s available today as open source under the simple BSD-3 license. It solves some challenges.

In AI, researchers need synthetic data — artificial representations of the real world — to train data-hungry neural networks.

In robotics, researchers need to train robotic minds in environments that work like the real one.

For self-driving cars, PhysX allows vehicles to drive for millions of miles in simulators that duplicate real-world conditions.

In game development, canned animation doesn’t look organic and is time-consuming to produce at a polished level.

In high performance computing, physics simulations are being done on ever more powerful machines with ever greater levels of fidelity.

But the keyword here is “hardware-accelerated”. UE4 and Unity run physics mostly on the CPU, unless the developer goes out of their way to add features that require GPU acceleration as well.

UE4 is very GPU-based. By default it uses PhysX for its physics system, and all the post-processing and normal rendering is generally GPU-based, unless you go out of your way to bind things to the CPU. Source: I use UE4 daily.

I’m a simple man: I see a Star Wars GIF/meme, I give a thumbs up.

Huh, didn’t see this one coming, but cool of Nvidia. Didn’t think I’d be saying that anytime soon.

Why now? They paid a handsome sum to acquire this technology back in the day.

Did they really pay a lot of money to acquire Ageia? Honestly, I don’t think so, since it never ended up bogging down the company (the way ATI did to AMD).

It is likely because it simply isn’t making them any money. If it isn’t making you any money, there isn’t a reason to hoard it.

Here’s hoping they continue the trend and dump G-Sync for FreeSync.

These changes are great for the consumer.

It sure didn’t. At least, it never made me decide to stick with GeForce, even though it’s now a problem to run games like ‘Mafia II’ and some of the “Arkham” games with PhysX turned on, even if you have a mighty AMD card.

Also, not many developers ended up using GPU-based PhysX, so there’s also less reason to necessarily go with a GeForce.

Good thing AMD is coming up with its own ray-tracing support soon, so that won’t stay exclusive for long either.

As for the sync technologies, I don’t know what either company wants or will do, but I know that more and more monitors are getting both built in. Pretty funny that THEY are the ones deciding they won’t put up with the exclusivity crap. XD

Nvidia released the source code for something? Has hell frozen over? Man, they must be really desperate for some good press.

Part of PhysX has been open since 2015; now they’ve just released the full package. And in the professional world, Nvidia has spearheaded several open source projects as well.

GameWorks has been open for years, just not to the level that would qualify it as open source. Also, being open source is not going to solve the adoption problem. Bullet, for example, has been open source for almost 10 years, GPU-accelerated features included, and yet not a single game has ever used those GPU-accelerated features (Bullet’s GPU acceleration was pushed as a direct counter to Nvidia’s proprietary PhysX back in 2009).

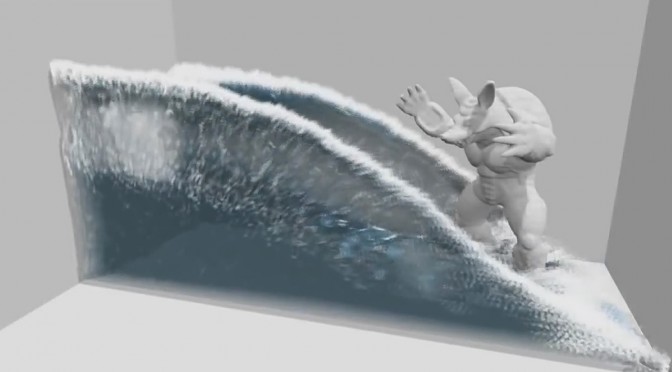

That water in your header is a GameWorks feature called FleX. It is (sort of) part of PhysX, but it isn’t open sourced.

A GPU can do it a lot faster, reducing the strain on the CPU.

It will fix UrinaEngine4’s performance issues in the future.

A CPU will run most physics calculations many times slower than something designed to run on a GPU. Battlefield V, for example, scatters GPU-generated particles and physics all over the screen. The same effect on a CPU would destroy the FPS.

It’s kind of like trying to run ray tracing on a CPU. It can be done, but it is very, very slow.

So will Arkham Knight now have all the Nvidia effects on AMD GPUs???

I think GeForce GPUs had dedicated processors, much like the RTX cards now have dedicated processors for ray tracing. I could be wrong, but I think that’s why you get horrible performance trying to run older PhysX-supported games with an AMD GPU. I know it offloads PhysX to the CPU, but the reason it does that is probably that there’s nothing physically on the GPU to calculate PhysX.