Saber Interactive has released its four-player co-op third-person shooter, inspired by Paramount Pictures’ blockbuster film World War Z. The game is powered by the Swarm Engine, which can push huge hordes of zombies on screen, so it’s time to benchmark it and see how it performs on the PC platform.

For this PC Performance Analysis, we used an Intel i7 4930K (overclocked at 4.2GHz) with 16GB of DDR3 RAM at 2133MHz, AMD’s Radeon RX580 and RX Vega 64, NVIDIA’s RTX 2080Ti, GTX980Ti and GTX690, Windows 10 64-bit, GeForce driver 425.31 and Radeon Software Adrenalin 2019 Edition 19.4.2. NVIDIA has not included an SLI profile for this title in its latest drivers, meaning that our GTX690 behaved like a single GTX680.

Saber Interactive has included only a few graphics settings to tweak. PC gamers can adjust the quality of Details, Antialiasing, Post Processing, Shadows, Lighting, Effects, Textures and Texture Filtering. Moreover, PC gamers can choose between the DirectX 11 and Vulkan APIs. As we’ve already reported, the Vulkan API currently suffers from major stuttering issues, which is why we decided to benchmark the game using DX11.

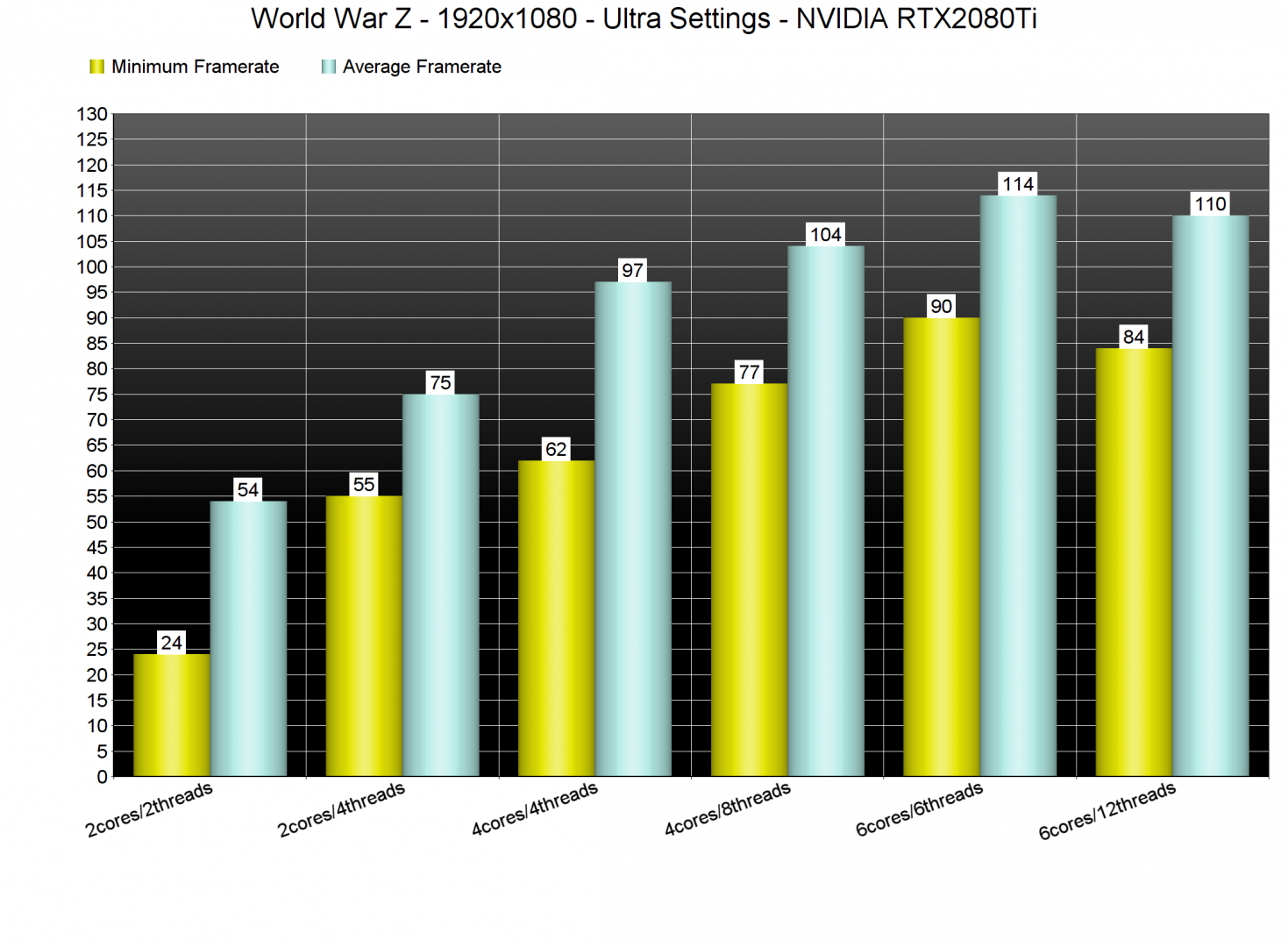

In order to find out how the game performs on a variety of CPUs, we simulated a dual-core and a quad-core CPU, and we are happy to report that even our simulated dual-core system was able to provide a playable experience at 1080p on Ultra settings. Without Hyper-Threading, our simulated dual-core system pushed a minimum of 24fps and an average of 54fps, with occasional stutters. With Hyper-Threading enabled, our simulated dual-core system offered a minimum of 55fps and an average of 75fps.

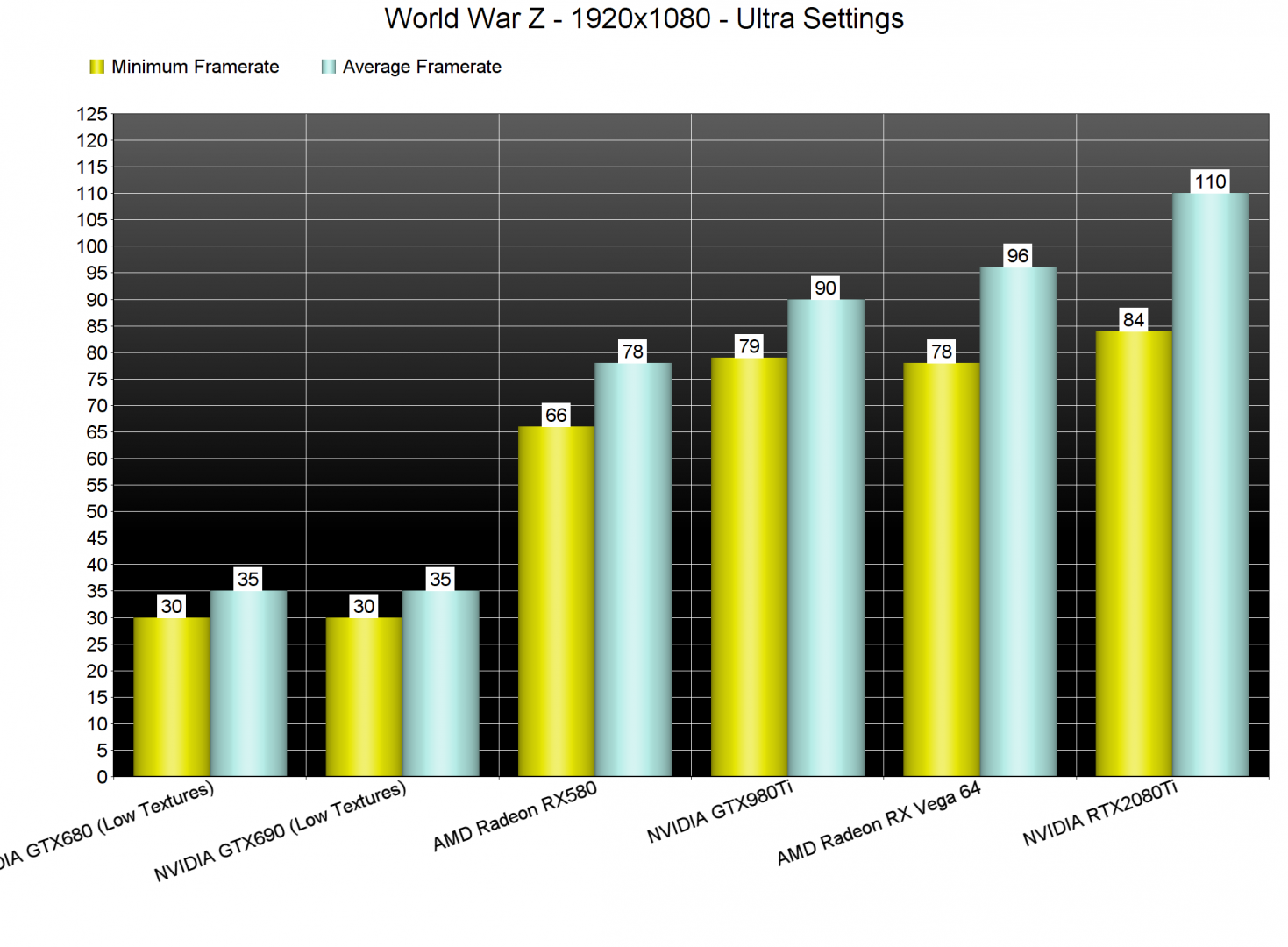

World War Z also does not require a high-end GPU in order to be enjoyed. At 1080p/Ultra, our AMD Radeon RX580 and RX Vega 64, as well as our NVIDIA GTX980Ti and RTX2080Ti, were able to offer a smooth gaming experience. Not only that, but our dated GTX680 (with Low Textures, mind you) was able to offer a smoother experience than current-gen consoles. The game is currently locked at 30fps on consoles, while our GTX680 ran it with a minimum of 30fps and an average of 35fps. Also note that we were memory limited at 1080p on both our AMD Radeon RX Vega 64 and our NVIDIA RTX2080Ti.

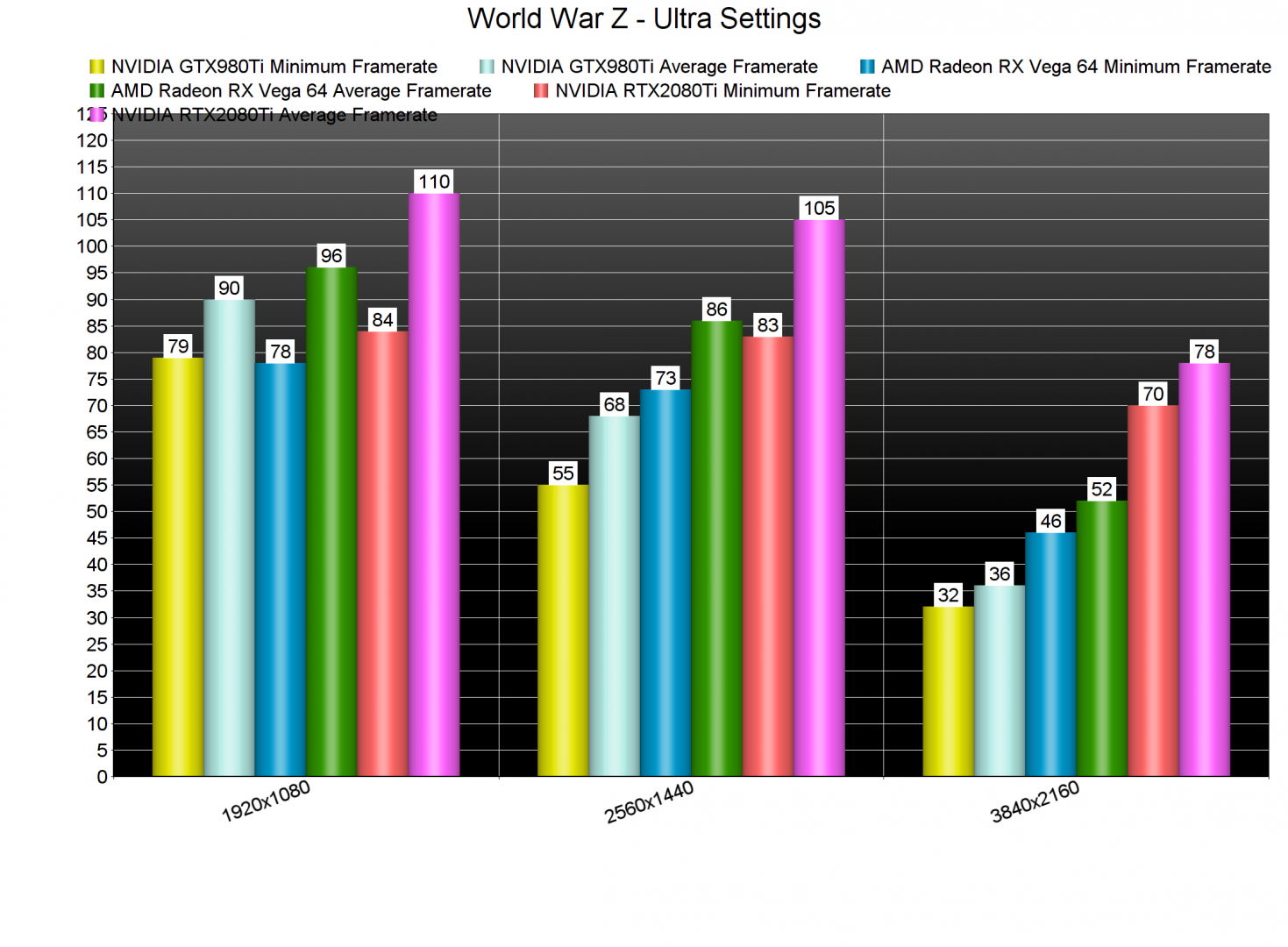

At 2560×1440, our AMD RX Vega 64, NVIDIA GTX980Ti and RTX2080Ti were also able to offer a smooth gaming experience. As for 4K, our GTX980Ti offered a “console” experience (I’m using quotes here because we’re talking about native 4K and not checkerboard 4K), our Vega 64 came close to a 50fps experience, and our RTX2080Ti had no trouble running the game at a constant 60fps.

What this ultimately means is that the game is very well optimized on PC, even when using the DX11 API. Moreover, the mouse controls felt fine, there are proper on-screen indicators, we did not encounter any crashes, and everything appeared to be running as intended.

Graphics-wise, World War Z looks great, but it’s not among the most visually impressive titles. Its big ace is the hordes of zombies, and we have to say that it’s pretty impressive witnessing so many zombies attacking without any framerate drops or hiccups. This is easily what the game will be remembered for. Other than that, though, there is nothing particularly amazing here. The environments, for example, are really static and there are very few objects to interact with. Honestly, this feels like a current-gen title; it looks great but, apart from the huge armies of zombies, there is nothing wow-ish or next-gen-ish.

In conclusion, World War Z is a really well-optimized PC title. Even though the Vulkan API currently has some issues, the game runs like a charm on a wide range of PC configurations using the DX11 API. World War Z does not require a high-end CPU or GPU, and it looks on par with what you’d expect from a current-gen shooter.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles, mainly thanks to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher-degree thesis on “The Evolution of PC graphics cards.”
