Microids has just released the next part of its Syberia series, Syberia: The World Before. The game is powered by the Unity Engine, so it’s time to benchmark it and see how it performs on the PC platform.

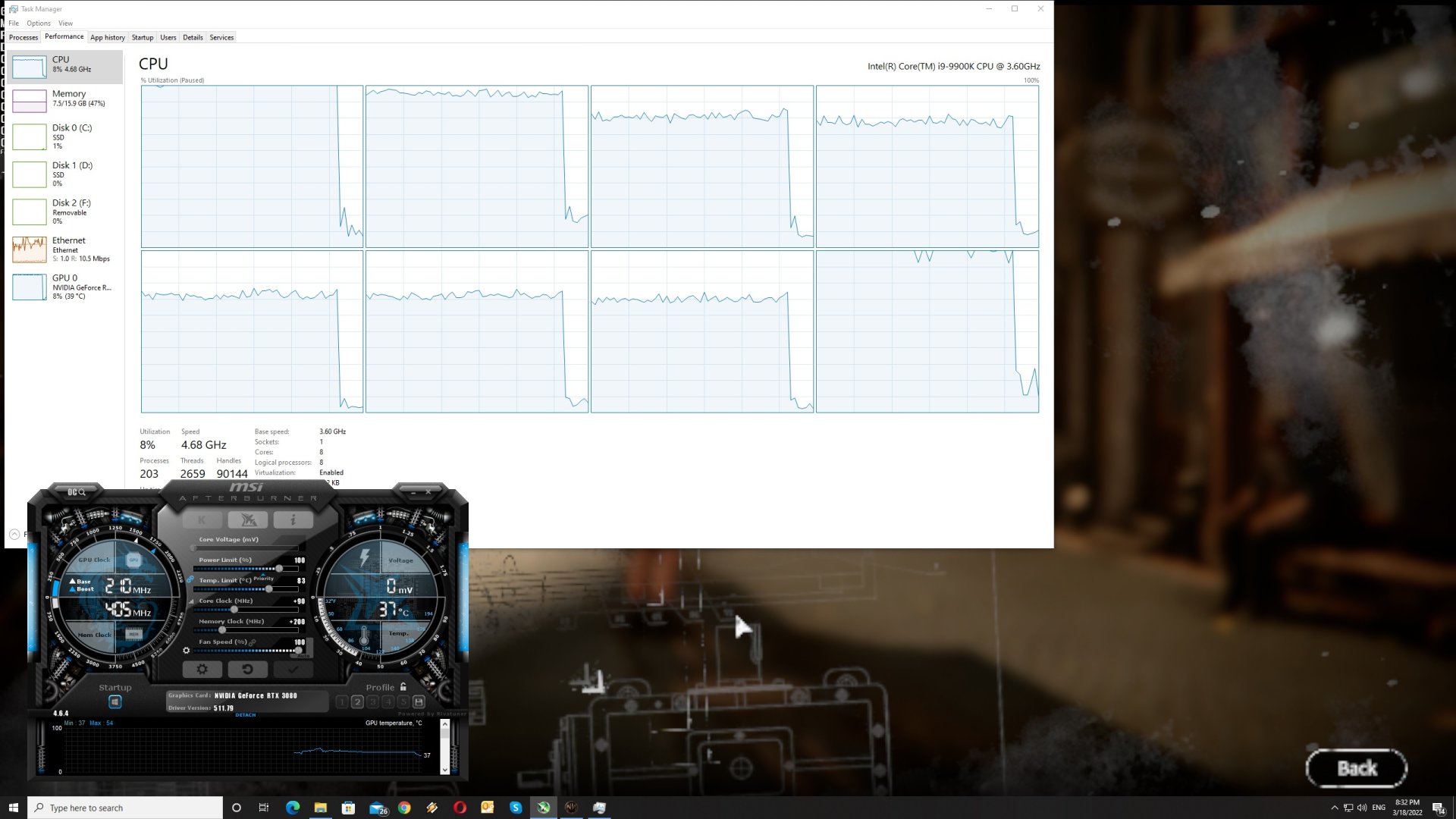

For this PC Performance Analysis, we used an Intel i9 9900K with 16GB of DDR4 at 3800MHz, AMD’s Radeon RX580, RX Vega 64 and RX 6900XT, and NVIDIA’s GTX980Ti, RTX 2080Ti and RTX 3080. We also used Windows 10 64-bit, the GeForce 511.79 drivers and the Radeon Software Adrenalin 2020 Edition 22.3.1 drivers.

Microids has included only a few graphics settings. PC gamers can adjust the quality of Textures, Shadows and Anti-Aliasing. There are also options for Ambient Occlusion, Chromatic Aberration and Dynamic Elements.

Syberia: The World Before does not feature a built-in benchmark tool, so we benchmarked the starting area.

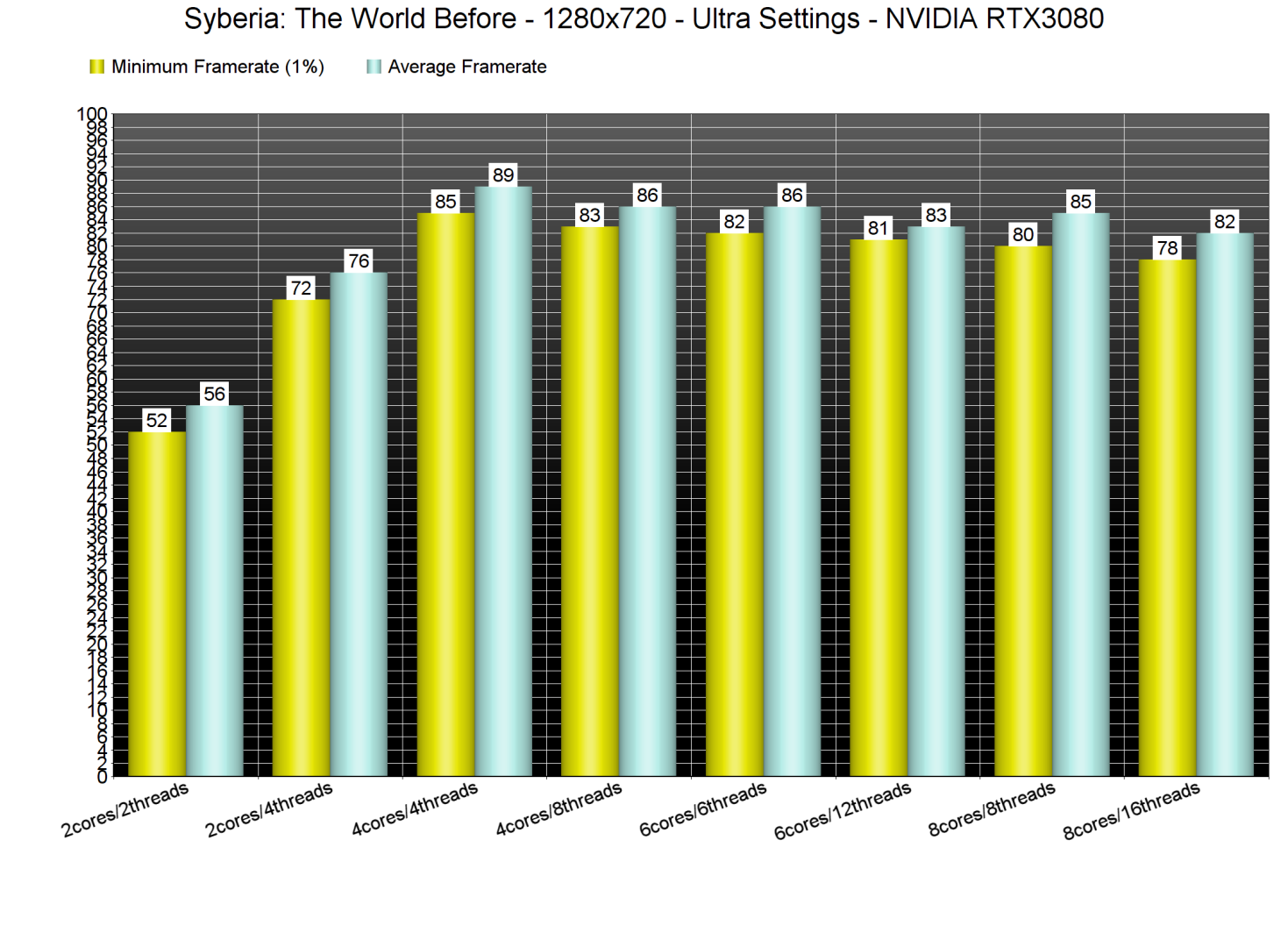

To find out how the game scales across multiple CPU threads, we simulated a dual-core, a quad-core and a hexa-core CPU. Our results show that even a dual-core system (with Hyper-Threading) can run the game at more than 60fps at 1280×720 on Ultra settings.

As you can see, however, the game behaves strangely on NVIDIA’s hardware. For instance, even at 720×480, the RTX 3080 shows 98% GPU usage. Not only that, but the performance difference between 480p and 720p is almost non-existent. Additionally, performance improves slightly when you disable some CPU cores/threads. We really don’t know what is going on here. However, these CPU issues appear only on NVIDIA’s hardware. As you will see below, the game performs fine on AMD’s hardware.

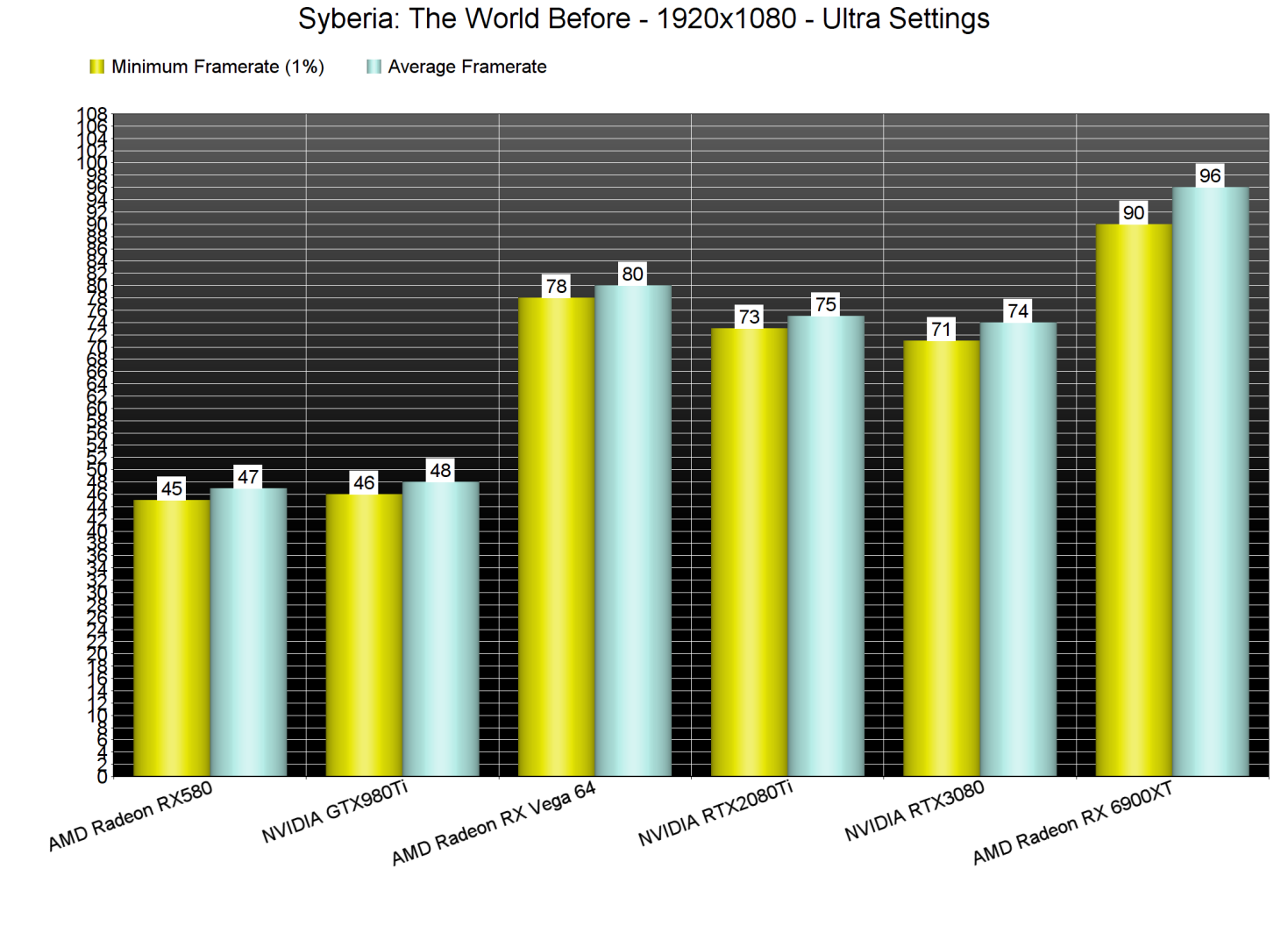

At 1080p/Ultra, the AMD Radeon RX580 runs the game as fast as the NVIDIA GeForce GTX980Ti. Not only that, but the AMD Radeon RX Vega 64 even outperforms both the NVIDIA GeForce RTX 2080Ti and RTX 3080. Something is clearly wrong with all NVIDIA GPUs, and we have already informed NVIDIA about it.

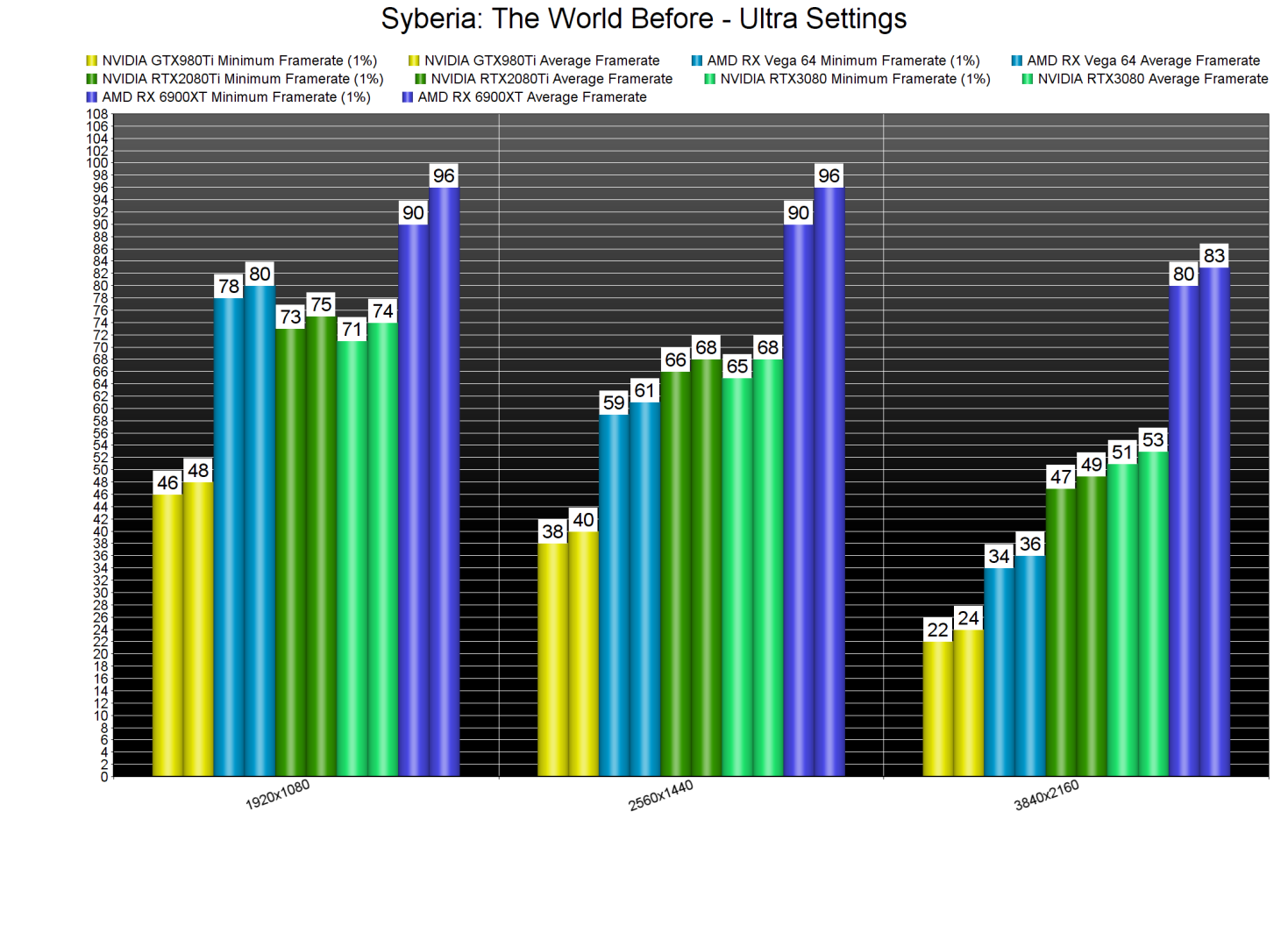

At 1440p/Ultra, the RTX 2080Ti and RTX 3080 run the game faster than the Vega 64. However, there is no performance difference between these two NVIDIA GPUs at either 1080p or 1440p. At 4K/Ultra, the only GPU that could run the game at a constant 60fps was the AMD Radeon RX Vega 64. Neither the RTX 2080Ti nor the RTX 3080 could run the game smoothly. Moreover, the Vega 64 was 50% faster than the GTX980Ti, which is not what we normally see in other PC games.

Graphics-wise, Syberia: The World Before looks fine, though it is nothing impressive. The character models look fine, and the environments are pleasing to the eye. However, overall interactivity is limited. You can interact with various objects (in true adventure-game style), but you can’t move objects like chairs or tables.

All in all, Syberia: The World Before has major performance issues on NVIDIA’s hardware. This does not appear to be the game’s fault, and we hope that NVIDIA will improve performance with new drivers. Unfortunately, there are also some traversal stutters that may annoy many gamers. Sadly, the game also doesn’t support any PC-only features (like DLSS, FSR or Ray Tracing). Thankfully, the game can run smoothly on a wide range of CPUs. And lastly, it runs fine on AMD’s graphics cards.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
