CI Games has just released its Unreal Engine 5-powered Souls-like game, Lords of the Fallen, on PC. Lords of the Fallen takes advantage of Lumen and Nanite, and its Ultra Settings are “Next-Gen” demanding. In fact, the game is so demanding that it can drop to around 40fps at Native 4K on the NVIDIA GeForce RTX 4090.

First things first though. For these early PC benchmarks, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, and NVIDIA’s RTX 4090. We also used Windows 10 64-bit and the GeForce 537.58 driver. Moreover, we disabled the second CCD on our 7950X3D.

Lords of the Fallen does not feature any built-in benchmark tool. Therefore, for our benchmarks, we used this scene. This was one of the most demanding areas early in the game, so consider this a stress test. Other areas will definitely run better, so be sure to keep that in mind.

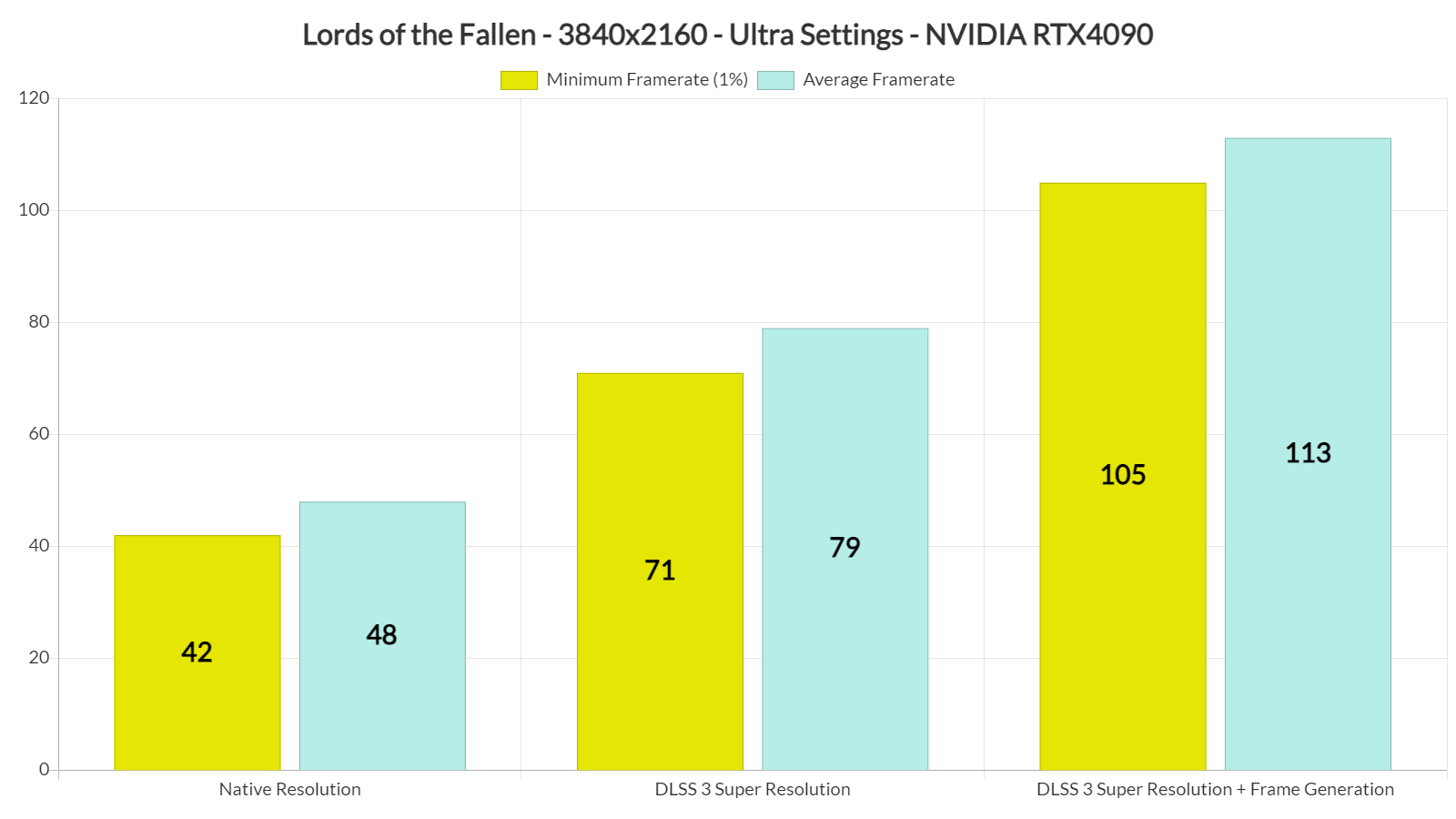

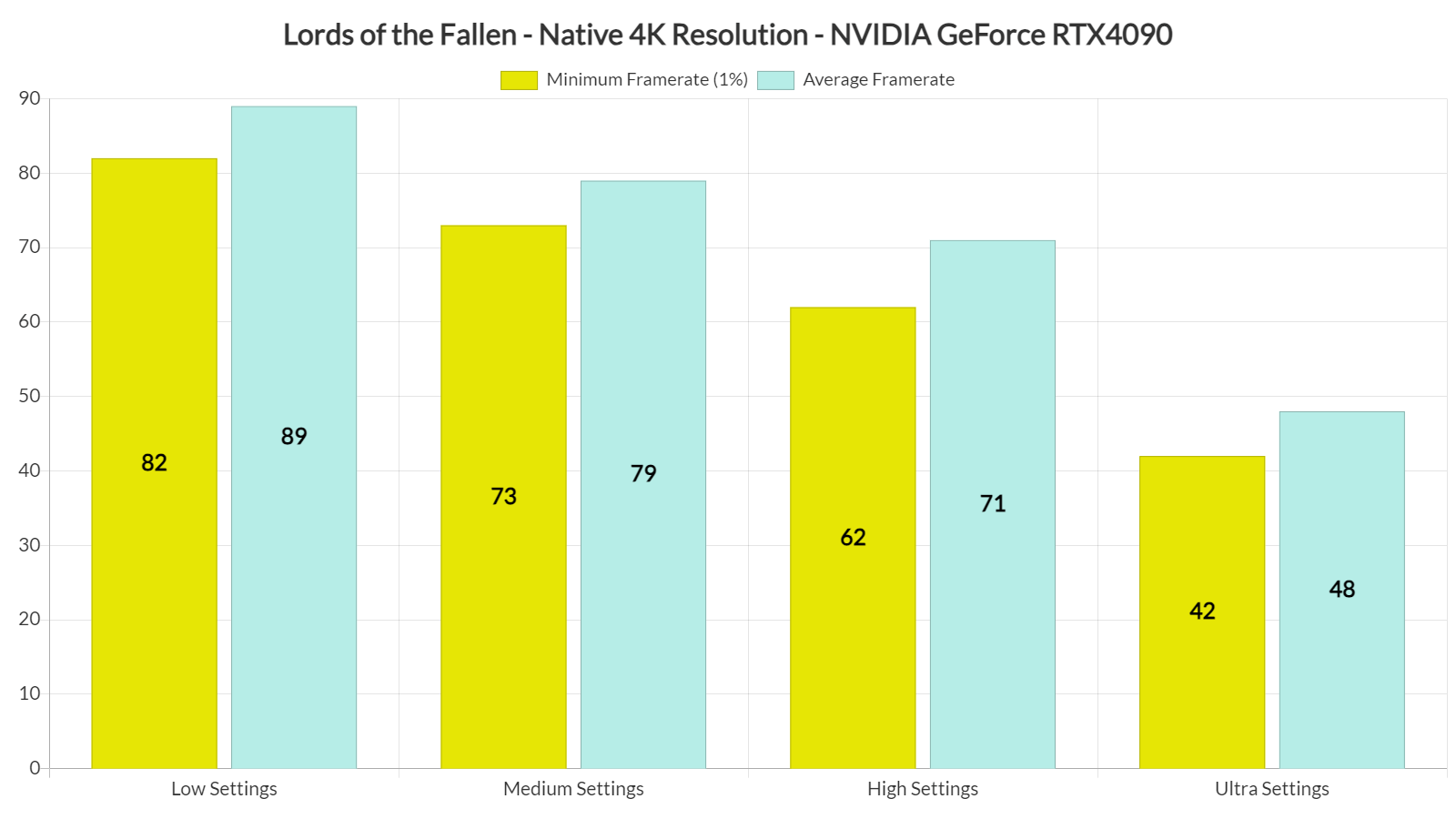

At Native 4K/Ultra Settings, the NVIDIA GeForce RTX 4090 cannot come close to a 60fps experience. In our benchmark/stress test, the RTX 4090 could push a minimum of 42fps and an average of 48fps. However, by enabling DLSS 3 Super Resolution Quality Mode, we were able to significantly boost our performance. With DLSS 3 Quality, we were able to get a constant 70fps. Then, with DLSS 3 Frame Generation, we were able to increase our performance to a minimum of 105fps.
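To put those figures in perspective, here is a minimal Python sketch that converts the reported framerates into frame times and relative gains. The helper names (`frame_time_ms`, `uplift_pct`) are hypothetical and purely illustrative. The sketch also assumes that the "constant 70fps" figure can be compared directly against the native 48fps average, which is a simplification on our part.

```python
# Framerates reported in this article (RTX 4090, 4K/Ultra, stress-test scene).
RESULTS = {
    "Native 4K (minimum)": 42,
    "Native 4K (average)": 48,
    "DLSS 3 Quality": 70,
    "DLSS 3 Quality + Frame Generation (minimum)": 105,
}


def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds."""
    return 1000.0 / fps


def uplift_pct(baseline_fps: float, new_fps: float) -> float:
    """Relative framerate gain over a baseline, in percent."""
    return (new_fps / baseline_fps - 1.0) * 100.0


if __name__ == "__main__":
    for label, fps in RESULTS.items():
        print(f"{label}: {fps} fps = {frame_time_ms(fps):.1f} ms per frame")

    # Assumption: comparing the native average against the "constant" DLSS figure.
    print(f"DLSS Quality vs native average: +{uplift_pct(48, 70):.0f}%")
    print(f"Frame Generation vs DLSS Quality: +{uplift_pct(70, 105):.0f}%")
```

For reference, a 60fps target corresponds to a budget of roughly 16.7ms per frame.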

Now the good news here is that, contrary to other Unreal Engine 5 games, Lords of the Fallen is scalable. By simply dropping the settings to “High”, we were able to get over 60fps at Native 4K on the RTX 4090. This clearly proves that the game’s Ultra Settings are incredibly demanding, and you should avoid them if you don’t want to use an upscaler. And I can assure you that most of you won’t even notice the visual differences between Ultra and High.

Hell, this is Lords of the Fallen at Native 4K/Low Settings with Ultra Textures. These could have easily passed as “High” settings in other games.

In fact, Assassin’s Creed Mirage looks on par with it (or even worse). Here’s a screenshot from Mirage at Native 4K/Max Settings.

So, Lords of the Fallen looks absolutely amazing on PC and is scalable. As such, it’s really funny witnessing gamers and influencers putting the game on Ultra and complaining about its performance. This would have been a major issue IF the game could not scale down. That’s not the case here.

Now I’m not saying that there isn’t room for improvement. However, this isn’t an “unoptimized mess” (at least on PC) as some ironically describe it. Let’s also not forget that the game uses Lumen and Nanite. These techniques are really demanding, which explains why most UE5 games perform so horribly on consoles.

Our PC Performance Analysis for Lords of the Fallen will most likely go live tomorrow, so stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still does, the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
