Last month, THQ Nordic released Destroy All Humans! 2 – Reprobed. The game is powered by Unreal Engine 4, so it’s time to benchmark it and see how it performs on the PC platform.

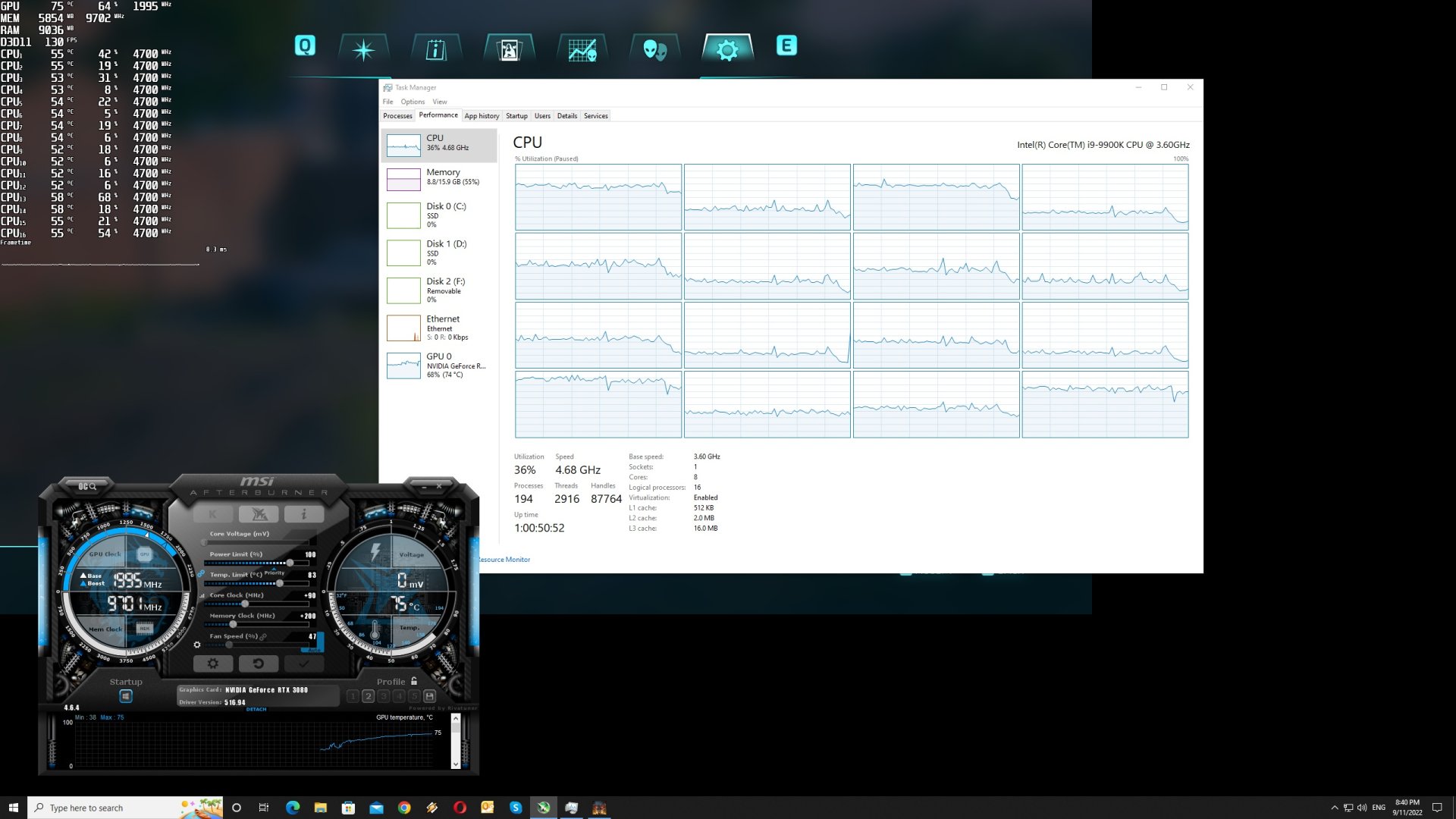

For this PC Performance Analysis, we used an Intel i9 9900K with 16GB of DDR4 at 3800MHz, AMD’s Radeon RX580, RX Vega 64 and RX 6900XT, and NVIDIA’s GTX980Ti, RTX 2080Ti and RTX 3080. We also used Windows 10 64-bit, the GeForce 516.94 and the Radeon Software Adrenalin 2020 Edition 22.8.2 drivers.

Black Forest Games has included a few graphics settings to tweak. PC gamers can adjust the quality of View Distance, Anti-Aliasing, Textures, Shadows and more. The game also supports both NVIDIA’s DLSS 2.2 and AMD’s FSR 2.0. You can find the benchmarks for these two upscaling techniques here.

Destroy All Humans! 2 – Reprobed does not feature a built-in benchmark tool. Therefore, for both our CPU and GPU benchmarks, we used the game’s first mission. That particular mission puts a lot of stress on the CPU, so you should keep that in mind when reading the results.

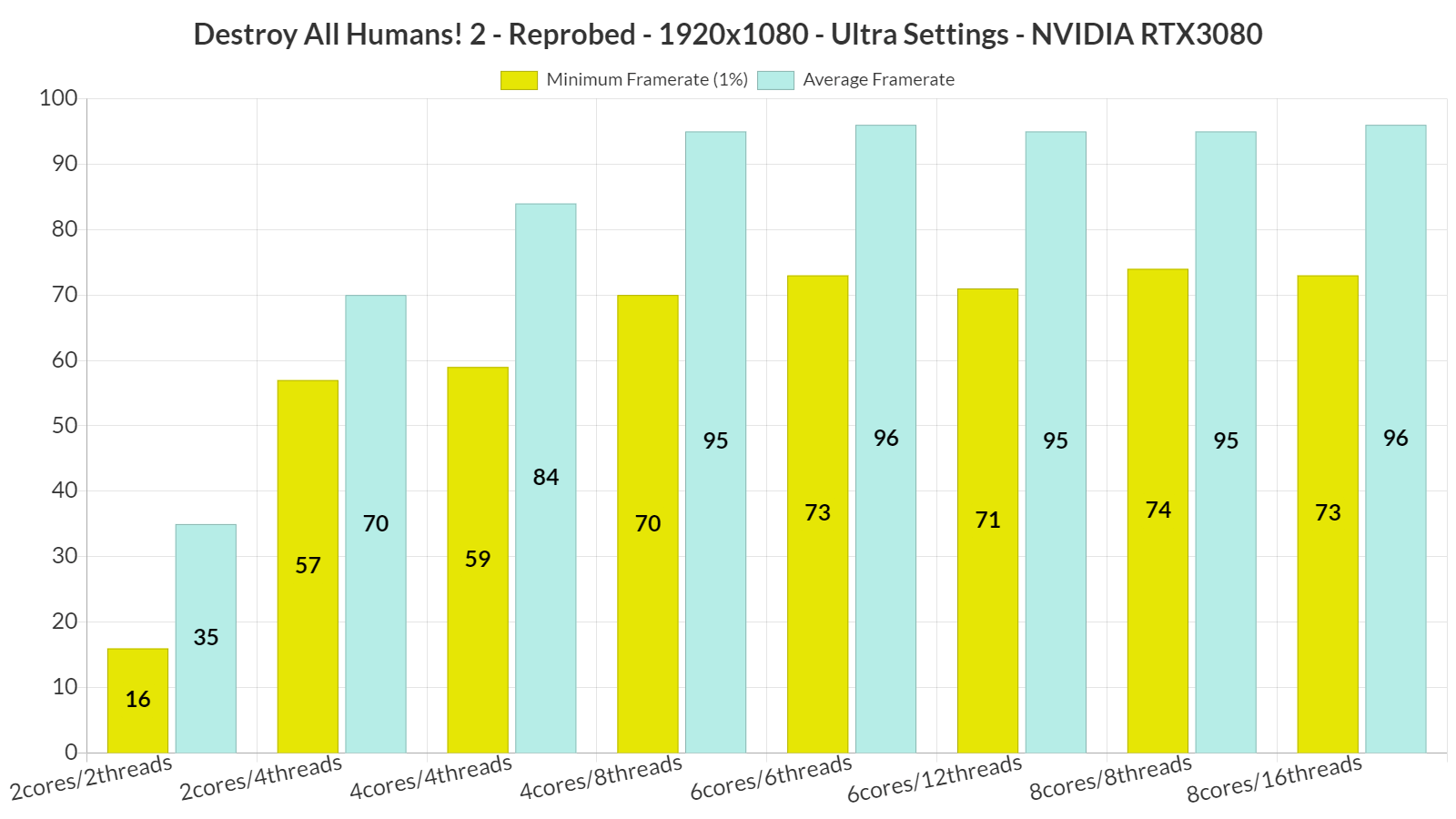

To find out how the game scales across multiple CPU threads, we simulated a dual-core, a quad-core and a hexa-core CPU. Our simulated dual-core system was unable to offer a smooth gaming experience at 1080p/Ultra. Even with Hyper-Threading enabled, we had frame pacing issues that made the game feel jerky (even when it was running at 60fps). As such, we highly recommend using a modern-day quad-core CPU for this game.
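To illustrate why a game can feel jerky while still reporting 60fps, here is a minimal sketch that compares average framerate with the "1% low" framerate. The frame times below are hypothetical, not captures from our test system, and the `summarize_frame_times` helper is our own illustration rather than part of any capture tool.

```python
from statistics import mean

def summarize_frame_times(frame_times_ms):
    """Return (average fps, 1% low fps) for a list of frame times in ms."""
    avg_fps = 1000.0 / mean(frame_times_ms)
    # 1% low: framerate implied by the slowest 1% of frames (at least one frame).
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    low_fps = 1000.0 / mean(slowest[:count])
    return avg_fps, low_fps

# Hypothetical data: both runs average roughly 60fps, but the second one
# alternates between short and long frames (poor frame pacing).
steady = [16.7] * 200
uneven = [8.3, 25.1] * 100

for name, run in (("steady", steady), ("uneven", uneven)):
    avg_fps, low_fps = summarize_frame_times(run)
    print(f"{name}: avg {avg_fps:.1f}fps, 1% low {low_fps:.1f}fps")
```

Both runs report nearly the same average, yet the uneven run has a much lower 1% low figure, which is what the player perceives as judder.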

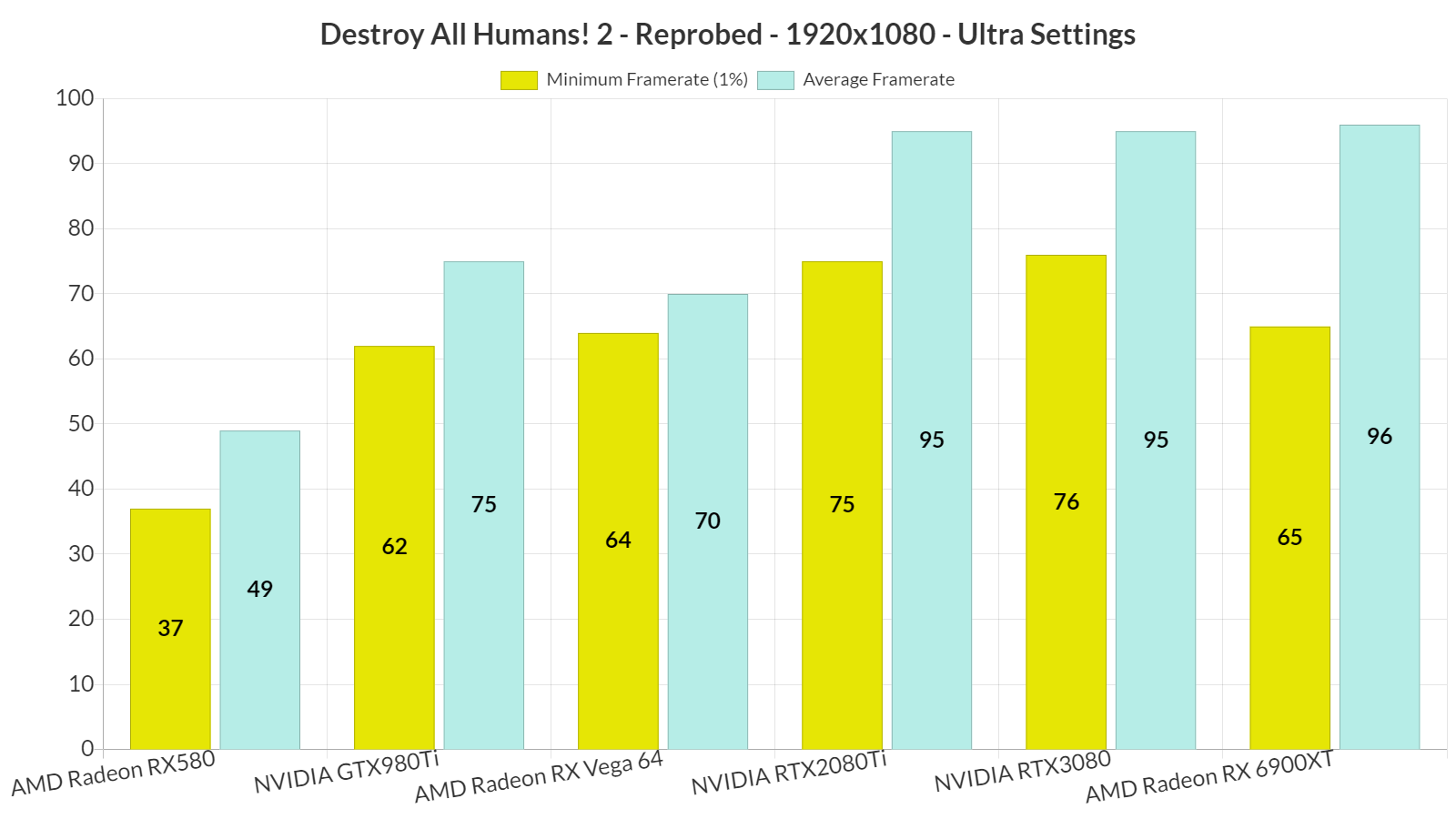

At 1080p/Ultra, most of our GPUs were able to offer a constant 60fps experience. Since this is a DX11 game, it runs better on NVIDIA’s hardware, especially during CPU-heavy scenes, where NVIDIA’s DX11 driver tends to have lower CPU overhead. This explains why the GTX980Ti can match the performance of the RX Vega 64, and why the RTX 3080 can compete with the RX 6900XT.

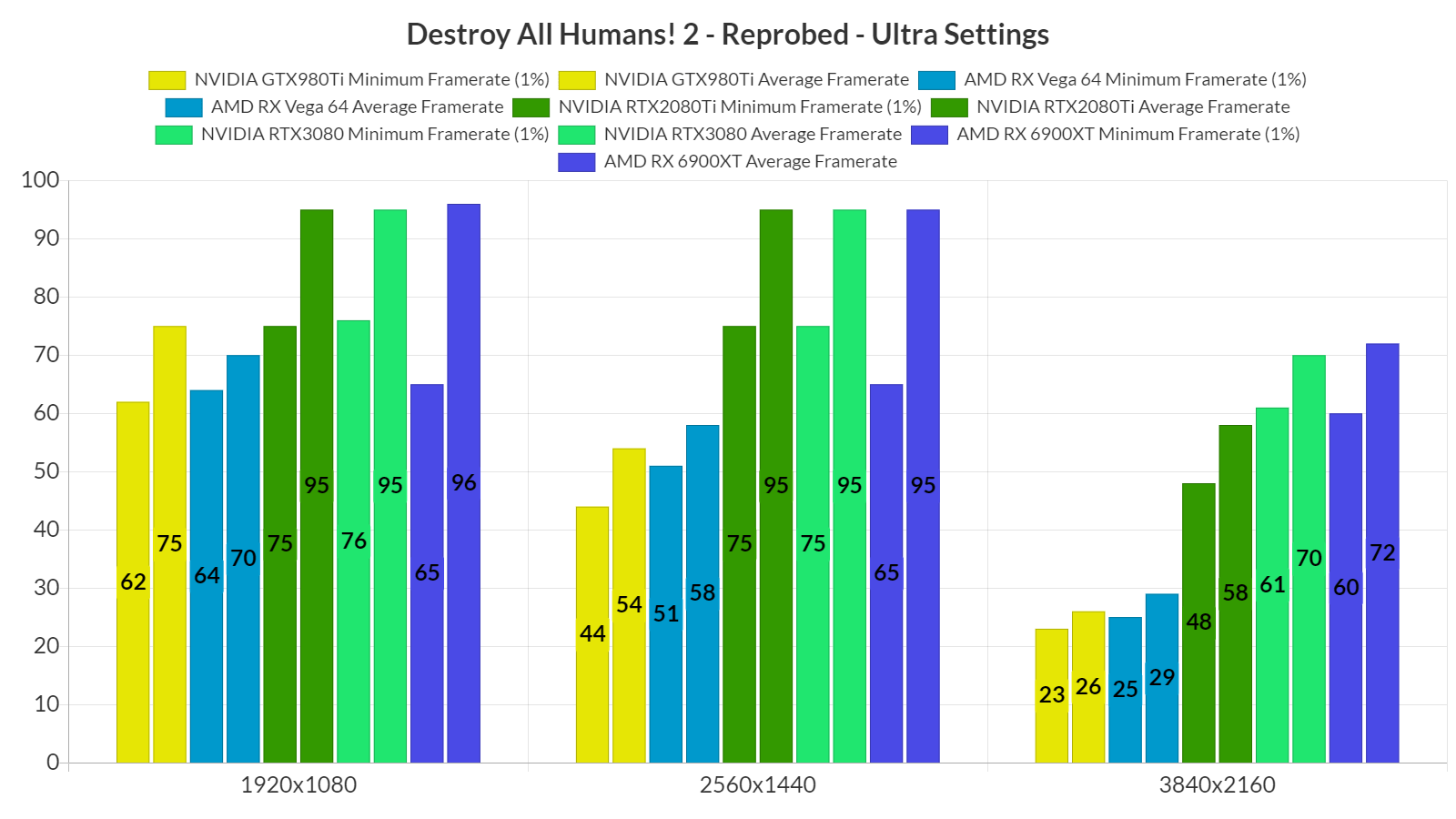

At 1440p/Ultra, the only GPUs that were able to provide a smooth gaming experience were the RTX 2080Ti, RTX 3080 and RX 6900XT. As for native 4K/Ultra, the only GPUs that could offer a constant 60fps experience were the RTX 3080 and the RX 6900XT. However, since both DLSS 2.2 and FSR 2.0 look great in this game, we highly recommend using them when targeting a 4K resolution.
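To show why upscaling helps so much at 4K, the sketch below computes the internal render resolution for each FSR 2 quality preset, using the per-axis scale factors AMD publishes for FSR 2. Which presets this particular game exposes is an assumption on our part, and DLSS 2 uses similar (but not identical) ratios.

```python
# Per-axis scale factors for the FSR 2 quality presets as published by AMD.
# Whether this game exposes all four presets is an assumption.
FSR2_SCALE = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}

def internal_resolution(width, height, scale):
    """Return the (width, height) the game renders at before upscaling."""
    return round(width / scale), round(height / scale)

for mode, scale in FSR2_SCALE.items():
    w, h = internal_resolution(3840, 2160, scale)
    share = (w * h) / (3840 * 2160)
    print(f"{mode}: {w}x{h} ({share:.0%} of native 4K pixels)")
```

In Quality mode, a 4K output is rendered internally at 2560x1440, so the GPU load is much closer to our 1440p results than to native 4K.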

Graphics-wise, Destroy All Humans! 2 – Reprobed looks fine. While it does not push the graphical boundaries of PC games, it’s at least pleasing to the eye. We did notice some pop-in issues, as well as some minor texture streaming issues. In shadowy places, the game can also look a bit flat. Overall, though, and mostly thanks to its art style, the game looks great.

Unfortunately, though, Destroy All Humans! 2 – Reprobed suffers from major stuttering issues. In case you’re wondering, no, these aren’t shader compilation stutters. Instead, the game has frequent stutters that occur randomly. As noted above, the game uses DX11, so don’t blame DX12 for these stutters. You can force the game to run in DX12 via commands. However, we suggest sticking with DX11.
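For readers who want to quantify stutters like these in their own frame-time logs, here is a rough sketch that flags frames taking far longer than the typical frame. The capture data is hypothetical, the `find_stutters` helper is our own illustration, and the 2.5x threshold is an arbitrary assumption rather than an established standard.

```python
from statistics import median

def find_stutters(frame_times_ms, factor=2.5):
    """Return indices of frames slower than `factor` times the median frame time."""
    threshold = factor * median(frame_times_ms)
    return [i for i, t in enumerate(frame_times_ms) if t > threshold]

# Hypothetical capture: a steady ~60fps run with two isolated hitches.
capture = [16.7] * 50 + [95.0] + [16.7] * 80 + [120.0] + [16.7] * 30

hitches = find_stutters(capture)
print(f"{len(hitches)} stutters at frame indices {hitches}")
```

Using the median rather than the mean keeps the baseline from being skewed by the very spikes the function is trying to find.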

All in all, Destroy All Humans! 2 – Reprobed feels like a mixed bag. While the game can run smoothly on a variety of GPUs at 1080p, its GPU requirements for gaming at 4K feel a bit too high (at least for what the game displays on screen). The good news here is that PC gamers can at least use AMD FSR 2.0 and NVIDIA DLSS 2.2 to boost performance. The game also isn’t particularly impressive graphically. Not only that, but it currently suffers from some truly awful stuttering issues. So while this PC port isn’t a disaster, there is still room for improvement.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
