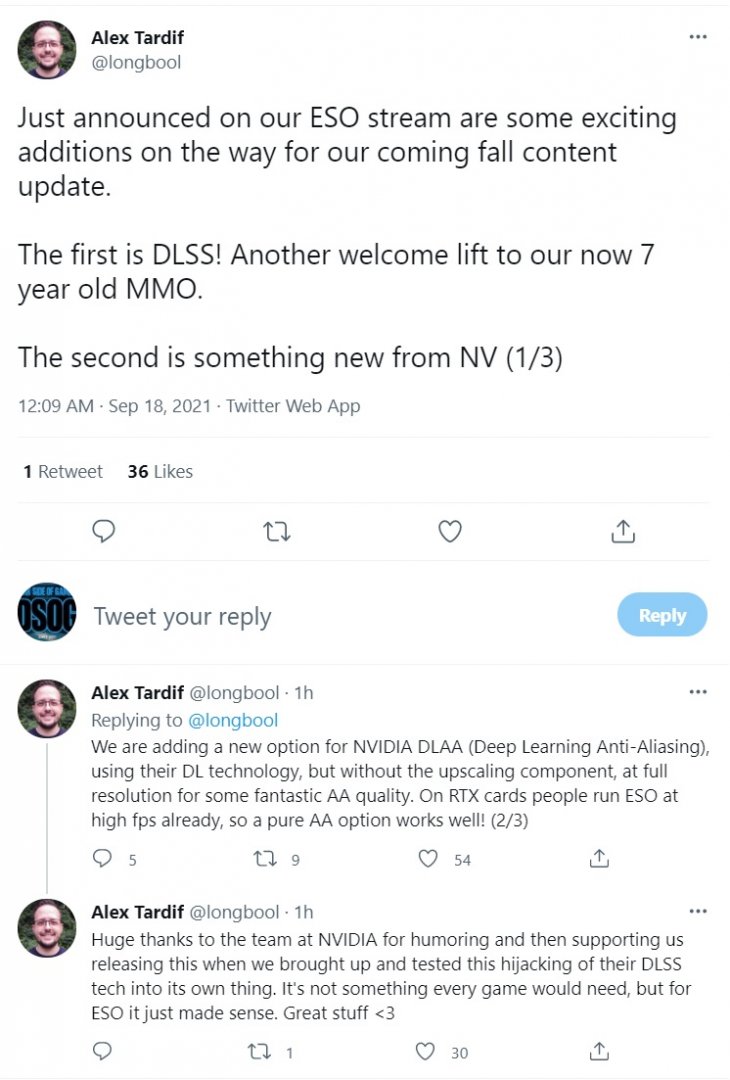

Alex Tardif, Lead Graphics Engineer at ZeniMax Online Studios, announced that The Elder Scrolls Online will support NVIDIA’s DLAA tech. The team will also add support for DLSS, the more “traditional” deep learning technique.

DLAA stands for Deep Learning Anti-Aliasing. This basically means that the game will use AI techniques at full resolution in order to provide better anti-aliasing results.

DLAA also sounds like what NVIDIA advertised as DLSS 2X back when it announced its Turing GPUs. As the green team stated back then:

“In addition to the DLSS capability described above, which is the standard DLSS mode, we provide a second mode, called DLSS 2X. In this case, DLSS input is rendered at the final target resolution and then combined by a larger DLSS network to produce an output image that approaches the level of the 64x super sample rendering – a result that would be impossible to achieve in real time by any traditional means.”

Now I’m pretty sure DLAA won’t approach results similar to 64X supersampling. Even so, it appears to work in a similar way to DLSS 2X.

As we’ve seen, DLSS can match or even surpass the image quality of native resolution. Not only that, but it introduces new details thanks to its deep learning techniques. So, theoretically, DLAA should provide much better and crisper anti-aliasing than TAA and most other common AA methods.

There is currently no ETA on when this DLSS/DLAA patch will go live. Naturally, though, we’ll be sure to keep you posted!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
