Yesterday, AMD officially announced the Radeon VII, its first 7nm graphics card, which is based on Vega 20. The Radeon VII will compete with the NVIDIA GeForce RTX2080 and, from the looks of it, NVIDIA’s CEO was not really impressed by what AMD has achieved.

When asked about the new AMD GPU, Jensen Huang told PCWorld that he found this new GPU underwhelming.

“It’s underwhelming. The performance is lousy and there’s nothing new. [There’s] no ray tracing, no AI. It’s 7nm with HBM memory that barely keeps up with a 2080. And if we turn on DLSS we’ll crush it. And if we turn on ray tracing we’ll crush it.”

The AMD Radeon VII will come out in February and will be priced at $699. However, most gamers can already find some NVIDIA GeForce RTX2080s at similar prices.

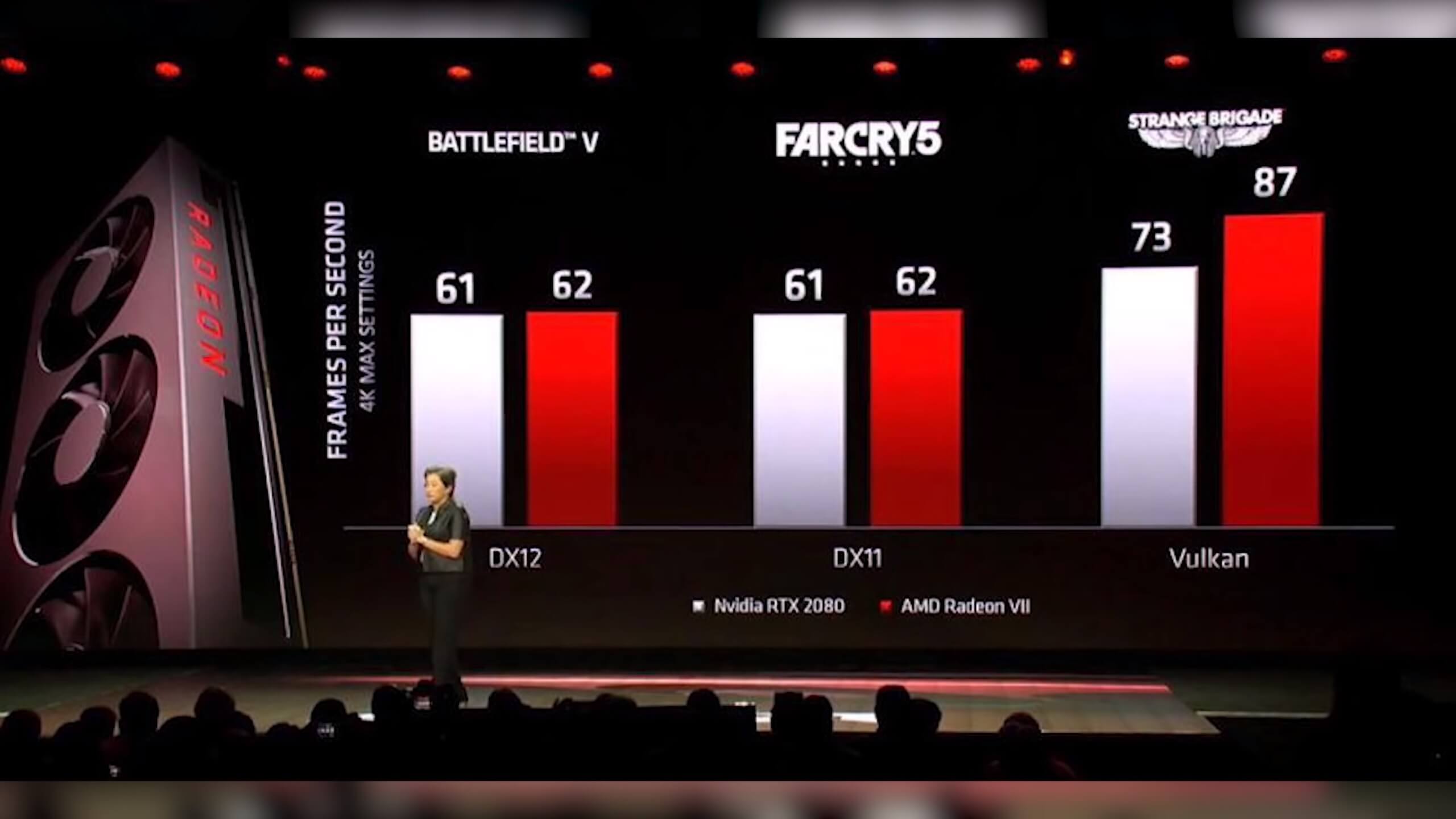

AMD also showed some benchmarks comparing the Radeon VII with the GeForce RTX2080. In Battlefield 5 and Far Cry 5, AMD’s latest GPU was as fast as NVIDIA’s card, whereas in Strange Brigade the Radeon VII was noticeably faster than the GeForce RTX2080.

Now, since a lot of people criticized NVIDIA for pricing the RTX2080 at $699, I’m pretty sure that the same people will criticize AMD for releasing a new overpriced product that does not bring anything new to the table. Yes, the Radeon VII can match the performance of the GeForce RTX2080, and while competition is great for us consumers, we do have to wonder why gamers should bother with it when they can already get an RTX2080: a GPU that performs identically, has the same price, and comes with more/newer features.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
