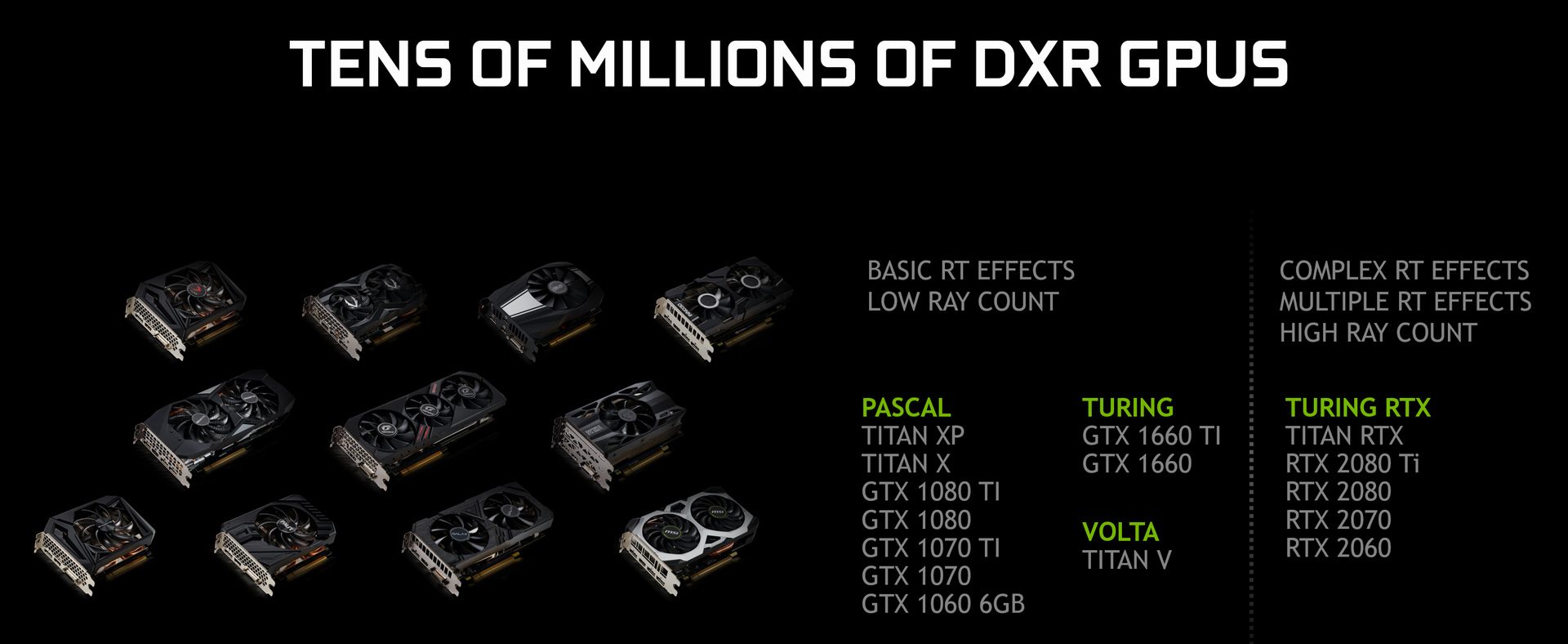

NVIDIA has announced that it will be bringing real-time ray tracing to its Pascal GPUs. According to the green team, a new driver will enable all GTX10XX series graphics cards, from the GTX1060 all the way up to the GTX1080Ti, to run real-time ray tracing via the DXR API.

As NVIDIA noted, GeForce GTX gamers will have an opportunity to use ray tracing at lower RT quality settings and resolutions, while GeForce RTX users will experience up to 2-3x faster performance. Naturally, this performance jump is thanks to the dedicated RT Cores that the Turing GPUs feature.

Realistically speaking, we don’t expect a lot of GTX gamers to be able to enjoy real-time ray tracing effects with an acceptable balance between framerate and visual quality. Still, this is great news as it will allow more developers to start implementing real-time ray tracing effects in their games.

NVIDIA recommends that Pascal owners use basic real-time ray tracing effects with a low ray count. The driver that will enable RT on the Pascal GPUs will come out in April, and the supported GeForce GTX graphics cards will work without any new game updates.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
