DLSS, or Deep Learning Super Sampling, renders a game at a lower resolution and then uses AI techniques to reconstruct it at a higher resolution (while also offering better performance). However, everyone was kind of disappointed when NVIDIA first launched it. You see, DLSS 1 was blurry as hell and looked way worse than native resolution. NVIDIA was quick to react and released DLSS 2, which impressed all of us.

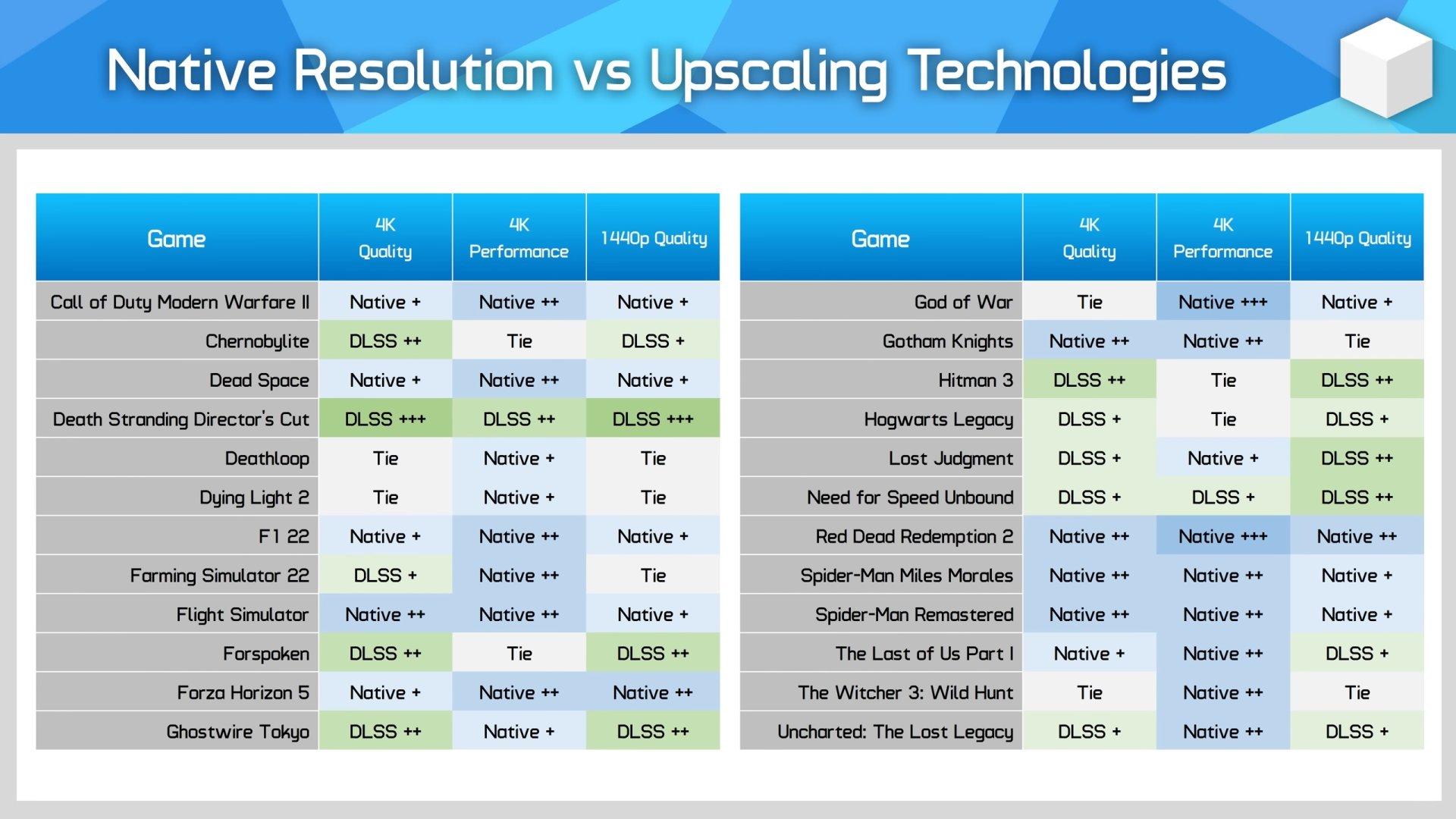

So, Hardware Unboxed has tested NVIDIA’s DLSS 2 tech in 24 games. The purpose of its video was to see whether DLSS 2 could look as good as (or better than) native 4K or native 1440p. And the results are truly shocking.

Of the 24 games that HU tested, DLSS 2 matched the quality of native 4K in four. That’s, of course, in its Quality Mode which, as you may have noticed in our articles, is the only mode we suggest using. Moreover, DLSS 2 looked slightly better than native 4K in five games. Not only that, but it looked noticeably better than native 4K in six games. Native 4K, on the other hand, looked either slightly or noticeably better than DLSS 2 in ten games.
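To put the "lower resolution" that Quality Mode renders at into numbers, here is a minimal sketch that computes the internal render resolution for a given output resolution. It assumes the per-axis scale factors commonly reported for DLSS 2 (about 66.7% for Quality, 58% for Balanced, 50% for Performance and 33.3% for Ultra Performance); individual games may deviate slightly. The helper names `internal_resolution` and `pixel_fraction` are hypothetical and only used for illustration.

```python
from fractions import Fraction

# Per-axis render-scale factors commonly reported for DLSS 2 modes.
# Assumption for illustration: individual games may use slightly different values.
DLSS_SCALE = {
    "quality": Fraction(2, 3),
    "balanced": Fraction(58, 100),
    "performance": Fraction(1, 2),
    "ultra_performance": Fraction(1, 3),
}


def internal_resolution(width: int, height: int, mode: str = "quality") -> tuple[int, int]:
    """Approximate resolution the game renders at before DLSS upscales it (hypothetical helper)."""
    scale = DLSS_SCALE[mode]
    # Scale each axis independently and round to the nearest whole pixel.
    return round(width * scale), round(height * scale)


def pixel_fraction(mode: str = "quality") -> float:
    """Share of the output pixels that are actually rendered (scale applies to both axes)."""
    return float(DLSS_SCALE[mode] ** 2)


if __name__ == "__main__":
    for name, (w, h) in {"4K": (3840, 2160), "1440p": (2560, 1440)}.items():
        rw, rh = internal_resolution(w, h)
        print(f"{name} Quality mode renders at about {rw}x{rh} "
              f"({pixel_fraction():.0%} of the output pixels)")
```

Under these assumptions, "4K with DLSS 2 Quality" means the game actually renders at roughly 2560x1440, or about 44% of the pixels of native 4K, which is where the performance gain comes from.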

Let’s start with the obvious. NVIDIA never claimed that DLSS 2 could provide better image quality than native 4K or native 1440p. Thus, the fact that DLSS 2 can look better than native 4K in five games is incredible. For the most part, DLSS 2 can match the quality of native 4K and native 1440p, which is why we always suggest enabling it. Furthermore, players can swap in newer DLSS DLLs to resolve some of the issues present in older games (the ones in which DLSS 2 looked noticeably worse than native resolution).

All in all, DLSS 2 is an impressive reconstruction tech. We’ve been saying this for a while, and the following video backs up our claims.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
