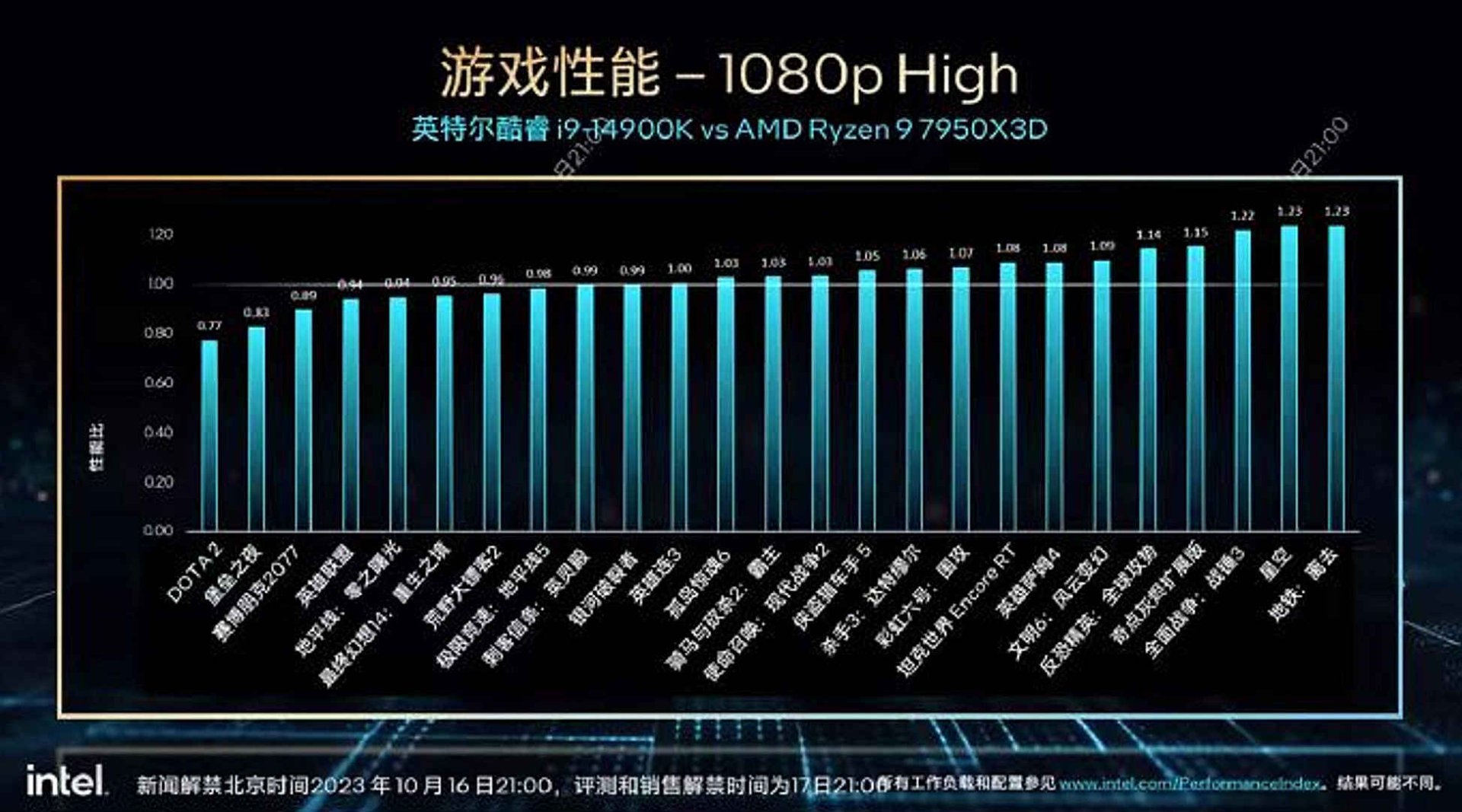

Intel will soon announce its upcoming 14th-generation CPUs, and according to a leaked slide, the Intel Core i9-14900K will be 2% faster in games than the AMD Ryzen 9 7950X3D.

The Intel Core i9-14900K will be the flagship CPU of the lineup, featuring 24 cores (8 P-cores and 16 E-cores) and support for up to 32 threads.

What’s surprising here is that Intel has included some games in which the Core i9-14900K underperforms. For instance, in DOTA 2, the Ryzen 9 7950X3D is 23% faster than the i9-14900K. Similarly, in Fortnite and Cyberpunk 2077, the AMD CPU is faster by 18% and 11%, respectively.

Here is the full list of games, from left to right on the slide:

- DOTA 2

- Fortnite

- Cyberpunk 2077

- League of Legends

- Horizon Zero Dawn

- Final Fantasy 14

- Red Dead Redemption 2

- Forza Horizon 5

- Assassin’s Creed Valhalla

- Galaxy Breaker

- Company of Heroes 3

- Far Cry 6

- Mount & Blade II: Bannerlord

- COD: MW2

- GTA5

- Hitman 3

- Rainbow Six Siege

- World of Tanks Encore RT

- Serious Sam 4

- Civilization 6

- Counter Strike Global Offensive

- Ashes of the Singularity

- Total War Warhammer 3

- Starfield

- Metro Exodus

It’s also crucial to note that the second CCD of the AMD Ryzen 9 7950X3D can hurt its performance in some games, since only one of the chip’s two CCDs carries the 3D V-Cache. This is one of the reasons we’ve disabled it in our PC performance analyses, and I’m certain that Intel did not disable it in its own tests.

In other words, with the second CCD disabled, the gap between the Intel Core i9-14900K and the AMD Ryzen 9 7950X3D could shrink even further. And since the difference is already just 2%, the Ryzen 9 7950X3D might actually come out ahead without its second CCD.

Still, if these leaked benchmarks are to be believed, Intel’s new CPUs will not be that impressive for gaming.

Stay tuned for more!

Thanks, HXL

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles ever. Still, the PC platform eventually won him over, mainly thanks to 3dfx and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC Graphics Cards.”
