It appears that the Chinese Bilibili channel @TecLab has leaked alleged benchmark results for the GeForce RTX 3080 GPU. The leak includes both synthetic and gaming benchmark scores, but we will focus only on the gaming part. The original link has been taken down, but you can watch the video over here.

But before I continue, do take these results with a grain of salt. Some of the scores were compiled by @_rogame and @davideneco25320. The leaker claims to own one of the Ampere GPU samples and a working driver, which is still under NDA; he says he used press driver version 456.16 for the GeForce RTX 3080 reviews.

To quote TecLab:

“RTX 3080 has 30% (performance) increase compared to 2080Ti and 50% increase compared to 2080S. Power consumption of the whole machine is 500W.”

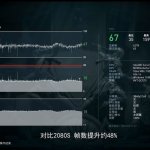

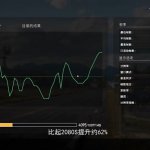

Coming to the gaming benchmarks, the following games were tested: Far Cry 5, Borderlands 3, Horizon Zero Dawn, Assassin’s Creed Odyssey, Forza Horizon 4, Shadow of the Tomb Raider, Control with DLSS on and off, and lastly Death Stranding with DLSS on and off. These are 4K in-game benchmarks. From the looks of it, the RTX 3080 appears to be 48% to 62% faster than the RTX 2080 SUPER.

RTX 3080 4K Gaming vs 2080 SUPER:

- Far Cry 5 +62%

- Borderlands 3 +56%

- AC Odyssey +48%

- Forza Horizon 4 +48%

Source: _rogame (@_rogame), September 9, 2020

RTX 3080 vs 2080 Ti:

- BL3: 1.34x

- Doom Eternal: 1.34x

- RDR2: 1.30x

RTX 3070 vs 2080 Ti:

- BL3: 0.97x

- Doom Eternal: 1.00x

- RDR2: 1.01x

https://twitter.com/davideneco25320/status/1303693882975227904
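To see how the per-game ratios above line up with TecLab's headline claims of roughly 50% over the 2080S and 30% over the 2080 Ti, here is a small sketch that averages them. It uses a geometric mean, which is a common choice for averaging performance ratios but is my assumption here, not something the leak specifies. The dictionary and function names are purely illustrative.

```python
from statistics import geometric_mean

# RTX 3080 performance relative to each older card, per game, as quoted in the leak.
VS_2080_SUPER = {
    "Far Cry 5": 1.62,
    "Borderlands 3": 1.56,
    "AC Odyssey": 1.48,
    "Forza Horizon 4": 1.48,
}
VS_2080_TI = {
    "BL3": 1.34,
    "Doom Eternal": 1.34,
    "RDR2": 1.30,
}


def average_uplift_percent(ratios):
    """Geometric mean of the per-game ratios, expressed as a percentage gain."""
    return (geometric_mean(list(ratios.values())) - 1.0) * 100.0


if __name__ == "__main__":
    print(f"vs 2080 SUPER: +{average_uplift_percent(VS_2080_SUPER):.1f}%")
    print(f"vs 2080 Ti:    +{average_uplift_percent(VS_2080_TI):.1f}%")
```

With only three or four games per comparison, these averages are rough indicators at best, and they inherit all the uncertainty of the leaked numbers.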

Death Stranding also reaches 100 FPS at 4K with DLSS off, and 160-170 FPS with DLSS on.

RTX 3080 at 4K:

- Far Cry 5: 100 FPS

- Borderlands 3: 61 FPS

- Horizon Zero Dawn: 76 FPS

- Assassin’s Creed Odyssey: 67 FPS

- Forza Horizon 4: 150 FPS

- Shadow of the Tomb Raider: 100 FPS with RTX and DLSS, 84 FPS with RTX and no DLSS

- Control: 100 FPS with DLSS on, 50-60 FPS with DLSS off

https://twitter.com/davideneco25320/status/1303687388988833792
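For readers who want to play with the numbers, the sketch below does two bits of arithmetic on the figures quoted above. First, it backs out the frame rate a 2080 SUPER would need for the quoted uplift to hold. Second, it computes the speedup from enabling DLSS. This assumes that the FPS figures and the percentage uplifts describe the same test runs, which the leak does not confirm, so treat the "implied" values as estimates and not measured results. All names in the code are hypothetical.

```python
# RTX 3080 4K frame rates and uplift over the 2080 SUPER, as quoted in the leak.
RTX_3080_FPS = {
    "Far Cry 5": 100,
    "Borderlands 3": 61,
    "AC Odyssey": 67,
    "Forza Horizon 4": 150,
}
UPLIFT_VS_2080_SUPER = {
    "Far Cry 5": 1.62,
    "Borderlands 3": 1.56,
    "AC Odyssey": 1.48,
    "Forza Horizon 4": 1.48,
}


def implied_baseline_fps(new_fps, ratio):
    """Frame rate the older card would need for new_fps to equal ratio times it."""
    return new_fps / ratio


def dlss_speedup(fps_on, fps_off):
    """How many times faster the game runs with DLSS on than with it off."""
    return fps_on / fps_off


if __name__ == "__main__":
    # Estimate only: assumes both tweets refer to the same benchmark runs.
    for game, fps in RTX_3080_FPS.items():
        baseline = implied_baseline_fps(fps, UPLIFT_VS_2080_SUPER[game])
        print(f"{game}: implied 2080 SUPER ~{baseline:.1f} FPS")

    # DLSS on vs off, using the quoted figures (ranges are evaluated at both ends).
    print(f"Shadow of the Tomb Raider: {dlss_speedup(100, 84):.2f}x")
    print(f"Control: {dlss_speedup(100, 60):.2f}x to {dlss_speedup(100, 50):.2f}x")
    print(f"Death Stranding: {dlss_speedup(160, 100):.2f}x to {dlss_speedup(170, 100):.2f}x")
```

The DLSS gain varies a lot between titles in these figures, which is one more reason not to read too much into any single number.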

Hello, my name is NICK Richardson. I’ve been an avid PC and tech fan since the good old days of RIVA TNT2 and 3dfx Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

MUSIC has always been my passion/roots, but I started gaming “casually” when I was young on Nvidia’s GeForce3 series of cards. I’m by no means an avid or a hardcore gamer though, but I just love stuff related to the PC, Games, and technology in general. I’ve been involved with many indie Metal bands worldwide, and have helped them promote their albums in record labels. I’m a very broad-minded down to earth guy. MUSIC is my inner expression, and soul.
