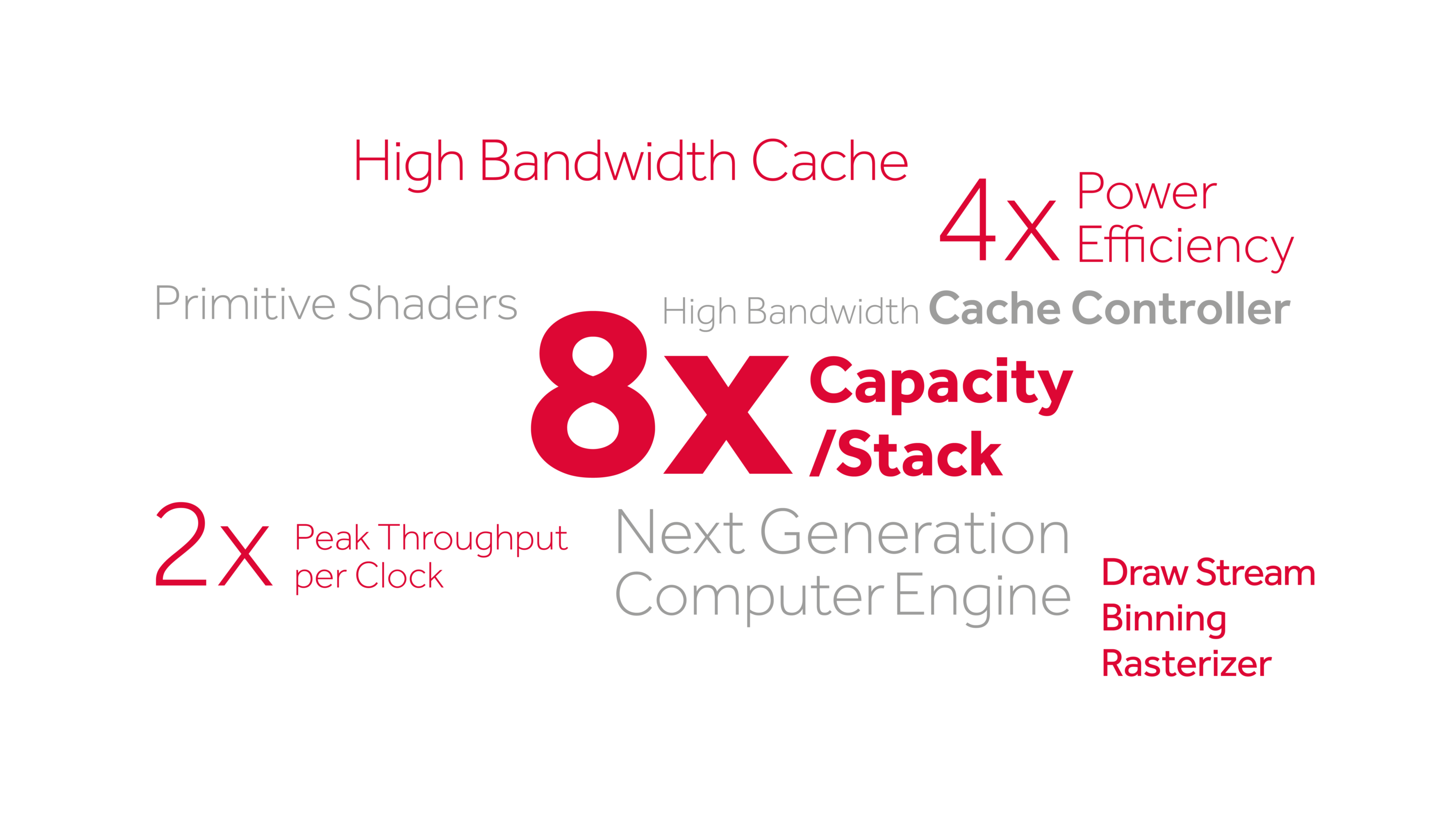

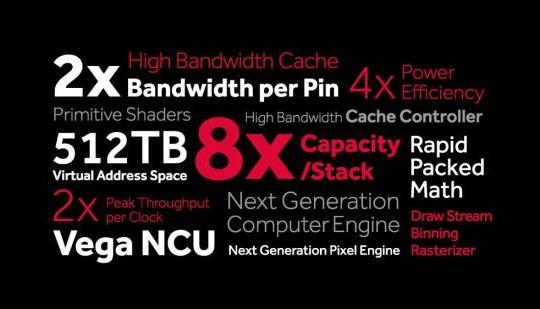

AMD will officially preview its Radeon Vega Architecture this Thursday; however, we have just received our first information about it via a leaked image. This image comes from AMD's official Vega website, so it is as legitimate as it gets.

As we can see, Vega will feature a High Bandwidth Cache and a High Bandwidth Cache Controller, will be 4X more efficient (our guess is that this is compared to Fiji's first-generation HBM, since Vega will take advantage of HBM2), will feature a Draw Stream Binning Rasterizer, will support a Next Generation Compute Engine, and will offer 2X peak throughput per clock.

As mentioned, AMD will preview its Vega Architecture this Thursday, so stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved (and still loves) the 16-bit consoles, and considers the SNES to be one of the best consoles ever made. Still, the PC platform won him over, mainly thanks to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on "The Evolution of PC graphics cards."
