Last week, NVIDIA officially revealed its RTX 50 series GPUs. The NVIDIA GeForce RTX 5090 and RTX 5080 are currently planned to come out on January 30th. And, from the looks of it, the high-end model will be around 30-40% faster than the RTX 4090.

Do note that these are preliminary results. We’ll know the exact performance gap between the RTX 5090 and the RTX 4090 once we get our hands on the RTX 5090 and test it.

For these preliminary results, we have two games that give us a pretty good idea of how the RTX 4090 and the RTX 5090 compare. The first one is Black Myth: Wukong and the second is Cyberpunk 2077.

For our tests, we used an AMD Ryzen 9 7950X3D with 32GB of DDR5 at 6000MHz, and the NVIDIA GeForce RTX 4090. We also used Windows 10 64-bit, and the GeForce 566.14 driver. Moreover, we’ve disabled the second CCD on our 7950X3D.

Before continuing, it’s crucial to note that we have a Founders Edition RTX 4090. That’s the key to these preliminary comparisons. By using an FE GPU, we should get a pretty good idea of how much faster the RTX 5090 is. If we had an AIB GPU, our comparisons wouldn’t be valid. After all, most – if not all – of the AIB GPUs are OCed. So, you can’t compare an OCed RTX 4090 with an RTX 5090 FE.

So, with this out of the way, let’s start. Frame Chasers was able to capture some gameplay footage from Black Myth: Wukong with and without DLSS 4. Without DLSS 4, the game ran at 29FPS on the RTX 5090. In the exact same location, our RTX 4090 was pushing 21FPS. So, we’re looking at a 38% performance improvement.

For Cyberpunk 2077, we used NVIDIA’s own video. In that video, the RTX 5090 was pushing 27FPS at Native 4K with Path Tracing. In that very same scene, our RTX 4090 was pushing 20FPS. This means that the RTX 5090 was 35% faster than the RTX 4090.
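For anyone who wants to check the math, here is a minimal Python sketch of how those percentages fall out of the FPS figures quoted above. The function name `uplift_percent` is just an illustrative helper, not part of any benchmarking tool.

```python
def uplift_percent(new_fps: float, old_fps: float) -> float:
    """Return how much faster new_fps is than old_fps, in percent."""
    return (new_fps / old_fps - 1.0) * 100.0


# FPS figures quoted in this article, as (RTX 5090, RTX 4090) pairs.
results = {
    "Black Myth: Wukong": (29, 21),
    "Cyberpunk 2077": (27, 20),
}

for game, (rtx_5090_fps, rtx_4090_fps) in results.items():
    gain = uplift_percent(rtx_5090_fps, rtx_4090_fps)
    print(f"{game}: RTX 5090 is about {gain:.0f}% faster")
```

Keep in mind that these are single-scene readings taken from video footage, so a difference of one frame either way shifts the result by several percentage points.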

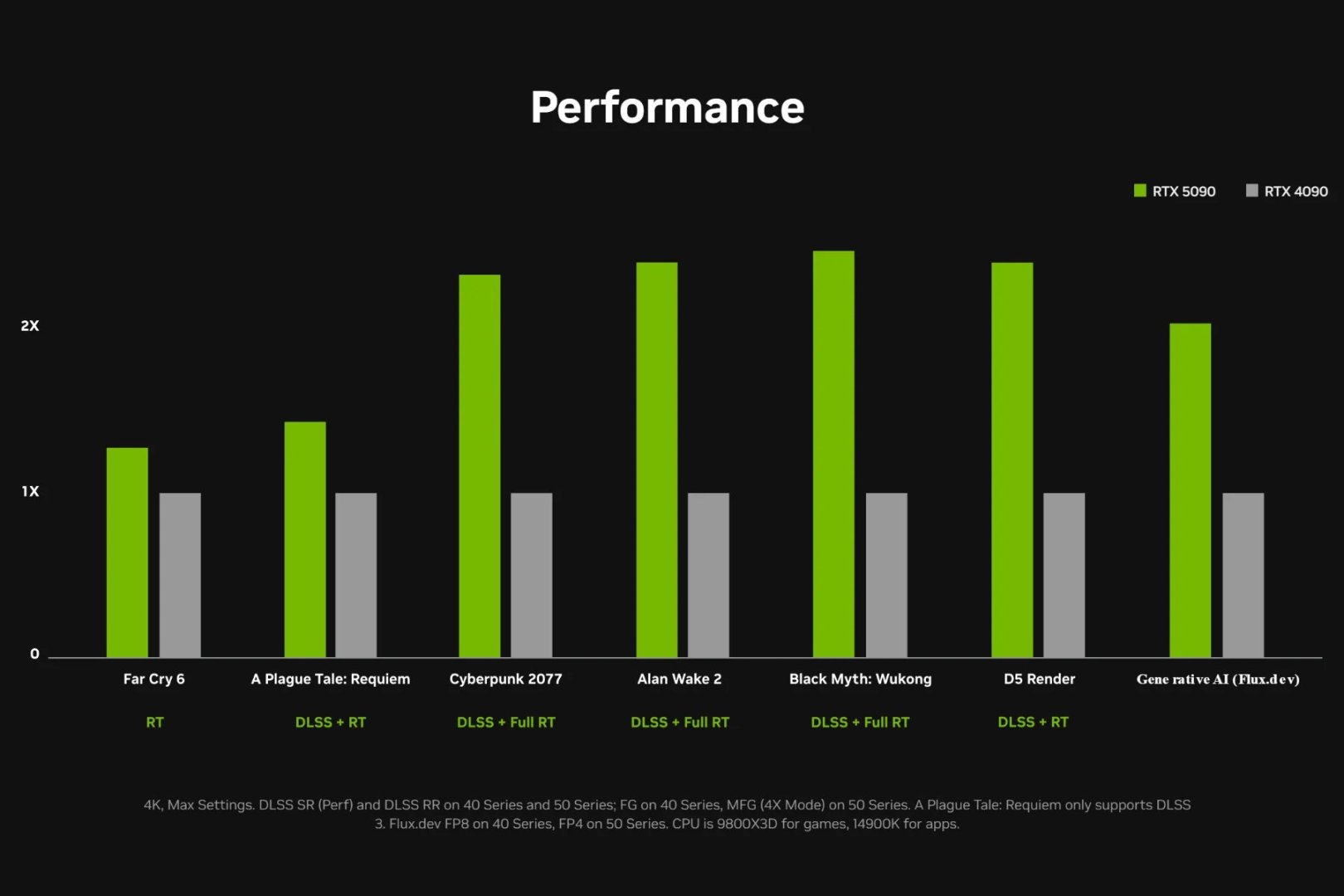

NVIDIA also shared some graphs when it announced its new GPUs. Without the new DLSS 4, the NVIDIA RTX 5090 appeared to be around 30-40% faster than the RTX 4090. This falls in line with our findings.

As I said, we won’t know the exact performance difference between the RTX 4090 and RTX 5090 until we test them ourselves. However, it’s safe to say that without DLSS 4, the RTX 5090 will be about 30-40% faster than the RTX 4090.

Now I don’t know if this percentage will disappoint any of you. However, that was exactly what I was expecting from the RTX 5090. And let’s be realistic. A 30-40% performance increase per generation is what we typically get. For instance, the RTX 3080Ti was 25-40% faster than the RTX 2080Ti. Or how about the RTX 4080 Super, which was 25-35% faster than the RTX 3080Ti?

All in all, the RTX 5090 will be noticeably faster than the RTX 4090, even without DLSS 4. However, you should temper your expectations. You will NOT be able to run path-traced games at Native 4K with 60FPS. If you are expecting something like that, you are simply delusional. For Ray Tracing games (or for UE5 games using Lumen), you’ll still have to use DLSS Super Resolution. For Path Tracing games, you’ll need DLSS 4 Frame Generation. And that’s in today’s games. Future titles will, obviously, have even higher GPU requirements.
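To put the 60FPS point into numbers, here is a rough Python sketch that uses the Native 4K Path Tracing figures from the Cyberpunk 2077 scene above. The helper `required_speedup` is hypothetical, and the FPS values are the preliminary ones from this article, not final benchmark results.

```python
TARGET_FPS = 60

# Native 4K Path Tracing readings from the Cyberpunk 2077 scene (preliminary).
native_fps = {"RTX 4090": 20, "RTX 5090": 27}


def required_speedup(current_fps: float, target_fps: float) -> float:
    """Return the multiplier needed to go from current_fps to target_fps."""
    return target_fps / current_fps


for gpu, fps in native_fps.items():
    factor = required_speedup(fps, TARGET_FPS)
    print(f"{gpu}: needs about {factor:.1f}x more performance for {TARGET_FPS}FPS")
```

In other words, based on these figures, the RTX 5090 would still have to more than double its frame rate in that scene to hit 60FPS without any upscaling or Frame Generation.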

Stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
