Just a few days ago, AMD officially announced its RDNA 2-based "Big Navi" graphics cards: the flagship Radeon RX 6900 XT, the RX 6800 XT and the RX 6800. The benchmarks shown at the RDNA 2 live event favored the Radeon RX 6900 XT, RX 6800 XT and RX 6800 over the competition, but none of them included ray tracing metrics.

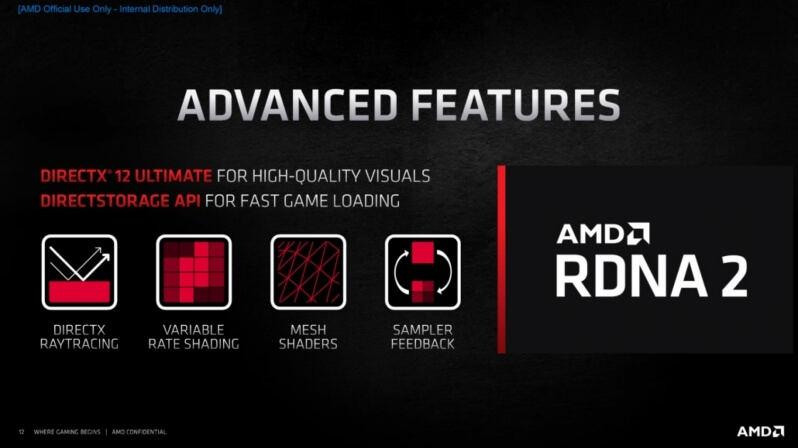

A lot of gamers have been waiting to hear about AMD’s approach to ray tracing. AdoredTV recently published a statement in which AMD confirms that it will support ray tracing in all games that do not rely on proprietary APIs and extensions, such as NVIDIA DirectX RTX or NVIDIA Vulkan RTX.

AMD confirmed that it will support only industry standards, which means Microsoft’s DXR API and Vulkan’s ray tracing API.

“AMD will support all ray tracing titles using industry-based standards, including the Microsoft DXR API and the upcoming Vulkan raytracing API. Games making use of proprietary raytracing APIs and extensions will not be supported.” (AMD marketing)

Both industry standards, Microsoft DXR and the Vulkan ray tracing API, are slowly gaining popularity, and the launch of AMD’s RX 6000-series GPUs is likely to push game developers further away from NVIDIA’s in-house, proprietary APIs. For what it’s worth, Intel also plans to support DXR with its Xe-HPG discrete GPU lineup.

In practice, this means that existing DXR titles, such as Control, will be supported, as will PC titles that use the official Vulkan ray tracing API. However, Wolfenstein: Youngblood and Quake II RTX will not be supported, because both of these titles use NVIDIA’s proprietary Vulkan RTX API extensions.

Because games like these rely on non-standard, proprietary API extensions, AMD’s RDNA 2 cards won’t support ray tracing in them. Most of these games can still run with ray tracing disabled, though, and still offer a satisfying visual and gaming experience.

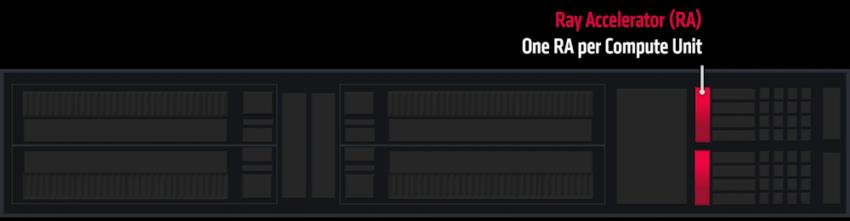

In case you didn’t know, the AMD Radeon RX 6000 series also features dedicated ray tracing hardware. An official slide, shown above, describes the component these GPUs use for ray tracing: the Ray Accelerator (RA). Each Compute Unit carries one Ray Accelerator.

These RA units handle the hardware acceleration of ray tracing in games. The RX 6900 XT features 80 RAs, the RX 6800 XT 72, and the RX 6800 60. The same Ray Accelerators can be found in the RDNA 2-based next-gen gaming consoles.

“New to the AMD RDNA 2 compute unit is the implementation of a high-performance ray tracing acceleration architecture known as the Ray Accelerator,” AMD’s description reads. “The Ray Accelerator is specialized hardware that handles the intersection of rays providing an order of magnitude increase in intersection performance compared to a software implementation.”

AMD will launch the Radeon RX 6800 and RX 6800 XT graphics cards on November 18th. The flagship AMD Radeon RX 6900 XT will be available on December 8th and will cost around $999.

Thanks, OC3D.

Stay tuned for more!

Hello, my name is Nick Richardson. I’ve been an avid PC and tech fan since the good old days of the RIVA TNT2 and 3dfx Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

Music has always been my passion and my roots, but I started gaming “casually” when I was young, on NVIDIA’s GeForce3 series of cards. I’m by no means a hardcore gamer, but I just love anything related to PCs, games, and technology in general. I’ve been involved with many indie metal bands worldwide and have helped them promote their albums through record labels. I’m a very broad-minded, down-to-earth guy. Music is my inner expression and my soul.
