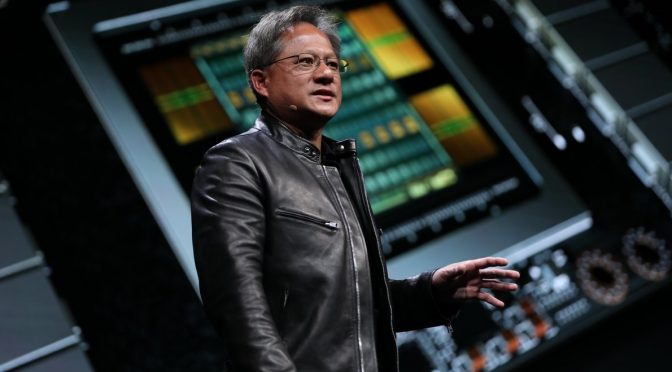

During his GTC China 2019 keynote presentation, NVIDIA’s CEO Jensen Huang claimed that the GeForce RTX2080 is more powerful than a next-gen console. In short, Huang claims that both the RTX2080 and the RTX2080Ti will be more powerful than either the PS5 or the next Xbox console.

Now there are some interesting details to note here, so hold on to your butts.

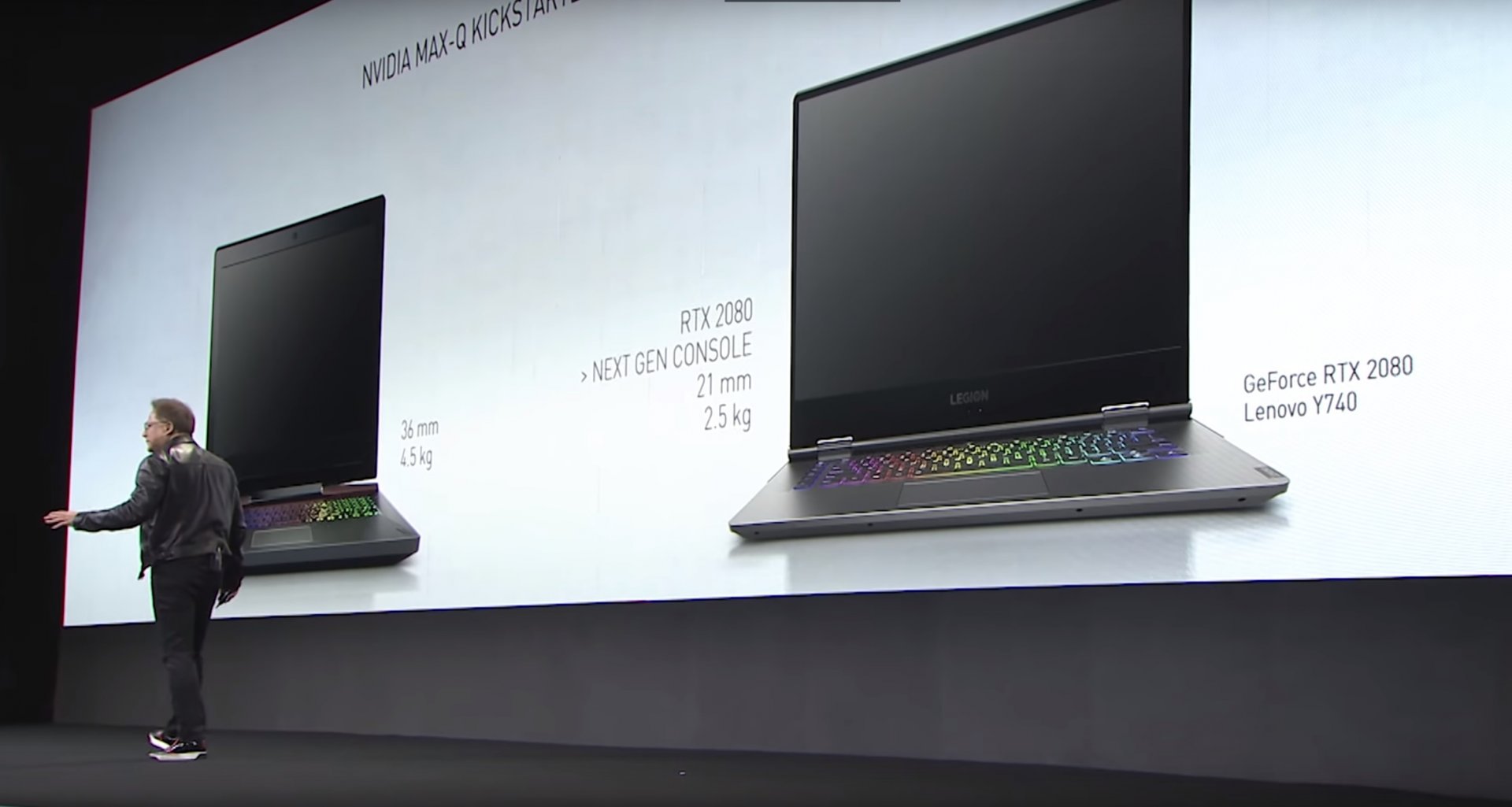

NVIDIA’s slide compares the RTX2080 with only one next-gen console. As such, we don’t know whether NVIDIA is comparing it with PS5 or the next-gen Xbox. We also don’t know whether the green team is comparing the RTX2080 with Lockhart (the less powerful next-gen Xbox console).

Let’s also not forget that the RTX2080 has been out for one and a half years. Furthermore, both next-gen consoles will launch roughly one year from now. This means that both MS and Sony still have time to further tweak the frequencies of their GPUs. Not only that, but console GPUs are more efficient when it comes to overall performance and optimization.

Since NVIDIA did not clarify in its slide which next-gen console this actually is, we can only speculate. As mentioned, this could very well be Lockhart and not the high-end model of the next-gen Xbox console. Still, it may give you an idea of how powerful these new consoles will actually be.

Lastly, it will be interesting to see whether the RTX2080 will be able to handle the first next-gen games. It will also be interesting to see whether NVIDIA’s new GPUs will be significantly faster than the RTX2080Ti, a GPU that NVIDIA hints is way more powerful than both next-gen consoles.

Below you can find a screenshot from that slide.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
