Nvidia today released its latest beta driver for its graphics cards. According to the release notes, the GeForce 337.50 Beta drivers pack key DirectX optimizations that reduce game-loading times and significantly increase performance across a wide variety of games. Nvidia also said these gains would be noticeable in CPU-bound titles, so we decided to put the drivers to the test. The end result pleased us, but the performance boost is not as large as the one we witnessed with AMD’s Mantle.

Our Q9650 was the perfect CPU for this test. While it can still run all the latest titles without major issues, it is also the kind of CPU that benefits most from such optimizations. AMD’s Mantle shone on low/mid-end CPUs, so we expected Nvidia’s latest driver to give us a noticeable performance boost.

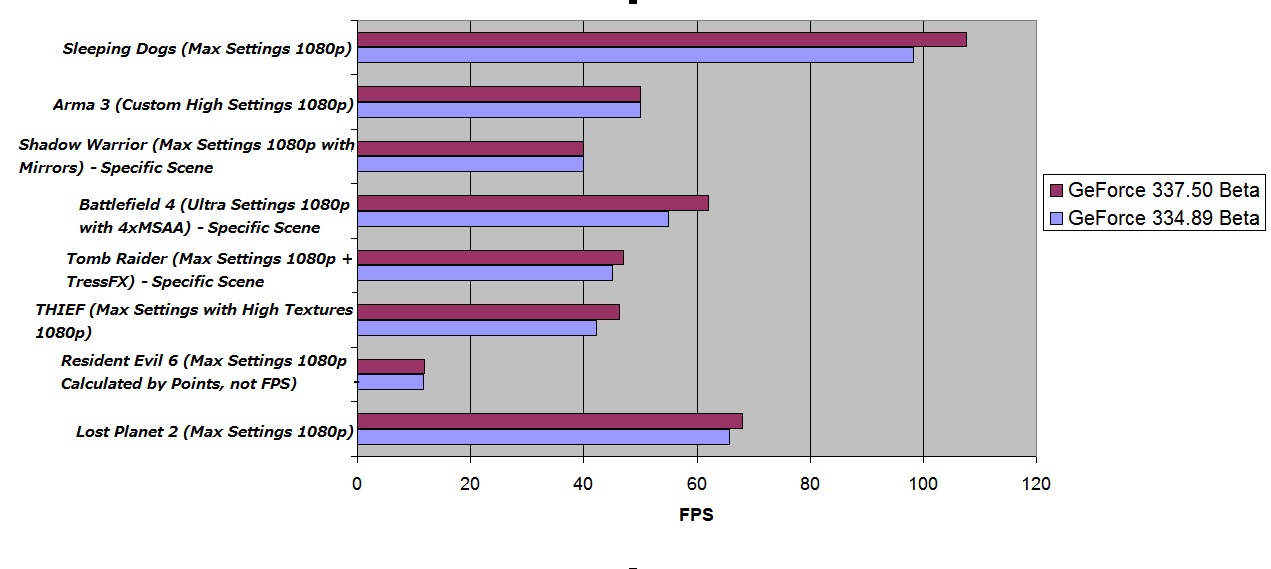

Well, after testing both GeForce 334.89 and GeForce 337.50, we have to admit that only a couple of games showed noticeable improvements. Unfortunately, many titles were barely affected by the new driver, suggesting that Nvidia’s optimizations do not apply to all DirectX titles.

Arma 3 and Shadow Warrior (with the Mirrors option enabled, a setting that stresses both the CPU and the GPU) did not receive any performance boost at all. Tomb Raider, on the other hand, saw a 2fps improvement. Lost Planet 2’s Test B benchmark improved by 3.5fps, while THIEF’s benchmark saw a minor 4fps improvement.

Battlefield 4 and Sleeping Dogs, however, surprised us with their performance boost. Battlefield 4 saw a 7-10fps increase in scenes where we were CPU-limited, and Sleeping Dogs’ benchmark ran without any stuttering whatsoever. On top of that, Sleeping Dogs’ framerate increased by 7-9fps.

Note that Resident Evil 6’s performance was measured by its benchmark score. The game scored 11,693 points with the GeForce 334.89 Beta drivers and 11,867 points with the GeForce 337.50 Beta drivers, a gain of 174 points (roughly 1.5%).

Unfortunately, we were unable to test Assassin’s Creed IV: Black Flag because our key was not working (Ubisoft deactivated review codes after the game’s official release).

All in all, while this new driver is better than the previous ones released by the green team, it does not match what AMD achieved with Mantle. Although its improvements will help many gamers in a number of CPU-bound titles, this new driver does not put AMD’s API to shame. Mantle was able to double the performance of AMD’s GPUs when paired with low/mid-tier CPUs, something this driver does not even come close to.

Below you can find the scenes that were tested in Tomb Raider, Shadow Warrior, Arma 3 and Battlefield 4.

John is the founder and Editor-in-Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved (and still loves) the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over, mainly thanks to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher-degree thesis on “The Evolution of PC graphics cards.”
