It appears that PC gamers have found a way to enable DLSS 3 Frame Generation on NVIDIA’s RTX 30 and 20 series GPUs in Portal Prelude RTX.

So, let’s start with the good news. By simply using DLSS FG DLL 1.0.1 (you can download it from TechPowerUp), you can enable DLSS 3 Frame Generation on GPUs like the RTX 2080 Ti or the RTX 3080. Generally speaking, this workaround works with all RTX 20 and RTX 30 series graphics cards. Do note that neither the original DLL nor any version other than 1.0.1 works. Moreover, this “workaround” only works in Portal Prelude RTX. It does not work in other DLSS 3 games.

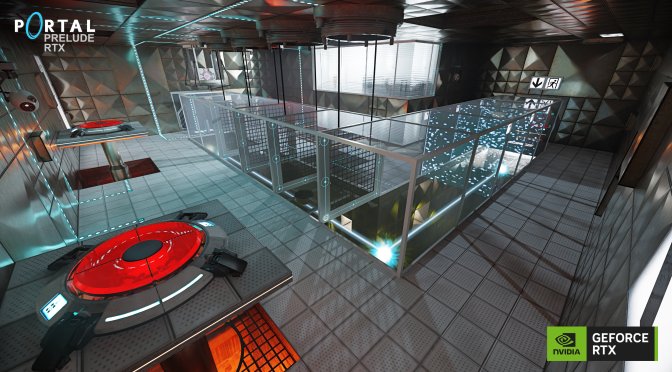

Now the bad news is that the game is completely unplayable. As the video footage shows, there are major frame pacing issues on the RTX 3060 with DLSS 3 Frame Generation. Despite the high framerate, the camera is also jittery, making the game feel awful. To put it simply, it’s a stuttering mess.
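To illustrate why a high framerate on its own doesn’t guarantee a smooth experience, here is a minimal Python sketch. The frame times are made-up numbers, not measurements from Portal Prelude RTX, and `pacing_stats` is a hypothetical helper written for this example.

```python
from statistics import mean, pstdev


def pacing_stats(frame_times_ms):
    """Summarize a list of per-frame times (in milliseconds)."""
    avg_ms = mean(frame_times_ms)
    return {
        # Average framerate, which is what an FPS counter typically reports.
        "avg_fps": 1000.0 / avg_ms,
        # Spread of the frame times; a larger value means more uneven pacing.
        "frame_time_stdev_ms": pstdev(frame_times_ms),
        # Longest single frame, a rough proxy for visible stutter.
        "worst_frame_ms": max(frame_times_ms),
    }


# Hypothetical data: both runs average 10 ms per frame (100 FPS),
# but the second alternates between very short and very long frames.
even_run = [10.0] * 8
uneven_run = [2.0, 18.0] * 4

print(pacing_stats(even_run))
print(pacing_stats(uneven_run))
```

Both runs report the same average framerate, yet the second one has a much larger frame time deviation and a far longer worst frame, which is the kind of pattern that feels like stutter.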

For those wondering: yes, this actually does work, and this isn’t a fake video. Other PC gamers have also been able to enable DLSS 3 Frame Generation on their GPUs, and all of them report the exact same experience. While DLSS 3 Frame Generation manages to double their framerates, the overall gaming experience is worse with DLSS 3 enabled.

To be honest, I’m not really surprised by this. NVIDIA has told us that DLSS 3 Frame Generation could work on the RTX 20 and RTX 30 series GPUs. The reason NVIDIA has not enabled it is that it currently has major issues on those cards. And this is exactly what the green team meant.

DLSS 3 Frame Generation takes advantage of the faster Optical Flow Accelerator found in the RTX 40 series GPUs. This is why DLSS 3 Frame Generation works as well as it does. So no, I don’t think you can say that NVIDIA has locked this feature behind the RTX 40 series just so it can screw its RTX 20 and RTX 30 customers. I mean, the proof is right here. With DLSS 3, Portal Prelude RTX is completely unplayable on the RTX 3060.

In a way, these frame pacing issues are reminiscent of those we saw in AMD’s FSR 3.0. As we’ve reported, both Immortals of Aveum and Forspoken suffer from major frame pacing issues when using FSR 3’s Frame Generation on VRR displays. So, this could be another indication of how important the Optical Flow Accelerator actually is for DLSS 3.

It will be interesting to see whether gamers will find a way to somehow improve DLSS 3 Frame Generation on the RTX 20 and RTX 30 series GPUs. I doubt this is possible but hey, you never know!

UPDATE:

NVIDIA has issued an official statement on the matter, which you can find below.

“We’ve noticed that some members of the PC gaming community, like Discord Communities before it, have encountered a bug in an old v1.0.1.0 DLSS DLL that falsely appears to generate frames on 20/30-series hardware. There is no DLSS Frame Generation occurring—just raw duplication of existing frames. Frame counters show a higher FPS, but there is no improvement in smoothness or experience. This can be verified by evaluating the duplicated frames using tools like NVIDIA ICAT, or viewing the error message in the Remix logs indicating an error with frame generation.

DLSS frame generation DLLs have since fixed this bug, and NVIDIA will be issuing an update to RTX Remix in the future to prevent further confusion when frame generation is behaving incorrectly.”
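To make the difference between a reported framerate and genuinely new frames concrete, here is a small Python sketch. It is only an illustration of the idea in NVIDIA’s statement, not how NVIDIA ICAT works: `effective_fps` is a hypothetical helper, the frames are stand-in byte strings, and it assumes duplicated frames are byte-for-byte identical (real captures would likely need a tolerance).

```python
def effective_fps(frames, reported_fps):
    """Count consecutive duplicate frames and estimate the unique framerate.

    frames: sequence of frame contents (e.g. bytes), in display order.
    reported_fps: the number shown by an on-screen frame counter.
    Returns (duplicate_count, estimated_unique_fps).
    """
    if not frames:
        raise ValueError("need at least one frame")

    unique = 1  # the first frame is always new
    for previous, current in zip(frames, frames[1:]):
        # Assumption: a duplicated frame is exactly equal to the one before it.
        if current != previous:
            unique += 1

    duplicates = len(frames) - unique
    return duplicates, reported_fps * unique / len(frames)


# Hypothetical capture: every frame is shown twice.
capture = [b"A", b"A", b"B", b"B", b"C", b"C"]
print(effective_fps(capture, reported_fps=120))  # (3, 60.0)
```

In this made-up capture the counter would read 120 FPS, but only half of the frames carry new content, so the motion on screen is no smoother than at 60 FPS.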

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
