Written by Metal Messiah

On HardwareLeaks, the website run by “rogame” (the well-known hardware leaker on Twitter), several new “Navi 21” GPU IDs have leaked, giving us some idea of AMD’s plans for its next-gen GPU lineup.

According to “rogame”, AMD is planning several Navi 21 GPU variants based on the “RDNA 2” architecture, along with a Navi 10 “refresh” lineup based on the older “RDNA 1” architecture and silicon. Going by this leak and other rumors, AMD plans to release a next-generation RDNA 2-based “Big Navi” 2X lineup, as well as RDNA 1 parts offered as a “refresh” of the existing Radeon RX 5000 series cards.

With the Navi 2X lineup, AMD’s primary focus is on next-gen “enthusiast” and “flagship” offerings that can take on NVIDIA’s current flagships, such as the GeForce RTX 2080 Ti and the GeForce RTX 2080 SUPER. These cards might also challenge NVIDIA’s next-gen “Ampere” gaming GPU lineup.

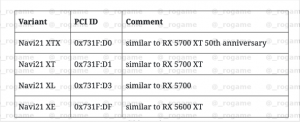

Four variants are listed for the high-end Navi 21 “gaming cards”, and these will power AMD’s enthusiast-class GPUs. The information comes from the leaked PCI IDs of the GPUs.

The four SKUs are Navi 21 XTX (0x731F:D0), Navi 21 XT (0x731F:D1), Navi 21 XL (0x731F:D3), and Navi 21 XLE (0x731F:DF). Each of these Navi 21 SKUs might replace an existing card in the Radeon RX 5000 series family, though they might target a completely different performance segment, most probably “high-end”.
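Each leaked entry pairs a PCI device ID (0x731F) with a revision ID (D0, D1, D3, DF), so the four SKUs share one device ID and are told apart only by the revision. A minimal, hypothetical sketch of such a lookup in Python is shown below; the table simply restates the IDs from this leak, and the helper names (`parse_pci_id`, `lookup_sku`) are made up for illustration rather than taken from any AMD driver.

```python
# Hypothetical lookup table built only from the leaked Navi 21 gaming IDs.
# Keys are (device ID, revision ID) pairs; values are the rumored SKU names.
LEAKED_NAVI21_IDS = {
    (0x731F, 0xD0): "Navi 21 XTX",
    (0x731F, 0xD1): "Navi 21 XT",
    (0x731F, 0xD3): "Navi 21 XL",
    (0x731F, 0xDF): "Navi 21 XLE",
}


def parse_pci_id(pci_id: str) -> tuple[int, int]:
    """Split a 'DEVICE:REVISION' string such as '0x731F:D0' into two integers."""
    device, revision = pci_id.split(":")
    # int(..., 16) accepts hex with or without a leading '0x'.
    return int(device, 16), int(revision, 16)


def lookup_sku(pci_id: str) -> str:
    """Return the rumored SKU name for a leaked ID, or 'unknown' if not listed."""
    return LEAKED_NAVI21_IDS.get(parse_pci_id(pci_id), "unknown")


print(lookup_sku("0x731F:D1"))  # Navi 21 XT
```

Because the device ID is identical across all four entries, only the revision byte separates, say, the XTX from the XLE.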

The list includes several “cut-down” Navi 21 variants, which could mean that AMD is trying to reuse these chips as much as possible. So we could see a new Radeon RX 6600 SKU built on the same silicon, or whatever name AMD decides to give it.

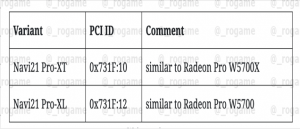

Apart from these gaming graphics cards, there are also two “Radeon Pro” variants based on the RDNA 2 Navi design: “Navi 21 Pro-XT (0x731F:10)” and “Navi 21 Pro-XL (0x731F:12)”. These might replace existing Pro cards such as the Radeon Pro W5700X/W5700 series.

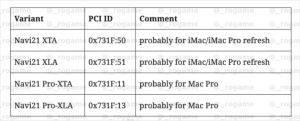

AMD is also preparing at least four variants for Apple’s next-generation products. The leak from “rogame” also lists several PCI IDs for the Navi 22 and Navi 23 GPUs.

Finally, there is the Navi 10 RDNA 1-based “gaming” refresh lineup. At least three Navi 10 Refresh SKUs are in the works: Navi 10 XT+ (731F:E1), Navi 10 XM+ (731F:E3), and Navi 10 XTE+ (731F:E7).

According to this leak, the Navi 10 XT+ should replace the Navi 10 XT GPU that powers the current RX 5700 XT, the Navi 10 XM+ should replace the Navi 10 XM that powers the current RX 5600M, and the Navi 10 XTE+ should replace the GPU that currently powers the RX 5600 XT.

These parts will mostly target the “mainstream” GPU segment, unlike the big Navi 21, which is aimed at the “high-end” and enthusiast segments. With these refreshed parts, we can expect much lower price points than the existing Radeon RX 5000 series lineup.

AMD RDNA 2 Navi 21, Navi 22, and Navi 23 GPU device IDs for Radeon RX and Radeon Pro graphics cards. (Image credit: rogame)

“User’s Articles” is a column dedicated to the readers of DSOGaming. Readers can submit their stories, and the DSOGaming editorial team decides which stories to publish. All credit for these stories goes to the writers named at the beginning of each story.