Sea of Thieves is a new shared-world adventure game from Rare that is available exclusively on Microsoft’s platforms, allowing players to take the role of a pirate and sail the seas of a fantastical world. The game has just been released so it’s time to benchmark it and see how it performs on the PC platform.

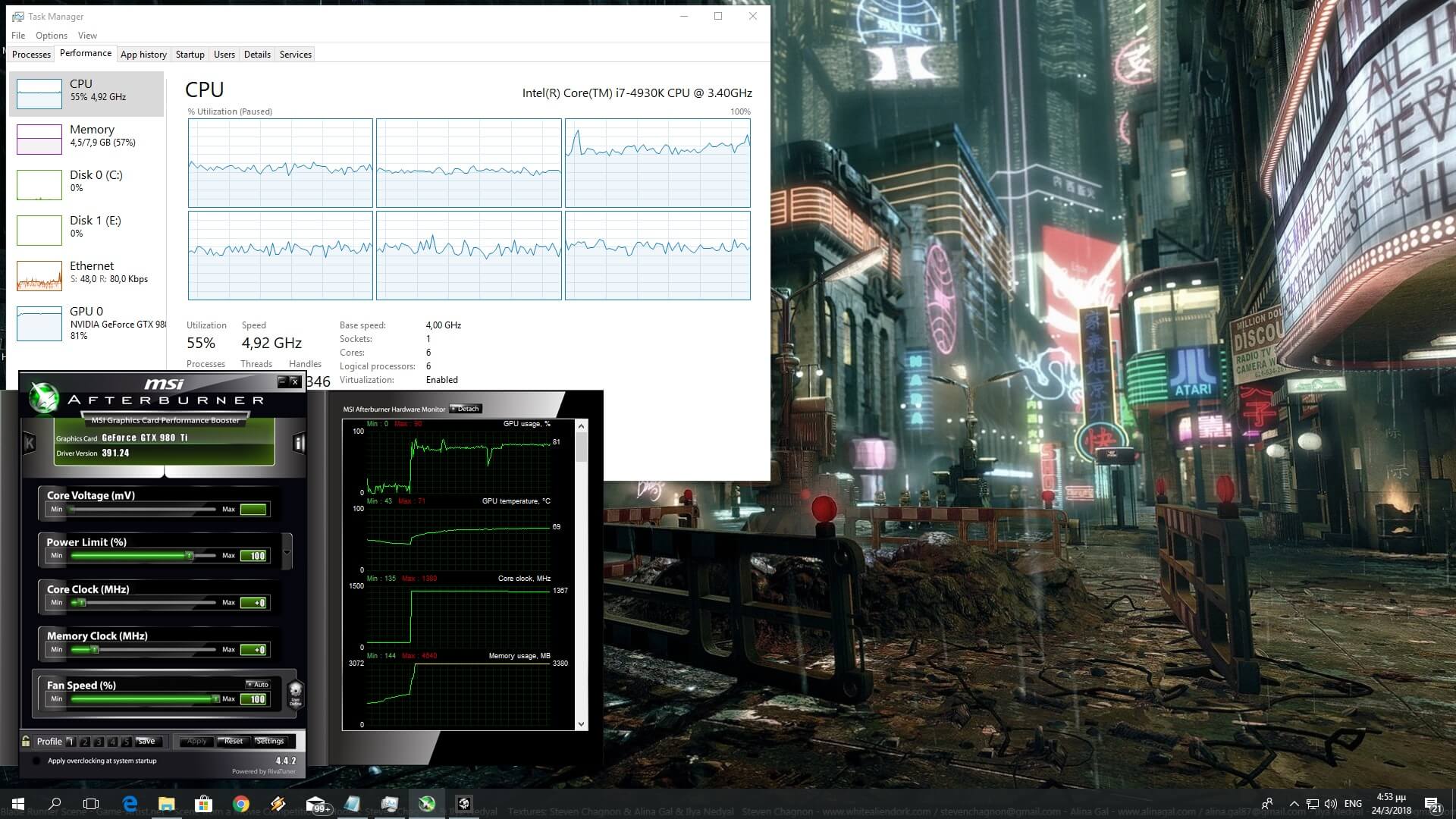

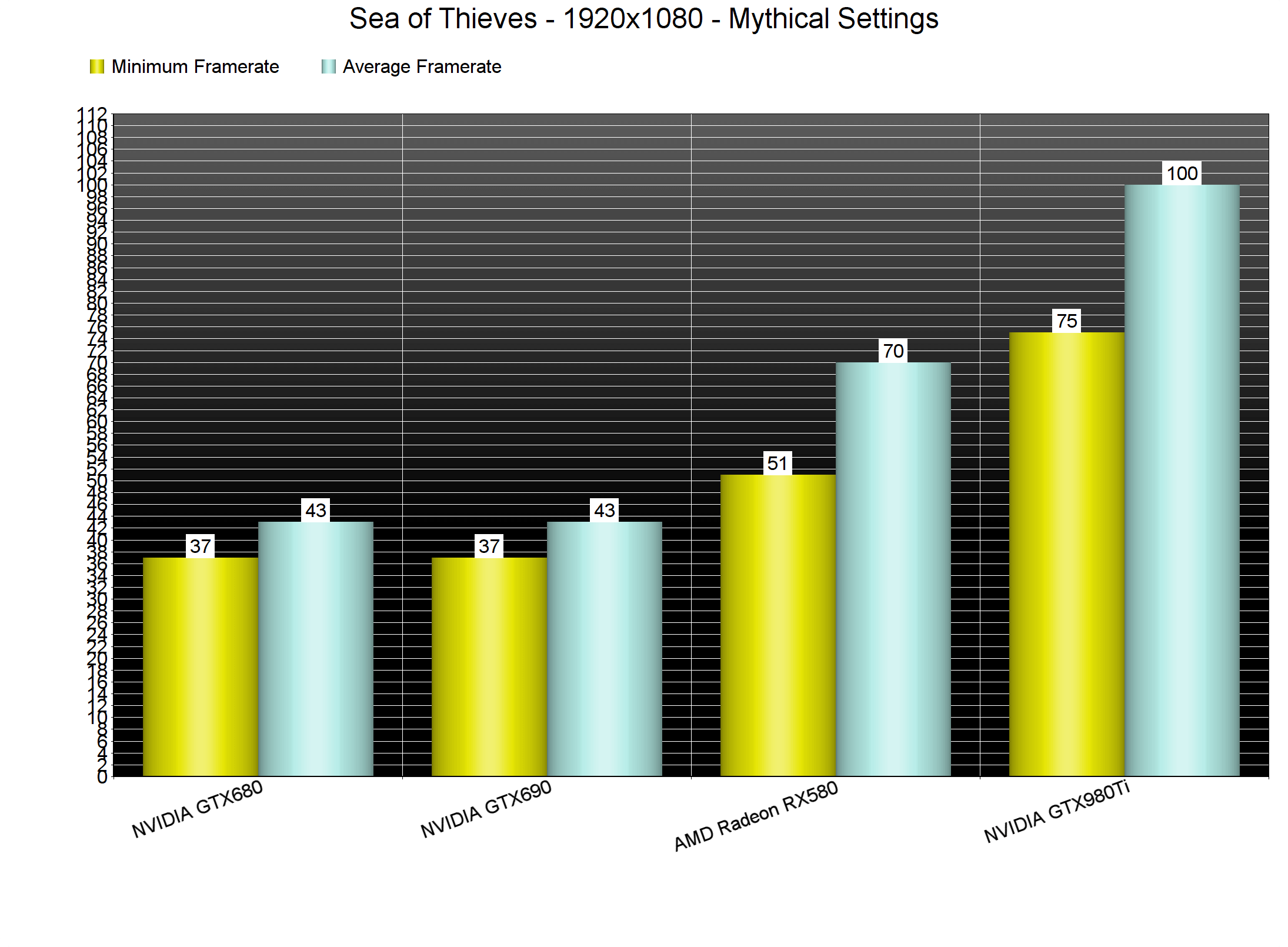

For this PC Performance Analysis, we used an Intel i7 4930K (overclocked to 4.2GHz) with 8GB RAM, AMD’s Radeon RX580, NVIDIA’s GTX980Ti and GTX690, Windows 10 64-bit and the latest versions of the GeForce and Catalyst drivers. NVIDIA has not included an SLI profile for this game, meaning that our GTX690 behaved similarly to a single GTX680 (notice a trend lately with SLI?).

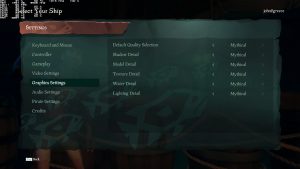

Rare has added a few graphics settings to tweak. PC gamers can adjust the quality of Shadows, Models, Textures, Water and Lighting. Gamers can also lock their framerate to 15, 30, 60, 90 or 120fps (or leave it uncapped), and there is an option for Performance Metrics.


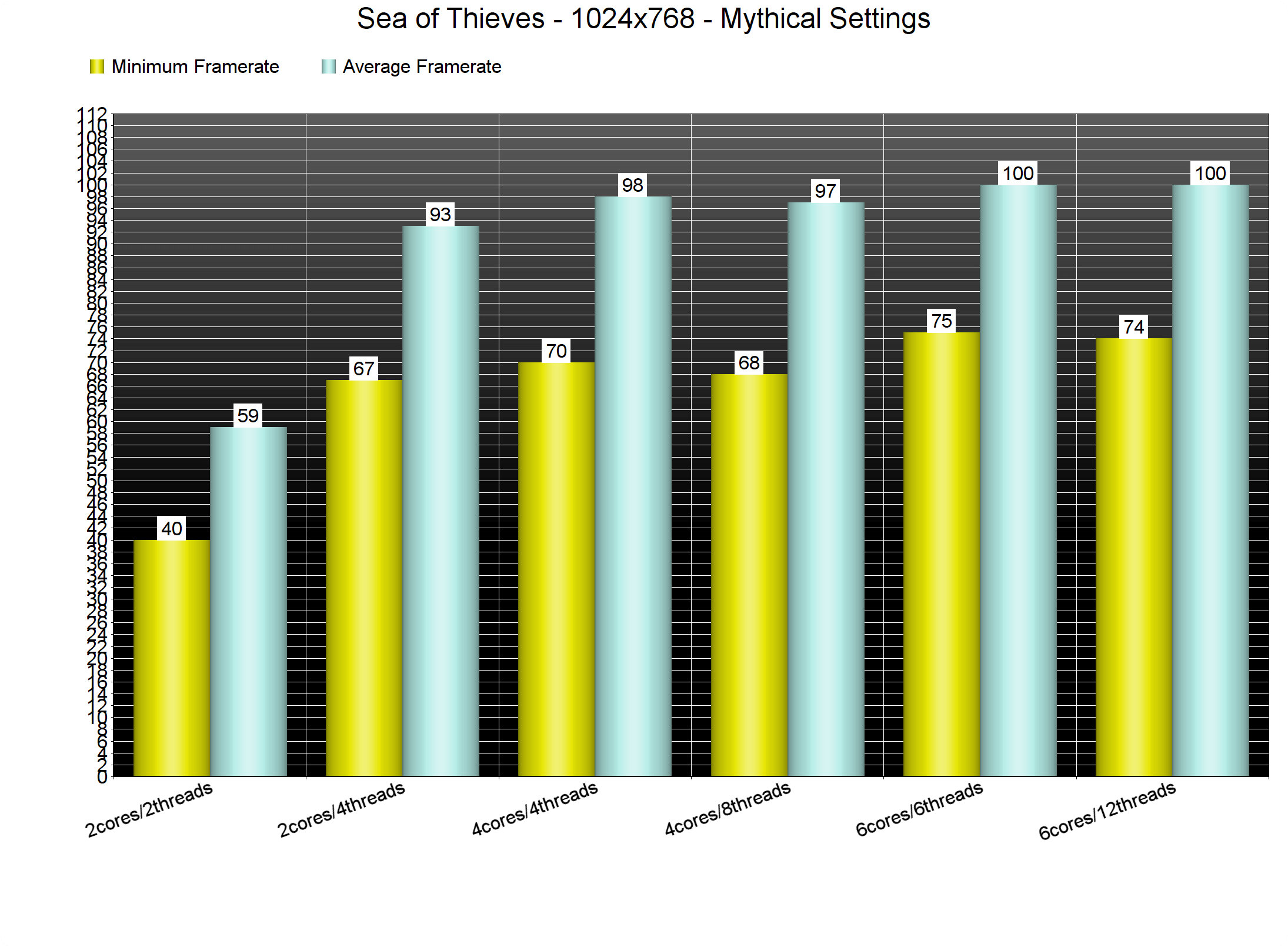

In order to find out how the game performs on a variety of CPUs, we simulated a dual-core and a quad-core CPU. For our CPU tests, we lowered our resolution to 1024×768 in order to eliminate any possible GPU bottleneck. Without Hyper-Threading enabled, our simulated dual-core system was unable to offer a smooth gaming experience. With Hyper-Threading enabled, our simulated dual-core system was able to run the game with a minimum of 67fps and an average of 93fps. Our six-core and our simulated quad-core systems had no trouble running the game, and performed similarly.
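For readers who want to reproduce minimum and average figures like the ones above from their own captures, here is a minimal sketch. It assumes you have a list of per-frame render times in milliseconds from some capture tool; the function name `fps_summary` is hypothetical, and this is not necessarily how our own numbers were recorded (many tools report the minimum per second rather than per frame).

```python
def fps_summary(frame_times_ms):
    """Return (minimum_fps, average_fps) for per-frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # The slowest single frame defines the minimum instantaneous framerate.
    min_fps = 1000.0 / max(frame_times_ms)
    # Average fps is frames rendered divided by total elapsed time,
    # which is not the same as averaging the per-frame fps values.
    avg_fps = len(frame_times_ms) * 1000.0 / sum(frame_times_ms)
    return min_fps, avg_fps


if __name__ == "__main__":
    # Made-up sample: three 10ms frames and one 20ms hitch.
    print(fps_summary([10.0, 10.0, 20.0, 10.0]))
```

Computing the average from total time keeps a few long frames from being hidden by many short ones.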

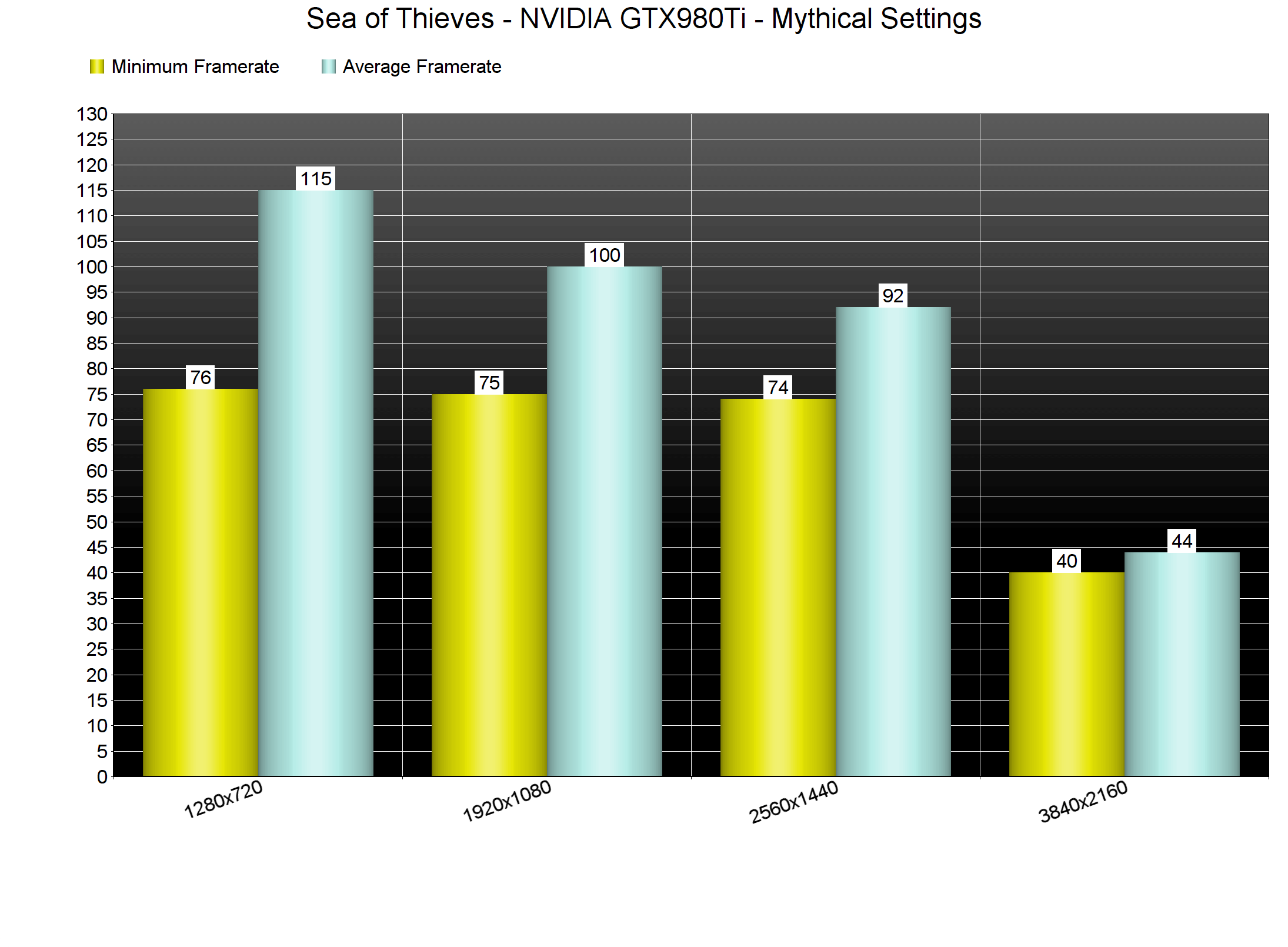

Regarding its GPU requirements, Sea of Thieves needs a mid-range GPU in order to be enjoyed. Our AMD Radeon RX580 was unable to offer a constant 60fps experience on Mythical settings at 1080p. In order to hit a minimum framerate of 60fps, we had to lower our settings to the Rare preset. On the other hand, our GTX980Ti was able to run the game at both 1080p and 1440p at a constant 60fps. At this point, we should note that our GTX980Ti was underused in a variety of scenes (especially in the starting town). Normally, this would hint at a CPU bottleneck; however, we can clearly see that our CPU was never stressed in those scenes. Moreover, the game is using the DX11 API instead of the DX12 API. Therefore, this could be caused by an increased number of draw calls (though this is just an assumption).
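The reasoning above can be sketched as a rough rule of thumb. This is only an illustration: the function name `classify_bottleneck`, the 90% threshold and the sample numbers are all assumptions, not measurements from our tests. The point it shows is that a GPU can be underused while overall CPU usage looks low, if a single core (for example, one submitting draw calls under DX11) is saturated.

```python
def classify_bottleneck(gpu_util, per_core_util, busy=90.0):
    """Guess what limits framerate from utilization percentages (0-100).

    gpu_util: GPU usage in percent.
    per_core_util: list of per-core CPU usage in percent.
    busy: hypothetical threshold above which a unit counts as saturated.
    """
    if gpu_util >= busy:
        return "gpu-bound"
    # All cores busy on average: a classic CPU bottleneck.
    if sum(per_core_util) / len(per_core_util) >= busy:
        return "cpu-bound"
    # One saturated core with idle neighbours: overall CPU usage looks low,
    # yet a single thread may still be holding the GPU back.
    if max(per_core_util) >= busy:
        return "single-thread-bound"
    return "unclear"


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    print(classify_bottleneck(60.0, [95.0, 20.0, 15.0, 10.0]))
```

If neither the GPU nor any single core is saturated, the sketch returns "unclear", which matches the situation we describe: the cause can only be guessed at.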

As noted, our GTX980Ti was able to run the game with more than 60fps at all times at 1080p and 1440p. At 4K and on Mythical settings, our GTX980Ti pushed a minimum of 40fps and an average of 44fps.

Graphics-wise, Sea of Thieves has a cartoon-ish look that may put off a number of players. Rare has done an incredible job with its ocean simulation system and it’s not a stretch to say that this game features some of the best water effects we’ve seen in a video game. Compared to its E3 2015 reveal, however, the game’s lighting system appears to have taken a hit, though it still looks great. Rare has used light propagation volumes for its Global Illumination solution and has created some truly amazing ‘fluffy’ clouds. Most textures are of high quality, though we do have to note that the game’s ‘cartoon-ish’ style makes it possible to hide some low-res textures. Sea of Thieves looks very good and cute, but it does not really push the graphical boundaries of video games.

In conclusion, Sea of Thieves performs great on the PC platform. The game does not require a high-end PC system in order to be enjoyed, and we did not notice any mouse acceleration or smoothing issues. There are also proper mouse+keyboard on-screen indicators, as you’d expect from a PC game. It’s not perfect, as we still can’t figure out what was causing our GPU to be underused in some scenes. Again, our guess is an increased number of draw calls that the DX11 API cannot handle ideally but, as we’ve said, this is just an assumption. Rare will have to take a look and improve things.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
