Bloober Team has just released Layers of Fear, one of the first games using Unreal Engine 5, on PC. And, since Bloober Team has provided us with a review code, we’ve decided to benchmark it and see how it performs on the PC platform.

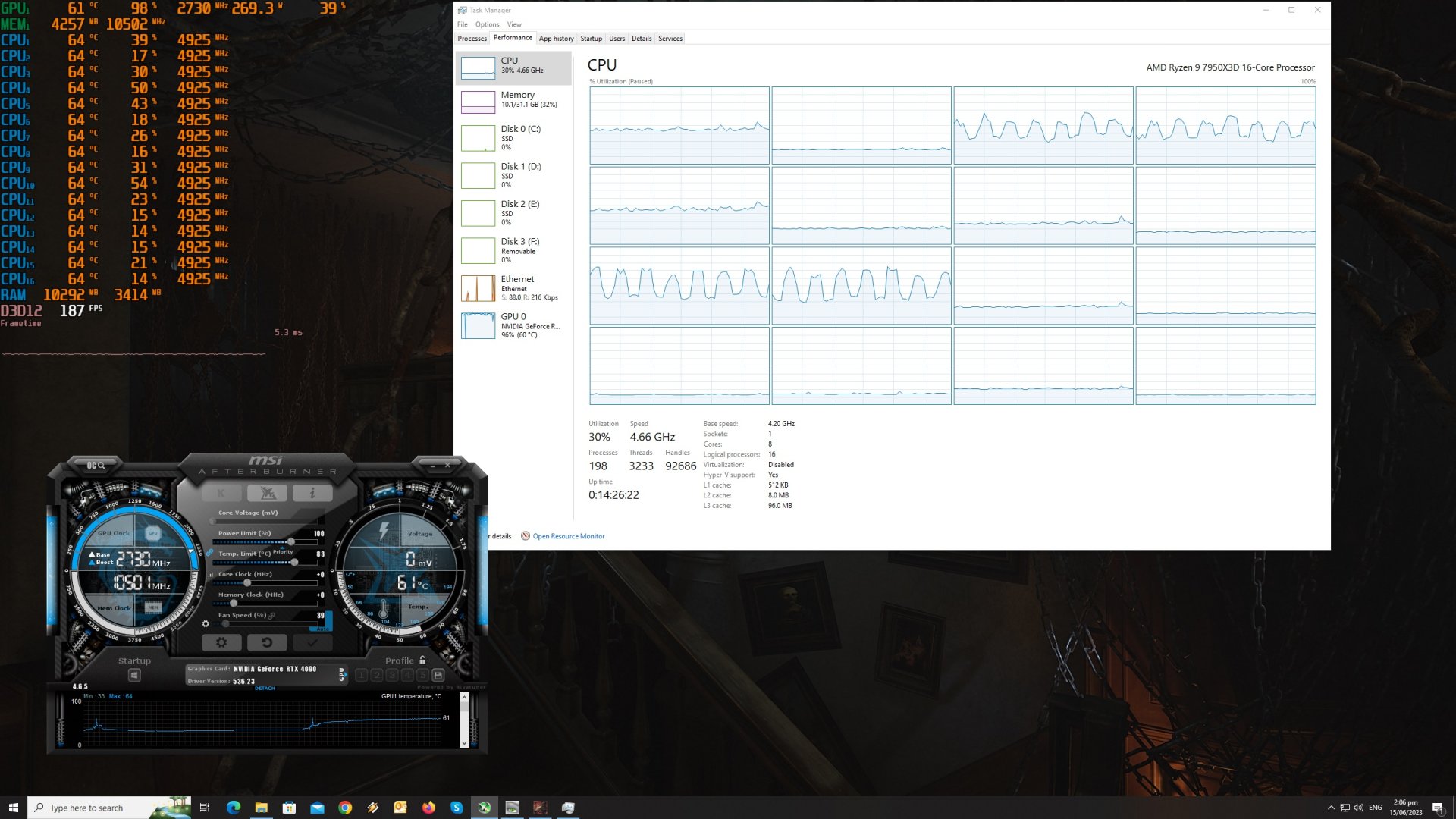

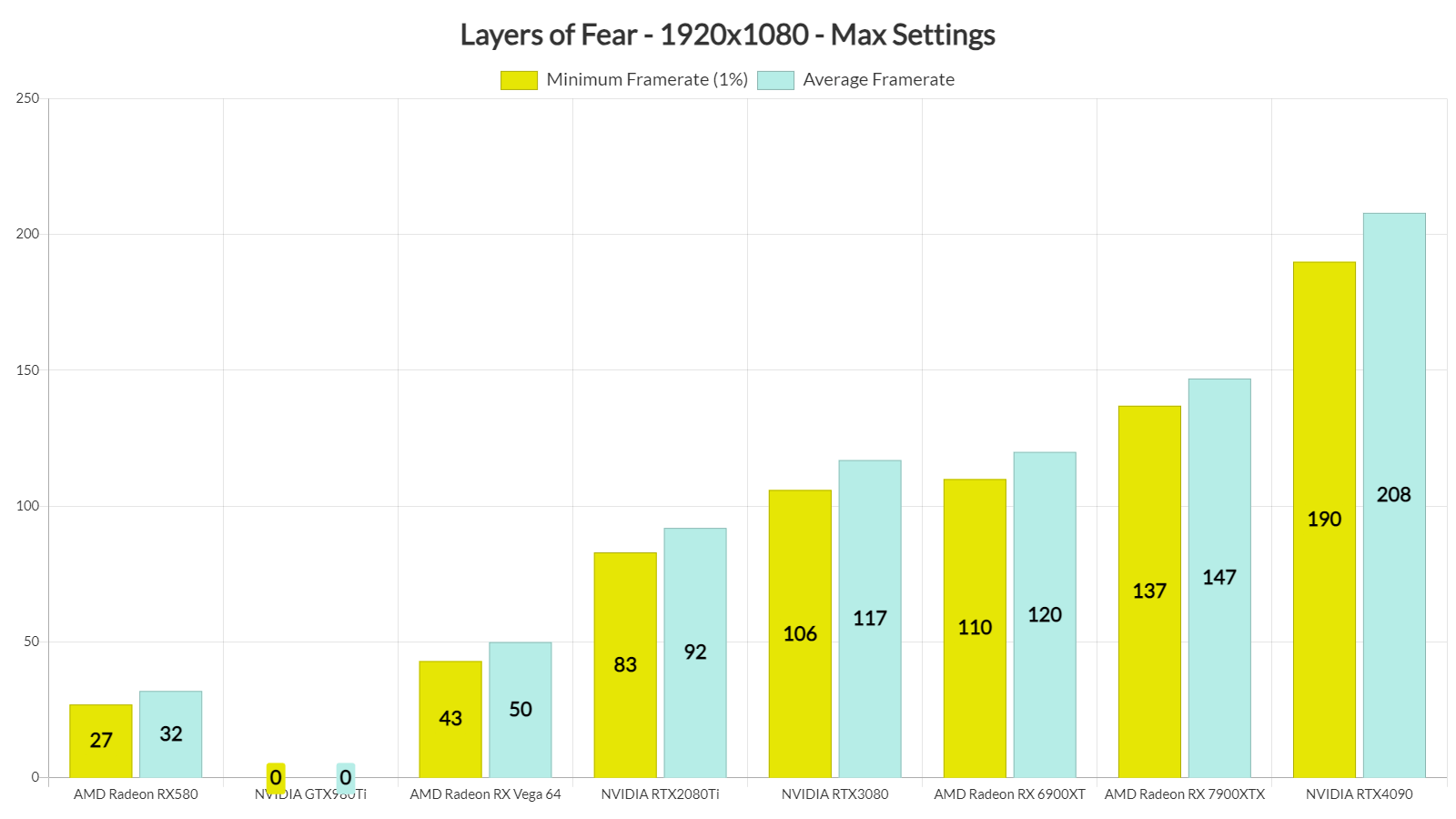

For this PC Performance Analysis, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, AMD’s Radeon RX580, RX Vega 64, RX 6900XT, RX 7900XTX, NVIDIA’s GTX980Ti, RTX 2080Ti, RTX 3080 and RTX 4090. We also used Windows 10 64-bit, the GeForce 536.23 and the Radeon Software Adrenalin 2020 Edition 23.5.2 drivers. Moreover, we’ve disabled the second CCD on our 7950X3D.

Layers of Fear features the exact same graphics settings that its PC demo currently has. PC gamers can adjust the quality of View Distance, Anti-Aliasing, Textures, Shadows, Global Illumination, Reflections, Effects, Post-Process, Foliage and Shading. There are also options for Bloom, Lens Flares, and Motion Blur. Not only that, but the game supports NVIDIA DLSS 2, Intel XeSS and AMD FSR 2.0.

Layers of Fear does not feature any built-in benchmark tool. Thus, for our GPU benchmarks, we used the chains room area from the Musician’s Story Campaign Mode. That area appeared to be one of the most demanding ones. For our benchmarks, we also enabled Ray Tracing (for those GPUs that supported it). The game uses RT only for some of its reflections, and its performance penalty is only 1-3fps in most of the game’s scenes.
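For readers who want to run a similar manual test in a game without a built-in benchmark, here is a minimal sketch of how a frame-time capture could be reduced to average and minimum framerates. It assumes you already have a list of per-frame times in milliseconds from a capture tool of your choice. The function name and the sample values are hypothetical, and this is not necessarily how the numbers in this article were produced.

```python
def summarize_frame_times(frame_times_ms):
    """Return (average_fps, minimum_fps) for per-frame times given in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average fps is total frames divided by total time,
    # not the mean of each frame's instantaneous fps.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # The slowest single frame gives the minimum instantaneous fps.
    minimum_fps = 1000.0 / max(frame_times_ms)
    return average_fps, minimum_fps


# Hypothetical sample values, NOT measurements from the game.
sample = [5.0, 5.0, 10.0, 5.0]
avg, low = summarize_frame_times(sample)
print(f"average: {avg:.1f} fps, minimum: {low:.1f} fps")
```

A single slow frame (10ms here) barely moves the average but halves the minimum, which is why stutters show up in minimum framerates long before they affect the average.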

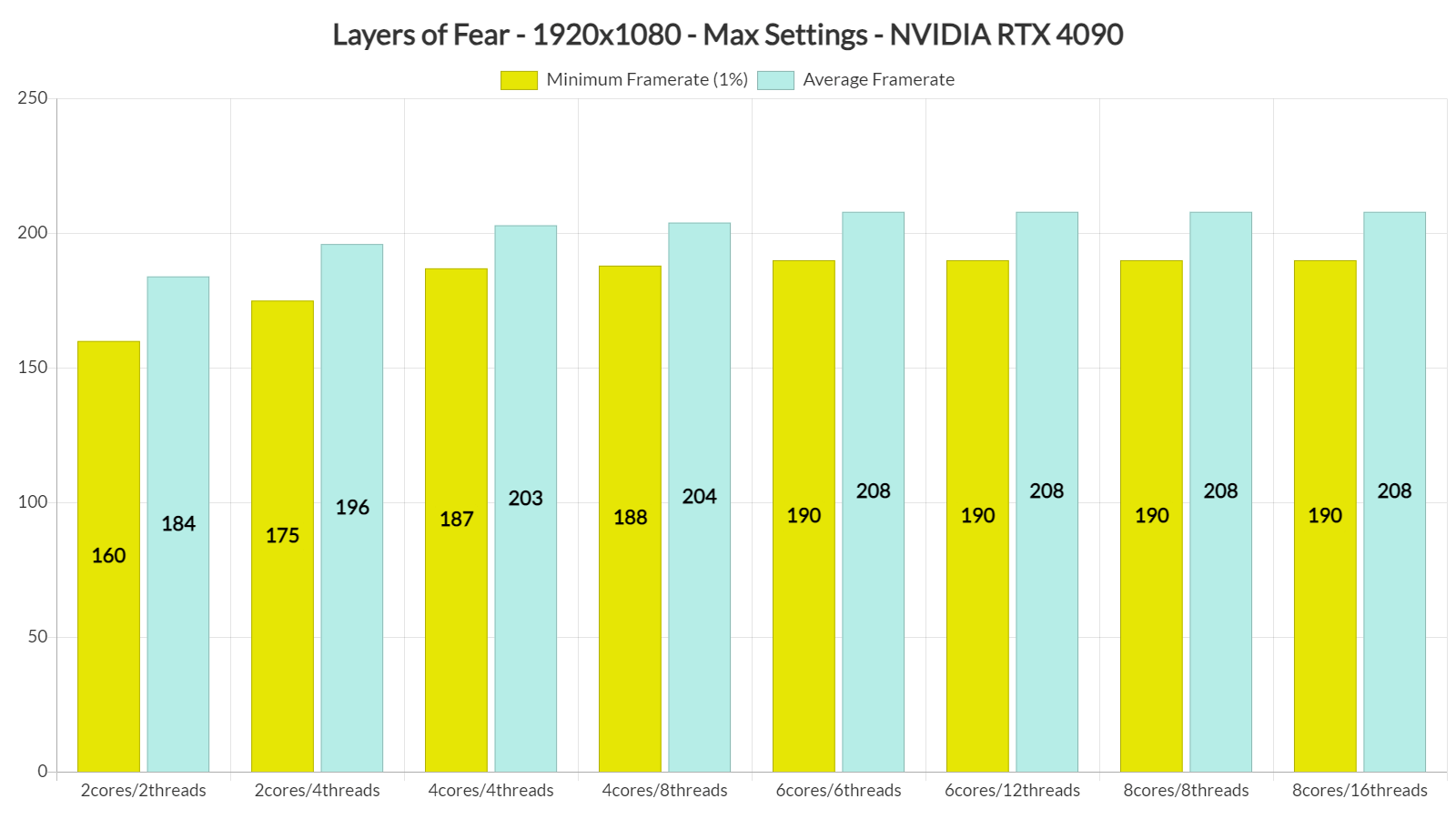

Layers of Fear does not require a high-end CPU. At 1080p/Max Settings/RT On, our AMD Ryzen 9 7950X3D was pushing over 190fps. With only two CPU cores, we were able to get over 160fps (though the game suffered from stuttering issues). When we enabled SMT (Hyper-Threading for Intel CPUs) on this simulated dual-core system, we managed to increase our minimum framerates to 175fps.
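To put those CPU figures in perspective, it can help to convert them to per-frame times. The sketch below is plain arithmetic on the framerates quoted above. The labels and the helper name are our own, and since the text reports "over" 190fps and 160fps, the resulting frame times should be read as rough upper bounds rather than exact measurements.

```python
def frame_time_ms(fps):
    """Convert a framerate (frames per second) to a per-frame time in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Framerates quoted in the text above, treated as round figures.
results = {
    "full CPU (one CCD)": 190,
    "two cores": 160,
    "two cores + SMT": 175,
}

for label, fps in results.items():
    print(f"{label}: {fps} fps = {frame_time_ms(fps):.2f} ms per frame")
```

Even the simulated dual-core configuration stays close to 6ms per frame, far below the 16.7ms budget of a 60fps target.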

Speaking of stuttering issues, although the game does not have any shader compilation stutters, it does have some traversal stutters. These traversal stutters do not happen frequently, so most of you may not even notice them.

Most of our GPUs were able to provide a smooth gaming experience at 1080p/Max Settings. However, for some unknown reason, the game was constantly crashing on the GTX980Ti.

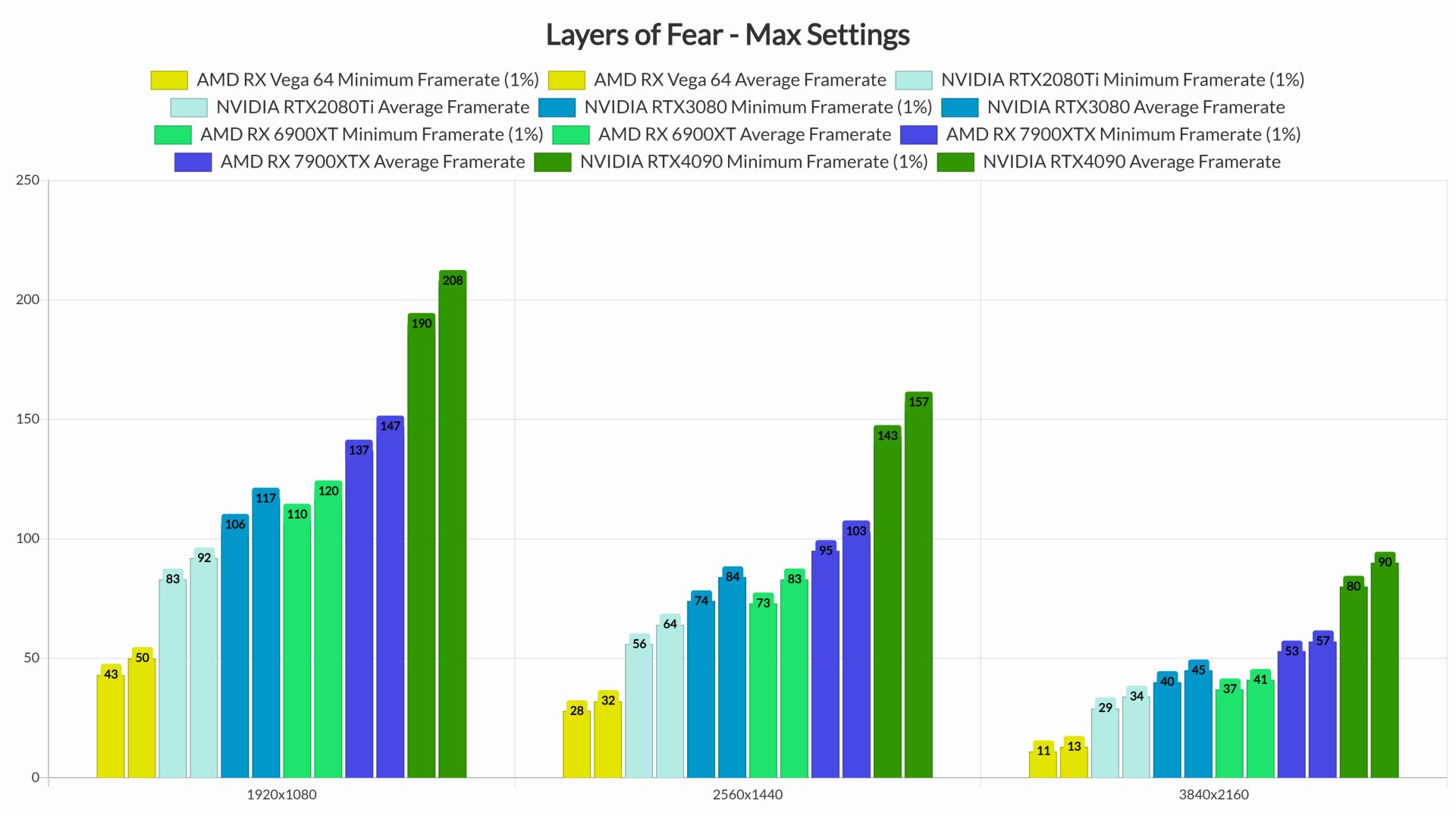

At 1440p/Max Settings/RT, you’ll need a GPU equivalent to the RTX3080 for gaming with over 60fps. Owners of an RTX2080Ti can also get a smooth experience with a G-Sync monitor. As for Native 4K/Max Settings/Ray Tracing, the only GPU that was able to get over 60fps was the RTX 4090.

Graphics-wise, Layers of Fear looks great. The game takes advantage of Lumen for lighting, but it does not use Nanite. As such, you’ll notice minor pop-ins while approaching objects. Bloober Team has also used high-resolution textures, and everything seems polished. The only downside is the elevated black levels, an issue that was also present in the PC demo. Other than that, we really don’t have any issues with the game’s graphics. And while Layers of Fear does not showcase what Unreal Engine 5 is truly capable of, it’s at least a great-looking game.

All in all, Layers of Fear runs and looks similar to its PC demo. In other words, the PC demo is representative of the final version, and we highly recommend downloading it. The game does not require a high-end CPU, and can run on a wide range of GPUs, especially if you use DLSS 2 or FSR 2.0. Moreover, it’s a great looker, so it justifies its GPU requirements. As said, the only downsides are the elevated black levels and its traversal stutters. So yeah, great news for those who were looking forward to this remake!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”