Deliver Us Mars is a new atmospheric sci-fi adventure game that came out last week. The game is powered by Unreal Engine 4, so it’s time to benchmark it and see how it performs on the PC platform.

For this PC Performance Analysis, we used an Intel i9 9900K, 16GB of DDR4 at 3800MHz, AMD’s Radeon RX580, RX Vega 64, RX 6900XT, RX 7900XTX, NVIDIA’s GTX980Ti, RTX 2080Ti, RTX 3080 and RTX 4090. We also used Windows 10 64-bit, the GeForce 528.24 and the Radeon Software Adrenalin 2020 Edition 22.11.2 drivers (for the RX 7900XTX we used the special 23.1.2 driver).

KeokeN Interactive has added a respectable number of graphics settings. PC gamers can adjust the quality of Shadows, View Distance, Post-Processing, and Textures. There are also options for Motion Blur and Framerate. Furthermore, the game supports ray-traced reflections and shadows, as well as DLSS 2/3.

Deliver Us Mars does not feature a built-in benchmark tool. As such, we’ve benchmarked the game’s intro sequence. And since the game is GPU-bound, we didn’t test any other CPU configurations.
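For readers who want to run a similar manual test on a fixed sequence, here is a minimal sketch of how average and minimum framerates could be derived from a frame-time capture. It is an illustration only, not the method used for this article: the function name `summarize_frame_times` and the sample data are hypothetical, and it assumes you already have per-frame times in milliseconds from a capture tool of your choice.

```python
def summarize_frame_times(frame_times_ms):
    """Return (average_fps, minimum_fps) for per-frame times given in milliseconds.

    Hypothetical helper for illustration; not tied to any specific capture tool.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average fps = number of frames divided by total elapsed time in seconds.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # Minimum fps here is the instantaneous rate of the single slowest frame.
    minimum_fps = 1000.0 / max(frame_times_ms)
    return average_fps, minimum_fps


# Made-up sample: three 10ms frames and one 20ms frame.
sample = [10.0, 10.0, 20.0, 10.0]
avg, low = summarize_frame_times(sample)
print(f"average: {avg:.1f}fps, minimum: {low:.1f}fps")
```

Note that averaging the elapsed time (rather than averaging per-frame fps values) keeps a few very fast frames from inflating the result.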

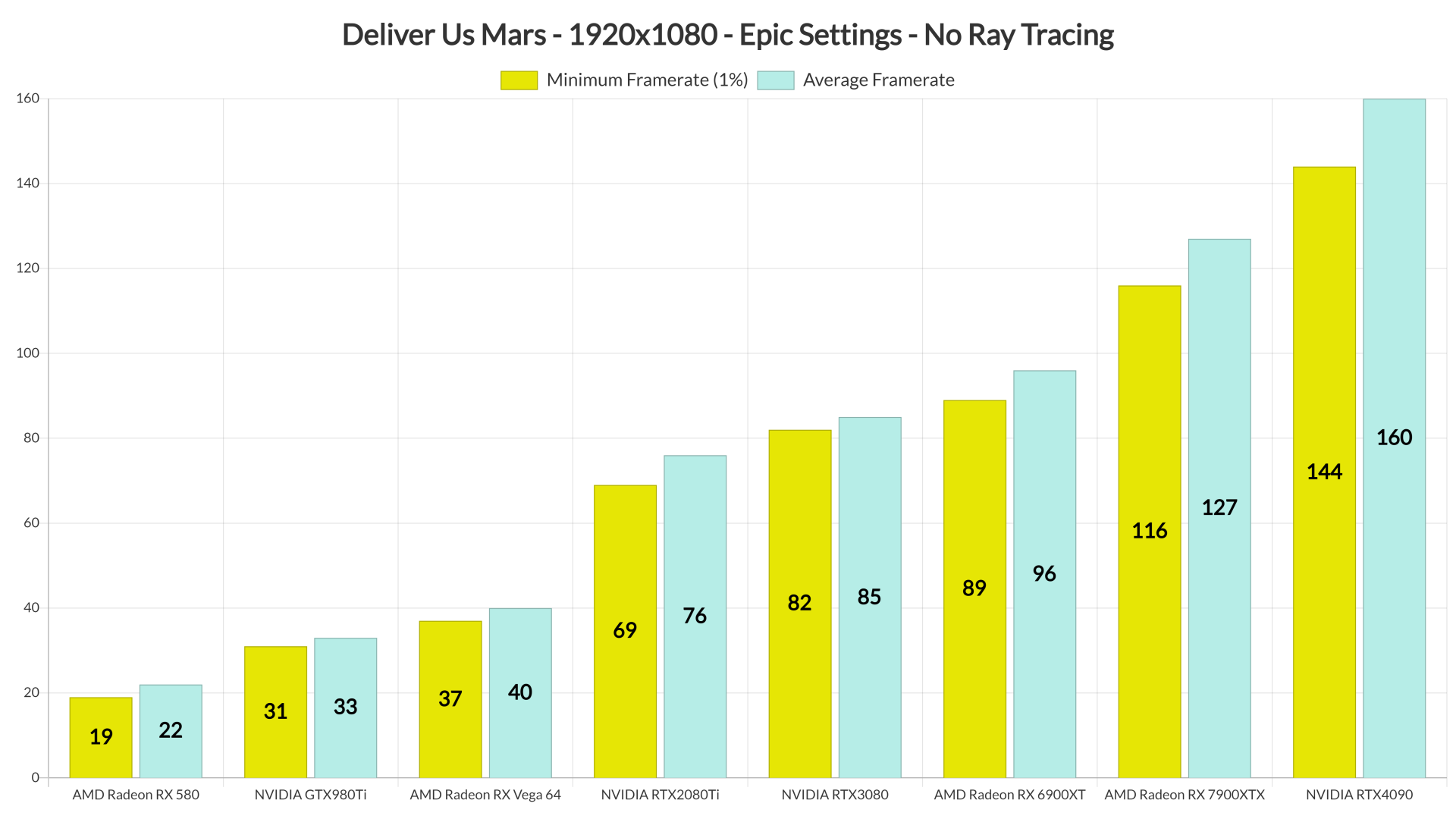

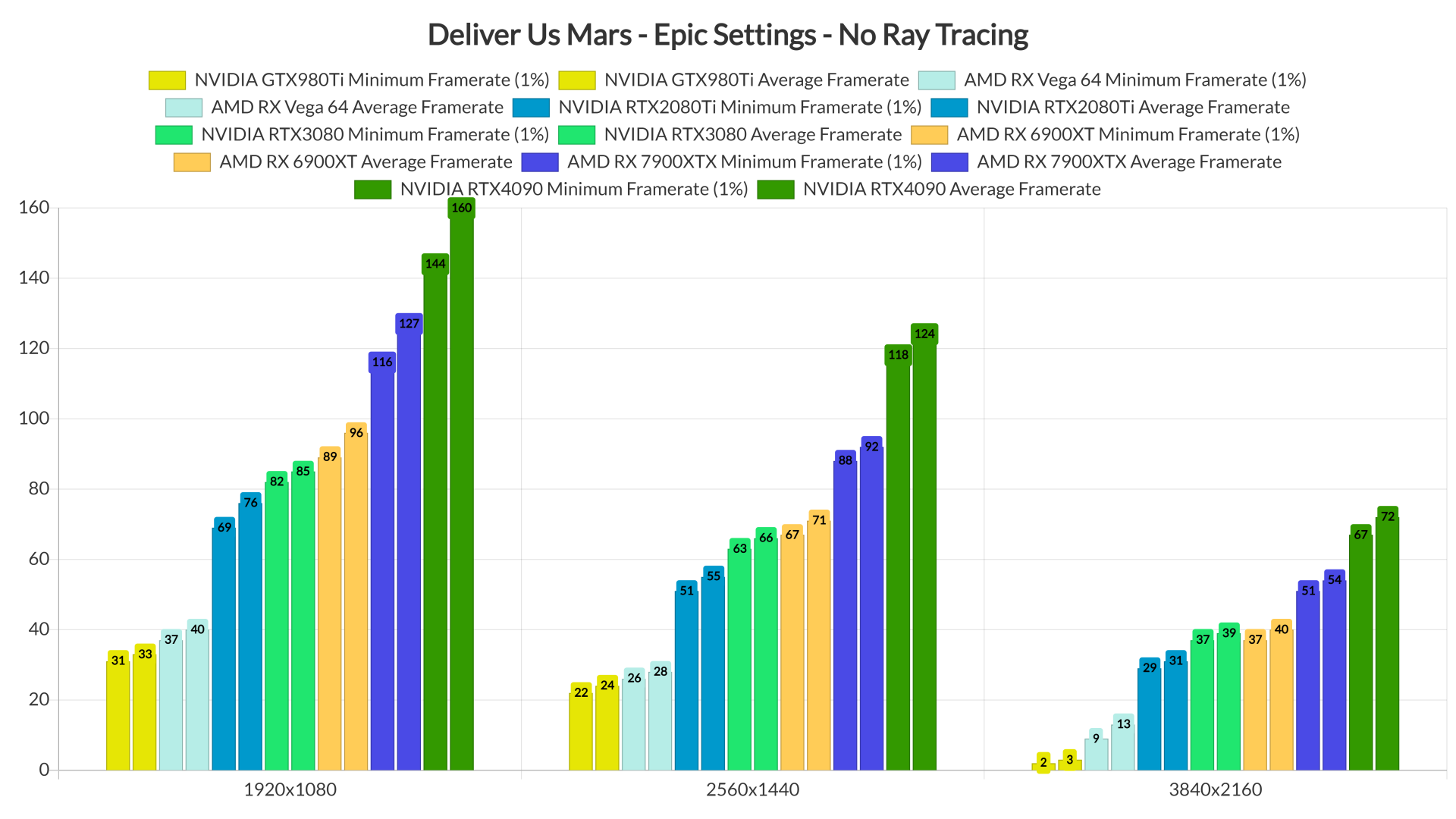

Let’s start with the game’s rasterized version. At 1080p/Epic Settings/No RT, the game requires at least an NVIDIA RTX2080Ti for a smooth experience. Our AMD Radeon Vega 64 and NVIDIA GTX980Ti were only able to offer a 30fps experience. As for the AMD RX580, its framerates were too low for the game to be playable.

At 1440p/Epic Settings/No RT, the RTX2080Ti was unable to run the game at 60fps. On the other hand, our RTX3080, RTX4090, RX 6900XT and RX 7900XTX were able to push over 60fps. As for native 4K, the only GPU that could run the game smoothly was the RTX4090.

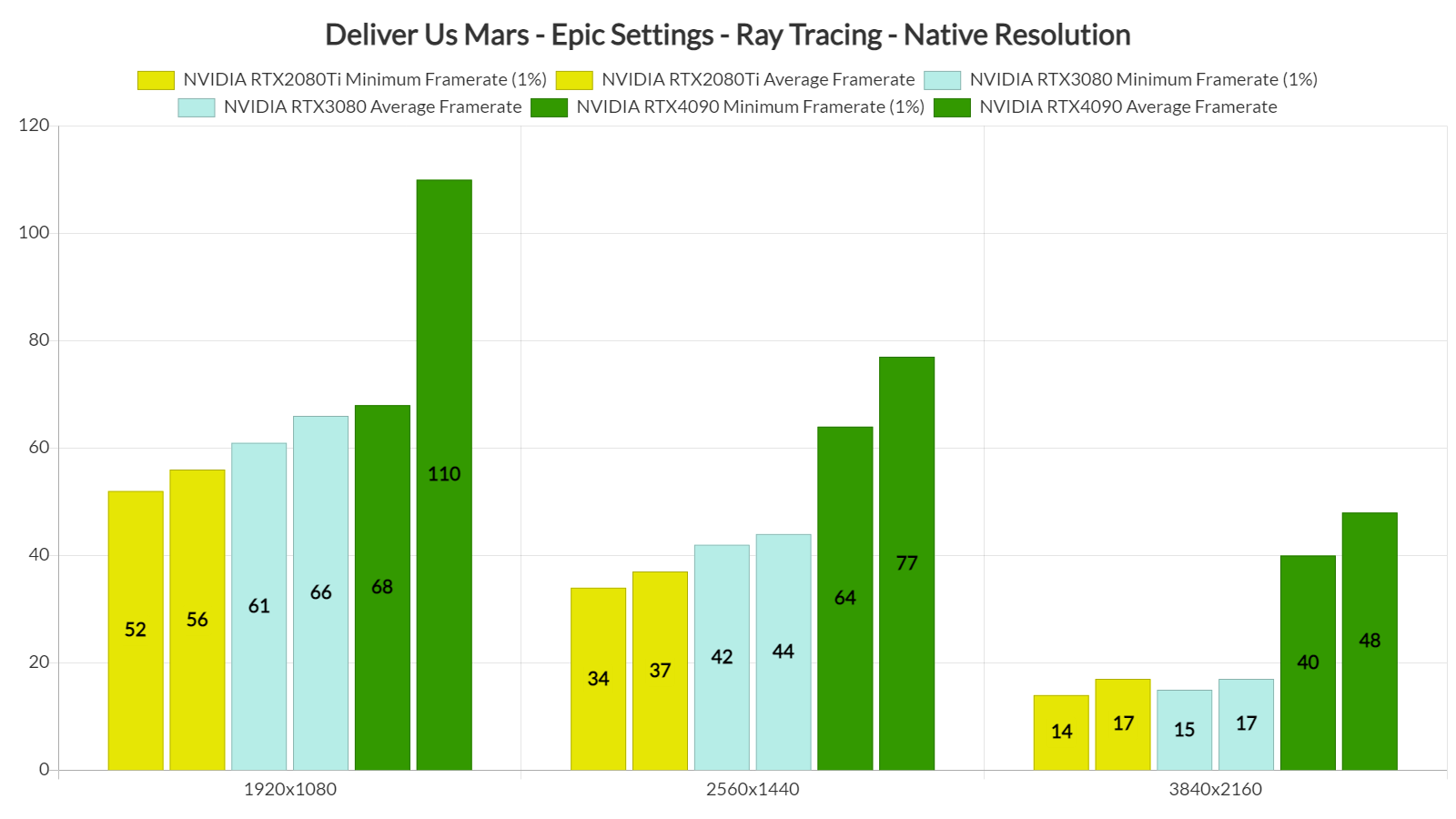

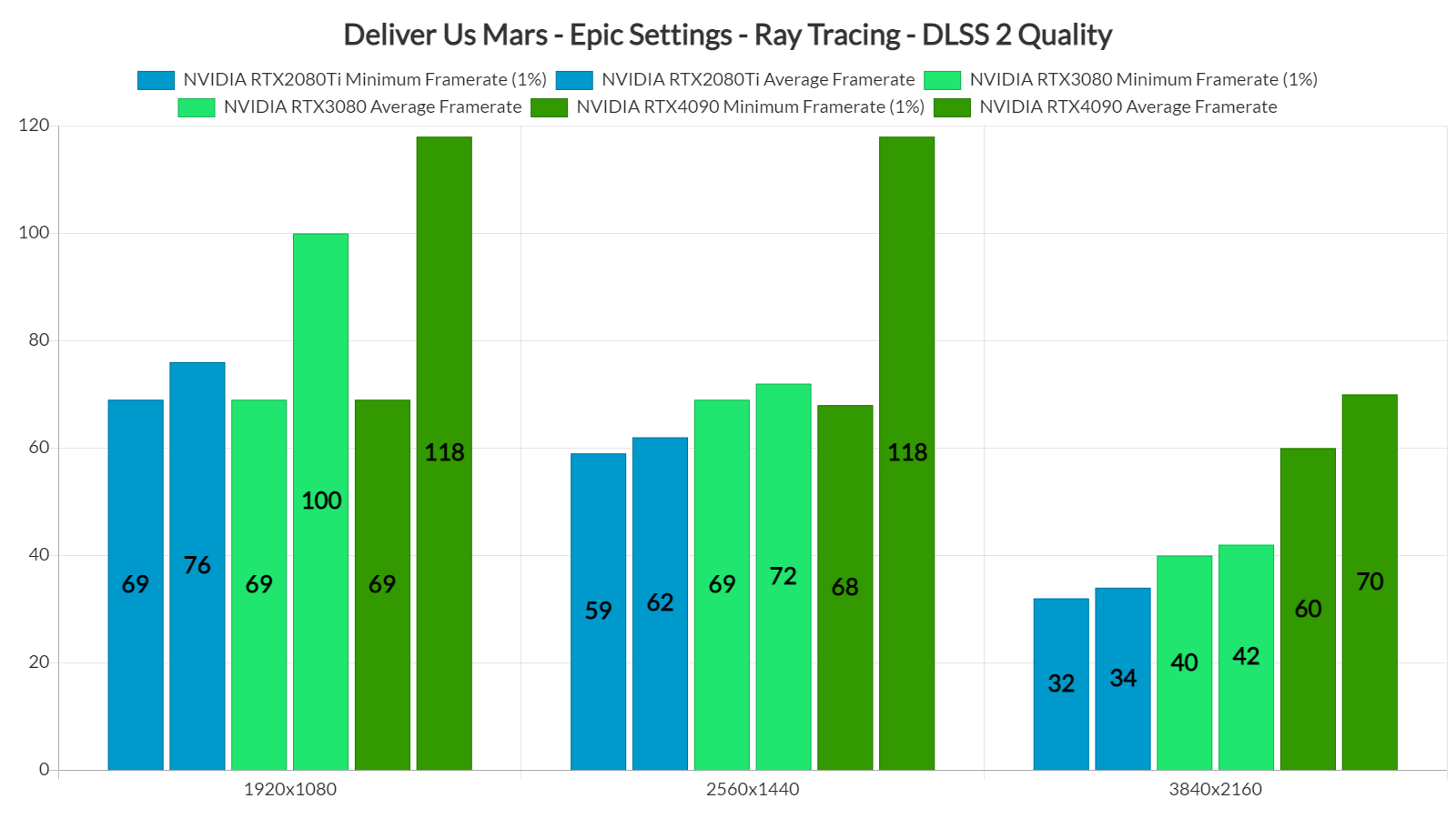

As noted, the game supports Ray Tracing for Reflections and Shadows. Unfortunately, these effects come with a HUGE performance hit. For these Ray Tracing tests, we only used NVIDIA GPUs (as the game does not support FSR 2.0).

At native 1080p/Epic Settings/Ray Tracing, the RTX2080Ti could not push over 56fps. At native 1440p, the only GPU that could run the game with over 60fps was the RTX4090. And as for native 4K, NVIDIA’s most powerful GPU was only able to push an average of 48fps.

To be honest, these RT effects are not that great. Below you can find some comparison screenshots. The left screenshots are with RT On and the right screenshots are with RT Off. And yes, while there are some differences between them, the game’s RT effects do not justify their huge GPU requirements.

As noted, the game supports both DLSS 2 and DLSS 3. By enabling DLSS 2 Quality, we can significantly improve performance. Thanks to DLSS 2 Quality, the RTX2080Ti can now offer 60fps at 1080p. Similarly, the RTX3080 can push over 60fps at 1440p. And finally, the RTX4090 can borderline run the game at 60fps in 4K.
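To give a rough idea of why DLSS Quality helps this much, the sketch below estimates the internal render resolution for each output resolution we tested. It assumes the commonly documented Quality-mode scale of two thirds per axis; the exact internal resolution and rounding this particular game uses are not confirmed here, and the helper name `internal_resolution` is our own.

```python
from fractions import Fraction

# Assumption: DLSS Quality renders at 2/3 of the output resolution per axis.
DLSS_QUALITY_SCALE = Fraction(2, 3)

OUTPUT_RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def internal_resolution(width, height, scale=DLSS_QUALITY_SCALE):
    """Estimate the pre-upscale render resolution (rounded to whole pixels)."""
    return round(width * scale), round(height * scale)


for name, (width, height) in OUTPUT_RESOLUTIONS.items():
    render_w, render_h = internal_resolution(width, height)
    # Share of output pixels that are actually shaded before upscaling.
    pixel_share = (render_w * render_h) / (width * height)
    print(f"{name}: renders at about {render_w}x{render_h} "
          f"({pixel_share:.0%} of the output pixels)")
```

Under that assumption, the GPU shades a bit under half of the output pixels, which lines up with the big gains we saw with Ray Tracing enabled.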

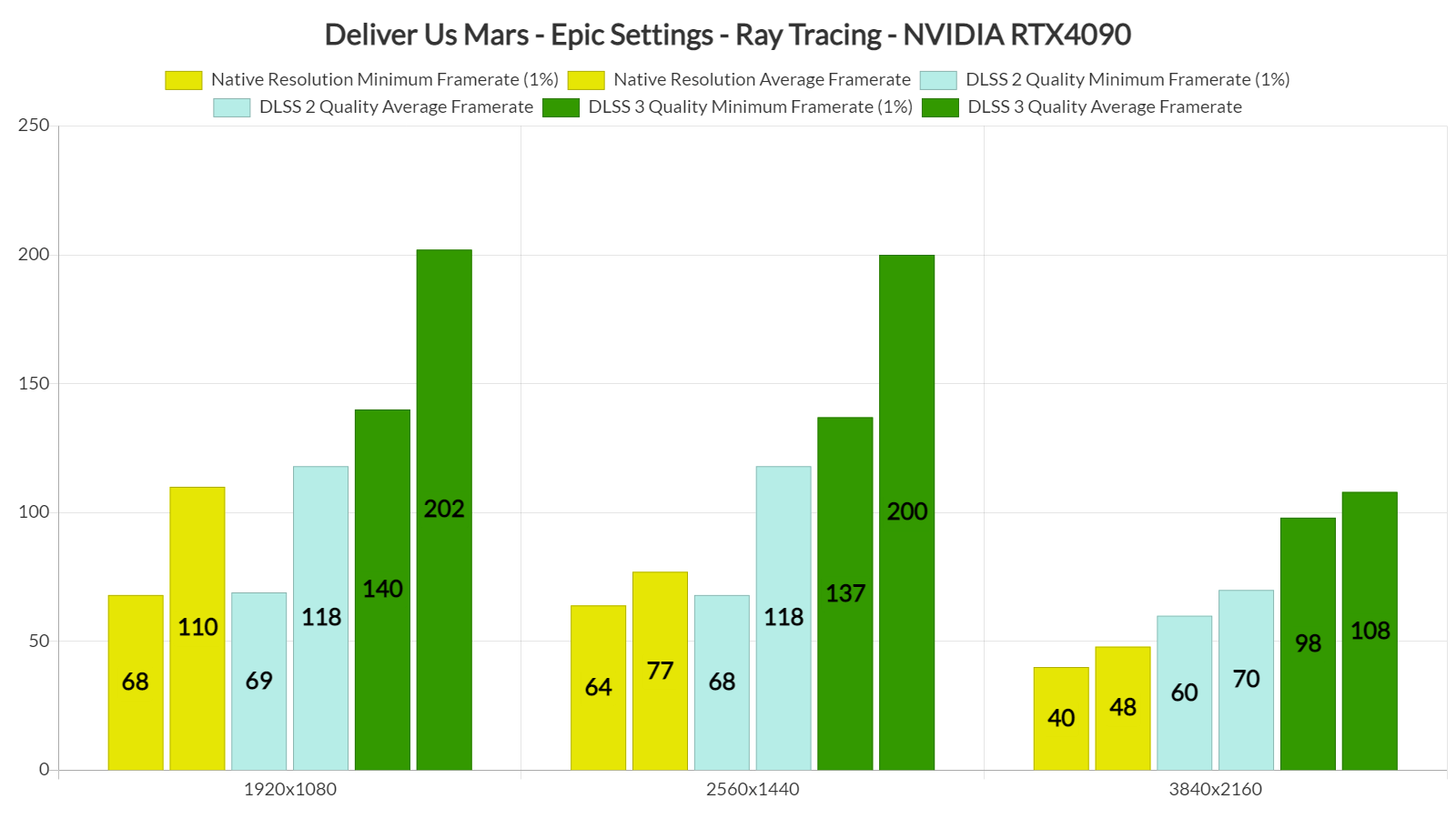

However, as you may have noticed from the CPU scaling image, the game suffers from major CPU optimization issues when you enable its Ray Tracing effects. Deliver Us Mars mainly uses one CPU core/thread, which will limit overall performance in a lot of PC configurations. Seriously, due to these CPU issues, our minimum framerate drops from 140fps to 68fps at 1080p. Ouch. Thus, similarly to The Witcher 3, you’ll need DLSS 3’s Frame Generation in order to overcome these CPU optimization issues.

With DLSS 3 Quality, our RTX4090 was able to push a minimum of 98fps and an average of 108fps at 4K with Epic Settings and Ray Tracing.

So, Deliver Us Mars is a really demanding game. But do its visuals justify its high GPU requirements? In our opinion, no. Don’t get me wrong. I get it, KeokeN Interactive is a small team. However, and for what is being displayed here, the game should be running better.

All in all, Deliver Us Mars will require really powerful GPUs to achieve smooth framerates. The game also has shader compilation stutters. Thankfully, it lets you enable/disable settings without the need to reload the game. In conclusion, overall performance is not up to what we expected. But hey, at least you can enable DLSS 2 and DLSS 3 to overcome some of these optimization issues, so that’s something I guess!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
