Darksiders 3 has just been released and we’ve used the final/retail version for our PC Performance Analysis. We postponed this analysis because we wanted to see whether things would improve with the game’s day-1 update. As such, we’ve benchmarked the final version and it’s time to see how this new action RPG performs on the PC platform.

For this PC Performance Analysis, we used an Intel i7 4930K (overclocked to 4.2GHz) with 16GB RAM, AMD’s Radeon RX580 and RX Vega 64, NVIDIA’s RTX 2080Ti, GTX980Ti and GTX690, Windows 10 64-bit, GeForce driver 417.01 and Radeon Software driver 18.11.2. NVIDIA has not released an SLI profile for this game, so our GTX690 performed similarly to a single GTX680.

Gunfire Games has included very few settings to tweak. PC gamers can adjust the quality of Shadows, Anti-Aliasing, View Distance, Textures, Post Processing, Effects and Foliage. The game supports Windowed, Windowed Fullscreen and Fullscreen modes. However, it currently locks to your native resolution in Fullscreen. In order to run higher resolutions, we had to increase our Desktop resolution to either 1440p or 4K and then select Windowed Fullscreen, so bear that in mind if you are interested in downsampling. The game also lacks a FOV slider and is locked at 62fps (though you can remove the framerate lock via its .INI file).

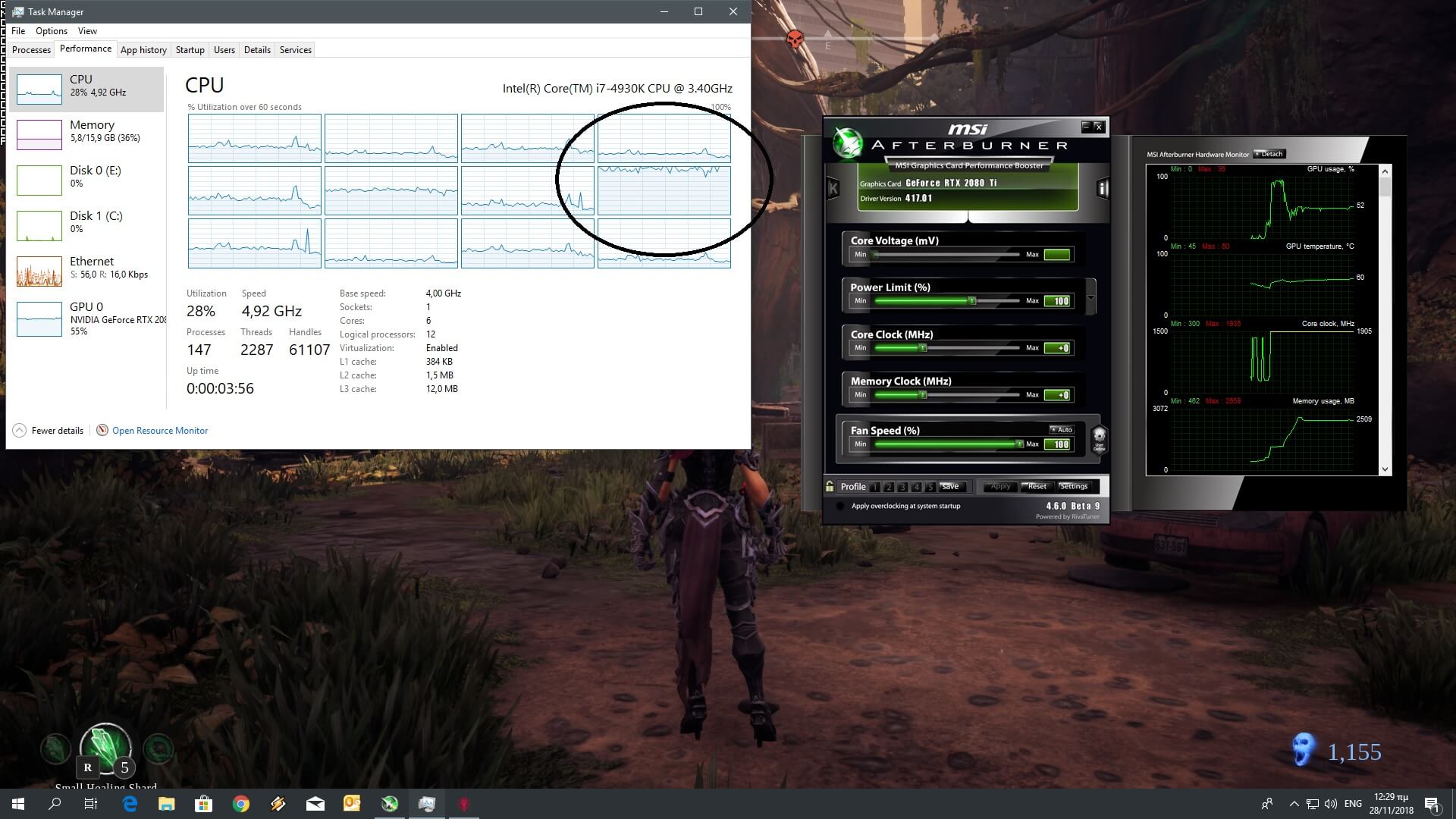

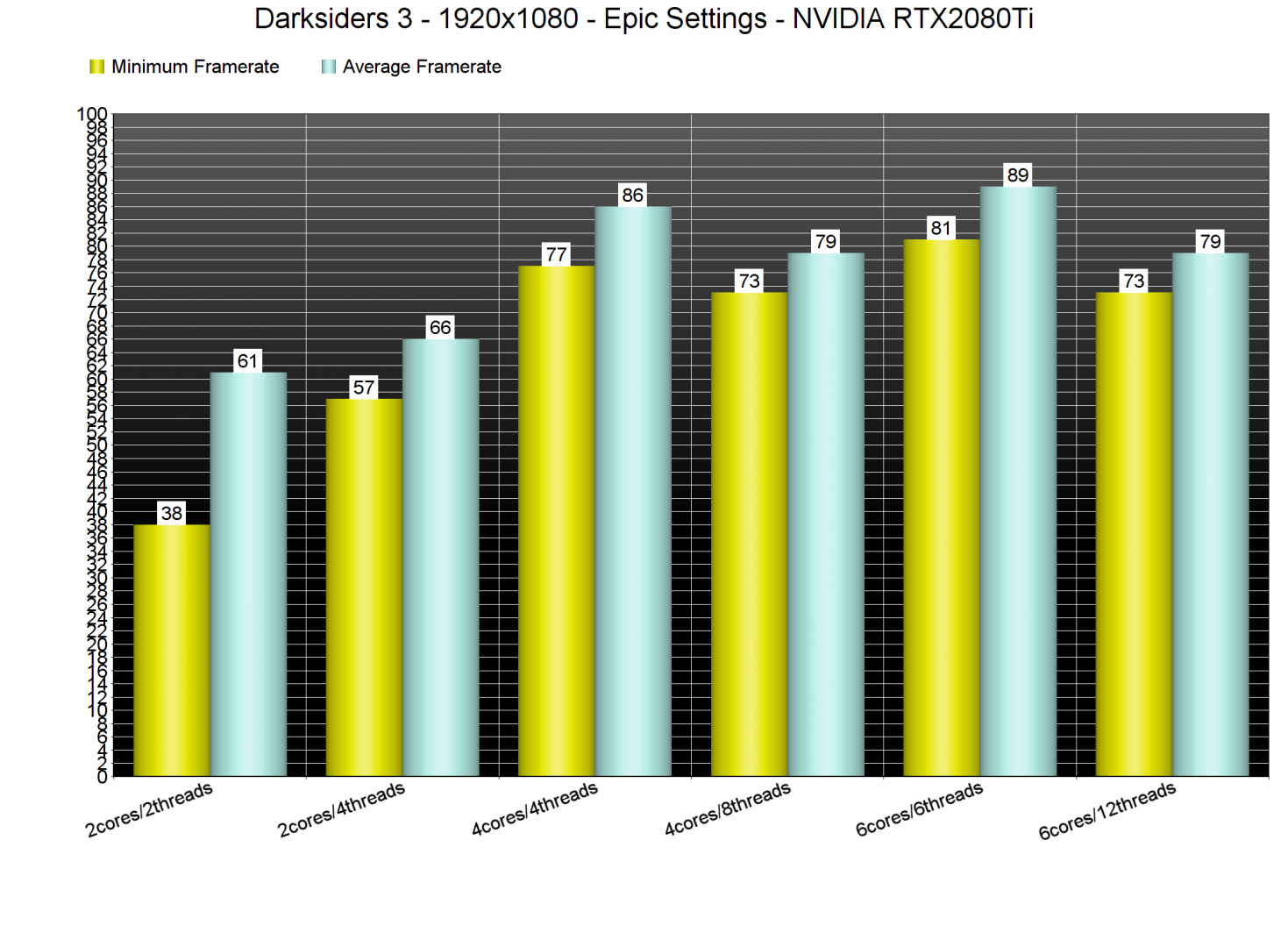

Darksiders 3 is powered by Unreal Engine 4, an engine that is well known for its multi-threaded capabilities. However, and even though we are in 2018, Darksiders 3 is mainly a single-threaded game. The game partially uses two or three cores/threads but relies heavily on a single thread. This basically means that a lot of gamers may be CPU limited due to the game’s inability to take proper advantage of multiple CPU cores/threads. And to be honest, this behaviour is inexcusable for a 2018 title. Due to these CPU issues, our simulated quad-core and six-core CPUs were slower when we enabled Hyper Threading. Thankfully though, and despite these CPU optimization issues, Darksiders 3 can run at 60fps on a variety of CPUs.
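
To illustrate why a game that leans on one thread gains so little from extra cores, here is a minimal sketch based on Amdahl's law. The 30% parallel fraction is purely an illustrative assumption and not something we measured in Darksiders 3, and the function name is our own.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of the work can run in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumption for illustration only: 30% of per-frame CPU work is parallelisable.
PARALLEL_FRACTION = 0.30

for cores in (1, 2, 4, 6, 12):
    speedup = amdahl_speedup(PARALLEL_FRACTION, cores)
    print(f"{cores:>2} cores: at most {speedup:.2f}x faster than one core")
```

Under that assumption, even twelve threads cannot push the speedup past roughly 1.43x, since the serial 70% of the work still runs on a single thread.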

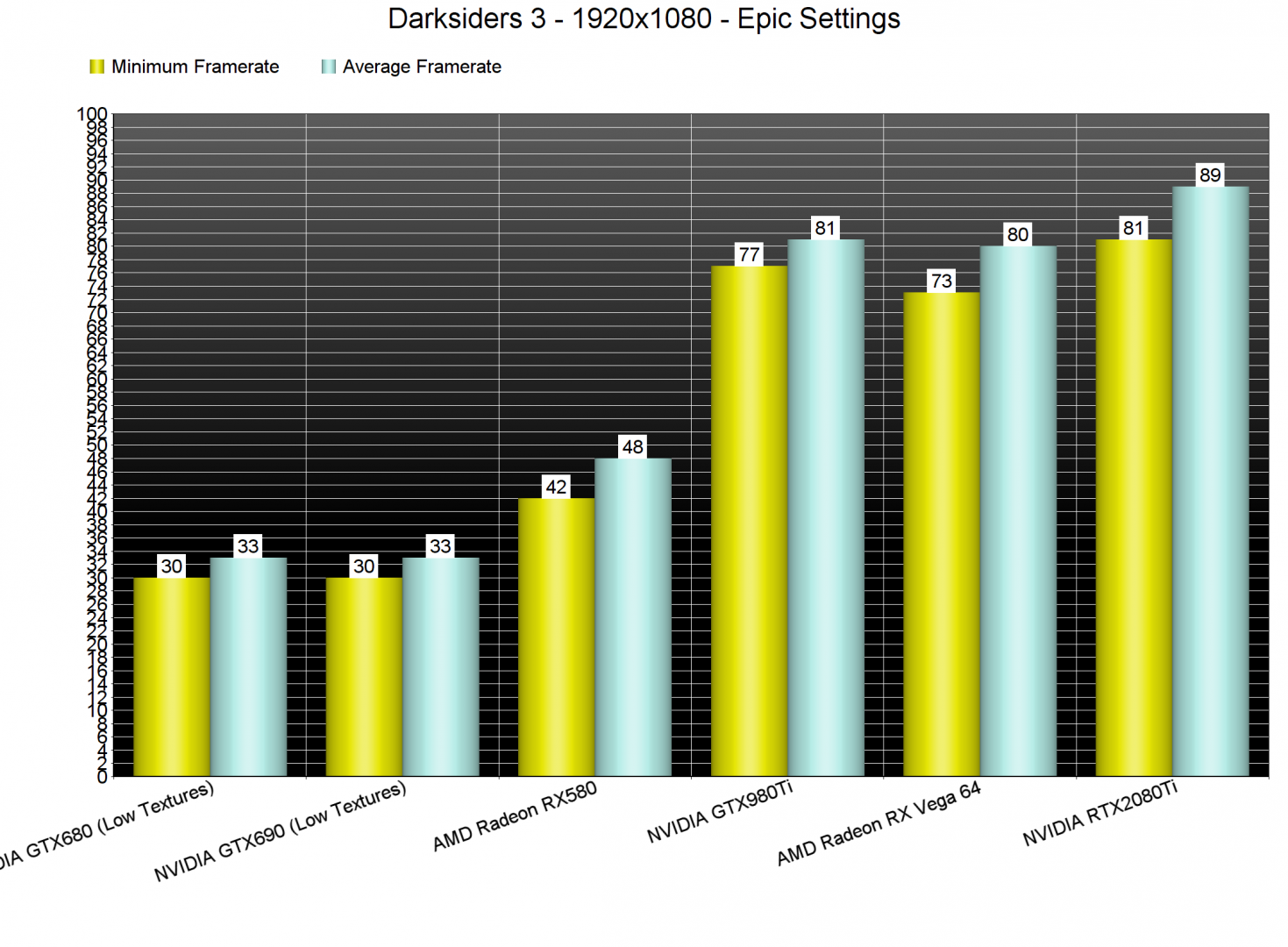

Now while the game is mainly using one CPU core/thread, it can be easily described as a GPU-bound title. With the exception of our NVIDIA GeForce RTX2080Ti, all of our other graphics cards were used to their fullest at 1080p on Epic settings. Yes, even the AMD Radeon RX Vega 64 was being used at 95-98% at 1080p, something that clearly shows how GPU demanding this new game actually is.

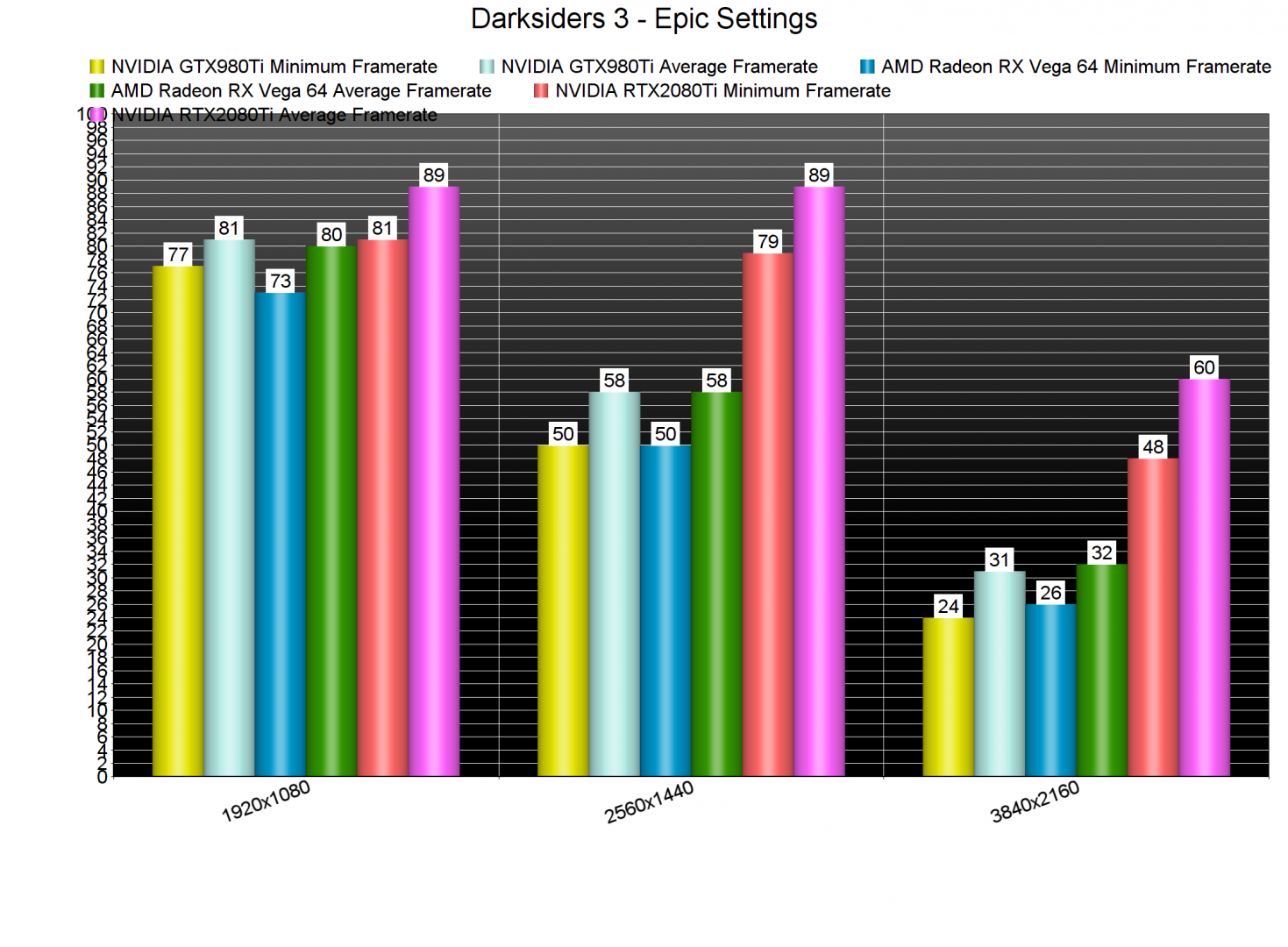

At 1080p, our AMD Radeon RX580 was unable to offer a smooth gaming experience. At 1440p, our NVIDIA GeForce GTX980Ti and AMD Radeon RX Vega 64 were not able to run the game at 60fps, and in 4K, our NVIDIA GeForce RTX2080Ti was unable to offer a constant 60fps experience. Now as always, we used an open area that seemed to really push our graphics cards to their limits. As such, consider our benchmarks as stress tests, meaning that there are other areas that can run better/smoother. Still, we believe it’s best to use the worst case scenarios for our benchmarks.
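
For a sense of how much more work each resolution step asks of the GPU, here is a small sketch that compares raw pixel counts. It assumes the standard 16:9 resolutions (1920x1080, 2560x1440 and 3840x2160), and the helper names are our own. Pixel count is only a rough proxy for GPU load, so treat the ratios as a guide rather than a prediction of framerates.

```python
# Assumed standard 16:9 resolutions for the three tested settings.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Total pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def relative_load(name: str, baseline: str = "1080p") -> float:
    """Pixel count of `name` as a multiple of the baseline resolution."""
    return pixel_count(name) / pixel_count(baseline)


for name in RESOLUTIONS:
    print(f"{name}: {pixel_count(name):,} pixels, "
          f"{relative_load(name):.2f}x the 1080p pixel count")
```

1440p pushes about 1.78 times as many pixels as 1080p, and 4K pushes exactly four times as many, which is in line with cards that are already maxed out at 1080p falling short at the higher resolutions.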

Now we wouldn’t mind these extremely high GPU requirements if the game’s visuals justified them. However, that’s not the case here. Darksiders 3 looks very good but it’s not the next Crysis game. A lot of light sources do not cast shadows, environmental interactivity and destructibility are limited, and the game’s lighting system is not as impressive as what we were expecting from a game using Unreal Engine 4. There is literally nothing to ‘wow’ you here.

All in all, Darksiders 3 is a really demanding title that simply does not justify its GPU requirements. The game is also mainly using a single CPU thread, something that is really inexcusable in 2018. At 1080p, an NVIDIA GeForce GTX980Ti or an AMD Radeon RX Vega 64 is enough for 60fps on Epic settings. At 1440p, PC gamers will need a high-end GPU like an RTX2080 or a GTX1080Ti. And at 4K… well… even the most powerful GPU is unable to offer a constant 60fps experience. There is undoubtedly room for improvement here. Gunfire Games has stated that the game will support NVIDIA’s DLSS tech (right now it does not), so here’s hoping that the team will fix at least some of the issues we’ve mentioned, especially the windowed/fullscreen issues when using resolutions higher than native, via a post-launch patch!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
