Call of Duty is a pretty interesting series when it comes to the PC. Call of Duty: Advanced Warfare performed great on our platform, whereas Call of Duty: Black Ops III was not as impressive as its predecessor. On the other hand, the next COD game, Call of Duty: Infinite Warfare, was one of the most optimized PC games of 2016. So, how does this new Call of Duty title perform on the PC?

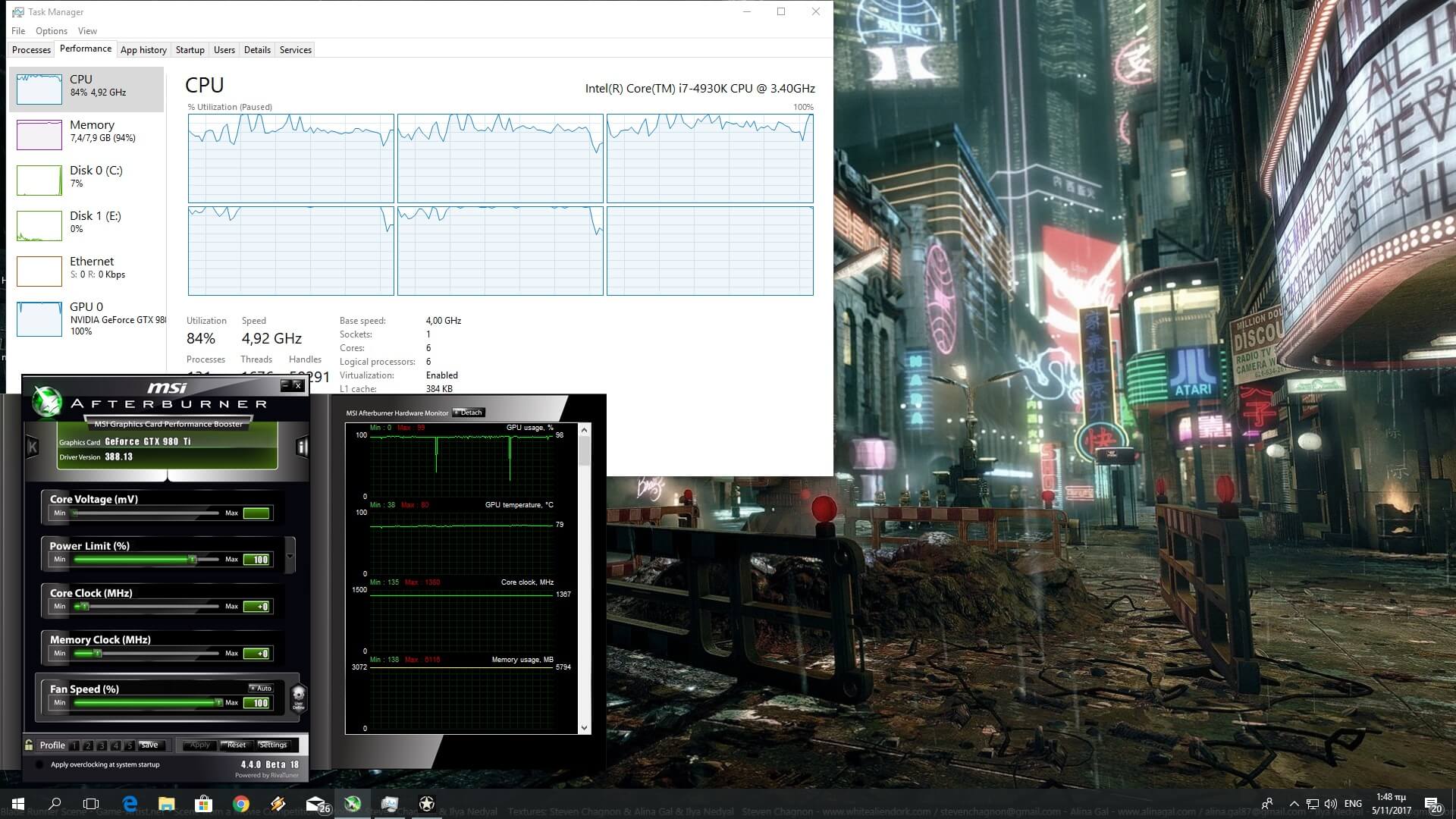

For this PC Performance Analysis, we used an Intel i7 4930K (overclocked at 4.2GHz) with 8GB RAM, AMD’s Radeon RX580, NVIDIA’s GTX980Ti and GTX690, Windows 10 64-bit and the latest version of the GeForce and Catalyst drivers. NVIDIA has not included any SLI profile for it yet. However, SLI owners can enable it by using the ‘0x080020F5’ compatibility bit. This SLI bit offers great scaling and does not introduce any artifacts or buggy shadows.

Sledgehammer Games has implemented a nice amount of PC graphics settings. PC gamers can adjust the quality of Anti-Aliasing, Textures, Normal Maps, Specular Maps, Sky, Anisotropic Filtering, Shadow Maps, Shadow Depth, Screen Space Reflections, Depth of Field, Motion Blur and Ambient Occlusion. There is also an option to enable/disable Subsurface Scattering. Furthermore, there is an FOV slider and a Resolution Scaler.


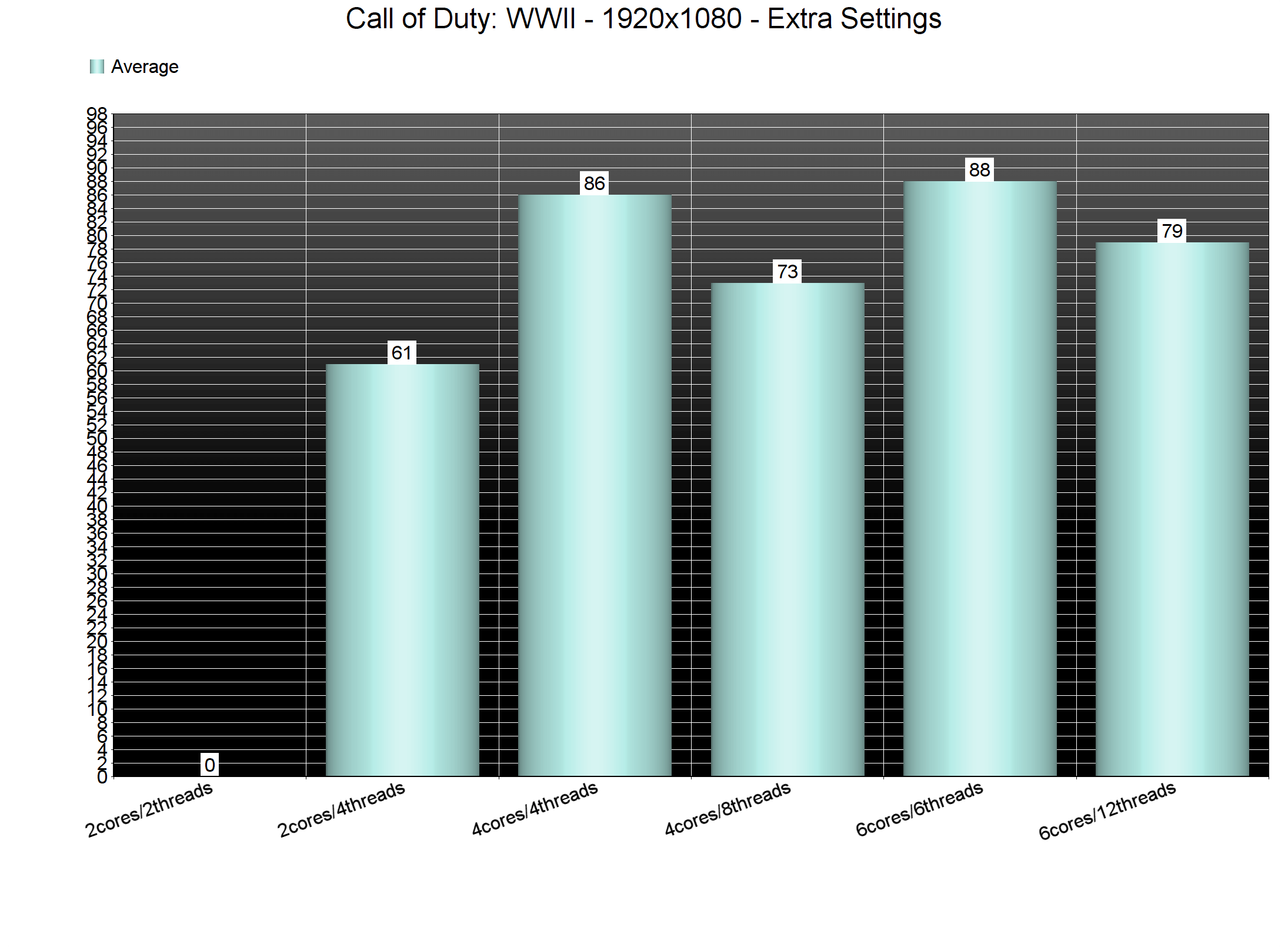

Call of Duty: WWII appears to be suffering from some CPU optimization issues. For our CPU tests, we used the beginning of the third SP campaign level. This particular scene is very CPU-bound, even at 1080p on Extra settings. And while the game is CPU-bound on various occasions, it does not benefit from more than four CPU cores/threads. Truth be told, the game does spread its workload across more than four CPU cores. However, and as we can see below, it runs almost identically on a quad-core and a hexa-core CPU.

In order to find out how the game performs on a variety of CPUs, we simulated a dual-core and a quad-core CPU. Our simulated dual-core system was unable to offer acceptable performance without Hyper Threading due to severe stuttering. With Hyper Threading, our CPU scene ran at 61fps. Our hexa-core and our simulated quad-core systems ran the scene at 88fps and 86fps, respectively. However, when we enabled Hyper Threading, we noticed a performance hit on both of these systems. Our performance dropped to 79fps on our hexa-core, and to 73fps on our simulated quad-core. As such, we strongly suggest disabling Hyper Threading for this particular title.
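To put the Hyper Threading hit into perspective, here is a minimal Python sketch that turns the framerates quoted above into relative changes. The dictionary layout and the `percent_change` helper are our own illustrative names, not something taken from the game or any benchmarking tool.

```python
# Framerates (fps) reported above for the CPU-bound scene.
results = {
    "hexa-core": {"ht_off": 88, "ht_on": 79},
    "simulated quad-core": {"ht_off": 86, "ht_on": 73},
}


def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Performance change caused by enabling Hyper Threading on each system.
ht_hit = {
    name: percent_change(r["ht_off"], r["ht_on"]) for name, r in results.items()
}

# Gain from going from four to six cores with Hyper Threading disabled.
core_gain = percent_change(
    results["simulated quad-core"]["ht_off"], results["hexa-core"]["ht_off"]
)

for name, hit in ht_hit.items():
    print(f"{name}: {hit:+.1f}% with Hyper Threading enabled")
print(f"quad-core -> hexa-core (HT off): {core_gain:+.1f}%")
```

In other words, enabling Hyper Threading costs roughly 10% on the hexa-core and 15% on the simulated quad-core, while two extra physical cores are worth only around 2%.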

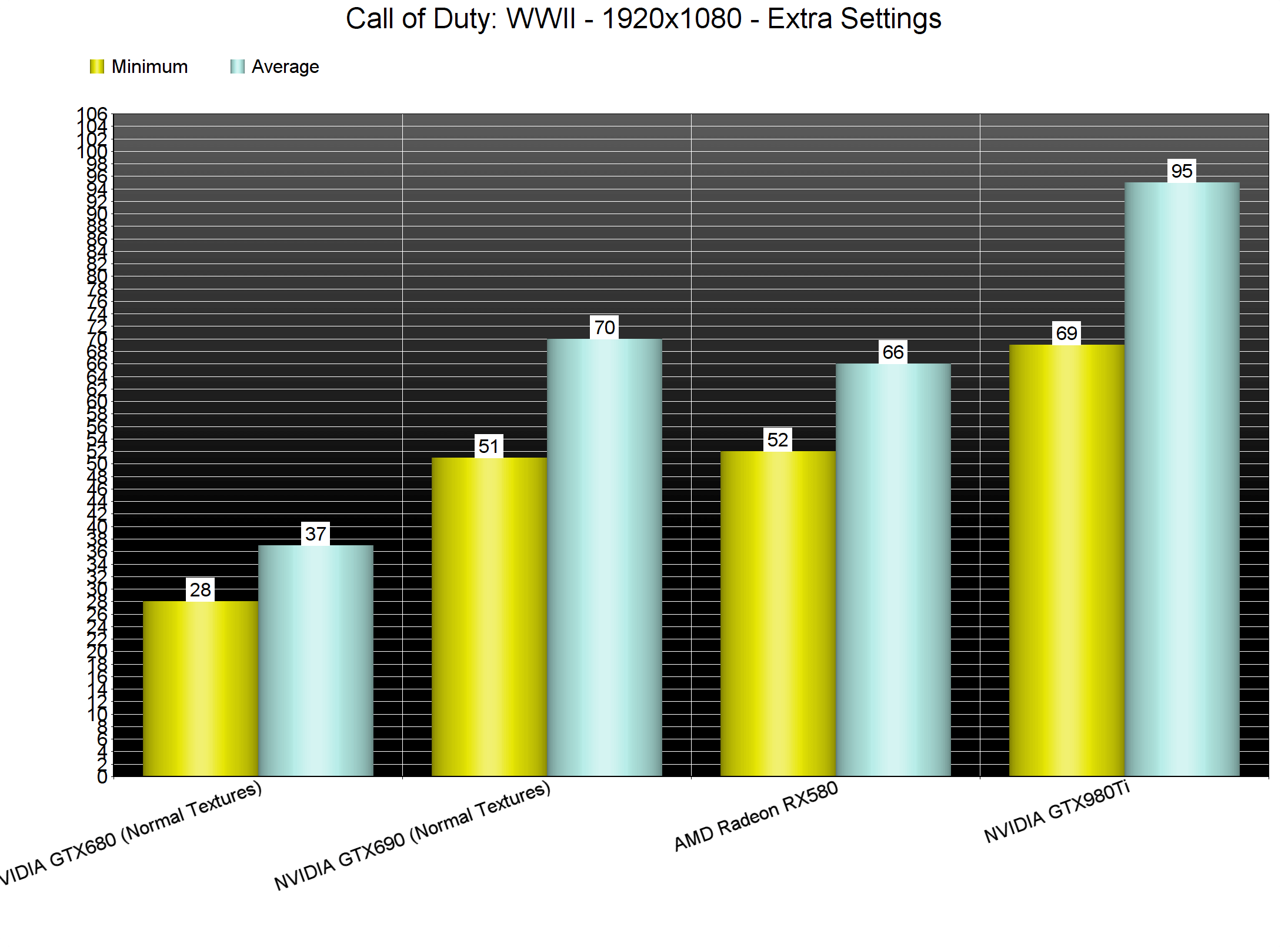

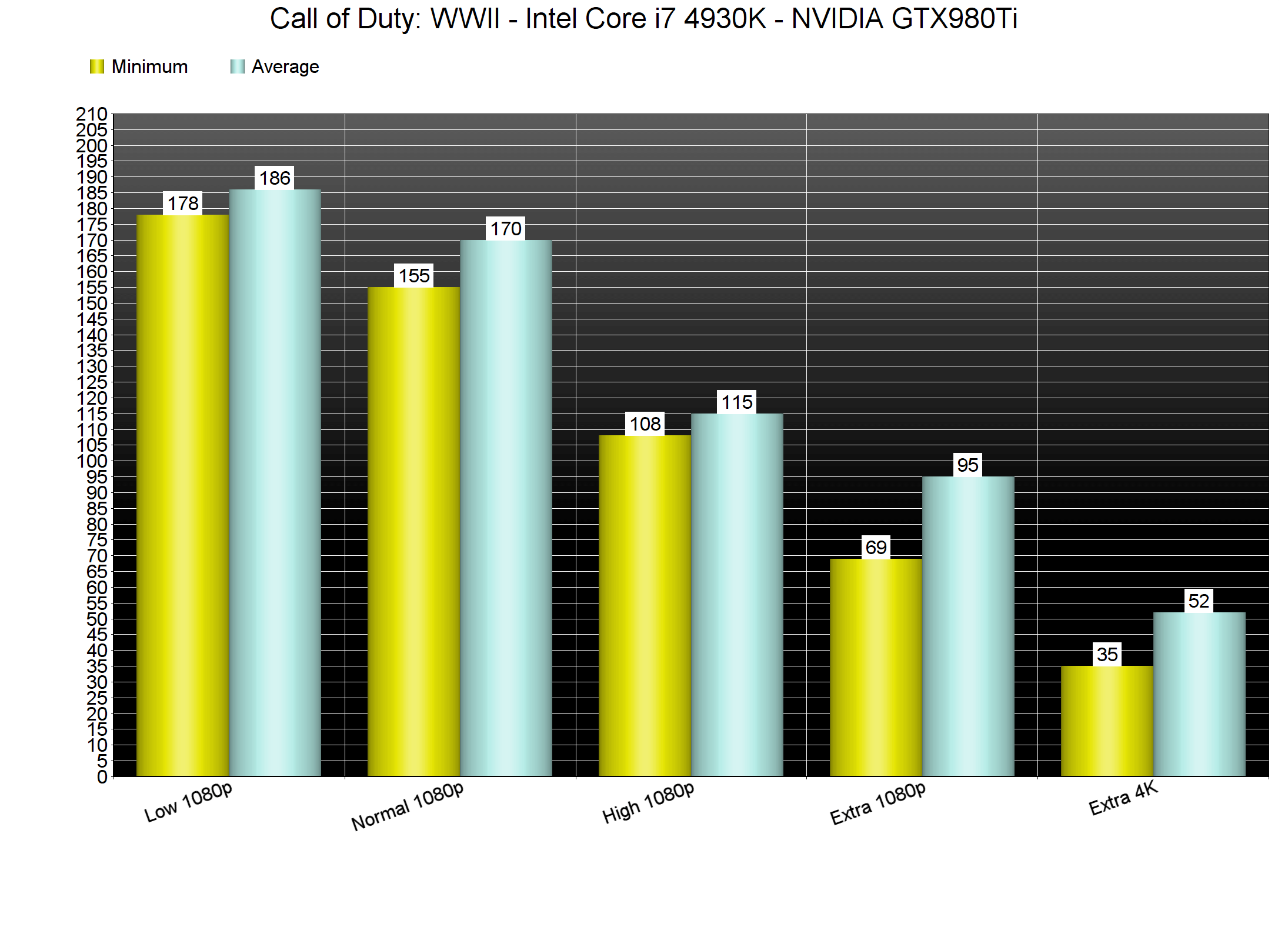

Call of Duty: WWII also requires a high-end GPU for its Extra settings, though it scales incredibly well on older NVIDIA hardware. Our GTX980Ti was able to push an average of 95fps and a minimum of 69fps at 1080p on Extra settings during the first two SP missions. In 4K, the GTX980Ti was able to offer an acceptable performance of 52fps. During cut-scenes, our framerate dropped to 35fps, mainly due to the high quality Depth of Field effect. With various adjustments, we are almost certain that this GPU can offer a 60fps experience in 4K. Moreover, our GTX690 was able to push a minimum of 51fps and an average of 70fps (though we set our Textures to Normal so we could avoid any VRAM limitation).
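As a rough illustration of how far the GTX980Ti is from 60fps in 4K, the sketch below converts framerates into frame times. It assumes that the 52fps figure is an average (we only quote a single number for 4K above), and the `frame_time_ms` helper is a name made up for this example.

```python
def frame_time_ms(fps):
    """Time spent on a single frame, in milliseconds."""
    return 1000.0 / fps


fps_4k = 52      # GTX980Ti in 4K on Extra settings (assumed to be an average)
target_fps = 60  # the framerate we would like to hit

current_ms = frame_time_ms(fps_4k)
target_ms = frame_time_ms(target_fps)

# Share of the current frame time that would have to be saved.
required_saving = (current_ms - target_ms) / current_ms * 100.0

print(f"current: {current_ms:.2f} ms per frame")
print(f"target:  {target_ms:.2f} ms per frame")
print(f"required frame-time reduction: {required_saving:.1f}%")
```

Under that assumption, the GPU would need to shave about 13% off its frame time, which is the kind of margin that lowering a few settings can plausibly recover.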

On the other hand, the game appears to have optimization issues on AMD’s hardware. Our AMD Radeon RX580 was able to run it with a minimum of 52fps and an average of 60fps. AMD’s GPU was significantly slower than the GTX690, though we could use higher quality textures. Not only that, but our CPU scene saw a 30fps hit on AMD’s hardware. Below you can find a comparison between the GTX980Ti and the RX580 on that particular scene. As we can see, both of our GPUs are used at only 70%. Normally, this should not be happening. Since our Intel Core i7 4930K can feed a GPU so that it can run this scene with an average of 88fps, the RX580 usage should be higher than the GTX980Ti usage. Instead, and perhaps due to AMD’s bad DX11 drivers, the RX580 was underused.

Call of Duty: WWII does not feature any presets. As such, we used some custom presets for Low, Normal, High and Extra settings. On Low settings, our GTX980Ti was able to push a minimum of 178fps and an average of 186fps in GPU-bound scenarios. Our GTX680 was also able to run the game at a constant 60fps on Normal settings at 1080p. Generally speaking, those with older hardware will be able to run the game.

Graphics-wise, Call of Duty: WWII looks great. Even though it has been slightly downgraded, it is still a looker. The main character models in particular are among the best we’ve seen. The Call of Duty series has always had stunning character models, and WWII is no exception. The environments also look great, and there are some really cool modern-day effects. However, you will notice some really low-resolution textures here and there. Not only that, but the game suffers from flickering issues on both AMD’s and NVIDIA’s hardware. Furthermore, the game uses a really aggressive LOD system, even on Extra settings, resulting in atrocious pop-in issues as you explore the environments. The explosions also look underwhelming and average for a 2017 title. We’ve also experienced some hilarious graphical glitches in the first SP mission. So yeah, while the game is overall beautiful, it suffers from these graphical shortcomings.

In conclusion, Call of Duty: WWII performs great on NVIDIA’s hardware. Moreover, and contrary to other titles, this one scales well on older graphics cards. However, AMD needs to step up its game with its DX11 drivers as there are numerous optimization issues with it.

Apart from the graphical shortcomings (that shouldn’t be present in the PC version as there was room for visual improvement), we were really disappointed with Call of Duty: WWII’s inability to take advantage of more than four CPU cores. As such, the game becomes CPU-limited in some scenes. We’ve also experienced some stuttering issues (that were reduced when we enabled VSync and set our refresh rate to 60Hz). Still, and even with these CPU optimization issues, the game can easily run at a constant 60fps on NVIDIA’s hardware. Call of Duty: WWII may not be THE most optimized PC game of 2017, but it looks and runs better than most of its rivals.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
