Back in 2014, we were really amazed by Assassin’s Creed: Unity. As we said back then, Unity was way ahead of its time, and three years later it still looks amazing. The next title in the series, Syndicate, was not as impressive, and here we are today with the latest Assassin’s Creed game, Assassin’s Creed Origins. Unfortunately, the PC performance of Assassin’s Creed Origins is a mixed bag.

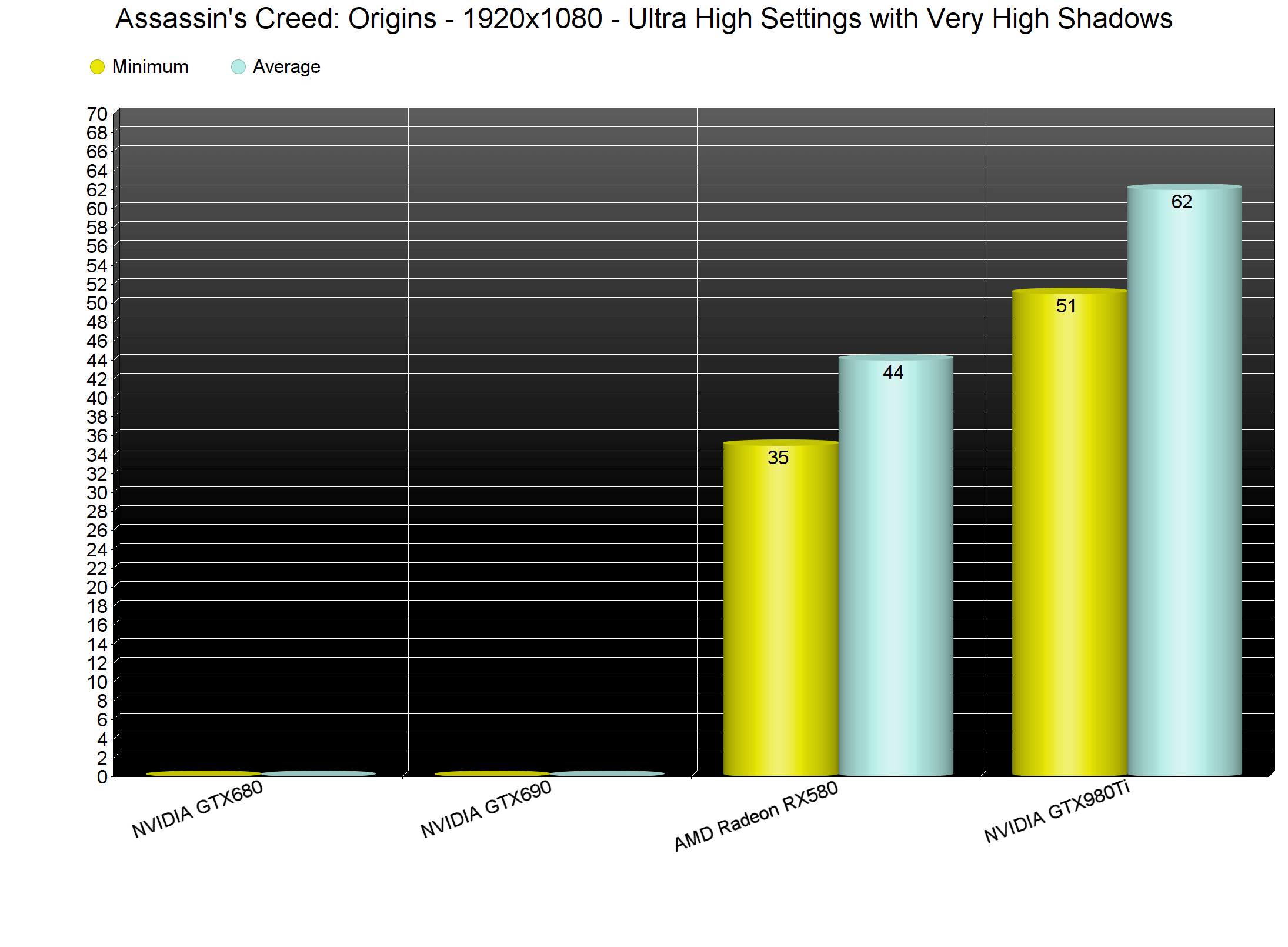

For this PC Performance Analysis, we used an Intel i7 4930K (overclocked to 4.2GHz) with 8GB of RAM, AMD’s Radeon RX580 and NVIDIA’s GTX980Ti, Windows 10 64-bit, and the latest GeForce and AMD drivers. We did not test the GTX690, as NVIDIA has not yet added an official SLI profile for this game. On top of that, the game uses more than 2GB of VRAM, so the card’s 2GB of memory per GPU would limit us on the higher settings. So yeah, owners of relatively dated SLI systems should wait until the green team releases an SLI profile.

Ubisoft has included plenty of graphics settings to tweak. PC gamers can adjust the quality of Anti-Aliasing, Shadows, Environment Details, Textures, Tessellation, Terrain and Clutter. Gamers can also tweak Fog, Water, Screen Space Reflections, Character Textures, Character Detail and Ambient Occlusion. There are also options to enable/disable Depth of Field and Volumetric Clouds. Furthermore, Ubisoft has added an FOV setting, a Resolution Scaler and an FPS limiter.


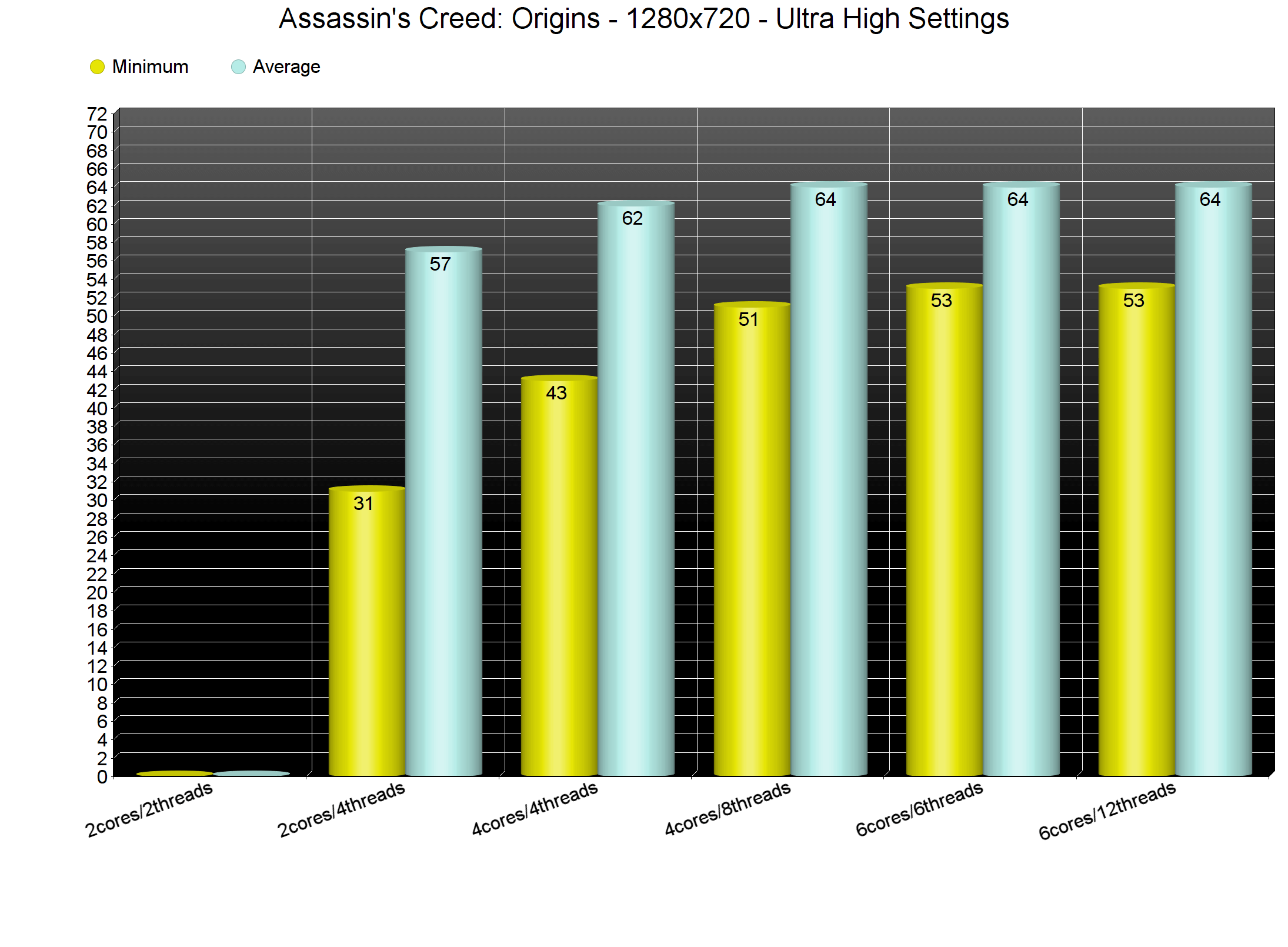

Assassin’s Creed Origins requires a high-end CPU for those who want to play it at more than 30fps. To find out how the game performs on a variety of CPUs, we simulated a dual-core and a quad-core CPU. We also lowered our resolution to 720p and set the Resolution Scaler to 50% for our CPU tests. This removed any possible GPU bottleneck, as GPU usage on our GTX980Ti stayed at 60-70% at all times.

Without Hyper Threading, our simulated dual-core system was unable to deliver acceptable performance due to severe stuttering. With Hyper Threading enabled, the in-game benchmark ran with a minimum of 31fps and an average of 57fps. However, we still experienced occasional stutters, so the experience is not smooth at all unless the framerate is locked to 30fps.

Assassin’s Creed Origins is one of the few games that benefits from Hyper Threading even on quad-core CPUs. The game scaled across all eight threads on our simulated quad-core, and the difference between Hyper Threading On and Off was around 10fps in minimum framerates. Strangely enough, the average framerates remained the same. Still, in real-world scenarios, and especially in cities, Hyper Threading will make a difference.

However, we were a bit disappointed with the game’s performance on our six-core. As we can see, the game used all twelve threads. Since we added four more threads, we were expecting at least a small performance boost. Disappointingly, we did not get any performance increase. Hell, even our minimum framerate remained the same as that of our simulated quad-core system. Moreover, disabling Hyper Threading on our six-core system had no effect at all on our overall performance.

We seriously don’t know why the game uses additional threads when in fact there isn’t any performance increase whatsoever. It’s worth noting that Assassin’s Creed Origins uses the Denuvo anti-tamper tech. Whether this has anything to do with this extreme CPU usage remains to be seen.

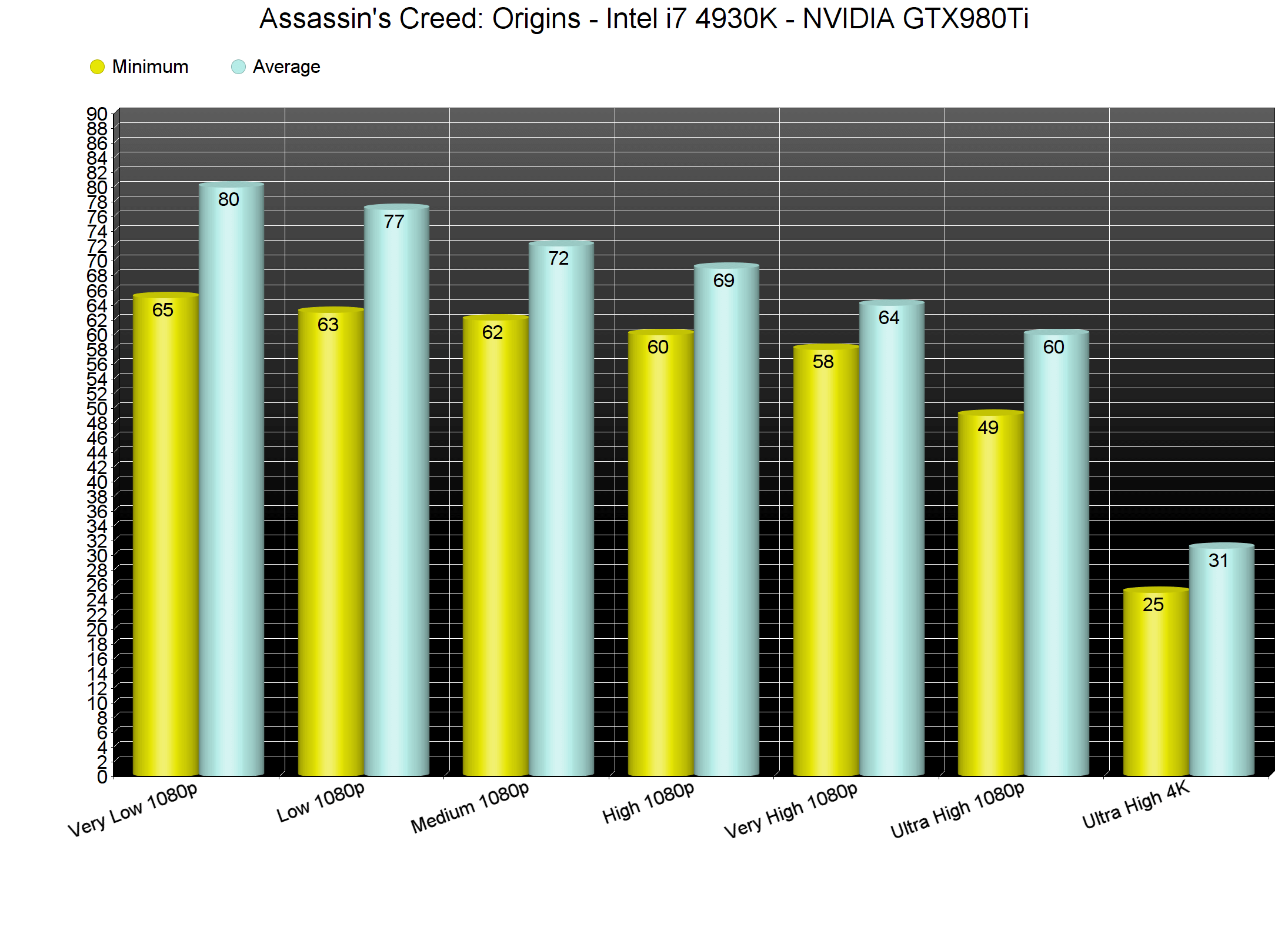

Assassin’s Creed Origins comes with six presets: Very Low, Low, Medium, High, Very High and Ultra High. However, despite the many settings available, the difference between Very Low and Very High is only 7fps in minimum framerates. This basically means that Assassin’s Creed Origins does not scale down well to older PC systems. Still, the good news is that we were able to get an almost locked 60fps experience on Very High. On Ultra High, our minimum and average framerates dropped to 49fps and 60fps, respectively. And to be honest, the visual difference between Very High and Ultra High is minimal. From what we discovered, the option with the biggest impact on the CPU is Environment Details. Therefore, we strongly suggest lowering this option first if you are CPU-bound.

As mentioned, we did not test the NVIDIA GTX690 as there isn’t any SLI profile yet for Assassin’s Creed Origins. Owners of GPUs with 2GB of VRAM will have to use Medium Textures to avoid VRAM limitations. On the other hand, owners of GPUs with 4GB of video memory will have no trouble at all, as the game does not use more than 3.7GB on Ultra High at 1080p. Higher resolutions also increase VRAM usage, so you might run into VRAM issues above 1080p.

During our tests, the NVIDIA GTX980Ti performed fine on slightly tweaked Ultra High settings. For some reason, we experienced a lot of stutters with Ultra High Shadows; simply dropping them to Very High resolved these issues. In the red camp, and even with the latest drivers that include optimizations for Assassin’s Creed Origins, the AMD Radeon RX580 performed poorly. As we can see below, our GPU usage was far from ideal, even though we were not hitting any CPU bottleneck. It’s pretty obvious that AMD needs to further optimize its drivers for Ubisoft’s title. Therefore, if you own an AMD GPU, we strongly suggest waiting until both Ubisoft and AMD address these issues.

Graphics-wise, Assassin’s Creed Origins looks absolutely stunning. Ubisoft has used a lot of high-resolution textures, the environments look great, there is bendable grass, and everything looks top notch. However, the lighting system did not impress us as much as Unity’s did back in 2014. Don’t get us wrong, the lighting is better than what we find in other open-world games like Shadow of War. However, from a series that made our jaws drop in 2014, we were expecting something more. Furthermore, there is noticeable pop-in of objects and shadows, even on Ultra High settings. Still, Assassin’s Creed Origins is, without a doubt, one of the most beautiful open-world games to date.

In conclusion, the game suffers from some performance and optimization issues on the PC. SLI owners will not be able to take full advantage of their machines, and the game currently has major optimization issues on AMD’s GPUs. The game also requires a high-end CPU, and even though it scales across twelve CPU threads, the performance difference between a quad-core and a six-core is really small. With Hyper Threading disabled, our six-core was only around 10fps faster than our simulated quad-core in minimum framerates, which is less than we expected. With Hyper Threading enabled, there is no performance difference at all, so something is definitely going on here (as the game does use the additional threads of a six-core CPU).

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
