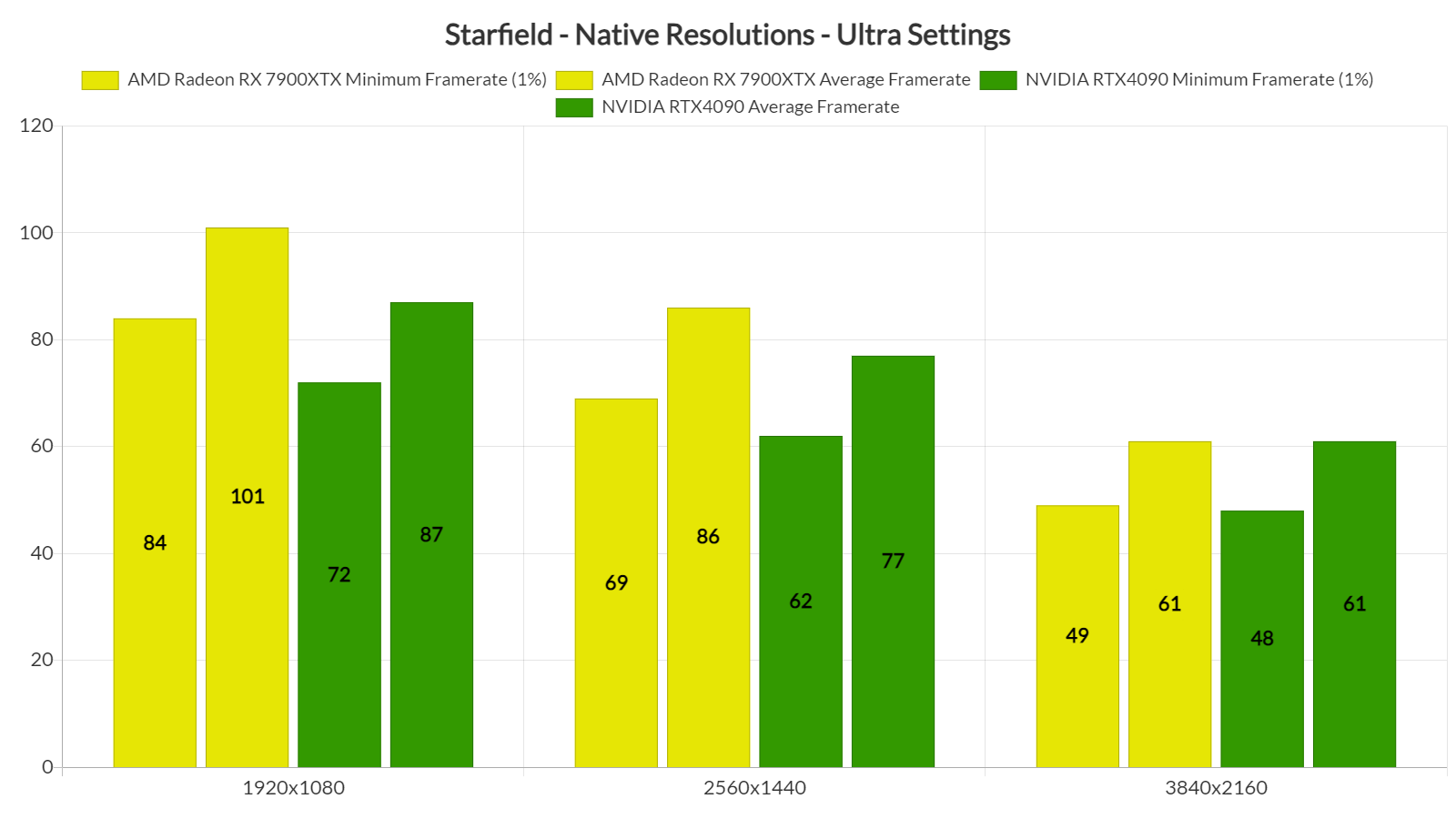

Now here is something that shocked me while benchmarking Starfield. According to our benchmarks, the AMD Radeon RX 7900XTX is noticeably faster than NVIDIA’s flagship GPU, the GeForce RTX 4090, in Starfield.

For our benchmarks, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, NVIDIA’s RTX 4090, and AMD’s Radeon RX 7900XTX. We also used Windows 10 64-bit, the GeForce 537.13 and the AMD Adrenalin 23.8.2 drivers. Furthermore, we disabled the second CCD on our 7950X3D.

Starfield does not feature a built-in benchmark tool. As such, we decided to benchmark the city of New Atlantis. This is the biggest city in the game, and it features a lot of NPCs. Thus, it can give us a pretty good idea of how the rest of the game will run. The area we chose to benchmark is this one. From what we could tell, this was the most demanding scene in New Atlantis.

Before continuing, we should note a really bizarre behavior. Bethesda has tied FSR 2.0 and Render Resolution to the in-game graphics presets. When you select the Ultra preset, Starfield automatically enables FSR 2.0 and sets Render Resolution to 75%. When you select the High preset, the game automatically reduces Render Resolution to 62%. At Medium settings, it goes all the way down to 50%. This is something you should pay attention to. Otherwise, the game will run at a noticeably lower internal resolution than the one you selected.
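To put those percentages into perspective, here is a minimal Python sketch that estimates the internal resolution for each preset. The preset percentages come from the behavior described above. The function name and the assumption that the percentage scales each axis (width and height) rather than the total pixel count are ours, and are not confirmed by the game.

```python
# Render Resolution percentages per preset, as observed in the game's menu.
PRESET_RENDER_SCALE = {"Ultra": 75, "High": 62, "Medium": 50}


def internal_resolution(width, height, preset):
    """Estimate the internal render resolution for a given output resolution.

    Assumption: the Render Resolution percentage is applied to each axis.
    """
    pct = PRESET_RENDER_SCALE[preset]
    return round(width * pct / 100), round(height * pct / 100)


if __name__ == "__main__":
    # Example: a 4K (3840x2160) output resolution.
    for preset in PRESET_RENDER_SCALE:
        w, h = internal_resolution(3840, 2160, preset)
        print(f"{preset}: {w}x{h}")
```

Under that assumption, a 4K output on the Ultra preset would be rendered internally at roughly 1620p before FSR 2.0 upscales it, which is why the "Native" results in this article require setting Render Resolution back to 100% by hand.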

So, with this out of the way, at native 1080p/Ultra settings, the AMD Radeon RX 7900XTX is 16% faster than the NVIDIA RTX 4090. Then, at native 1440p/Ultra, the performance gap between these two GPUs drops to 11%. And finally, at native 4K/Ultra, the RTX 4090 manages to catch up to the RX 7900XTX.
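For readers who want to run the same comparison on their own numbers, the "X% faster" figures above are relative differences in average framerate. A minimal Python sketch follows. The function name is ours, and the framerates in the example are illustrative placeholders, not our measured results.

```python
def percent_faster(fps_a, fps_b):
    """Return how much faster card A is than card B, in percent.

    A positive value means A is faster; a negative value means B is faster.
    """
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (fps_a / fps_b - 1) * 100


if __name__ == "__main__":
    # Placeholder framerates for illustration only (not measurements).
    card_a_fps = 116.0
    card_b_fps = 100.0
    print(f"Card A is {percent_faster(card_a_fps, card_b_fps):.1f}% faster")
```

Note that the baseline matters: a card that is 16% faster than another does not mean the other card is 16% slower, since the two percentages are computed against different denominators.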

At first, I couldn’t believe my eyes (and my notes). So I went ahead and uninstalled the NVIDIA drivers, removed the RTX 4090 from our PC system, re-installed the AMD Radeon RX 7900XTX, re-installed the AMD drivers, and re-tested the game. And behold the performance difference between these two GPUs. The screenshots on the left are on the RX 7900XTX and the screenshots on the right are on the RTX 4090. Just wow.

I seriously don’t know what is going on here. However, you can clearly see that the RTX 4090 is fully utilized (so no, we aren’t CPU-bottlenecked on NVIDIA’s GPU in any way). We all assumed that Starfield would work best on AMD’s hardware, but these performance differences are insane.

Stay tuned for our PC Performance Analysis!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
