Valve has just shared the May 2023 results of its Steam Hardware Survey, covering GPUs and CPUs. This monthly survey collects data about the computer hardware and software that Valve’s customers are using.

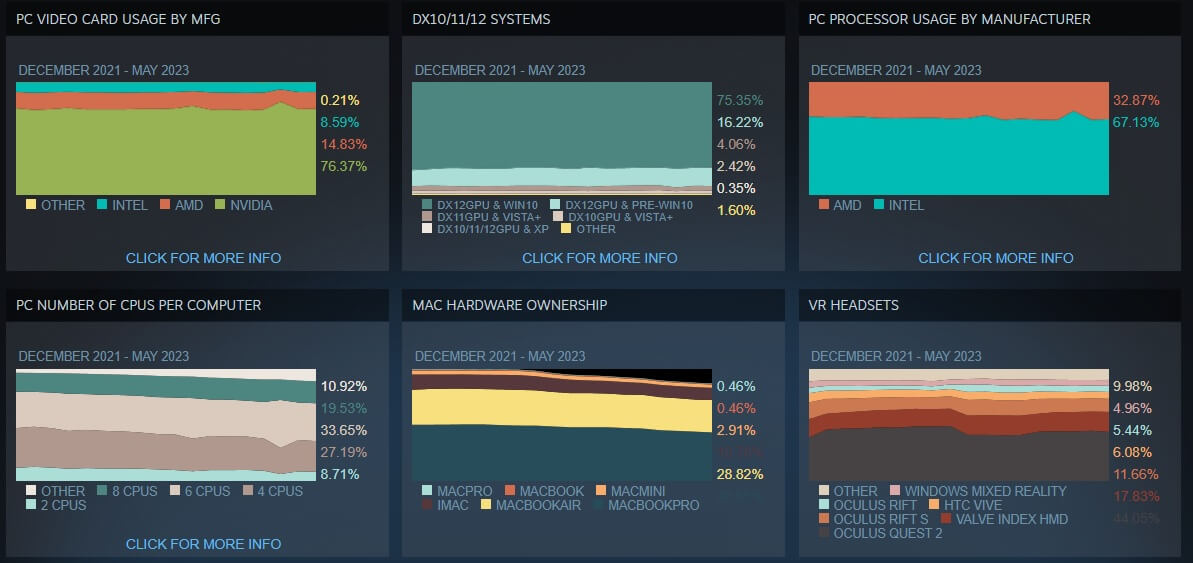

As we can see, Intel remains the king of CPUs. Although most Ryzen CPUs were reviewed favorably, the red team has not managed to gain significant CPU market share. From December 2021 until May 2023, AMD has only managed to increase its market share by 2.5%.

When it comes to GPUs, NVIDIA is simply unstoppable. The green team holds 76% of the GPU market, making it the number-one choice for most PC gamers. And yes, even after the ridiculous launch of some of its RTX 40 series GPUs, the green team has retained its market share. Similarly, AMD has held on to its own GPU market share, and Intel remains in third place (with only 8.5%).

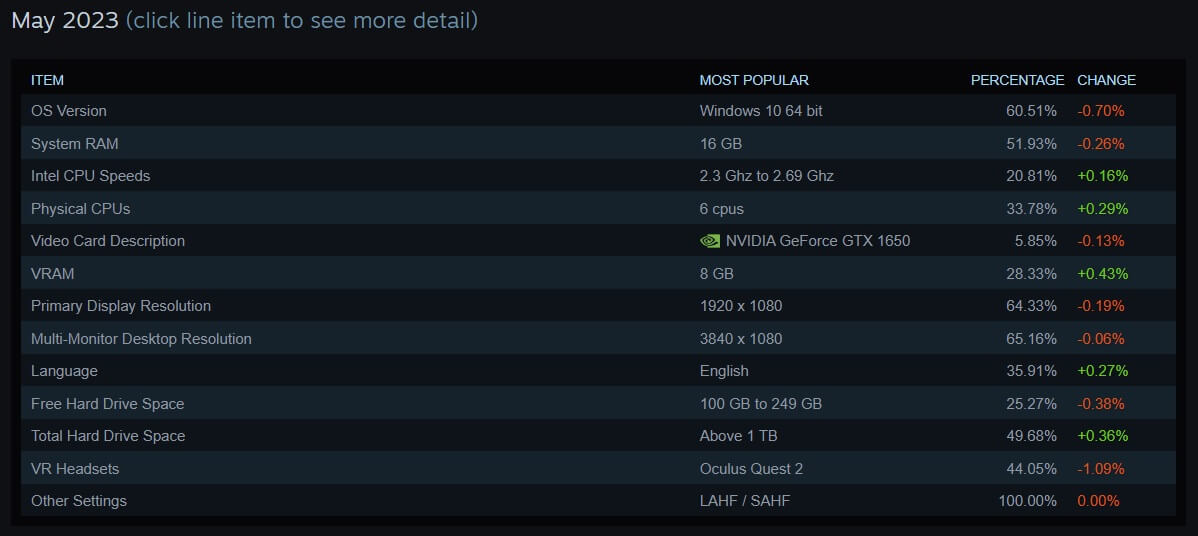

The most common GPU appears to be the NVIDIA GeForce GTX 1650. Thankfully, the majority of survey takers are using CPUs with at least six cores. Moreover, most of them run their games at 1080p, and the majority of their GPUs are equipped with 8GB of VRAM.

All in all, it is clear that NVIDIA has no reason to worry about the reception of its GPUs. I mean, its market share remained the same, even after the launch of the RTX 4060. And let’s be realistic here: the gap between the green and the red team is HUGE. So, unfortunately, I don’t see AMD putting any pressure on NVIDIA in the foreseeable future. As for Intel… well… I won’t be surprised if the blue team abandons its GPU plans after the release of Battlemage.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles ever made. Still, the PC platform won him over, mainly thanks to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher-degree thesis on “The Evolution of PC graphics cards.”
