Creative Assembly has just released the DX12 patch for Total War: WARHAMMER. And while the game’s DX12 renderer is still in beta, it appears that there are no benefits at all for NVIDIA users. In fact, the DX12 renderer for Total War: WARHAMMER comes with a significant performance hit on NVIDIA hardware, something that genuinely surprised us.

In order to measure DX12 performance, we used both an Extreme scenario and the in-game benchmark tool.

Here are the graphics settings that we used in both DX11 and DX12, and here are our specs:

- Intel i7 4930K (turbo boosted to 4.0GHz)

- 8GB RAM

- NVIDIA GTX 980 Ti

- Windows 10 64-bit

- Latest NVIDIA WHQL driver

As we can see, there is a 26fps performance difference between DX11 and DX12. What’s really surprising here is that DX11 runs way, way, way better than DX12 on NVIDIA’s hardware (DX11 performance is on the left, whereas DX12 performance is on the right).

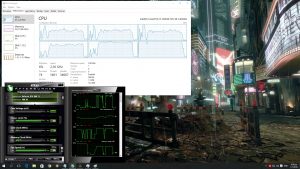

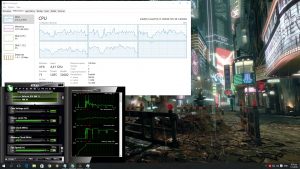

One of the biggest advantages of DX12 – at least according to Microsoft – is its improved multi-threading capabilities. However, as we can clearly see in both the in-game benchmark and our Extreme scenario, the game is unable to properly take advantage of all our CPU cores (again, DX11 graph is on the left whereas DX12 graph is on the right).

There are no improvements at all to the engine’s multi-threading under DX12, which explains the underwhelming performance in our Extreme scenario (we were getting 11-16fps in both DX11 and DX12). And here is the Extreme scenario we used:

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”


*grabs popcorn*

Make sure it’s an extra-large box.

And cheese on top. And bacon.

With NVIDIA and AMD fanboy logos on the side of the box, and a box of tears as a side order.

There isn’t a box big enough for all those salty tears. :p

Nvidia DX12: -27%

AMD Radeon DX12: +50%

Nvidia cards don’t support asynchronous shaders. Only Radeon cards have full support for DirectX 12 and Vulkan. Radeon RX 480 FTW! A few weeks ago I wanted to buy a GTX 1080, but now I know that 2x RX 480 is better for me.

There is a 7FPS difference with a GTX 1080 between DX11 and DX12, and it can get 70FPS at 1440p Ultra with 2xMSAA. This is an AMD-branded game, and AMD even provided a DX12 benchmark for it. So yeah, for the last month AMD has been working with the dev on DX12; NVIDIA hasn’t. Also, where did you get 50% from? LOL

Maxwell isn’t going to see gains with DX12’s Async, that is old news.

Pascal won’t get much better; it still relies on preemption. It won’t be slower, but it won’t be much faster either. AMD, on the other hand, gets a huge boost from async shaders, and thanks to DX12/Vulkan all those console optimizations can be ported to the PC version very easily.

Two cards are useless for Unreal Engine 4 games, Unity games and most current DX12 games, since there is no CrossFire or even SLI support.

“We’re pleased to confirm that Total War: WARHAMMER will also be DX12 compatible, and our graphics team has been working in close concert with AMD’s engineers on the implementation. This will be patched in a little after the game launches, but we’re really happy with the DX12 performance we’re seeing so far”

Is it Nvidia’s fault if CA never contacted them about DX12?

They are partnered with AMD and their first priority is AMD. They are not hiding it. They did not even contact Nvidia’s driver team or engineers for input, the way id Software or Square Enix did.

Of course and it is right.

Nope, and people who say that are probably stupid. We know Gameworks hurts AMD’s performance and it shouldn’t be in the presets, but AMD runs such titles with Gameworks off in their own benchmarks, so it’s a non-issue if you don’t use the presets. Some presets don’t have any Gameworks features enabled at all.

I’m totally against Gameworks features being in the presets; AMD users can turn them off just like AMD does in its tests.

Go look at the benchmarks yourself, then. The Division runs better on AMD GPUs. The RX 480 on Ultra with HairWorks off averages 53FPS in The Witcher 3, and Doom on Ultra at 1080p runs at 93FPS.

It depends on which benchmarks you look at. AMD also performs better than NVIDIA in some DX11 games; I don’t see the big deal. We know AMD performs better in DX12, and the RX 480 will perform better in upcoming DX12 games. As for people saying I’m an NVIDIA fanboy: an NVIDIA fanboy would never admit AMD is better in any way.

Want more examples? How about Far Cry 4 (another Gameworks title)? AMD even used that game to showcase how their Fury X is significantly faster than Nvidia’s 980 Ti.

Assassin’s Creed Unity and Watch Dogs, two of Ubisoft’s most expensive IPs, were total disasters, and they were Gameworks titles.

Since then Ubisoft has changed the way it uses Nvidia features, which clearly have less impact now. That is why AMD is able to perform better in newer Ubisoft games, and this will only improve further, as most Ubisoft games in 2017 should be DX12 compatible.

Gameworks doesn’t scupper AMD cards, UNLESS you turn the features on. They’re extra features to improve the look of the game. You’re a bit stupid.

I wish more people understood that AMD fans are so salty because AMD has basically no market share in the GPU sector. Now, with the RX 480 having the potential to actually kill motherboards or cause instability, I see little to no reason to even bother with AMD anymore. I mean, how the hell was that not tested before release?

Microsoft and Sony have sold something like 60 million consoles, so GCN GPUs are more relevant for game developers than any Nvidia GPU generation.

Then explain why modern games still perform better on Nvidia, except for 0.1% of releases. By that logic you would see every game performing better on AMD than on Nvidia. It’s not happening; the people making the games use Nvidia workstation GPUs, not AMD ones.

You should explain how AMD has no GPU market share when GCN GPUs are more relevant for game developers than any nvidia GPU.

Also, studios have only just started seriously tuning their game engines for GCN; games launching at the end of this year and next year will be quite interesting for AMD GPU owners.

Man, all I ever hear from AMD fans, and sadly sometimes from the company, is “wait, it’ll be amazing!” Phenom had it: when it didn’t have DDR3 memory, people said to wait for DDR3 because it would be way better. With Bulldozer, before the Windows 7 software update, AMD fans claimed it would be so much better after the update. Piledriver was supposed to fix everything. Polaris was supposed to be even with a 980, but it can’t even keep its wattage under control; it’s on 14nm, yet it performs like and draws just as much power as a 970 on 28nm.

Now we have Zen, which I hope actually has the IPC needed to make it competitive, and the clock speed to go with it.

I’m just happy AMD’s stock is up, and I really hope Zen changes things.

So no answer to my question. Typical.

I already answered that and you ignored it: “You should explain how AMD has no GPU market share when GCN GPUs are more relevant for game developers than any nvidia GPU”

Because they’re not. Developers don’t use AMD workstation GPUs when coding or making the game; they mainly use Nvidia. Again, if it were different, AMD would be performing a lot better than it does in modern games.

But as always, just keep on waiting. AMD fans have been waiting since 2006; I stopped after Bulldozer. I guess it takes longer for others.

Now that is a lot of nonsense. Good job, you truly are incoherent.

No, not really, and you ignored the answer again. Numbers don’t lie: most devs aren’t using FirePro.

Consoles have their own dedicated dev kits.

How on earth is this nonsense? Have you even been following AMD for the last 10 years? Again, it’s an actual fact that FirePro has very, very little market share and that most game devs use Nvidia. No conspiracy here. As for waiting until it’s better, that has been happening (for AMD fans) since the original Phenom. Go read for yourself: people claimed Phenom I was underperforming because it only had DDR2, BUT Phenom II proved that false, as it still underperformed with DDR3 support.

Software fixes never fixed Bulldozer’s horrible single-threaded performance. Again, take off your freaking bias glasses.

You keep dancing around the subject, mostly because you are incapable of giving a straight answer.

“Amd has basically no market share in the GPU sector”

This statement is obviously wrong, but continue with your nonsense.

Again, they have 25-30% market share in the PC market and 100% control of the console market. If the console market mattered that much to the PC market when it comes to optimization, why on earth wouldn’t AMD be performing better today?

Again, what? You said “basically no market share” and then started mumbling something about Phenom and DDR3, as if that has any relevance to this discussion.

Also, it takes a few years for game developers to tune their engines for a new generation of consoles; this is why Microsoft launched DX12, to allow developers to make better games for Xbox.

DX12 support from most upcoming AAA games is expected.

First Mantle, now DX12 is supposed to save AMD. What’s next? What’s going to save AMD is a better, more efficient architecture than its competition’s.

I thought DX12 was going to save Nvidia and destroy Mantle.

Nah, AMD fanboys previously claimed that DX12 is Mantle; now you have Nvidia fanboys claiming Vulkan

Not even sure why I bother. All AMD fanboys ever claimed was “just wait and see.” Again, that has been going on since the original Phenom.

“just wait and see” are not my words, but continue with your nonsense.

You keep saying false things which you obviously can’t support.

Your insistence on going on is a fine example of stupidity.

Not really, as I can’t expect others to even take your wait-and-see approach to such a topic, and I used evidence of AMD fanboys doing that in the past as well. The only thing I want people to see is how the products perform TODAY! Ten years from now, just like 10 years ago, I will be hearing the same “just wait and see” approach from AMD fans.

Users don’t have to wait for anything you are delusional.

What 10 years?

This is what happens when you have no arguments.

Right from the start you couldn’t support anything of what you said.

You must be new to PC hardware and have no idea what you are saying. When did you build your first PC, two years ago? When did you start following forums?

Back before the first Phenom came out, AMD fans were claiming it would bring AMD back to the high end, but it didn’t. Then came Phenom II, and they even went as far as saying it was performing worse because it started out using only DDR2 memory, but of course that was false.

Then they all said to just wait for Bulldozer, and we all know how that went down. Now we have Zen, which I hope will finally break the trend and make AMD competitive again in the CPU market.

As for GPUs, things are typically a lot more competitive, except maybe in sales. In the PC market Nvidia will get the most optimizations, since 70+% of users own an Nvidia video card. That is why we see so many Nvidia-sponsored titles and so few AMD ones.

Gameworks games run like dogs on AMD hardware even with those features disabled. Most of the time, crap is baked into those games that gives Nvidia a big boost. Seems no one has an issue with that as long as it’s their card that benefits.

In general, games that use Gameworks features have performance problems even on Nvidia GPUs; those GPUs just aren’t affected to the same degree.

You know ATI paid billions for Half-Life 2. Since AMD bought ATI, they have continued to gimp Nvidia performance.

Both companies actually do it; it’s just that, of the two, Nvidia has more money to throw at developers. Look at DiRT Showdown, for example. It used an AMD technique called Forward+ for global illumination, which ran terribly on any Nvidia hardware. But there was no internet rage about it, because Nvidia never attacked AMD back then.

Are you sh**ting me!? You lot can’t wait to jump on Nvidia when a game struggles. Nvidia Crapworks etc. Piss off out.

It is? Then why do we see Gameworks rage everywhere? Whenever a game is sponsored by Nvidia, people are quick to blame Gameworks even when Gameworks effects aren’t being used. Look at Project CARS. The developer already said the game did not use any kind of GPU PhysX, but people still blame PhysX as the main cause of AMD’s poor performance in that game.

No. It is exactly the same. AMD fanboys blame NV for everything they don’t understand. And NV fanboys do the same.

CA never worked with Nvidia on DX12 due to their Gaming Evolved partnership with AMD.

“We’re pleased to confirm that Total War: WARHAMMER will also be DX12 compatible, and our graphics team has been working in close concert with AMD’s engineers on the implementation. This will be patched in a little after the game launches, but we’re really happy with the DX12 performance we’re seeing so far”

Because AMD is the only one with a clue about DX12.

You mean they finally stopped crying and started doing something about their sub-par DX11 performance, poor drivers and blame game.

They’ve been winning ever since the first DX12 games came out and will continue through this generation as Nvidia still can’t handle DX12 properly.

This is total BS. AMD and NVIDIA both have experts in this area. As Wrath wrote, AMD has started to do something. They finally understand that crying and complaining is not enough.

Yes, remember both Lisa Su and Raja Koduri crying several times.

Every time NV came out with some tech like PhysX or Gameworks, AMD complained and spread BS about it. As I remember, NV didn’t complain about anything and didn’t take part in writing articles complaining about their competition.

That’s because Gameworks is a black box and it’s not just Nvidia that has complained. Developers have been complaining about it as well. Dismissing this as “crying” is both disingenuous and infantile.

OK. The source code of the most used Gameworks parts is now available. Can you tell us exactly where in the source code the problems behind these complaints are?

The developer can request the source code, but AMD naturally cannot. So when Gameworks is used, Nvidia is already covered on the optimization front, but AMD generally has to wait until the game is released to start optimizing.

That’s the source code for the old SDK versions; Nvidia is pushing newer Gameworks code that isn’t open in any way.

So basically nothing changed.

But if I remember correctly, the guys at Nvidia stated that they worked closely with Microsoft during DX12 development, yet they seem to be running away from DX12. Why would a developer ask Nvidia to help them with DX12 when Nvidia is trying to avoid this API?

Look what happened with Tomb Raider. The previous game was decently optimised, and even the new game runs more than OK on the weak Xbox, but the PC version has been a total letdown in terms of optimisation. Thank you, Nvidia.

You should thank the game developers for good or bad optimisation. This is not the work of AMD or NVIDIA. They can cooperate with devs if the devs want them to, or help implement advanced features (to distinguish the PC version from the console one). But optimisation as a whole is the responsibility of the game development studio, because AMD and NVIDIA are not developing games.

Funnily enough, when Nvidia collaborates with developers, their games turn out as bad, unoptimized little disasters.

Like Far Cry 3 vs. Far Cry 4, for example.

Because NVIDIA is rewriting the developers’ code, right? 🙂 There are also games like The Division, The Witcher 3, Dying Light and others which run well. You don’t know what you are talking about. I can see you have no development experience at all. You only know some statements from the internet.

“Why would a developer ask nvidia to help them with DX12 when nvidia is trying to avoid this API?”

This is total BS. NVIDIA is not running away from DX12. Where did you get that from?

Would have been better to show 1070 or 1080 performance instead of 980ti since they have better preemption than Maxwell.

We’d have to test again after the beta is over. Injecting DX12 features instead of building the foundation of the game on DX12 gives two totally different scenarios. It was pretty much the same for RotTR’s DX12.

All GTX cards get hammered in Warhammer DX12, even the GTX 1080; look at the Steam forum.

They never worked with nvidia on DX12.

Shame if true.

But still, injecting DX12 features into a DX11 title is like getting pyrite and calling it gold :/

True. But Hitman is actually performing better on DX12 than other games, so you might say that RotTR’s implementation was not something they wanted to push. I don’t want to sound fanboyish or anything like that, but I’m starting to believe that Nvidia is leveraging its market share to hold back low-level APIs as much as possible in order to catch up. I mean, their only DX12 sponsored game is RotTR and, as you said, it’s crap. My guess is Nvidia never actually foresaw the demand for this and didn’t improve their tech to leverage it. AMD did, because they had to work with that on consoles, and then pushed Mantle, which soon gave birth to DX12 and Vulkan. For that very reason, I doubt Nvidia managed to improve async much in Pascal. We’ll have to wait and see how Pascal fares with newer and better-implemented DX12 games.

Even in Hitman the result is not always positive, and according to many reviewers the DX12 version ends up with far more stability issues than the DX11 version. But then again, it is a game built from the ground up to take advantage of AMD hardware. That’s why it was significantly faster on AMD even in DX11 compared to the Nvidia equivalent.

I believe Quantum Break and Forza Motorsport 6: Apex are, but I may be wrong.

Future compatible. Future proof is non existent 😛

Just a joke bruh

I’ve seen a GTX 1080 get 7FPS less in the DX12 benchmark; see “DudeRandom84”’s tests on YouTube.

1440p – Ultra – 2xMSAA:

- GTX 1080 – DX12 – 63FPS

- GTX 1080 – DX11 – 70FPS

- GTX 1070 – DX12 – 47FPS

- Fury X – DX12 – 41FPS
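For reference, the relative drop implied by the two GTX 1080 figures above can be worked out with the usual percentage-change formula, (after - before) / before × 100. The short Python sketch below only applies that formula to those two numbers; the function name is an illustrative choice, and the result describes this one card at these particular settings, nothing more.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100.0


# GTX 1080, 1440p, Ultra, 2xMSAA (figures quoted from DudeRandom84's tests above)
dx11_fps = 70.0
dx12_fps = 63.0

change = percent_change(dx11_fps, dx12_fps)
print(f"DX11 -> DX12: {change:+.1f}%")  # DX11 -> DX12: -10.0%
```

So the "7FPS less" for the GTX 1080 in these tests works out to a drop of about 10%.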

“The Nvidia cards did stutter during gameplay and the game crashed constantly. I didn’t have any problems with the AMD.”

Looks like NVIDIA needs a driver update, like the one for Tomb Raider’s DX12, which now averages exactly the same in DX11 and DX12 on my GTX 970: 75FPS each.

I never even made such a claim, maybe you just made that up in your own head.

“We’re pleased to confirm that Total War: WARHAMMER will also be DX12 compatible, and our graphics team has been working in close concert with AMD’s engineers on the implementation. This will be patched in a little after the game launches, but we’re really happy with the DX12 performance we’re seeing so far”

This explains very well why Nvidia cards are getting hammered: CA did not take any input from Nvidia for DX12.

That’s not why. AMD cards are simply better than Nvidia in DX12, and conversely Nvidia’s DX11 implementation is a little better than AMD’s.

precisely

Maxwell has async compute. It’s 31 pipelines vs. AMD’s 64, though.

An audience member asked the NVIDIA engineer about async compute in Pascal as he finished explaining how it works.

Audience member: Is this in hardware or software?

NVIDIA Engineer: This is in hardware, it has to be in hardware.

NVIDIA showed an async compute demo running in DX11.

/watch/?v=Bh7ECiXfMWQ

Yes, we know about AMD’s ACE units, and Pascal’s improved preemption is still behind them. It’s just the silly people saying “no async”; it’s there, just implemented differently or, in Maxwell’s case, broken. Under some workloads NVIDIA’s async is fast with lower latency; under high workloads it’s useless.

The right question would have been: Do you support Async Compute the same way AMD does, can your GPUs do the same things?

The clear answer will be: NO.

Do not use MSAA. It will stutter.

Don’t mind him, he’s an Nvidia fanboy. I see him a lot on wccftech spewing bs about AMD all the time.

You are an idiot, and an AMD fanboy judging by your own comment history. Go look at my comment history, you liar, and show me where I’m “spewing bs about AMD all the time.” I don’t even comment on that site.

Are you taking one title and trying to say its a conspiracy?

What conspiracy?

Ahh, I see you’re defending your main account with a sockpuppet account. Nice work.

Good luck trying to prove something I can’t disprove, idiot.

I see him on YT a lot as well. Same story.

Not an argument.

Dirty AMD tricks. Good thing we didn’t need to wait long for payback.

wccftech . com/amd-rx-480-killing-pcie-slots/

Nvidia and AMD use different architectures. People need to realize that AMD made Mantle-DX12 only for their GPUs.

So I realize that if I want to play in DX12 I just need to simply have 1 Radeon card and if I want use vulkan I’ll just simply switch out the Radeon and put in the Nvidia card? How convenient…

Negative. Both are good for both APIs. AMD gets more benefit out of async compute and future asynchronous uses, as Nvidia’s hardware is geared towards single-threaded APIs (OpenGL, DX11, etc.) while AMD’s is geared towards low-level APIs. It’s just that this title needs some work on Nvidia hardware.

Well, I can say Nvidia gets no benefit from DX12, none. Tried it with my 980 Ti.

And why is that?

It doesn’t handle asynchronous computation between the CPU and GPU well, but AMD can. Tried it with my 980 Ti and it blows HARD; it’s as if the 980 Ti is 10 generations behind.

Maxwell and Pascal are both software-scheduled, while their hardware is geared towards serialized scheduling. Pascal is better at preemption than Maxwell, which results in better async performance; however, there is still a large gap between the companies.

AMD’s GCN is geared towards parallel processing with hardware scheduling, and async (compute and otherwise) is a form of parallel processing. It is actually harder for GCN to run single-threaded loads than parallel ones.

Nvidia will focus more on preemption to tackle async, but only once low-level APIs become mainstream enough to justify the move. They want to gear their hardware towards the future only when the future arrives. AMD instead designed its hardware for APIs that wouldn’t be out for 4+ years, after deciding it didn’t want DX11 (once it found out async in DX11 was a bust).
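The serialized-versus-parallel distinction described above can be illustrated with a deliberately idealized toy model in Python. It assumes a graphics pass leaves a fixed fraction of the GPU idle and that compute work can fill exactly that idle capacity with zero switching cost. It is not a model of how GCN, Maxwell or Pascal actually schedule work, and the function names and frame times are hypothetical.

```python
def serial_frame_ms(gfx_ms: float, compute_ms: float) -> float:
    """Graphics and compute run back to back: the costs simply add."""
    return gfx_ms + compute_ms


def overlapped_frame_ms(gfx_ms: float, gfx_utilization: float, compute_ms: float) -> float:
    """Idealized overlap: compute first soaks up the GPU capacity the graphics
    pass leaves idle; only the remainder extends the frame.

    compute_ms is measured in full-GPU milliseconds (toy assumption)."""
    if not 0.0 <= gfx_utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    idle_capacity_ms = (1.0 - gfx_utilization) * gfx_ms
    leftover_ms = max(0.0, compute_ms - idle_capacity_ms)
    return gfx_ms + leftover_ms


# Hypothetical frame: a 12 ms graphics pass keeping 75% of the GPU busy,
# plus 3 ms worth of compute work.
print(serial_frame_ms(12.0, 3.0))            # 15.0
print(overlapped_frame_ms(12.0, 0.75, 3.0))  # 12.0
# If the graphics pass already saturates the GPU, overlap buys nothing.
print(overlapped_frame_ms(12.0, 1.0, 3.0))   # 15.0
```

In this toy model, overlap only helps when there are idle resources to fill, which is one way to see why the same async workload can help one architecture a lot and another very little.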

AFAIK, people with 980 Tis (that’s me) and people with GTX 1080s are reporting poor fps in Warhammer, no matter how “good” our preemption works. As of right now, DX11 seems to be the only good option for Nvidia; people with 1080s have reported fps as low as the 30s while actually battling, not in the benchmark.

That’s a single title. Their Nvidia support in that game could be absolute garbage. In AotS they still run fine, and I haven’t heard anything negative about RotTR, Hitman or other titles. Remember, it’s up to the developers to essentially add their own support for the cards in their game.

You can thank AMD for that.

Microsoft DX11 – favors no one.

AMD DX12 – favors AMD.

I can thank whoever wants to split the market, which is both companies, though I’ll give both the benefit of the doubt that it was inadvertent.

It doesn’t favor AMD… it’s Nvidia’s fault that they did not want to use DX12.

Microsoft DX12 favors AMD. Fixed it for you.

So now it’s Microsoft DX12. Where are all the AMD trolls claiming that Mantle is DX12?

AMD already achieves impressive results with Vulkan:

http://image.prntscr.com/image/e79b38acc37043019a6dbc6da3ab848f.png

5% faster than a 980 Ti isn’t impressive, considering AMD claimed the Fury X would be 20% faster. The 980 Ti is also £110 cheaper than the Fury X now.

This is a Fury, not a Fury X. It is already faster than a Titan X and almost as fast as a 1080.

Agreed

I wouldn’t call this bad:

BAHAHAHAHAHAHHAAHHAHAHAAHAHAHAHAHAHAHAHHAHAHAHAHAAHHAHAHAHAHAHAH

And you have to realize that AMD made Mantle for their GPUs. *Microsoft* made DX12 for its Xbox.

I definitely got an increase with a 970. I checked it to make sure.

I’m right here, getting MORE FPS with DX12 in this game than I was in DX11 on a 970. Don’t know why others are having issues, but I’m more than happy. Did you want me for something BTW?

That’s good for us all; I hope we move to DX12 soon.

What about GTX 1080 and does SLI work in DX12?

For TW: Warhammer? No.

I doubt CA will implement NVIDIA multi-adapter for it, since DX12 is fundamentally broken for NVIDIA.

They have stated they’re working on further optimising CrossFire for DX12, though (it already works).

On my R9 280X I’m getting a 10-15fps performance boost, so DX12 is working for AMD cards.

Does it work on R9 200 series GCN 1.0??

DX12 support on AMD cards starts with 7xxx series and upward, got it? 🙂

In this game it doesn’t work on 200 series GCN 1.0; it won’t run.

http://wiki.totalwar.com/w/Total_War_WARHAMMER_DirectX_12_System_Requirements

Guru3D got their hands on the benchmark earlier. It seems that in their test the game would not run in DX12 on GCN 1.0 and Kepler.

In this game it doesn’t work on the 200 series; you need GCN 1.2 and up for it to work.

They’re sweeping the 290X under the rug.

AMD moves another piece across the chessboard, nice.

AMD’s sweep of the current gen gaming consoles, and the next generation consoles is checkmate in their favour already.

Checkmate when they are $5 billion in debt? AMD “won” the console generation because it was the only one that could provide x86+GPU. (Remember that Nvidia is not allowed to make x86 CPUs or do x86 code morphing: Intel paid Nvidia over 700 million dollars, and one of the conditions was that Nvidia is not allowed to do x86 code morphing.) Intel needs AMD to survive; otherwise the government would force Intel to license out x86.

But this is a bit off topic, guys.

I’d rather have AMD than price-gouging Nvidia and Intel’s business practises.

Yes, because all this ‘Dx12’ patch has done is enable async compute. There is a LOT more to Dx12 than this feature.

It’s not a question of it working or not – as many people think AC IS Dx12, thus AMD supports Dx12, Nvidia doesn’t (which is nonsense) – it’s the fact that CA are in AMD’s pocket entirely so they aren’t actually making proper use of Dx12.

Async Compute will be DX12’s most important feature. Looks like Nvidia is a brake on the advancement of DX12.

We’ve already seen AMD improve its async compute capabilities even further with Polaris, and the new consoles will both use similar GPUs.

Let me appeal to logic:

How can an API with much less overhead run worse on ANY GPU? There should always be gains, even on Nvidia– and most importantly on 8 core+ CPUs.

We haven’t seen any REAL DX12 titles yet (that only tends to happen when widespread adoption occurs and the game only supports that). Certainly AC is going to give some significant gains on AMD, but common sense says you should see some improvement on Nvidia as well, if the API is working properly and the game is optimised well.

Dx12 running worse on any system just means one thing: crappy Dx12 implementation.

It doesn’t run worse on ANY GPU; it mostly runs worse on Nvidia GPUs.

And the fact that we haven’t seen any real DX12 games is an advantage for Nvidia, hopefully their next GPU generation will be available by that time because it won’t look great for Pascal and especially Maxwell.

You don’t see improvement for Nvidia because their GPUs are not designed to take advantage of DX12, and it doesn’t look like they can do anything about it with driver updates.

I give up; you can’t read.

‘not designed to take advantage of Dx12’.

For the final time: Async Compute does not = Dx12.

It’s like speaking to a dense brick wall.

Why do you think ROTTR runs no better on AMD Dx12? Well, because it probably doesn’t use AC.

Hmmm. Dx12 without AC, imagine that– that must mean there’s more to Dx12 than Async Compute alone.. well.

Bye.

The only reason Microsoft did not make Async Compute a mandatory feature is that none of Nvidia’s GPUs would be DX12 compatible then.

The fact that both consoles can use this feature, and that development of important games starts with the console version, makes it a crucial DX12 feature.

And with or without this feature Nvidia doesn’t perform under DX12.

Yep. Game development is basically DX12/Mantle (Xbone/PS4), as both use AMD CPUs/GPUs and will continue doing so with the new versions… Unless Intel/Nvidia can keep up, expect to see them lose a lot of market share to Zen and Raven Ridge (the APU we’ve all been waiting for).

DX12 is Nvidia’s answer to keeping Vulkan away. Vulkan defecates on DX12.

Darn, this doesn’t look good for Nvidia. I wanted to get a 1070. Will Nvidia fix it?

Hardware/Design incompatibility from what I heard

Where did you get this info?

DTC ?

Considering that I play games in DX11, that most of my games probably won’t support it, and that most modern games suck, Nvidia is the way to go. There are barely any games I’m interested in, let alone DX12 games.

Maybe if we start getting more strategy games, more RPGs and more awesome Portal-like games… yeah? But right NOW (as in today), gaming is kind of meh.

So basically you will be abandoning PC gaming in the next year or two? Because by then everything will be DX12.

Lol, no it won’t. Why do people still not understand that DX12 is not replacing DX11? Some triple-A games might use DX12, but outside of that, small and indie devs will most likely stick with DX11, or even DX9. DX11 is already 6 years old, so why are we still seeing games released in DX9? And DX11 was supposed to replace DX9 and DX10 altogether, unlike DX12, which is optional for developers who want more control and have the resources to handle it.

Naah 🙂 Considering that I play Warcraft 3 almost every day, plus some other older games (around 100, actually), I won’t be doing any abandoning.

In fact, I have so many games to finish that I’ll be busy for the next 10 years at least. If my computer dies by then, I’ll upgrade it to W10/DX12 and yeah, I’ll catch up with the few games I’m interested in.

LOL wtf, I know a guy like you. He basically plays PC games on a 5-year-old laptop and never touches anything released after 2004. Lol, you guys aren’t relevant tbh.

Nah, I play modern games too! 🙂 I’m playing Dota 2, StarCraft 2, Diablo 3, Portal 2 (multiplayer custom maps), Battlefield 4 (soon BF1 too) and a few others. That’s still like 5-15 games past 2013.

We are relevant because we do upgrade our computers (I just got 2 SSDs for a shitload of money), and I’m already thinking of upgrading my PC. I have over 300 games on Steam/Origin, so you are wrong.

You know what’s sad? People who buy a game, finish it and move on to the next one. We all do it; heck, I barely touch most of those 300 games.

That’s a clear sign of MEH games. If you can’t go back to it, then it’s not that great a game (even if it feels good while you’re playing it). Why do you think some of the most played games are SC1/2, Dota 1/2, League, Counter-Strike and TF2? Super old games that are MOST played years after release, yet every BF and CoD game has trouble filling up 5 servers a year after release. I should know; my friends get those games (I do too).

Ehh, games that have a story and are meant for one solid playthrough are fine. You are aware that games with tons of replay value still release today, right? Look at the Paradox lineup. Games like Stellaris will be played forever. Look at the new Total Warhammer game. Games that last forever are still a thing.

I didn’t check out Stellaris yet, but I’ve heard a bit about it. How is it?

The new Total War game is not my cup of tea (I gave up on the series for now). They use the same combat system that has annoyed me for years. The last game I got was Rome 2, and they haven’t changed anything (unit vs. unit wise). I can explain more if you wanna know ;p

Anyways, Civ 5, StarCraft 2, GTA 4 + 5, Portal 2, Skyrim and Fallout 4 are singleplayer games I can play forever. With games like those, I don’t even know if I’ll ever need another game lol. They alone keep me so busy that I’ve barely had time to finish Mass Effect 3, and that came out years ago lol.

But yeah, if I named all the famous games I’ve missed lately (the last 5 years), you’d probably be shocked. The only games I’m excited about are:

Half-Life 3, Portal 3, Age of Empires 4, a new C&C, a new Homeworld (3?) and Dawn of War 3. Out of those, only DoW3 has been announced.

Stellaris launched with a weak mid game (this is why it was rated in the 70s), but that’s been fixed and patched since then. Give it a shot if you see it on sale. It’s awesome.

I’ll watch a few videos of the game; PC Gamer always says nice things about it 😛

“future proof” except maybe for your motherboard

Big bump for my Fury X. Nice!

What did I tell ya all?

NVIDIA will hold back gaming forever. Stop buying crap gimped hardware that is designed to become obsolete fast.

All I see is that you have up-voted yourself 5 times, including this comment… not cool man.

Why wouldn’t I? My comments are awesome.

Give it some time.

Once Nvidia starts losing major market share, they’ll start pushing out real cards, not the copy/paste jobs “with a new architecture” they’ve been vomiting out for years now.

So is it 27% slower in DX12 on NVIDIA’s hardware for anything older than Pascal? Oh, even on Pascal… Wow, AMD playing dirty like Nvidia did to them in DX11 games.

AMD users had to suffer for years during DX11… its time to flip the script.

That’s because of AMD’s bad drivers and blame game. AMD blamed the big boys for beating it up for years, and has now decided to grow up instead of blaming NVIDIA or Intel.

The problem is that you are a blind NVIDIA fanboy.

AMD’s DX11 drivers are OK; the problem was that AMD was late most of the time, but that has improved significantly in the last few months, not that an Nvidia fanboy would care.

AMD took a lot of advice from Microsoft and Sony when they developed GCN, and GCN works best under low-level APIs. That makes it harder for AMD to improve DX11 performance (DX11 is a high-level API): they don’t have a lot of options, and it’s more time-consuming. DX12 will change this, and most new games and game engines will be developed with GCN in mind right from the start.

AMD has been playing the long game, and it looks like it’s working.

How exactly is AMD playing dirty with DX12? Nobody stopped Nvidia from creating a DX12-friendly architecture.

That’s because Nvidia was too busy building their architectures around MS DX11, while behind their backs AMD was working with MS. And that is why DX12 works very well on older AMD cards compared to Nvidia’s older cards.

NVIDIA has had nearly 5 years (even assuming they had no prior knowledge of AMD’s work before the first GCN 1.0 cards launched) to come up with something. As usual, they first tried to blackmail devs (and succeeded to some extent), then campaigned to hold back the DX12 spec (and failed), and finally, in a panic, tried to fudge it with software emulation (and completely failed).

By the time Volta launches, it will have been 7 years since AMD supported async, and 5 years since they supported resource heaps.

I’m thinking it might be time to jump to the red team once Vega and Zen release and DX12 is more of a thing for PC games.

Uhm, and how about reporting how AMD cards are affected by the update?

I’m new to this site, but this is a pretty weak report considering it covered only Nvidia hardware results. What is this?

I guess he means AMD cards run 27% faster.

This is no different than when HL2 launched and DX9 was the new hot API. ATI had fully DX9-compliant hardware and Nvidia did not. No gimping going on, just a lack of hardware support for features in the API. Nvidia runs fine in DX11 mode and AMD benefits from DX12. The game doesn’t look any better either way.

Graphical-feature-wise, everything that can be done in DX11 can be done in DX12. It’s just that, with lower overhead, devs can technically afford to use more demanding settings. But so far I haven’t seen that in DX12 games; in fact, they raise game requirements, such as increased VRAM usage.

What I don’t understand is why multithreading can’t be taken advantage of with DX12. Doesn’t that mean that not all of the CPU cores are being used properly? How is that a GPU problem? Isn’t it a DX12 design flaw?

Multithreading in DX12 doesn’t come out of the box. The API is ready for it, but it is up to developers to use it. If they don’t, DX12 rendering can run on a single core.
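To make that point concrete, here is a minimal Python sketch, assuming a hypothetical engine where "recording a command list" just means building a list of draw calls. Nothing spreads across cores on its own: the developer has to split the work into chunks, record each chunk on its own thread, and then submit the results in a fixed order. In real D3D12 this roughly corresponds to recording several command lists on worker threads and handing them to one queue via `ExecuteCommandLists`; the function names below (`record_command_list`, `submit`, etc.) are made up for illustration, and because of Python’s GIL this shows the structure of the idea, not an actual speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def record_command_list(draws):
    """Hypothetical stand-in: 'record' one chunk of draw calls into its own list."""
    return [f"draw {d}" for d in draws]

def record_single_threaded(draws):
    # What you get if the developer does nothing extra: one thread records everything.
    return [record_command_list(draws)]

def record_multi_threaded(draws, workers=4):
    # The developer explicitly splits the scene into roughly equal chunks.
    chunk = max(1, (len(draws) + workers - 1) // workers)
    chunks = [draws[i:i + chunk] for i in range(0, len(draws), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so submission order stays deterministic.
        return list(pool.map(record_command_list, chunks))

def submit(command_lists):
    # Hypothetical stand-in for one queue submission: lists are executed in order.
    return [cmd for cl in command_lists for cmd in cl]

draws = list(range(10))
assert submit(record_multi_threaded(draws)) == submit(record_single_threaded(draws))
```

The final output is identical either way; the only difference is whether the recording work was split across threads, which is exactly the part the API leaves to the developer.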

On the GPU hardware? Or GPU Drivers?

lol, Doom does. And probably others as well.

No. Multithreading means the same process using more than a single execution thread. Doesn’t matter what the various threads are each being used for.

Until it kills your PCIe slot that is.

For better performance you can buy a performance DLC for $5.99, plus $2 for gore and $3 for more resolution options.

AMD has actual hardware async; Nvidia is being Nvidia and emulating it. The result is pretty clear.

Are you a m-oron?? They used a 980Ti and not a 1080 or 1070 for the test!!

The GTX 1080 is faster than the Fury X in all DX12 games; you AMD tards need a fricking life. Async only gives about 3% better performance, so it’s not worth the hype.

The 1080 is a hair ahead in the new patch, rather than the significant lead it had in the original DX12 bench. The 1080 is ~$400 more expensive than a Fury X.

Mr John Papadopoulos, next time base your tests on newer cards like the 1080 and 1070, not on an older product (980Ti).

The GTX 1080 destroys the Fury X in DX12, especially at higher resolutions. You should update your tests with Nvidia Pascal products; they handle DX12 better than Maxwell.

You do realise that doesn’t prove your point, right? Lol, look at the Fury X: it’s usually worse than a 980Ti and only a little above a 980, but in DX12 it’s so damn close to the 1070/1080. That shows AMD killing it in DX12.

Further, he’s showing the old bench, not the new patch, which further increases reliance on async / resource heaps / multithreading (thereby improving AMD further and gimping NV).

AMD runs like sh*t in DX11 in this game; the GTX 970 is only 2FPS behind the Fury X. NVIDIA already had good performance.

But not 4K or VR proof.

As usual: get async compute, Nvidia, you’re still crap in DX12… lmao

DX12 is the future of gaming. It’s still early for it, but soon games will be made for DX12 and Nvidia will be left behind.. lol

My frame rates are all over the place with the GTX 1070 under DX12; very inconsistent.

Using the same settings as above:

GTX 1070 core 2088MHz, mem 9.5GHz, 4790K @ 4.9GHz

DX11: 70 min (right at the start), but otherwise min 105, average 111, max 117

DX12: min 86, average 98, max 111, but the frame rates are all over the place, with the lowest at 86.
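For a rough sense of scale, the DX11 vs DX12 figures quoted above (leaving out the one-off 70fps dip at the start of the DX11 run) work out to the percentage changes below. This only reuses the commenter’s own numbers; `pct_change` is just a helper name for the illustration.

```python
# GTX 1070 figures from the comment above (fps); the 70fps DX11 start-up dip is excluded.
dx11 = {"min": 105, "avg": 111, "max": 117}
dx12 = {"min": 86, "avg": 98, "max": 111}

def pct_change(old, new):
    """Relative change from old to new, in percent (negative means DX12 is slower)."""
    return (new - old) / old * 100

for key in ("min", "avg", "max"):
    print(f"{key}: {pct_change(dx11[key], dx12[key]):+.1f}%")
# min: -18.1%
# avg: -11.7%
# max: -5.1%
```

So on these numbers, DX12 loses roughly 12% on average, with the biggest hit at the low end.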

Don’t use DX12 in any game for NVIDIA.

Even with good DX12 support, the RX 480 still performs below a GTX 1070 in DX12 games. So the GTX 1070 is more future-proof than an RX 480.

A good DX12 card whose base specs match a mid-tier card from 2 years ago (the GTX 970) is still only a low-mid card, and not very future-proof. There are already games in which an RX 480 cannot max out settings at 1080p.

Yeah man, but the 1070’s performance won’t get better over time; it could even decrease.

The 480, on the other hand, should see consistent improvements of at least 10-20% once DX12 is the norm.

That is 2017, just so you know.

“late to the party..”

Is not a phrase an Nvidia fan should use when talking about anything DX12.

Very odd. Nvidia had gains before, according to PC Gamer’s article (May 26). Now they are losing performance. AMD paying off developers?

No, Creative Assembly is just optimising the DX12 engine to take more and more advantage of async compute / shaders and resource heaps, and hence it relies more on them. The more they do this, the more FPS tanks on NV Pascal / Maxwell / Kepler and the more the driver / game will crash; at the same time, all AMD cards will improve more and more.

Maxwell (NV 9xx) could get away with it, as DX12 / Vulkan were on the horizon but not there yet, but the Pascal (NV 10xx) cards are fatally flawed for a new architecture now that DX12 / Vulkan are becoming the norm … the 2-year wait for Volta (11xx) is going to be crippling for NVIDIA, and we don’t even know if they’ll get it working properly then.

I think AMD is still butthurt about Witcher 3 performance; they’re trying to hit Nvidia harder than ever.

Async Compute does not DX12 make in its entirety.

The propaganda has truly worked wonders on all the clueless.

Isn’t it funny how all these games are completely backed by AMD? Look at CA: they have almost entirely ignored Nvidia, and in fact have screwed over all of us by not using DX12 properly to make much better use of CPU threading, which is actually what matters in a strategy game. You’ll still see your FPS tank in massive battles because your CPU can’t handle it, and those 4 extra cores are being wasted.

What is this CA? And anyway, I haven’t seen proof of Nvidia being ignored, or of game studios actively using features that gimp Nvidia’s performance or make it harder for Nvidia to optimize their drivers.

Nvidia simply does not perform under DX12, this has been the norm right from the start.

They just started doing that, but you need to remember that just because it’s open source doesn’t mean that it’s open.

Read the license, it contains this:

“Object Code: Developer agrees not to disassemble, decompile or reverse engineer the Object Code versions of any of the Materials. Developer acknowledges that certain of the Materials provided in Object Code version may contain third party components that may be subject to restrictions, and expressly agrees not to attempt to modify or distribute such Materials without first receiving consent from NVIDIA.”

Moreover it still requires a license from Nvidia.

When DX12 is mainstream, there will be 200-dollar cards that are at least twice as fast as the RX 480. Buy stuff you want to use today or in the next 12 months, not in 2+ years. And who cares about the RX 480? It’s a 1080p card, something advanced users left behind over 12 years ago. (I bought my 30-inch 2560×1600 in 2004!, moved to 4K in 2013 and 5K in 2015. Console peasants use AMD)

The killing zone has just started again for all GTXs.

DX12 pretty much requires a rethink of your rendering pipeline and code. Clearly it can run worse on DX12 even with its thinner layer, because either 1. the HW is deficient in certain features and SW (the driver) needs to take over, or 2. CA’s DX12 implementation is terrible … You can’t just assume the DX12 API (1 yr old) will outperform the DX11 API (which has had 11+ yrs of evolution). It’s a new API, and devs are still coming to grips with it and how to code for it.

What Nvidia calls Async Compute is pre-emption, later improved with dynamic load balancing.

What AMD calls Async Compute is Multi-Engine (or whatever it’s called; it’s like Hyper-Threading in a CPU).

Nvidia cards only support pre-emption, but AMD cards support both.
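The distinction being drawn here can be illustrated with a deliberately simplified toy model. It is an assumption-laden sketch, not a description of how either vendor’s hardware actually schedules work. In the "pre-emption" style, graphics and compute take turns on the whole GPU, so their times add up. In the "concurrent" style, compute work fills the shader units that the graphics pass leaves idle, and only the leftover runs afterwards. The function names and the `graphics_utilization` parameter (the fraction of the GPU the graphics pass keeps busy) are hypothetical.

```python
def serialized_time(graphics_ms, compute_ms):
    """Toy pre-emption model: graphics and compute take turns on the whole GPU."""
    return graphics_ms + compute_ms

def overlapped_time(graphics_ms, compute_ms, graphics_utilization):
    """Toy concurrent model: compute fills idle units during the graphics pass.

    graphics_utilization is the fraction (0..1) of the GPU the graphics pass
    keeps busy; the rest is idle capacity that compute work can soak up.
    """
    idle_capacity_ms = (1.0 - graphics_utilization) * graphics_ms
    # Compute work that doesn't fit into the idle gaps runs after graphics finishes.
    leftover_ms = max(0.0, compute_ms - idle_capacity_ms)
    return graphics_ms + leftover_ms

# Illustrative inputs only: a 10 ms graphics pass keeping 70% of the GPU busy,
# plus 2 ms of compute work.
print(serialized_time(10, 2))       # 12
print(overlapped_time(10, 2, 0.7))  # 10.0
```

The gain in this model comes entirely from idle capacity: if the graphics pass already keeps the GPU fully busy (`graphics_utilization=1.0`), both models give the same total time.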

DX12 has async compute, but the question is which “Async Compute” the game supports.

AMD has been using the term “Async Compute” since 2011.

Nvidia failing so hard.

I notice there is a conspiracy to make the 290X disappear. The 780 Ti and 280X show up a lot, but the 290X rarely does.